核心概念

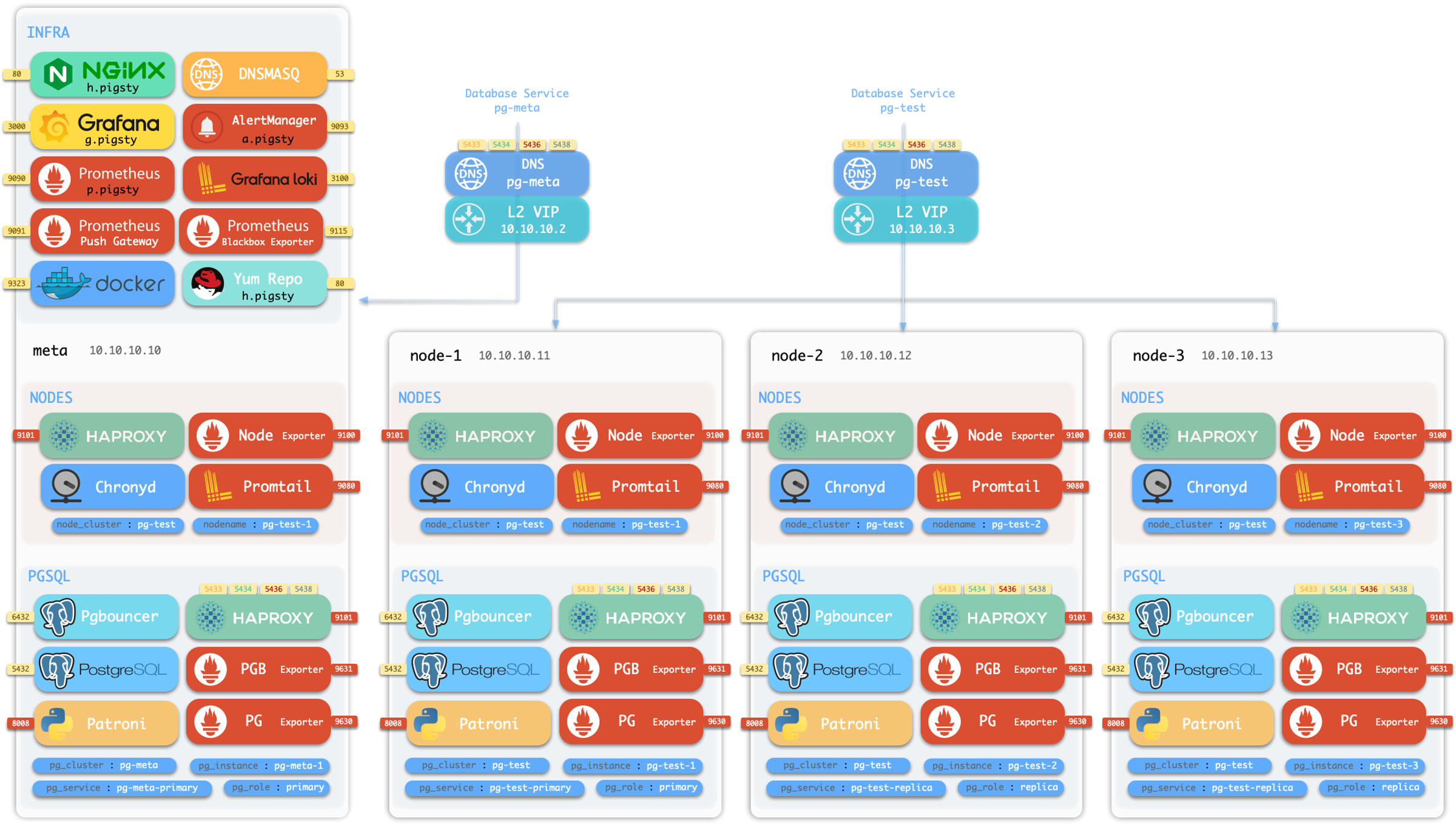

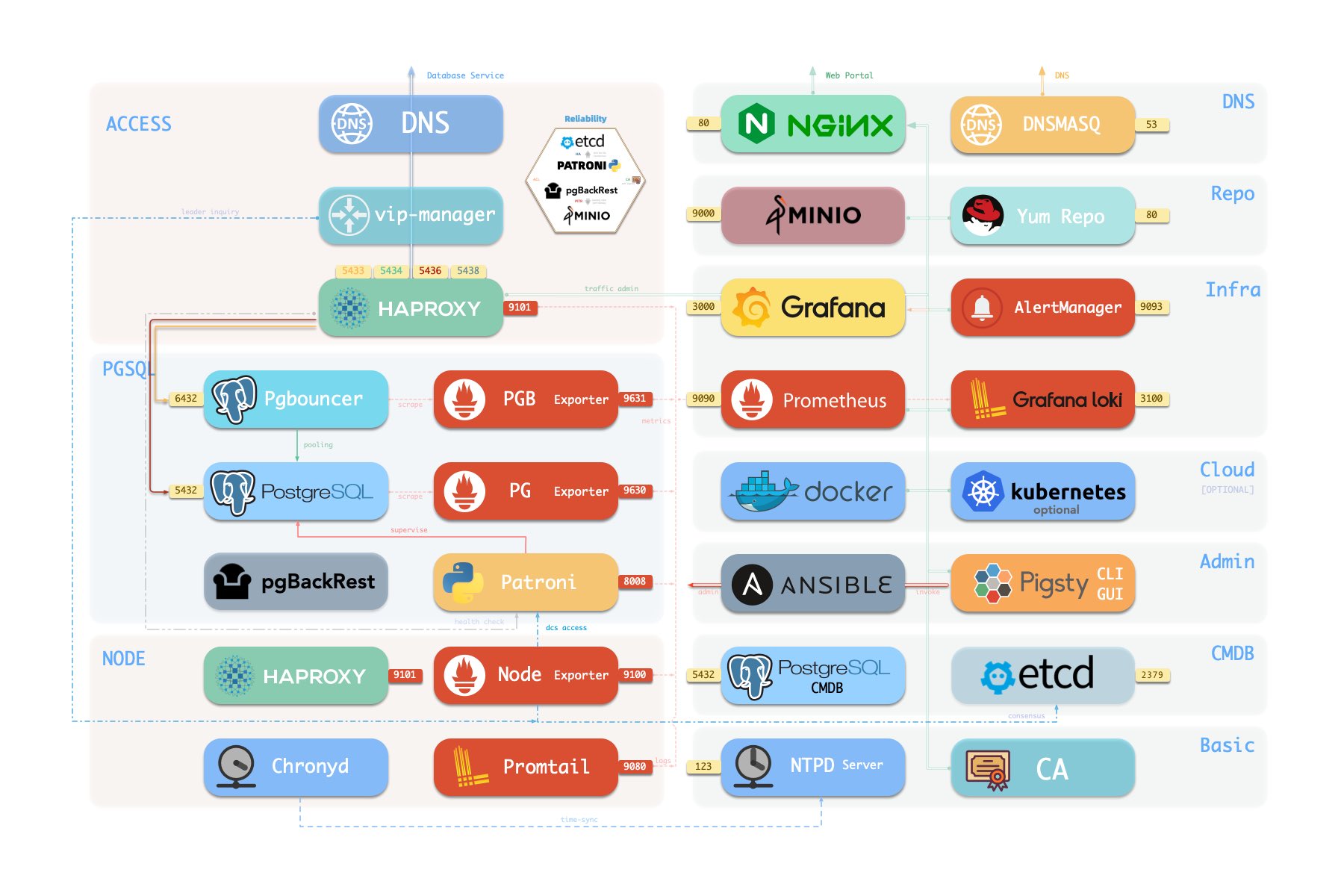

1 - 系统架构

Pigsty 使用 模块化架构 与 声明式接口。

- Pigsty 使用配置清单描述整套部署环境,并通过 ansible 剧本实现。

- Pigsty 在可以在任意节点上运行,无论是物理裸机还是虚拟机,只要操作系统兼容即可。

- Pigsty 的行为由配置参数控制,具有幂等性的剧本 会将节点调整到配置所描述的状态。

- Pigsty 采用模块化设计,可自由组合以适应不同场景。使用剧本将模块安装到配置指定的节点上。

模块

Pigsty 采用模块化设计,有六个主要的默认模块:PGSQL、INFRA、NODE、ETCD、REDIS 和 MINIO。

PGSQL:由 Patroni、Pgbouncer、HAproxy、PgBackrest 等驱动的自治高可用 Postgres 集群。INFRA:本地软件仓库、Prometheus、Grafana、Loki、AlertManager、PushGateway、Blackbox Exporter…NODE:调整节点到所需状态、名称、时区、NTP、ssh、sudo、haproxy、docker、promtail、keepalivedETCD:分布式键值存储,用作高可用 Postgres 集群的 DCS:共识选主/配置管理/服务发现。REDIS:Redis 服务器,支持独立主从、哨兵、集群模式,并带有完整的监控支持。MINIO:与 S3 兼容的简单对象存储服务器,可作为 PG数据库备份的可选目的地。

你可以声明式地自由组合它们。如果你想要主机监控,在基础设施节点上安装INFRA模块,并在纳管节点上安装 NODE 模块就足够了。

ETCD 和 PGSQL 模块用于搭建高可用 PG 集群,将模块安装在多个节点上,可以自动形成一个高可用的数据库集群。

您可以复用 Pigsty 基础架构并开发您自己的模块,REDIS 和 MINIO 可以作为一个样例。后续还会有更多的模块加入,例如对 Mongo 与 MySQL 的初步支持已经提上了日程。

请注意,所有模块都强依赖 NODE 模块:在 Pigsty 中节点必须先安装 NODE 模块,被 Pigsty 纳管后方可部署其他模块。

当节点(默认)使用本地软件源进行安装时,NODE 模块对 INFRA 模块有弱依赖。因此安装 INFRA 模块的管理节点/基础设施节点会在 [install.yml] 剧本中完成 Bootstrap 过程,解决循环依赖。

单机安装

默认情况下,Pigsty 将在单个 节点 (物理机/虚拟机) 上安装。install.yml 剧本将在当前节点上安装 INFRA、ETCD、PGSQL 和可选的 MINIO 模块,

这将为你提供一个功能完备的可观测性技术栈全家桶 (Prometheus、Grafana、Loki、AlertManager、PushGateway、BlackboxExporter 等) ,以及一个内置的 PostgreSQL 单机实例作为 CMDB,也可以开箱即用。 (集群名 pg-meta,库名为 meta)。

这个节点现在会有完整的自我监控系统、可视化工具集,以及一个自动配置有 PITR 的 Postgres 数据库(HA不可用,因为你只有一个节点)。你可以使用此节点作为开发箱、测试、运行演示以及进行数据可视化和分析。或者,还可以把这个节点当作管理节点,部署纳管更多的节点!

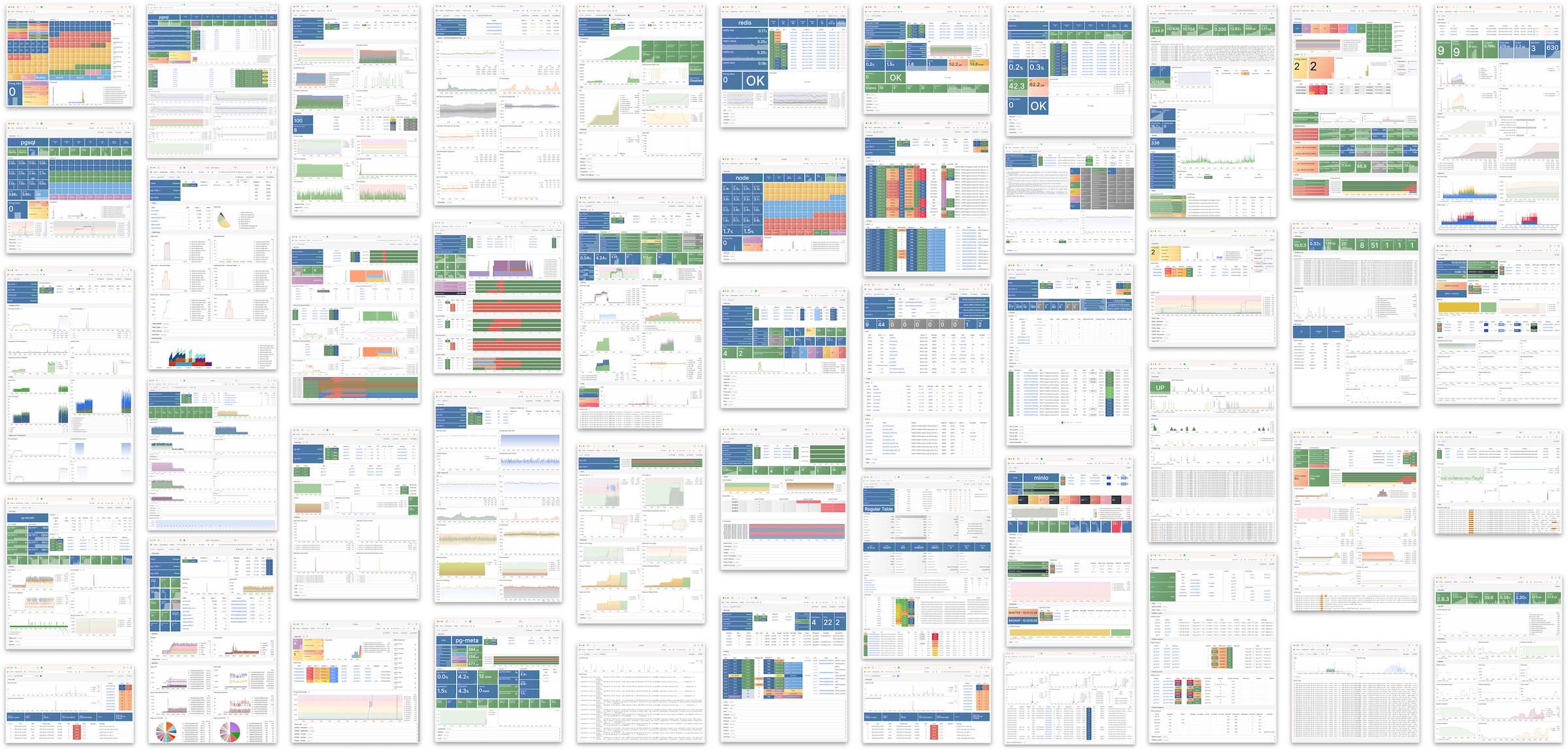

监控

安装的 单机元节点 可用作管理节点和监控中心,以将更多节点和数据库服务器置于其监视和控制之下。

Pigsty 的监控系统可以独立使用,如果你想安装 Prometheus / Grafana 可观测性全家桶,Pigsty 为你提供了最佳实践! 它为 主机节点 和 PostgreSQL数据库 提供了丰富的仪表盘。 无论这些节点或 PostgreSQL 服务器是否由 Pigsty 管理,只需简单的配置,你就可以立即拥有生产级的监控和告警系统,并将现有的主机与PostgreSQL纳入监管。

高可用PG集群

Pigsty 帮助您在任何地方 拥有 您自己的生产级高可用 PostgreSQL RDS 服务。

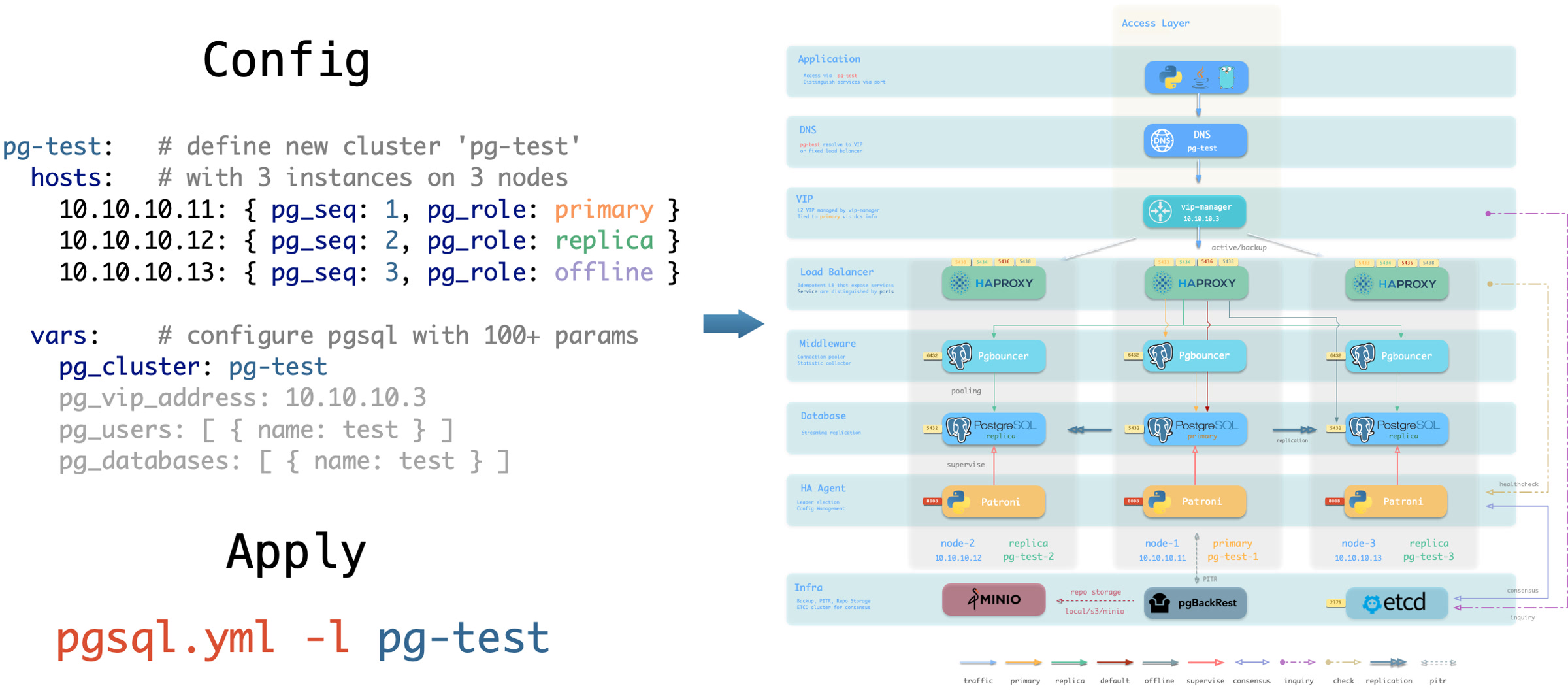

要创建这样一个高可用 PostgreSQL 集群/RDS服务,你只需用简短的配置来描述它,并运行剧本来创建即可:

pg-test:

hosts:

10.10.10.11: { pg_seq: 1, pg_role: primary }

10.10.10.12: { pg_seq: 2, pg_role: replica }

10.10.10.13: { pg_seq: 3, pg_role: replica }

vars: { pg_cluster: pg-test }

$ bin/pgsql-add pg-test # 初始化集群 'pg-test'

不到10分钟,您将拥有一个服务接入,监控,备份PITR,高可用配置齐全的 PostgreSQL 数据库集群。

硬件故障由 patroni、etcd 和 haproxy 提供的自愈高可用架构来兜底,在主库故障的情况下,默认会在 30 秒内执行自动故障转移(Failover)。 客户端无需修改配置重启应用:Haproxy 利用 patroni 健康检查进行流量分发,读写请求会自动分发到新的集群主库中,并避免脑裂的问题。 这一过程十分丝滑,例如在从库故障,或主动切换(switchover)的情况下,客户端只有一瞬间的当前查询闪断,

软件故障、人为错误和 数据中心级灾难由 pgbackrest 和可选的 MinIO 集群来兜底。这为您提供了本地/云端的 PITR 能力,并在数据中心失效的情况下提供了跨地理区域复制,与异地容灾功能。

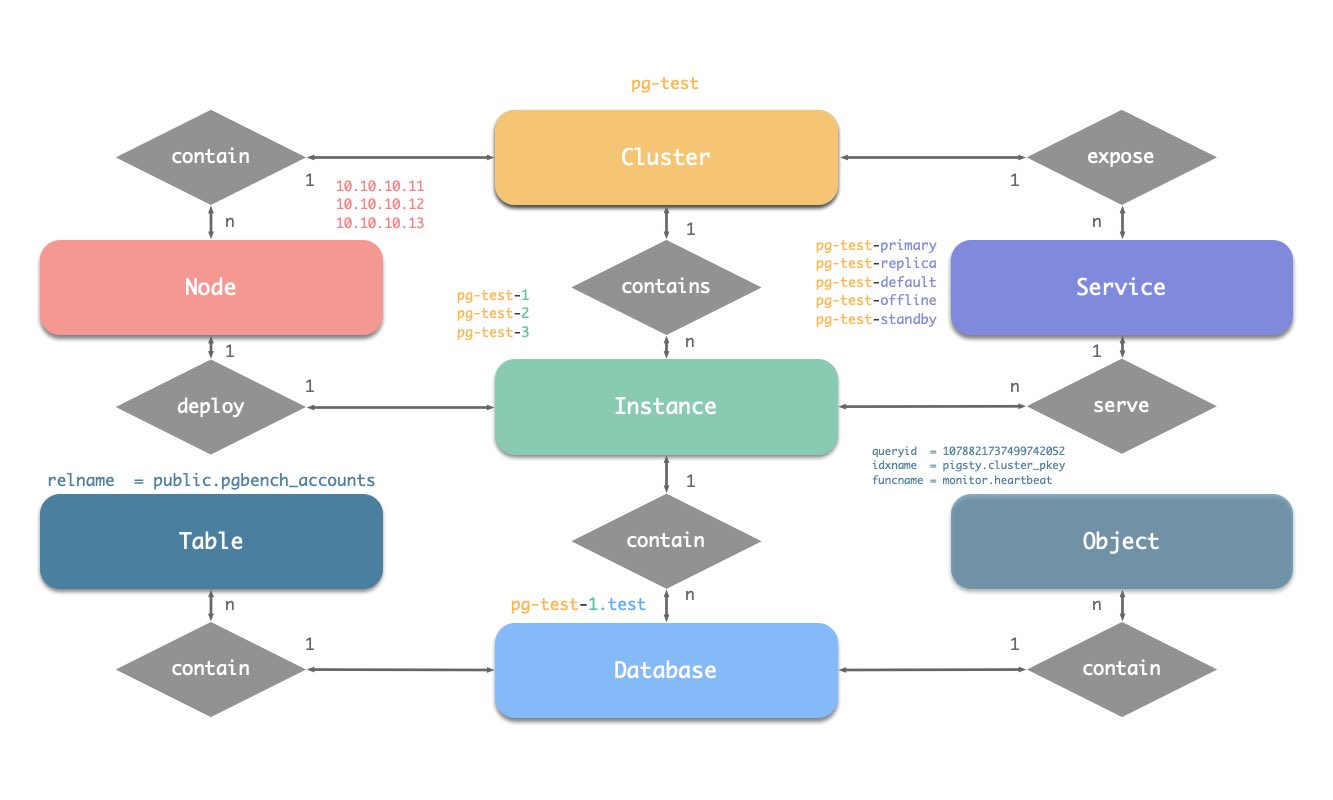

2 - 集群模型

在 Pigsty 中,功能模块是以 “集群” 的方式组织起来的。每一个集群都是一个 Ansible 分组,包含有若干节点资源,定义有实例

PGSQL 模块总览:关键概念与架构细节

PGSQL模块在生产环境中以集群的形式组织,这些集群是由一组由主-备关联的数据库实例组成的逻辑实体。 每个数据库集群都是一个自治的业务服务单元,由至少一个 数据库(主库)实例 组成。

实体概念图

让我们从ER图开始。在Pigsty的PGSQL模块中,有四种核心实体:

- 集群(Cluster):自治的PostgreSQL业务单元,用作其他实体的顶级命名空间。

- 服务(Service):集群能力的命名抽象,路由流量,并使用节点端口暴露postgres服务。

- 实例(Instance):一个在单个节点上的运行进程和数据库文件组成的单一postgres服务器。

- 节点(Node):硬件资源的抽象,可以是裸金属、虚拟机或甚至是k8s pods。

命名约定

- 集群名应为有效的 DNS 域名,不包含任何点号,正则表达式为:

[a-zA-Z0-9-]+ - 服务名应以集群名为前缀,并以特定单词作为后缀:

primary、replica、offline、delayed,中间用-连接。 - 实例名以集群名为前缀,以正整数实例号为后缀,用

-连接,例如${cluster}-${seq}。 - 节点由其首要内网IP地址标识,因为PGSQL模块中数据库与主机1:1部署,所以主机名通常与实例名相同。

身份参数

Pigsty使用身份参数来识别实体:PG_ID。

除了节点IP地址,pg_cluster、pg_role和pg_seq三个参数是定义postgres集群所必需的最小参数集。

以沙箱环境测试集群pg-test为例:

pg-test:

hosts:

10.10.10.11: { pg_seq: 1, pg_role: primary }

10.10.10.12: { pg_seq: 2, pg_role: replica }

10.10.10.13: { pg_seq: 3, pg_role: replica }

vars:

pg_cluster: pg-test

集群的三个成员如下所示:

| 集群 | 序号 | 角色 | 主机 / IP | 实例 | 服务 | 节点名 |

|---|---|---|---|---|---|---|

pg-test |

1 |

primary |

10.10.10.11 |

pg-test-1 |

pg-test-primary |

pg-test-1 |

pg-test |

2 |

replica |

10.10.10.12 |

pg-test-2 |

pg-test-replica |

pg-test-2 |

pg-test |

3 |

replica |

10.10.10.13 |

pg-test-3 |

pg-test-replica |

pg-test-3 |

这里包含了:

- 一个集群:该集群命名为

pg-test。 - 两种角色:

primary和replica。 - 三个实例:集群由三个实例组成:

pg-test-1、pg-test-2、pg-test-3。 - 三个节点:集群部署在三个节点上:

10.10.10.11、10.10.10.12和10.10.10.13。 - 四个服务:

- 读写服务:

pg-test-primary - 只读服务:

pg-test-replica - 直接连接的管理服务:

pg-test-default - 离线读服务:

pg-test-offline

- 读写服务:

在监控系统(Prometheus/Grafana/Loki)中,相应的指标将会使用这些身份参数进行标记:

pg_up{cls="pg-meta", ins="pg-meta-1", ip="10.10.10.10", job="pgsql"}

pg_up{cls="pg-test", ins="pg-test-1", ip="10.10.10.11", job="pgsql"}

pg_up{cls="pg-test", ins="pg-test-2", ip="10.10.10.12", job="pgsql"}

pg_up{cls="pg-test", ins="pg-test-3", ip="10.10.10.13", job="pgsql"}

3 - 监控系统

4 - 本地 CA

Pigsty 部署默认启用了一些安全最佳实践:使用 SSL 加密网络流量,使用 HTTPS 加密 Web 界面。

为了实现这一功能,Pigsty 内置了本地自签名的 CA ,用于签发 SSL 证书,加密网络通信流量。

在默认情况下,SSL 与 HTTPS 是启用,但不强制使用的。对于有着较高安全要求的环境,您可以强制使用 SSL 与 HTTPS。

本地CA

Pigsty 默认会在初始化时,在 ADMIN节点 本机 Pigsty 源码目录(~/pigsty)中生成一个自签名的 CA,当您需要使用 SSL,HTTPS,数字签名,签发数据库客户端证书,高级安全特性时,可以使用此 CA。

因此,每一套 Pigsty 部署使用的 CA 都是唯一的,不同的 Pigsty 部署之间的 CA 是不相互信任的。

本地 CA 由两个文件组成,默认放置于 files/pki/ca 目录中:

ca.crt:自签名的 CA 根证书,应当分发安装至到所有纳管节点,用于证书验证。ca.key:CA 私钥,用于签发证书,验证 CA 身份,应当妥善保管,避免泄漏!

请保护好CA私钥文件

请妥善保管 CA 私钥文件,不要遗失,不要泄漏。我们建议您在完成 Pigsty 安装后,加密备份此文件。使用现有CA

如果您本身已经有 CA 公私钥基础设施,Pigsty 也可以配置为使用现有 CA 。

将您的 CA 公钥与私钥文件放置于 files/pki/ca 目录中即可。

files/pki/ca/ca.key # 核心的 CA 私钥文件,必须存在,如果不存在,默认会重新随机生成一个

files/pki/ca/ca.crt # 如果没有证书文件,Pigsty会自动重新从 CA 私钥生成新的根证书文件

当 Pigsty 执行 install.yml 与 infra.yml 剧本进行安装时,如果发现 files/pki/ca 目录中的 ca.key 私钥文件存在,则会使用已有的 CA 。ca.crt 文件可以从 ca.key 私钥文件生成,所以如果没有证书文件,Pigsty 会自动重新从 CA 私钥生成新的根证书文件。

信任CA

在 Pigsty 安装过程中,ca.crt 会在 node.yml 剧本的 node_ca 任务中,被分发至所有节点上的 /etc/pki/ca.crt 路径下。

EL系操作系统与 Debian系操作系统默认信任的 CA 根证书路径不同,因此分发的路径与更新的方式也不同。

rm -rf /etc/pki/ca-trust/source/anchors/ca.crt

ln -s /etc/pki/ca.crt /etc/pki/ca-trust/source/anchors/ca.crt

/bin/update-ca-trustrm -rf /usr/local/share/ca-certificates/ca.crt

ln -s /etc/pki/ca.crt /usr/local/share/ca-certificates/ca.crt

/usr/sbin/update-ca-certificatesPigsty 默认会为基础设施节点上的 Web 系统使用的域名签发 HTTPS 证书,您可以 HTTPS 访问 Pigsty 的 Web 系统。

如果您希望在客户端电脑上浏览器访问时不要弹出“不受信任的 CA 证书”信息,可以将 ca.crt 分发至客户端电脑的信任证书目录中。

您可以双击 ca.crt 文件将其加入系统钥匙串,例如在 MacOS 系统中,需要打开“钥匙串访问” 搜索 pigsty-ca 然后“信任”此根证书

查看证书内容

使用以下命令,可以查阅 Pigsty CA 证书的内容

openssl x509 -text -in /etc/pki/ca.crt

本地 CA 根证书内容样例

Certificate:

Data:

Version: 3 (0x2)

Serial Number:

50:29:e3:60:96:93:f4:85:14:fe:44:81:73:b5:e1:09:2a:a8:5c:0a

Signature Algorithm: sha256WithRSAEncryption

Issuer: O=pigsty, OU=ca, CN=pigsty-ca

Validity

Not Before: Feb 7 00:56:27 2023 GMT

Not After : Jan 14 00:56:27 2123 GMT

Subject: O=pigsty, OU=ca, CN=pigsty-ca

Subject Public Key Info:

Public Key Algorithm: rsaEncryption

Public-Key: (4096 bit)

Modulus:

00:c1:41:74:4f:28:c3:3c:2b:13:a2:37:05:87:31:

....

e6:bd:69:a5:5b:e3:b4:c0:65:09:6e:84:14:e9:eb:

90:f7:61

Exponent: 65537 (0x10001)

X509v3 extensions:

X509v3 Subject Alternative Name:

DNS:pigsty-ca

X509v3 Key Usage:

Digital Signature, Certificate Sign, CRL Sign

X509v3 Basic Constraints: critical

CA:TRUE, pathlen:1

X509v3 Subject Key Identifier:

C5:F6:23:CE:BA:F3:96:F6:4B:48:A5:B1:CD:D4:FA:2B:BD:6F:A6:9C

Signature Algorithm: sha256WithRSAEncryption

Signature Value:

89:9d:21:35:59:6b:2c:9b:c7:6d:26:5b:a9:49:80:93:81:18:

....

9e:dd:87:88:0d:c4:29:9e

-----BEGIN CERTIFICATE-----

...

cXyWAYcvfPae3YeIDcQpng==

-----END CERTIFICATE-----

签发证书

如果您希望通过客户端证书认证,那么可以使用本地 CA 与 cert.yml 剧本手工签发PostgreSQL 客户端证书。

将证书的 CN 字段设置为数据库用户名即可:

./cert.yml -e cn=dbuser_dba

./cert.yml -e cn=dbuser_monitor

签发的证书会默认生成在 files/pki/misc/<cn>.{key,crt} 路径下。

5 - 基础设施即代码

基础设施即代码,Infra as Code,简称 IaC。

Pigsty 遵循 IaC 与 GitOPS 的理念:Pigsty 的部署由声明式的 配置清单 描述,并通过 幂等剧本 来实现。

用户用声明的方式通过 配置参数 来描述自己期望的状态,而剧本则以幂等的方式调整目标节点以达到这个状态。这类似于 Kubernetes 的 CRD & Operator,Pigsty 在裸机和虚拟机上实现了同样的功能。

声明模块

以下面的默认配置片段为例,这段配置描述了一个节点 10.10.10.10,其上安装了 INFRA、NODE、ETCD 和 PGSQL 模块。

# 监控、告警、DNS、NTP 等基础设施集群...

infra: { hosts: { 10.10.10.10: { infra_seq: 1 } } }

# minio 集群,兼容 s3 的对象存储

minio: { hosts: { 10.10.10.10: { minio_seq: 1 } }, vars: { minio_cluster: minio } }

# etcd 集群,用作 PostgreSQL 高可用所需的 DCS

etcd: { hosts: { 10.10.10.10: { etcd_seq: 1 } }, vars: { etcd_cluster: etcd } }

# PGSQL 示例集群: pg-meta

pg-meta: { hosts: { 10.10.10.10: { pg_seq: 1, pg_role: primary }, vars: { pg_cluster: pg-meta } }

要真正安装这些模块,执行以下剧本:

./infra.yml -l 10.10.10.10 # 在节点 10.10.10.10 上初始化 infra 模块

./etcd.yml -l 10.10.10.10 # 在节点 10.10.10.10 上初始化 etcd 模块

./minio.yml -l 10.10.10.10 # 在节点 10.10.10.10 上初始化 minio 模块

./pgsql.yml -l 10.10.10.10 # 在节点 10.10.10.10 上初始化 pgsql 模块

声明集群

您可以声明 PostgreSQL 数据库集群,在多个节点上安装 PGSQL 模块,并使其成为一个服务单元:

例如,要在以下三个已被 Pigsty 纳管的节点上,部署一个使用流复制组建的三节点高可用 PostgreSQL 集群,

您可以在配置文件 pigsty.yml 的 all.children 中添加以下定义:

pg-test:

hosts:

10.10.10.11: { pg_seq: 1, pg_role: primary }

10.10.10.12: { pg_seq: 2, pg_role: replica }

10.10.10.13: { pg_seq: 3, pg_role: offline }

vars: { pg_cluster: pg-test }

定义完后,可以使用 剧本 将集群创建:

bin/pgsql-add pg-test # 创建 pg-test 集群

你可以使用不同的的实例角色,例如 主库(primary),从库(replica),离线从库(offline),延迟从库(delayed),同步备库(sync standby); 以及不同的集群:例如 备份集群(Standby Cluster),Citus集群,甚至是 Redis / MinIO / Etcd 集群

定制集群内容

您不仅可以使用声明式的方式定义集群,还可以定义集群中的数据库、用户、服务、HBA 规则等内容,例如,下面的配置文件对默认的 pg-meta 单节点数据库集群的内容进行了深度定制:

包括:声明了六个业务数据库与七个业务用户,添加了一个额外的 standby 服务(同步备库,提供无复制延迟的读取能力),定义了一些额外的 pg_hba 规则,一个指向集群主库的 L2 VIP 地址,与自定义的备份策略。

pg-meta:

hosts: { 10.10.10.10: { pg_seq: 1, pg_role: primary , pg_offline_query: true } }

vars:

pg_cluster: pg-meta

pg_databases: # define business databases on this cluster, array of database definition

- name: meta # REQUIRED, `name` is the only mandatory field of a database definition

baseline: cmdb.sql # optional, database sql baseline path, (relative path among ansible search path, e.g files/)

pgbouncer: true # optional, add this database to pgbouncer database list? true by default

schemas: [pigsty] # optional, additional schemas to be created, array of schema names

extensions: # optional, additional extensions to be installed: array of `{name[,schema]}`

- { name: postgis , schema: public }

- { name: timescaledb }

comment: pigsty meta database # optional, comment string for this database

owner: postgres # optional, database owner, postgres by default

template: template1 # optional, which template to use, template1 by default

encoding: UTF8 # optional, database encoding, UTF8 by default. (MUST same as template database)

locale: C # optional, database locale, C by default. (MUST same as template database)

lc_collate: C # optional, database collate, C by default. (MUST same as template database)

lc_ctype: C # optional, database ctype, C by default. (MUST same as template database)

tablespace: pg_default # optional, default tablespace, 'pg_default' by default.

allowconn: true # optional, allow connection, true by default. false will disable connect at all

revokeconn: false # optional, revoke public connection privilege. false by default. (leave connect with grant option to owner)

register_datasource: true # optional, register this database to grafana datasources? true by default

connlimit: -1 # optional, database connection limit, default -1 disable limit

pool_auth_user: dbuser_meta # optional, all connection to this pgbouncer database will be authenticated by this user

pool_mode: transaction # optional, pgbouncer pool mode at database level, default transaction

pool_size: 64 # optional, pgbouncer pool size at database level, default 64

pool_size_reserve: 32 # optional, pgbouncer pool size reserve at database level, default 32

pool_size_min: 0 # optional, pgbouncer pool size min at database level, default 0

pool_max_db_conn: 100 # optional, max database connections at database level, default 100

- { name: grafana ,owner: dbuser_grafana ,revokeconn: true ,comment: grafana primary database }

- { name: bytebase ,owner: dbuser_bytebase ,revokeconn: true ,comment: bytebase primary database }

- { name: kong ,owner: dbuser_kong ,revokeconn: true ,comment: kong the api gateway database }

- { name: gitea ,owner: dbuser_gitea ,revokeconn: true ,comment: gitea meta database }

- { name: wiki ,owner: dbuser_wiki ,revokeconn: true ,comment: wiki meta database }

pg_users: # define business users/roles on this cluster, array of user definition

- name: dbuser_meta # REQUIRED, `name` is the only mandatory field of a user definition

password: DBUser.Meta # optional, password, can be a scram-sha-256 hash string or plain text

login: true # optional, can log in, true by default (new biz ROLE should be false)

superuser: false # optional, is superuser? false by default

createdb: false # optional, can create database? false by default

createrole: false # optional, can create role? false by default

inherit: true # optional, can this role use inherited privileges? true by default

replication: false # optional, can this role do replication? false by default

bypassrls: false # optional, can this role bypass row level security? false by default

pgbouncer: true # optional, add this user to pgbouncer user-list? false by default (production user should be true explicitly)

connlimit: -1 # optional, user connection limit, default -1 disable limit

expire_in: 3650 # optional, now + n days when this role is expired (OVERWRITE expire_at)

expire_at: '2030-12-31' # optional, YYYY-MM-DD 'timestamp' when this role is expired (OVERWRITTEN by expire_in)

comment: pigsty admin user # optional, comment string for this user/role

roles: [dbrole_admin] # optional, belonged roles. default roles are: dbrole_{admin,readonly,readwrite,offline}

parameters: {} # optional, role level parameters with `ALTER ROLE SET`

pool_mode: transaction # optional, pgbouncer pool mode at user level, transaction by default

pool_connlimit: -1 # optional, max database connections at user level, default -1 disable limit

- {name: dbuser_view ,password: DBUser.Viewer ,pgbouncer: true ,roles: [dbrole_readonly], comment: read-only viewer for meta database}

- {name: dbuser_grafana ,password: DBUser.Grafana ,pgbouncer: true ,roles: [dbrole_admin] ,comment: admin user for grafana database }

- {name: dbuser_bytebase ,password: DBUser.Bytebase ,pgbouncer: true ,roles: [dbrole_admin] ,comment: admin user for bytebase database }

- {name: dbuser_kong ,password: DBUser.Kong ,pgbouncer: true ,roles: [dbrole_admin] ,comment: admin user for kong api gateway }

- {name: dbuser_gitea ,password: DBUser.Gitea ,pgbouncer: true ,roles: [dbrole_admin] ,comment: admin user for gitea service }

- {name: dbuser_wiki ,password: DBUser.Wiki ,pgbouncer: true ,roles: [dbrole_admin] ,comment: admin user for wiki.js service }

pg_services: # extra services in addition to pg_default_services, array of service definition

# standby service will route {ip|name}:5435 to sync replica's pgbouncer (5435->6432 standby)

- name: standby # required, service name, the actual svc name will be prefixed with `pg_cluster`, e.g: pg-meta-standby

port: 5435 # required, service exposed port (work as kubernetes service node port mode)

ip: "*" # optional, service bind ip address, `*` for all ip by default

selector: "[]" # required, service member selector, use JMESPath to filter inventory

dest: default # optional, destination port, default|postgres|pgbouncer|<port_number>, 'default' by default

check: /sync # optional, health check url path, / by default

backup: "[? pg_role == `primary`]" # backup server selector

maxconn: 3000 # optional, max allowed front-end connection

balance: roundrobin # optional, haproxy load balance algorithm (roundrobin by default, other: leastconn)

options: 'inter 3s fastinter 1s downinter 5s rise 3 fall 3 on-marked-down shutdown-sessions slowstart 30s maxconn 3000 maxqueue 128 weight 100'

pg_hba_rules:

- {user: dbuser_view , db: all ,addr: infra ,auth: pwd ,title: 'allow grafana dashboard access cmdb from infra nodes'}

pg_vip_enabled: true

pg_vip_address: 10.10.10.2/24

pg_vip_interface: eth1

node_crontab: # make a full backup 1 am everyday

- '00 01 * * * postgres /pg/bin/pg-backup full'

声明访问控制

您还可以通过声明式的配置,深度定制 Pigsty 的访问控制能力。例如下面的配置文件对 pg-meta 集群进行了深度安全定制:

使用三节点核心集群模板:crit.yml,确保数据一致性有限,故障切换数据零丢失。

启用了 L2 VIP,并将数据库与连接池的监听地址限制在了 本地环回IP + 内网IP + VIP 三个特定地址。

模板强制启用了 Patroni 的 SSL API,与 Pgbouncer 的 SSL,并在 HBA 规则中强制要求使用 SSL 访问数据库集群。

同时还在 pg_libs 中启用了 $libdir/passwordcheck 扩展,来强制执行密码强度安全策略。

最后,还单独声明了一个 pg-meta-delay 集群,作为 pg-meta 在一个小时前的延迟镜像从库,用于紧急数据误删恢复。

pg-meta: # 3 instance postgres cluster `pg-meta`

hosts:

10.10.10.10: { pg_seq: 1, pg_role: primary }

10.10.10.11: { pg_seq: 2, pg_role: replica }

10.10.10.12: { pg_seq: 3, pg_role: replica , pg_offline_query: true }

vars:

pg_cluster: pg-meta

pg_conf: crit.yml

pg_users:

- { name: dbuser_meta , password: DBUser.Meta , pgbouncer: true , roles: [ dbrole_admin ] , comment: pigsty admin user }

- { name: dbuser_view , password: DBUser.Viewer , pgbouncer: true , roles: [ dbrole_readonly ] , comment: read-only viewer for meta database }

pg_databases:

- {name: meta ,baseline: cmdb.sql ,comment: pigsty meta database ,schemas: [pigsty] ,extensions: [{name: postgis, schema: public}, {name: timescaledb}]}

pg_default_service_dest: postgres

pg_services:

- { name: standby ,src_ip: "*" ,port: 5435 , dest: default ,selector: "[]" , backup: "[? pg_role == `primary`]" }

pg_vip_enabled: true

pg_vip_address: 10.10.10.2/24

pg_vip_interface: eth1

pg_listen: '${ip},${vip},${lo}'

patroni_ssl_enabled: true

pgbouncer_sslmode: require

pgbackrest_method: minio

pg_libs: 'timescaledb, $libdir/passwordcheck, pg_stat_statements, auto_explain' # add passwordcheck extension to enforce strong password

pg_default_roles: # default roles and users in postgres cluster

- { name: dbrole_readonly ,login: false ,comment: role for global read-only access }

- { name: dbrole_offline ,login: false ,comment: role for restricted read-only access }

- { name: dbrole_readwrite ,login: false ,roles: [dbrole_readonly] ,comment: role for global read-write access }

- { name: dbrole_admin ,login: false ,roles: [pg_monitor, dbrole_readwrite] ,comment: role for object creation }

- { name: postgres ,superuser: true ,expire_in: 7300 ,comment: system superuser }

- { name: replicator ,replication: true ,expire_in: 7300 ,roles: [pg_monitor, dbrole_readonly] ,comment: system replicator }

- { name: dbuser_dba ,superuser: true ,expire_in: 7300 ,roles: [dbrole_admin] ,pgbouncer: true ,pool_mode: session, pool_connlimit: 16 , comment: pgsql admin user }

- { name: dbuser_monitor ,roles: [pg_monitor] ,expire_in: 7300 ,pgbouncer: true ,parameters: {log_min_duration_statement: 1000 } ,pool_mode: session ,pool_connlimit: 8 ,comment: pgsql monitor user }

pg_default_hba_rules: # postgres host-based auth rules by default

- {user: '${dbsu}' ,db: all ,addr: local ,auth: ident ,title: 'dbsu access via local os user ident' }

- {user: '${dbsu}' ,db: replication ,addr: local ,auth: ident ,title: 'dbsu replication from local os ident' }

- {user: '${repl}' ,db: replication ,addr: localhost ,auth: ssl ,title: 'replicator replication from localhost'}

- {user: '${repl}' ,db: replication ,addr: intra ,auth: ssl ,title: 'replicator replication from intranet' }

- {user: '${repl}' ,db: postgres ,addr: intra ,auth: ssl ,title: 'replicator postgres db from intranet' }

- {user: '${monitor}' ,db: all ,addr: localhost ,auth: pwd ,title: 'monitor from localhost with password' }

- {user: '${monitor}' ,db: all ,addr: infra ,auth: ssl ,title: 'monitor from infra host with password'}

- {user: '${admin}' ,db: all ,addr: infra ,auth: ssl ,title: 'admin @ infra nodes with pwd & ssl' }

- {user: '${admin}' ,db: all ,addr: world ,auth: cert ,title: 'admin @ everywhere with ssl & cert' }

- {user: '+dbrole_readonly',db: all ,addr: localhost ,auth: ssl ,title: 'pgbouncer read/write via local socket'}

- {user: '+dbrole_readonly',db: all ,addr: intra ,auth: ssl ,title: 'read/write biz user via password' }

- {user: '+dbrole_offline' ,db: all ,addr: intra ,auth: ssl ,title: 'allow etl offline tasks from intranet'}

pgb_default_hba_rules: # pgbouncer host-based authentication rules

- {user: '${dbsu}' ,db: pgbouncer ,addr: local ,auth: peer ,title: 'dbsu local admin access with os ident'}

- {user: 'all' ,db: all ,addr: localhost ,auth: pwd ,title: 'allow all user local access with pwd' }

- {user: '${monitor}' ,db: pgbouncer ,addr: intra ,auth: ssl ,title: 'monitor access via intranet with pwd' }

- {user: '${monitor}' ,db: all ,addr: world ,auth: deny ,title: 'reject all other monitor access addr' }

- {user: '${admin}' ,db: all ,addr: intra ,auth: ssl ,title: 'admin access via intranet with pwd' }

- {user: '${admin}' ,db: all ,addr: world ,auth: deny ,title: 'reject all other admin access addr' }

- {user: 'all' ,db: all ,addr: intra ,auth: ssl ,title: 'allow all user intra access with pwd' }

# OPTIONAL delayed cluster for pg-meta

pg-meta-delay: # delayed instance for pg-meta (1 hour ago)

hosts: { 10.10.10.13: { pg_seq: 1, pg_role: primary, pg_upstream: 10.10.10.10, pg_delay: 1h } }

vars: { pg_cluster: pg-meta-delay }

Citus分布式集群

下面是一个四节点的 Citus 分布式集群的声明式配置:

all:

children:

pg-citus0: # citus coordinator, pg_group = 0

hosts: { 10.10.10.10: { pg_seq: 1, pg_role: primary } }

vars: { pg_cluster: pg-citus0 , pg_group: 0 }

pg-citus1: # citus data node 1

hosts: { 10.10.10.11: { pg_seq: 1, pg_role: primary } }

vars: { pg_cluster: pg-citus1 , pg_group: 1 }

pg-citus2: # citus data node 2

hosts: { 10.10.10.12: { pg_seq: 1, pg_role: primary } }

vars: { pg_cluster: pg-citus2 , pg_group: 2 }

pg-citus3: # citus data node 3, with an extra replica

hosts:

10.10.10.13: { pg_seq: 1, pg_role: primary }

10.10.10.14: { pg_seq: 2, pg_role: replica }

vars: { pg_cluster: pg-citus3 , pg_group: 3 }

vars: # global parameters for all citus clusters

pg_mode: citus # pgsql cluster mode: citus

pg_shard: pg-citus # citus shard name: pg-citus

patroni_citus_db: meta # citus distributed database name

pg_dbsu_password: DBUser.Postgres # all dbsu password access for citus cluster

pg_users: [ { name: dbuser_meta ,password: DBUser.Meta ,pgbouncer: true ,roles: [ dbrole_admin ] } ]

pg_databases: [ { name: meta ,extensions: [ { name: citus }, { name: postgis }, { name: timescaledb } ] } ]

pg_hba_rules:

- { user: 'all' ,db: all ,addr: 127.0.0.1/32 ,auth: ssl ,title: 'all user ssl access from localhost' }

- { user: 'all' ,db: all ,addr: intra ,auth: ssl ,title: 'all user ssl access from intranet' }

Redis集群

下面给出了 Redis 主从集群、哨兵集群、以及 Redis Cluster 的声明配置样例

redis-ms: # redis classic primary & replica

hosts: { 10.10.10.10: { redis_node: 1 , redis_instances: { 6379: { }, 6380: { replica_of: '10.10.10.10 6379' } } } }

vars: { redis_cluster: redis-ms ,redis_password: 'redis.ms' ,redis_max_memory: 64MB }

redis-meta: # redis sentinel x 3

hosts: { 10.10.10.11: { redis_node: 1 , redis_instances: { 26379: { } ,26380: { } ,26381: { } } } }

vars:

redis_cluster: redis-meta

redis_password: 'redis.meta'

redis_mode: sentinel

redis_max_memory: 16MB

redis_sentinel_monitor: # primary list for redis sentinel, use cls as name, primary ip:port

- { name: redis-ms, host: 10.10.10.10, port: 6379 ,password: redis.ms, quorum: 2 }

redis-test: # redis native cluster: 3m x 3s

hosts:

10.10.10.12: { redis_node: 1 ,redis_instances: { 6379: { } ,6380: { } ,6381: { } } }

10.10.10.13: { redis_node: 2 ,redis_instances: { 6379: { } ,6380: { } ,6381: { } } }

vars: { redis_cluster: redis-test ,redis_password: 'redis.test' ,redis_mode: cluster, redis_max_memory: 32MB }

ETCD集群

下面给出了一个三节点的 Etcd 集群声明式配置样例:

etcd: # dcs service for postgres/patroni ha consensus

hosts: # 1 node for testing, 3 or 5 for production

10.10.10.10: { etcd_seq: 1 } # etcd_seq required

10.10.10.11: { etcd_seq: 2 } # assign from 1 ~ n

10.10.10.12: { etcd_seq: 3 } # odd number please

vars: # cluster level parameter override roles/etcd

etcd_cluster: etcd # mark etcd cluster name etcd

etcd_safeguard: false # safeguard against purging

etcd_clean: true # purge etcd during init process

MinIO集群

下面给出了一个三节点的 MinIO 集群声明式配置样例:

minio:

hosts:

10.10.10.10: { minio_seq: 1 }

10.10.10.11: { minio_seq: 2 }

10.10.10.12: { minio_seq: 3 }

vars:

minio_cluster: minio

minio_data: '/data{1...2}' # 每个节点使用两块磁盘

minio_node: '${minio_cluster}-${minio_seq}.pigsty' # 节点名称的模式

haproxy_services:

- name: minio # [必选] 服务名称,需要唯一

port: 9002 # [必选] 服务端口,需要唯一

options:

- option httpchk

- option http-keep-alive

- http-check send meth OPTIONS uri /minio/health/live

- http-check expect status 200

servers:

- { name: minio-1 ,ip: 10.10.10.10 , port: 9000 , options: 'check-ssl ca-file /etc/pki/ca.crt check port 9000' }

- { name: minio-2 ,ip: 10.10.10.11 , port: 9000 , options: 'check-ssl ca-file /etc/pki/ca.crt check port 9000' }

- { name: minio-3 ,ip: 10.10.10.12 , port: 9000 , options: 'check-ssl ca-file /etc/pki/ca.crt check port 9000' }

6 - 数据库高可用

概览

Pigsty 的 PostgreSQL 集群带有开箱即用的高可用方案,由 Patroni、Etcd 和 HAProxy 强力驱动。

当您的 PostgreSQL 集群含有两个或更多实例时,您无需任何配置即拥有了硬件故障自愈的数据库高可用能力 —— 只要集群中有任意实例存活,集群就可以对外提供完整的服务,而客户端只要连接至集群中的任意节点,即可获得完整的服务,而无需关心主从拓扑变化。

在默认配置下,主库故障恢复时间目标 RTO ≈ 30s,数据恢复点目标 RPO < 1MB;从库故障 RPO = 0,RTO ≈ 0 (闪断);在一致性优先模式下,可确保故障切换数据零损失:RPO = 0。以上指标均可通过参数,根据您的实际硬件条件与可靠性要求 按需配置。

Pigsty 内置了 HAProxy 负载均衡器用于自动流量切换,提供 DNS/VIP/LVS 等多种接入方式供客户端选用。故障切换与主动切换对业务侧除零星闪断外几乎无感知,应用不需要修改连接串重启。 极小的维护窗口需求带来了极大的灵活便利:您完全可以在无需应用配合的情况下滚动维护升级整个集群。硬件故障可以等到第二天再抽空善后处置的特性,让研发,运维与 DBA 都能在故障时安心睡个好觉。

许多大型组织与核心机构已经在生产环境中长时间使用 Pigsty ,最大的部署有 25K CPU 核心与 220+ PostgreSQL 超大规格实例(64c / 512g / 3TB NVMe SSD);在这一部署案例中,五年内经历了数十次硬件故障与各类事故,但依然可以保持高于 99.999% 的总体可用性战绩。

高可用(High-Availability)解决什么问题?

- 将数据安全C/IA中的可用性提高到一个新高度:RPO ≈ 0, RTO < 30s。

- 获得无缝滚动维护的能力,最小化维护窗口需求,带来极大便利。

- 硬件故障可以立即自愈,无需人工介入,运维DBA可以睡个好觉。

- 从库可以用于承载只读请求,分担主库负载,让资源得以充分利用。

高可用有什么代价?

- 基础设施依赖:高可用需要依赖 DCS (etcd/zk/consul) 提供共识。

- 起步门槛增加:一个有意义的高可用部署环境至少需要 三个节点。

- 额外的资源消耗:一个新从库就要消耗一份额外资源,不算大问题。

- 复杂度代价显著升高:备份成本显著加大,需要使用工具压制复杂度。

高可用的局限性

因为复制实时进⾏,所有变更被⽴即应⽤⾄从库。因此基于流复制的高可用方案⽆法应对⼈为错误与软件缺陷导致的数据误删误改。(例如:DROP TABLE,或 DELETE 数据)

此类故障需要使用 延迟集群 ,或使用先前的基础备份与 WAL 归档进行 时间点恢复。

| 配置策略 | RTO | RPO |

|---|---|---|

| 单机 + 什么也不做 | 数据永久丢失,无法恢复 | 数据全部丢失 |

| 单机 + 基础备份 | 取决于备份大小与带宽(几小时) | 丢失上一次备份后的数据(几个小时到几天) |

| 单机 + 基础备份 + WAL归档 | 取决于备份大小与带宽(几小时) | 丢失最后尚未归档的数据(几十MB) |

| 主从 + 手工故障切换 | 十分钟 | 丢失复制延迟中的数据(约百KB) |

| 主从 + 自动故障切换 | 一分钟内 | 丢失复制延迟中的数据(约百KB) |

| 主从 + 自动故障切换 + 同步提交 | 一分钟内 | 无数据丢失 |

原理

在 Pigsty 中,高可用架构的实现原理如下:

- PostgreSQL 使⽤标准流复制搭建物理从库,主库故障时由从库接管。

- Patroni 负责管理 PostgreSQL 服务器进程,处理高可用相关事宜。

- Etcd 提供分布式配置存储(DCS)能力,并用于故障后的领导者选举

- Patroni 依赖 Etcd 达成集群领导者共识,并对外提供健康检查接口。

- HAProxy 对外暴露集群服务,并利⽤ Patroni 健康检查接口,自动分发流量至健康节点。

- vip-manager 提供一个可选的二层 VIP,从 Etcd 中获取领导者信息,并将 VIP 绑定在集群主库所在节点上。

当主库故障时,将触发新一轮领导者竞选,集群中最为健康的从库将胜出(LSN位点最高,数据损失最小者),并被提升为新的主库。 胜选从库提升后,读写流量将立即路由至新的主库。 主库故障影响是 写服务短暂不可用:从主库故障到新主库提升期间,写入请求将被阻塞或直接失败,不可用时长通常在 15秒 ~ 30秒,通常不会超过 1 分钟。

当从库故障时,只读流量将路由至其他从库,如果所有从库都故障,只读流量才会最终由主库承载。 从库故障的影响是 部分只读查询闪断:当前从库上正在运行查询将由于连接重置而中止,并立即由其他可用从库接管。

故障检测由 Patroni 和 Etcd 共同完成,集群领导者将持有一个租约, 如果集群领导者因为故障而没有及时续租(10s),租约将会被释放,并触发 故障切换(Failover) 与新一轮集群选举。

即使没有出现任何故障,您依然可以主动通过 主动切换 (Switchover)变更集群的主库。 在这种情况下,主库上的写入查询将会闪断,并立即路由至新主库执行。这一操作通常可用于滚动维护/升级数据库服务器。

利弊权衡

故障恢复时间目标(RTO)与 数据恢复点目标(RPO)是高可用集群设计时需要仔细进行利弊权衡的两个参数。

Pigsty 使用的 RTO 与 RPO 默认值满足绝大多数场景下的可靠性要求,您可以根据您的硬件水平,网络质量,业务需求来合理调整它们。

RTO 与 RPO 并非越小越好!

过小的 RTO 将增大误报几率,过小的 RPO 将降低成功自动切换的概率。故障切换时的不可用时长上限由 pg_rto 参数控制,RTO 默认值为 30s,增大它将导致更长的主库故障转移写入不可用时长,而减少它将增加误报故障转移率(例如,由短暂网络抖动导致的反复切换)。

潜在数据丢失量的上限由 pg_rpo 参数控制,默认为 1MB,减小这个值可以降低故障切换时的数据损失上限,但也会增加故障时因为从库不够健康(落后太久)而拒绝自动切换的概率。

Pigsty 默认使用可用性优先模式,这意味着当主库故障时,它将尽快进行故障转移,尚未复制到从库的数据可能会丢失(常规万兆网络下,复制延迟在通常在几KB到100KB)。

如果您需要确保故障切换时不丢失任何数据,您可以使用 crit.yml 模板来确保在故障转移期间没有数据丢失,但这会牺牲一些性能作为代价。

相关参数

pg_rto

参数名称: pg_rto, 类型: int, 层次:C

以秒为单位的恢复时间目标(RTO)。这将用于计算 Patroni 的 TTL 值,默认为 30 秒。

如果主实例在这么长时间内失踪,将触发新的领导者选举,此值并非越低越好,它涉及到利弊权衡: 减小这个值可以减少集群故障转移期间的不可用时间(无法写入), 但会使集群对短期网络抖动更加敏感,从而增加误报触发故障转移的几率。 您需要根据网络状况和业务约束来配置这个值,在 故障几率 和 故障影响 之间做出权衡。

pg_rpo

参数名称: pg_rpo, 类型: int, 层次:C

以字节为单位的恢复点目标(RPO),默认值:1048576。

默认为 1MiB,这意味着在故障转移期间最多可以容忍 1MiB 的数据丢失。

当主节点宕机并且所有副本都滞后时,你必须做出一个艰难的选择: 是马上提升一个从库成为新的主库,付出可接受的数据丢失代价(例如,少于 1MB),并尽快将系统恢复服务。 还是等待主库重新上线(可能永远不会)以避免任何数据丢失,或放弃自动故障切换,等人工介入作出最终决策。 您需要根据业务的需求偏好配置这个值,在 可用性 和 一致性 之间进行 利弊权衡。

此外,您始终可以通过启用同步提交(例如:使用 crit.yml 模板),通过牺牲集群一部分延迟/吞吐性能来强制确保 RPO = 0。

对于数据一致性至关重要

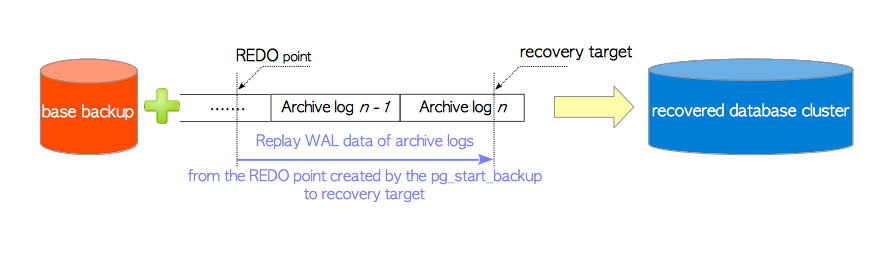

7 - 时间点恢复

概览

您可以将集群恢复回滚至过去任意时刻,避免软件缺陷与人为失误导致的数据损失。

Pigsty 的 PostgreSQL 集群带有自动配置的时间点恢复(PITR)方案,基于备份组件 pgBackRest 与可选的对象存储仓库 MinIO 提供。

高可用方案 可以解决硬件故障,但却对软件缺陷与人为失误导致的数据删除/覆盖写入/删库等问题却无能为力。 对于这种情况,Pigsty 提供了开箱即用的 时间点恢复(Point in Time Recovery, PITR)能力,无需额外配置即默认启用。

Pigsty 为您提供了基础备份与 WAL 归档的默认配置,您可以使用本地目录与磁盘,亦或专用的 MinIO 集群或 S3 对象存储服务来存储备份并实现异地容灾。 当您使用本地磁盘时,默认保留恢复至过去一天内的任意时间点的能力。当您使用 MinIO 或 S3 时,默认保留恢复至过去一周内的任意时间点的能力。 只要存储空间管够,您尽可保留任意长地可恢复时间段,丰俭由人。

时间点恢复(PITR)解决什么问题?

- 容灾能⼒增强:RPO 从 ∞ 降⾄ ⼗⼏MB, RTO 从 ∞ 降⾄ ⼏⼩时/⼏刻钟。

- 确保数据安全:C/I/A 中的 数据完整性:避免误删导致的数据⼀致性问题。

- 确保数据安全:C/I/A 中的 数据可⽤性:提供对“永久不可⽤”这种灾难情况的兜底

| 单实例配置策略 | 事件 | RTO | RPO |

|---|---|---|---|

| 什么也不做 | 宕机 | 永久丢失 | 全部丢失 |

| 基础备份 | 宕机 | 取决于备份大小与带宽(几小时) | 丢失上一次备份后的数据(几个小时到几天) |

| 基础备份 + WAL归档 | 宕机 | 取决于备份大小与带宽(几小时) | 丢失最后尚未归档的数据(几十MB) |

时间点恢复有什么代价?

- 降低数据安全中的 C:机密性,产生额外泄漏点,需要额外对备份进⾏保护。

- 额外的资源消耗:本地存储或⽹络流量 / 带宽开销,通常并不是⼀个问题。

- 复杂度代价升⾼:⽤户需要付出备份管理成本。

时间点恢复的局限性

如果只有 PITR 用于故障恢复,则 RTO 与 RPO 指标相比 高可用方案 更为逊色,通常应两者组合使用。

- RTO:如果只有单机 + PITR,恢复时长取决于备份大小与网络/磁盘带宽,从十几分钟到几小时,几天不等。

- RPO:如果只有单机 + PITR,宕机时可能丢失少量数据,一个或几个 WAL 日志段文件可能尚未归档,损失 16 MB 到⼏⼗ MB 不等的数据。

除了 PITR 之外,您还可以在 Pigsty 中使用 延迟集群 来解决人为失误或软件缺陷导致的数据误删误改问题。

原理

时间点恢复允许您将集群恢复回滚至过去的“任意时刻”,避免软件缺陷与人为失误导致的数据损失。要做到这一点,首先需要做好两样准备工作:基础备份 与 WAL归档。 拥有 基础备份,允许用户将数据库恢复至备份时的状态,而同时拥有从某个基础备份开始的 WAL归档,允许用户将数据库恢复至基础备份时刻之后的任意时间点。

详细原理,请参阅:基础备份与时间点恢复;具体操作,请参考 PGSQL管理:备份恢复。

基础备份

Pigsty 使用 pgbackrest 管理 PostgreSQL 备份。pgBackRest 将在所有集群实例上初始化空仓库,但只会在集群主库上实际使用仓库。

pgBackRest 支持三种备份模式:全量备份,增量备份,差异备份,其中前两者最为常用。 全量备份将对数据库集群取一个当前时刻的全量物理快照,增量备份会记录当前数据库集群与上一次全量备份之间的差异。

Pigsty 为备份提供了封装命令:/pg/bin/pg-backup [full|incr]。您可以通过 Crontab 或任何其他任务调度系统,按需定期制作基础备份。

WAL归档

Pigsty 默认在集群主库上启⽤了 WAL 归档,并使⽤ pgbackrest 命令行工具持续推送 WAL 段⽂件至备份仓库。

pgBackRest 会⾃动管理所需的 WAL ⽂件,并根据备份的保留策略及时清理过期的备份,与其对应的 WAL 归档⽂件。

如果您不需要 PITR 功能,可以通过 配置集群: archive_mode: off 来关闭 WAL 归档,移除 node_crontab 来停止定期备份任务。

实现

默认情况下,Pigsty提供了两种预置备份策略:默认使用本地文件系统备份仓库,在这种情况下每天进行一次全量备份,确保用户任何时候都能回滚至一天内的任意时间点。备选策略使用专用的 MinIO 集群或S3存储备份,每周一全备,每天一增备,默认保留两周的备份与WAL归档。

Pigsty 使用 pgBackRest 管理备份,接收 WAL 归档,执行 PITR。备份仓库可以进行灵活配置(pgbackrest_repo):默认使用主库本地文件系统(local),但也可以使用其他磁盘路径,或使用自带的可选 MinIO 服务(minio)与云上 S3 服务。

pgbackrest_enabled: true # 在 pgsql 主机上启用 pgBackRest 吗?

pgbackrest_clean: true # 初始化时删除 pg 备份数据?

pgbackrest_log_dir: /pg/log/pgbackrest # pgbackrest 日志目录,默认为 `/pg/log/pgbackrest`

pgbackrest_method: local # pgbackrest 仓库方法:local, minio, [用户定义...]

pgbackrest_repo: # pgbackrest 仓库:https://pgbackrest.org/configuration.html#section-repository

local: # 默认使用本地 posix 文件系统的 pgbackrest 仓库

path: /pg/backup # 本地备份目录,默认为 `/pg/backup`

retention_full_type: count # 按计数保留完整备份

retention_full: 2 # 使用本地文件系统仓库时,最多保留 3 个完整备份,至少保留 2 个

minio: # pgbackrest 的可选 minio 仓库

type: s3 # minio 是与 s3 兼容的,所以使用 s3

s3_endpoint: sss.pigsty # minio 端点域名,默认为 `sss.pigsty`

s3_region: us-east-1 # minio 区域,默认为 us-east-1,对 minio 无效

s3_bucket: pgsql # minio 桶名称,默认为 `pgsql`

s3_key: pgbackrest # pgbackrest 的 minio 用户访问密钥

s3_key_secret: S3User.Backup # pgbackrest 的 minio 用户秘密密钥

s3_uri_style: path # 对 minio 使用路径风格的 uri,而不是主机风格

path: /pgbackrest # minio 备份路径,默认为 `/pgbackrest`

storage_port: 9000 # minio 端口,默认为 9000

storage_ca_file: /etc/pki/ca.crt # minio ca 文件路径,默认为 `/etc/pki/ca.crt`

bundle: y # 将小文件打包成一个文件

cipher_type: aes-256-cbc # 为远程备份仓库启用 AES 加密

cipher_pass: pgBackRest # AES 加密密码,默认为 'pgBackRest'

retention_full_type: time # 在 minio 仓库上按时间保留完整备份

retention_full: 14 # 保留过去 14 天的完整备份

# 您还可以添加其他的可选备份仓库,例如 S3,用于异地容灾

Pigsty 参数 pgbackrest_repo 中的目标仓库会被转换为 /etc/pgbackrest/pgbackrest.conf 配置文件中的仓库定义。

例如,如果您定义了一个美西区的 S3 仓库用于存储冷备份,可以使用下面的参考配置。

s3: # ------> /etc/pgbackrest/pgbackrest.conf

repo1-type: s3 # ----> repo1-type=s3

repo1-s3-region: us-west-1 # ----> repo1-s3-region=us-west-1

repo1-s3-endpoint: s3-us-west-1.amazonaws.com # ----> repo1-s3-endpoint=s3-us-west-1.amazonaws.com

repo1-s3-key: '<your_access_key>' # ----> repo1-s3-key=<your_access_key>

repo1-s3-key-secret: '<your_secret_key>' # ----> repo1-s3-key-secret=<your_secret_key>

repo1-s3-bucket: pgsql # ----> repo1-s3-bucket=pgsql

repo1-s3-uri-style: host # ----> repo1-s3-uri-style=host

repo1-path: /pgbackrest # ----> repo1-path=/pgbackrest

repo1-bundle: y # ----> repo1-bundle=y

repo1-cipher-type: aes-256-cbc # ----> repo1-cipher-type=aes-256-cbc

repo1-cipher-pass: pgBackRest # ----> repo1-cipher-pass=pgBackRest

repo1-retention-full-type: time # ----> repo1-retention-full-type=time

repo1-retention-full: 90 # ----> repo1-retention-full=90

恢复

您可以直接使用以下封装命令可以用于 PostgreSQL 数据库集群的 时间点恢复。

Pigsty 默认使用增量差分并行恢复,允许您以最快速度恢复到指定时间点。

pg-pitr # 恢复到WAL存档流的结束位置(例如在整个数据中心故障的情况下使用)

pg-pitr -i # 恢复到最近备份完成的时间(不常用)

pg-pitr --time="2022-12-30 14:44:44+08" # 恢复到指定的时间点(在删除数据库或表的情况下使用)

pg-pitr --name="my-restore-point" # 恢复到使用 pg_create_restore_point 创建的命名恢复点

pg-pitr --lsn="0/7C82CB8" -X # 在LSN之前立即恢复

pg-pitr --xid="1234567" -X -P # 在指定的事务ID之前立即恢复,然后将集群直接提升为主库

pg-pitr --backup=latest # 恢复到最新的备份集

pg-pitr --backup=20221108-105325 # 恢复到特定备份集,备份集可以使用 pgbackrest info 列出

pg-pitr # pgbackrest --stanza=pg-meta restore

pg-pitr -i # pgbackrest --stanza=pg-meta --type=immediate restore

pg-pitr -t "2022-12-30 14:44:44+08" # pgbackrest --stanza=pg-meta --type=time --target="2022-12-30 14:44:44+08" restore

pg-pitr -n "my-restore-point" # pgbackrest --stanza=pg-meta --type=name --target=my-restore-point restore

pg-pitr -b 20221108-105325F # pgbackrest --stanza=pg-meta --type=name --set=20221230-120101F restore

pg-pitr -l "0/7C82CB8" -X # pgbackrest --stanza=pg-meta --type=lsn --target="0/7C82CB8" --target-exclusive restore

pg-pitr -x 1234567 -X -P # pgbackrest --stanza=pg-meta --type=xid --target="0/7C82CB8" --target-exclusive --target-action=promote restore

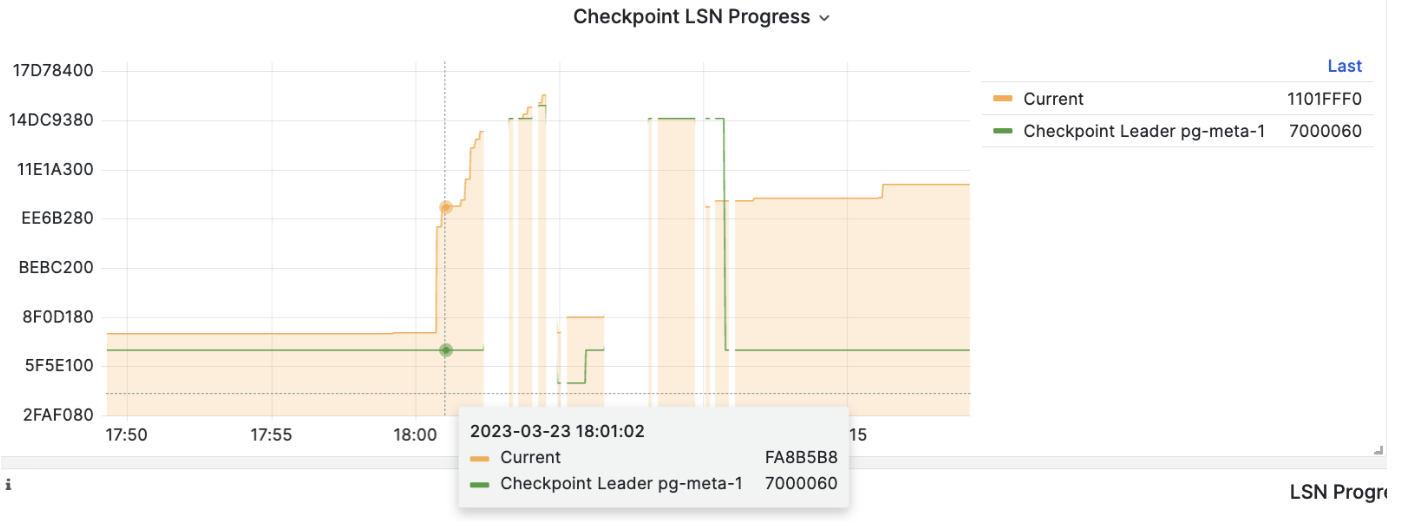

在执行 PITR 时,您可以使用 Pigsty 监控系统观察集群 LSN 位点状态,判断是否成功恢复到指定的时间点,事务点,LSN位点,或其他点位。

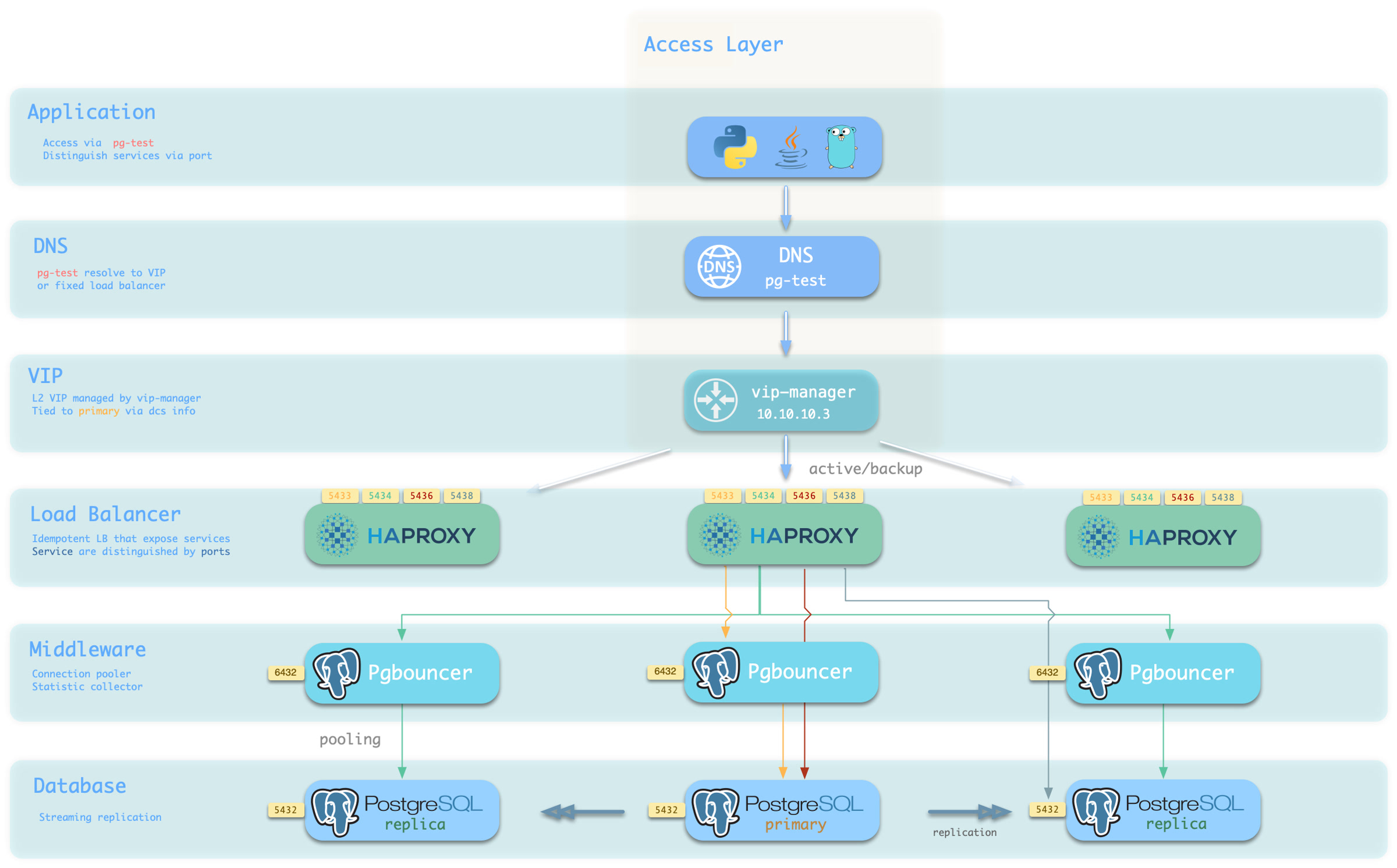

8 - 服务接入

分离读写操作,正确路由流量,稳定可靠地交付 PostgreSQL 集群提供的能力。

服务是一种抽象:它是数据库集群对外提供能力的形式,并封装了底层集群的细节。

服务对于生产环境中的稳定接入至关重要,在高可用集群自动故障时方显其价值,单机用户通常不需要操心这个概念。

单机用户

“服务” 的概念是给生产环境用的,个人用户/单机集群可以不折腾,直接拿实例名/IP地址访问数据库。

例如,Pigsty 默认的单节点 pg-meta.meta 数据库,就可以直接用下面三个不同的用户连接上去。

psql postgres://dbuser_dba:DBUser.DBA@10.10.10.10/meta # 直接用 DBA 超级用户连上去

psql postgres://dbuser_meta:DBUser.Meta@10.10.10.10/meta # 用默认的业务管理员用户连上去

psql postgres://dbuser_view:DBUser.View@pg-meta/meta # 用默认的只读用户走实例域名连上去

服务概述

在真实世界生产环境中,我们会使用基于复制的主从数据库集群。集群中有且仅有一个实例作为领导者(主库)可以接受写入。 而其他实例(从库)则会从持续从集群领导者获取变更日志,与领导者保持一致。同时,从库还可以承载只读请求,在读多写少的场景下可以显著分担主库的负担, 因此对集群的写入请求与只读请求进行区分,是一种十分常见的实践。

此外对于高频短连接的生产环境,我们还会通过连接池中间件(Pgbouncer)对请求进行池化,减少连接与后端进程的创建开销。但对于ETL与变更执行等场景,我们又需要绕过连接池,直接访问数据库。 同时,高可用集群在故障时会出现故障切换(Failover),故障切换会导致集群的领导者出现变更。因此高可用的数据库方案要求写入流量可以自动适配集群的领导者变化。 这些不同的访问需求(读写分离,池化与直连,故障切换自动适配)最终抽象出 服务 (Service)的概念。

通常来说,数据库集群都必须提供这种最基础的服务:

- 读写服务(primary) :可以读写数据库

对于生产数据库集群,至少应当提供这两种服务:

- 读写服务(primary) :写入数据:只能由主库所承载。

- 只读服务(replica) :读取数据:可以由从库承载,没有从库时也可由主库承载

此外,根据具体的业务场景,可能还会有其他的服务,例如:

- 默认直连服务(default) :允许(管理)用户,绕过连接池直接访问数据库的服务

- 离线从库服务(offline) :不承接线上只读流量的专用从库,用于ETL与分析查询

- 同步从库服务(standby) :没有复制延迟的只读服务,由同步备库/主库处理只读查询

- 延迟从库服务(delayed) :访问同一个集群在一段时间之前的旧数据,由延迟从库来处理

接入服务

Pigsty的服务交付边界止步于集群的HAProxy,用户可以用各种手段访问这些负载均衡器。

典型的做法是使用 DNS 或 VIP 接入,将其绑定在集群所有或任意数量的负载均衡器上。

你可以使用不同的 主机 & 端口 组合,它们以不同的方式提供 PostgreSQL 服务。

主机

| 类型 | 样例 | 描述 |

|---|---|---|

| 集群域名 | pg-test |

通过集群域名访问(由 dnsmasq @ infra 节点解析) |

| 集群 VIP 地址 | 10.10.10.3 |

通过由 vip-manager 管理的 L2 VIP 地址访问,绑定到主节点 |

| 实例主机名 | pg-test-1 |

通过任何实例主机名访问(由 dnsmasq @ infra 节点解析) |

| 实例 IP 地址 | 10.10.10.11 |

访问任何实例的 IP 地址 |

端口

Pigsty 使用不同的 端口 来区分 pg services

| 端口 | 服务 | 类型 | 描述 |

|---|---|---|---|

| 5432 | postgres | 数据库 | 直接访问 postgres 服务器 |

| 6432 | pgbouncer | 中间件 | 访问 postgres 前先通过连接池中间件 |

| 5433 | primary | 服务 | 访问主 pgbouncer (或 postgres) |

| 5434 | replica | 服务 | 访问备份 pgbouncer (或 postgres) |

| 5436 | default | 服务 | 访问主 postgres |

| 5438 | offline | 服务 | 访问离线 postgres |

组合

# 通过集群域名访问

postgres://test@pg-test:5432/test # DNS -> L2 VIP -> 主直接连接

postgres://test@pg-test:6432/test # DNS -> L2 VIP -> 主连接池 -> 主

postgres://test@pg-test:5433/test # DNS -> L2 VIP -> HAProxy -> 主连接池 -> 主

postgres://test@pg-test:5434/test # DNS -> L2 VIP -> HAProxy -> 备份连接池 -> 备份

postgres://dbuser_dba@pg-test:5436/test # DNS -> L2 VIP -> HAProxy -> 主直接连接 (用于管理员)

postgres://dbuser_stats@pg-test:5438/test # DNS -> L2 VIP -> HAProxy -> 离线直接连接 (用于 ETL/个人查询)

# 通过集群 VIP 直接访问

postgres://test@10.10.10.3:5432/test # L2 VIP -> 主直接访问

postgres://test@10.10.10.3:6432/test # L2 VIP -> 主连接池 -> 主

postgres://test@10.10.10.3:5433/test # L2 VIP -> HAProxy -> 主连接池 -> 主

postgres://test@10.10.10.3:5434/test # L2 VIP -> HAProxy -> 备份连接池 -> 备份

postgres://dbuser_dba@10.10.10.3:5436/test # L2 VIP -> HAProxy -> 主直接连接 (用于管理员)

postgres://dbuser_stats@10.10.10.3::5438/test # L2 VIP -> HAProxy -> 离线直接连接 (用于 ETL/个人查询)

# 直接指定任何集群实例名

postgres://test@pg-test-1:5432/test # DNS -> 数据库实例直接连接 (单例访问)

postgres://test@pg-test-1:6432/test # DNS -> 连接池 -> 数据库

postgres://test@pg-test-1:5433/test # DNS -> HAProxy -> 连接池 -> 数据库读/写

postgres://test@pg-test-1:5434/test # DNS -> HAProxy -> 连接池 -> 数据库只读

postgres://dbuser_dba@pg-test-1:5436/test # DNS -> HAProxy -> 数据库直接连接

postgres://dbuser_stats@pg-test-1:5438/test # DNS -> HAProxy -> 数据库离线读/写

# 直接指定任何集群实例 IP 访问

postgres://test@10.10.10.11:5432/test # 数据库实例直接连接 (直接指定实例, 没有自动流量分配)

postgres://test@10.10.10.11:6432/test # 连接池 -> 数据库

postgres://test@10.10.10.11:5433/test # HAProxy -> 连接池 -> 数据库读/写

postgres://test@10.10.10.11:5434/test # HAProxy -> 连接池 -> 数据库只读

postgres://dbuser_dba@10.10.10.11:5436/test # HAProxy -> 数据库直接连接

postgres://dbuser_stats@10.10.10.11:5438/test # HAProxy -> 数据库离线读-写

# 智能客户端:通过URL读写分离

postgres://test@10.10.10.11:6432,10.10.10.12:6432,10.10.10.13:6432/test?target_session_attrs=primary

postgres://test@10.10.10.11:6432,10.10.10.12:6432,10.10.10.13:6432/test?target_session_attrs=prefer-standby

9 - 访问控制

权限控制很重要,但很多用户做不好。因此 Pigsty 提供了一套开箱即用的精简访问控制模型,为您的集群安全性提供一个兜底。

角色系统

Pigsty 默认的角色系统包含四个默认角色和四个默认用户:

| 角色名称 | 属性 | 所属 | 描述 |

|---|---|---|---|

dbrole_readonly |

NOLOGIN |

角色:全局只读访问 | |

dbrole_readwrite |

NOLOGIN |

dbrole_readonly | 角色:全局读写访问 |

dbrole_admin |

NOLOGIN |

pg_monitor,dbrole_readwrite | 角色:管理员/对象创建 |

dbrole_offline |

NOLOGIN |

角色:受限的只读访问 | |

postgres |

SUPERUSER |

系统超级用户 | |

replicator |

REPLICATION |

pg_monitor,dbrole_readonly | 系统复制用户 |

dbuser_dba |

SUPERUSER |

dbrole_admin | pgsql 管理用户 |

dbuser_monitor |

pg_monitor | pgsql 监控用户 |

这些角色与用户的详细定义如下所示:

pg_default_roles: # 全局默认的角色与系统用户

- { name: dbrole_readonly ,login: false ,comment: role for global read-only access }

- { name: dbrole_offline ,login: false ,comment: role for restricted read-only access }

- { name: dbrole_readwrite ,login: false ,roles: [dbrole_readonly] ,comment: role for global read-write access }

- { name: dbrole_admin ,login: false ,roles: [pg_monitor, dbrole_readwrite] ,comment: role for object creation }

- { name: postgres ,superuser: true ,comment: system superuser }

- { name: replicator ,replication: true ,roles: [pg_monitor, dbrole_readonly] ,comment: system replicator }

- { name: dbuser_dba ,superuser: true ,roles: [dbrole_admin] ,pgbouncer: true ,pool_mode: session, pool_connlimit: 16 ,comment: pgsql admin user }

- { name: dbuser_monitor ,roles: [pg_monitor] ,pgbouncer: true ,parameters: {log_min_duration_statement: 1000 } ,pool_mode: session ,pool_connlimit: 8 ,comment: pgsql monitor user }

默认角色

Pigsty 中有四个默认角色:

- 业务只读 (

dbrole_readonly): 用于全局只读访问的角色。如果别的业务想要此库只读访问权限,可以使用此角色。 - 业务读写 (

dbrole_readwrite): 用于全局读写访问的角色,主属业务使用的生产账号应当具有数据库读写权限 - 业务管理员 (

dbrole_admin): 拥有DDL权限的角色,通常用于业务管理员,或者需要在应用中建表的场景(比如各种业务软件) - 离线只读访问 (

dbrole_offline): 受限的只读访问角色(只能访问 offline 实例,通常是个人用户,ETL工具账号)

默认角色在 pg_default_roles 中定义,除非您确实知道自己在干什么,建议不要更改默认角色的名称。

- { name: dbrole_readonly , login: false , comment: role for global read-only access } # 生产环境的只读角色

- { name: dbrole_offline , login: false , comment: role for restricted read-only access (offline instance) } # 受限的只读角色

- { name: dbrole_readwrite , login: false , roles: [dbrole_readonly], comment: role for global read-write access } # 生产环境的读写角色

- { name: dbrole_admin , login: false , roles: [pg_monitor, dbrole_readwrite] , comment: role for object creation } # 生产环境的 DDL 更改角色

默认用户

Pigsty 也有四个默认用户(系统用户):

- 超级用户 (

postgres),集群的所有者和创建者,与操作系统 dbsu 名称相同。 - 复制用户 (

replicator),用于主-从复制的系统用户。 - 监控用户 (

dbuser_monitor),用于监控数据库和连接池指标的用户。 - 管理用户 (

dbuser_dba),执行日常操作和数据库更改的管理员用户。

这4个默认用户的用户名/密码通过4对专用参数进行定义,并在很多地方引用:

pg_dbsu:操作系统 dbsu 名称,默认为 postgres,最好不要更改它pg_dbsu_password:dbsu 密码,默认为空字符串意味着不设置 dbsu 密码,最好不要设置。pg_replication_username:postgres 复制用户名,默认为replicatorpg_replication_password:postgres 复制密码,默认为DBUser.Replicatorpg_admin_username:postgres 管理员用户名,默认为dbuser_dbapg_admin_password:postgres 管理员密码的明文,默认为DBUser.DBApg_monitor_username:postgres 监控用户名,默认为dbuser_monitorpg_monitor_password:postgres 监控密码,默认为DBUser.Monitor

在生产部署中记得更改这些密码,不要使用默认值!

pg_dbsu: postgres # 数据库超级用户名,这个用户名建议不要修改。

pg_dbsu_password: '' # 数据库超级用户密码,这个密码建议留空!禁止dbsu密码登陆。

pg_replication_username: replicator # 系统复制用户名

pg_replication_password: DBUser.Replicator # 系统复制密码,请务必修改此密码!

pg_monitor_username: dbuser_monitor # 系统监控用户名

pg_monitor_password: DBUser.Monitor # 系统监控密码,请务必修改此密码!

pg_admin_username: dbuser_dba # 系统管理用户名

pg_admin_password: DBUser.DBA # 系统管理密码,请务必修改此密码!

如果您修改默认用户的参数,在 pg_default_roles 中修改相应的角色定义即可:

- { name: postgres ,superuser: true ,comment: system superuser }

- { name: replicator ,replication: true ,roles: [pg_monitor, dbrole_readonly] ,comment: system replicator }

- { name: dbuser_dba ,superuser: true ,roles: [dbrole_admin] ,pgbouncer: true ,pool_mode: session, pool_connlimit: 16 , comment: pgsql admin user }

- { name: dbuser_monitor ,roles: [pg_monitor, dbrole_readonly] ,pgbouncer: true ,parameters: {log_min_duration_statement: 1000 } ,pool_mode: session ,pool_connlimit: 8 ,comment: pgsql monitor user }

权限系统

Pigsty 拥有一套开箱即用的权限模型,该模型与默认角色一起配合工作。

- 所有用户都可以访问所有模式。

- 只读用户(

dbrole_readonly)可以从所有表中读取数据。(SELECT,EXECUTE) - 读写用户(

dbrole_readwrite)可以向所有表中写入数据并运行 DML。(INSERT,UPDATE,DELETE)。 - 管理员用户(

dbrole_admin)可以创建对象并运行 DDL(CREATE,USAGE,TRUNCATE,REFERENCES,TRIGGER)。 - 离线用户(

dbrole_offline)类似只读用户,但访问受到限制,只允许访问离线实例(pg_role = 'offline'或pg_offline_query = true) - 由管理员用户创建的对象将具有正确的权限。

- 所有数据库上都配置了默认权限,包括模板数据库。

- 数据库连接权限由数据库定义管理。

- 默认撤销

PUBLIC在数据库和public模式下的CREATE权限。

对象权限

数据库中新建对象的默认权限由参数 pg_default_privileges 所控制:

- GRANT USAGE ON SCHEMAS TO dbrole_readonly

- GRANT SELECT ON TABLES TO dbrole_readonly

- GRANT SELECT ON SEQUENCES TO dbrole_readonly

- GRANT EXECUTE ON FUNCTIONS TO dbrole_readonly

- GRANT USAGE ON SCHEMAS TO dbrole_offline

- GRANT SELECT ON TABLES TO dbrole_offline

- GRANT SELECT ON SEQUENCES TO dbrole_offline

- GRANT EXECUTE ON FUNCTIONS TO dbrole_offline

- GRANT INSERT ON TABLES TO dbrole_readwrite

- GRANT UPDATE ON TABLES TO dbrole_readwrite

- GRANT DELETE ON TABLES TO dbrole_readwrite

- GRANT USAGE ON SEQUENCES TO dbrole_readwrite

- GRANT UPDATE ON SEQUENCES TO dbrole_readwrite

- GRANT TRUNCATE ON TABLES TO dbrole_admin

- GRANT REFERENCES ON TABLES TO dbrole_admin

- GRANT TRIGGER ON TABLES TO dbrole_admin

- GRANT CREATE ON SCHEMAS TO dbrole_admin

由管理员新创建的对象,默认将会上述权限。使用 \ddp+ 可以查看这些默认权限:

| 类型 | 访问权限 |

|---|---|

| 函数 | =X |

| dbrole_readonly=X | |

| dbrole_offline=X | |

| dbrole_admin=X | |

| 模式 | dbrole_readonly=U |

| dbrole_offline=U | |

| dbrole_admin=UC | |

| 序列号 | dbrole_readonly=r |

| dbrole_offline=r | |

| dbrole_readwrite=wU | |

| dbrole_admin=rwU | |

| 表 | dbrole_readonly=r |

| dbrole_offline=r | |

| dbrole_readwrite=awd | |

| dbrole_admin=arwdDxt |

默认权限

SQL 语句 ALTER DEFAULT PRIVILEGES 允许您设置将来创建的对象的权限。 它不会影响已经存在对象的权限,也不会影响非管理员用户创建的对象。

在 Pigsty 中,默认权限针对三个角色进行定义:

{% for priv in pg_default_privileges %}

ALTER DEFAULT PRIVILEGES FOR ROLE {{ pg_dbsu }} {{ priv }};

{% endfor %}

{% for priv in pg_default_privileges %}

ALTER DEFAULT PRIVILEGES FOR ROLE {{ pg_admin_username }} {{ priv }};

{% endfor %}

-- 对于其他业务管理员而言,它们应当在执行 DDL 前执行 SET ROLE dbrole_admin,从而使用对应的默认权限配置。

{% for priv in pg_default_privileges %}

ALTER DEFAULT PRIVILEGES FOR ROLE "dbrole_admin" {{ priv }};

{% endfor %}

这些内容将会被 PG集群初始化模板 pg-init-template.sql 所使用,在集群初始化的过程中渲染并输出至 /pg/tmp/pg-init-template.sql。

该命令会在 template1 与 postgres 数据库中执行,新创建的数据库会通过模板 template1 继承这些默认权限配置。

也就是说,为了维持正确的对象权限,您必须用管理员用户来执行 DDL,它们可以是:

{{ pg_dbsu }},默认为postgres{{ pg_admin_username }},默认为dbuser_dba- 授予了

dbrole_admin角色的业务管理员用户(通过SET ROLE切换为dbrole_admin身份)。

使用 postgres 作为全局对象所有者是明智的。如果您希望以业务管理员用户身份创建对象,创建之前必须使用 SET ROLE dbrole_admin 来维护正确的权限。

当然,您也可以在数据库中通过 ALTER DEFAULT PRIVILEGE FOR ROLE <some_biz_admin> XXX 来显式对业务管理员授予默认权限。

数据库权限

在 Pigsty 中,数据库(Database)层面的权限在数据库定义中被涵盖。

数据库有三个级别的权限:CONNECT、CREATE、TEMP,以及一个特殊的’权限’:OWNERSHIP。

- name: meta # 必选,`name` 是数据库定义中唯一的必选字段

owner: postgres # 可选,数据库所有者,默认为 postgres

allowconn: true # 可选,是否允许连接,默认为 true。显式设置 false 将完全禁止连接到此数据库

revokeconn: false # 可选,撤销公共连接权限。默认为 false,设置为 true 时,属主和管理员之外用户的 CONNECT 权限会被回收

- 如果

owner参数存在,它作为数据库属主,替代默认的{{ pg_dbsu }}(通常也就是postgres) - 如果

revokeconn为false,所有用户都有数据库的CONNECT权限,这是默认的行为。 - 如果显式设置了

revokeconn为true:- 数据库的

CONNECT权限将从PUBLIC中撤销:普通用户无法连接上此数据库 CONNECT权限将被显式授予{{ pg_replication_username }}、{{ pg_monitor_username }}和{{ pg_admin_username }}CONNECT权限将GRANT OPTION被授予数据库属主,数据库属主用户可以自行授权其他用户连接权限。

- 数据库的

revokeconn选项可用于在同一个集群间隔离跨数据库访问,您可以为每个数据库创建不同的业务用户作为属主,并为它们设置revokeconn选项。

示例:数据库隔离

pg-infra:

hosts:

10.10.10.40: { pg_seq: 1, pg_role: primary }

10.10.10.41: { pg_seq: 2, pg_role: replica , pg_offline_query: true }

vars:

pg_cluster: pg-infra

pg_users:

- { name: dbuser_confluence, password: mc2iohos , pgbouncer: true, roles: [ dbrole_admin ] }

- { name: dbuser_gitlab, password: sdf23g22sfdd , pgbouncer: true, roles: [ dbrole_readwrite ] }

- { name: dbuser_jira, password: sdpijfsfdsfdfs , pgbouncer: true, roles: [ dbrole_admin ] }

pg_databases:

- { name: confluence , revokeconn: true, owner: dbuser_confluence , connlimit: 100 }

- { name: gitlab , revokeconn: true, owner: dbuser_gitlab, connlimit: 100 }

- { name: jira , revokeconn: true, owner: dbuser_jira , connlimit: 100 }

CREATE权限

出于安全考虑,Pigsty 默认从 PUBLIC 撤销数据库上的 CREATE 权限,从 PostgreSQL 15 开始这也是默认行为。

数据库属主总是可以根据实际需要,来自行调整 CREATE 权限。