模块:INFRA

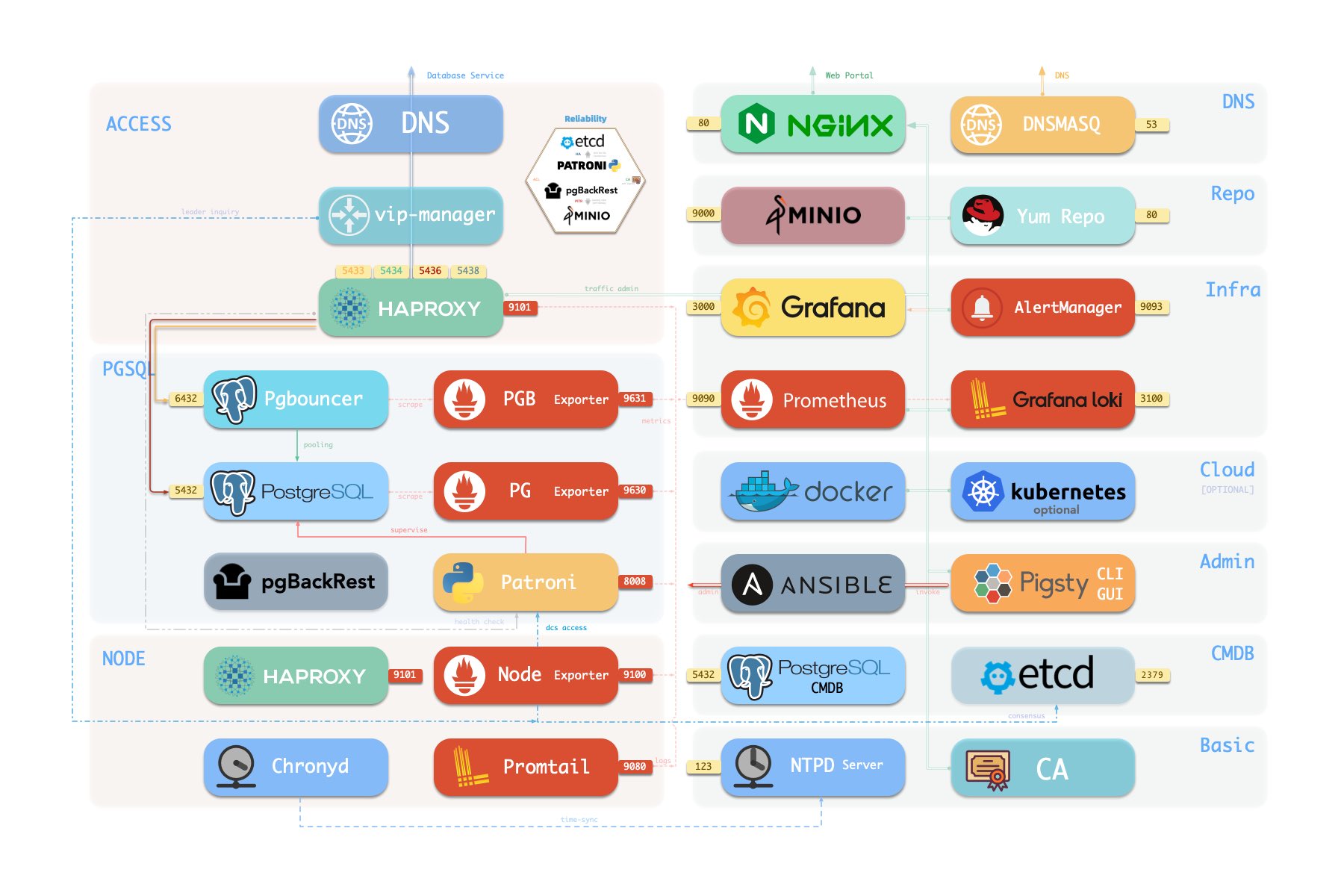

1 - 系统架构

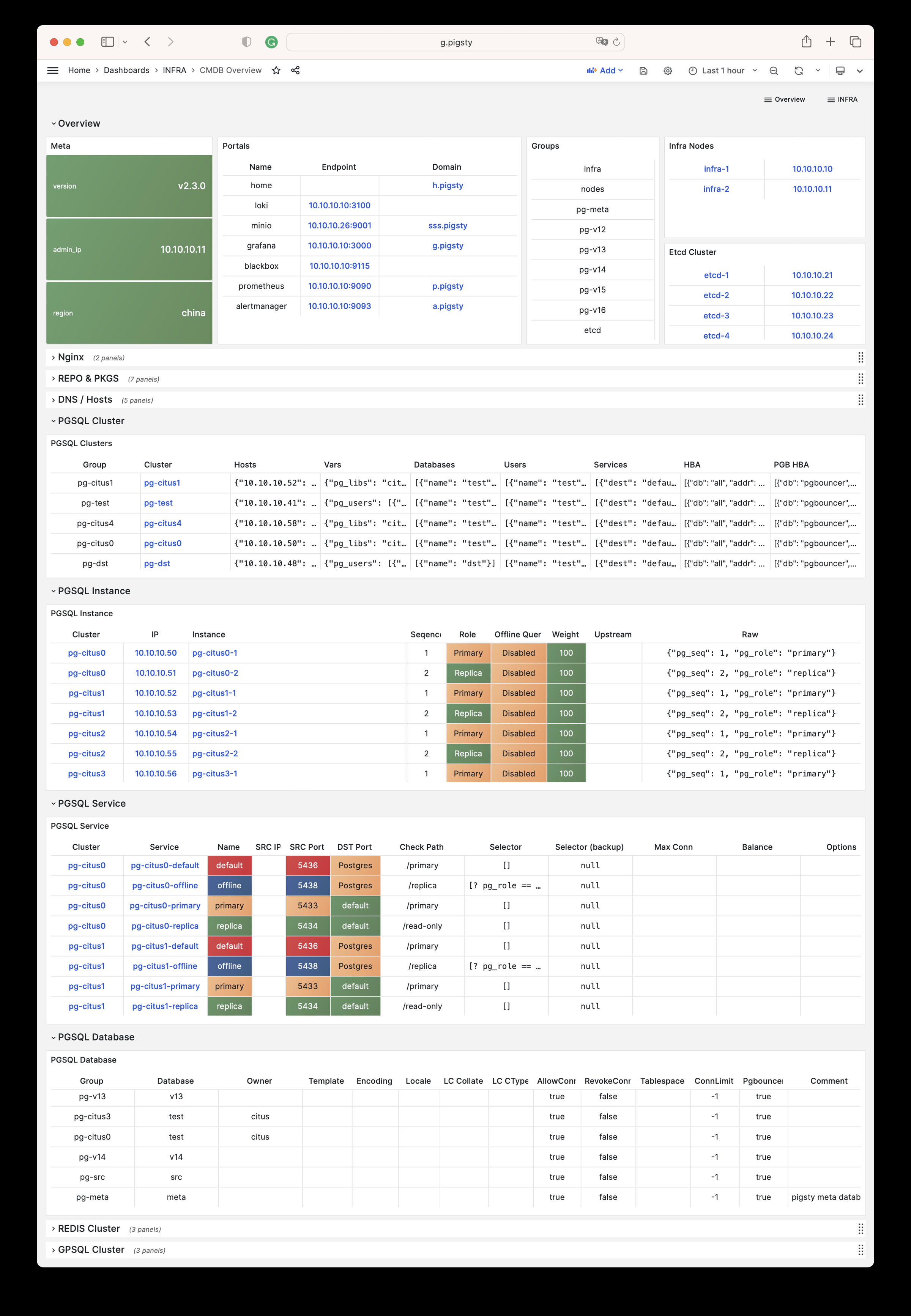

一套标准的 Pigsty 部署会带有一个 INFRA 模块,为纳管的节点与数据库集群提供服务:

- Nginx:作为 Web 服务器,提供本地软件仓库服务;作为反向代理,统一收拢其他 Web UI 服务的访问

- Grafana:可视化平台,呈现监控指标,展现面板大屏,或者进行数据分析与可视化。

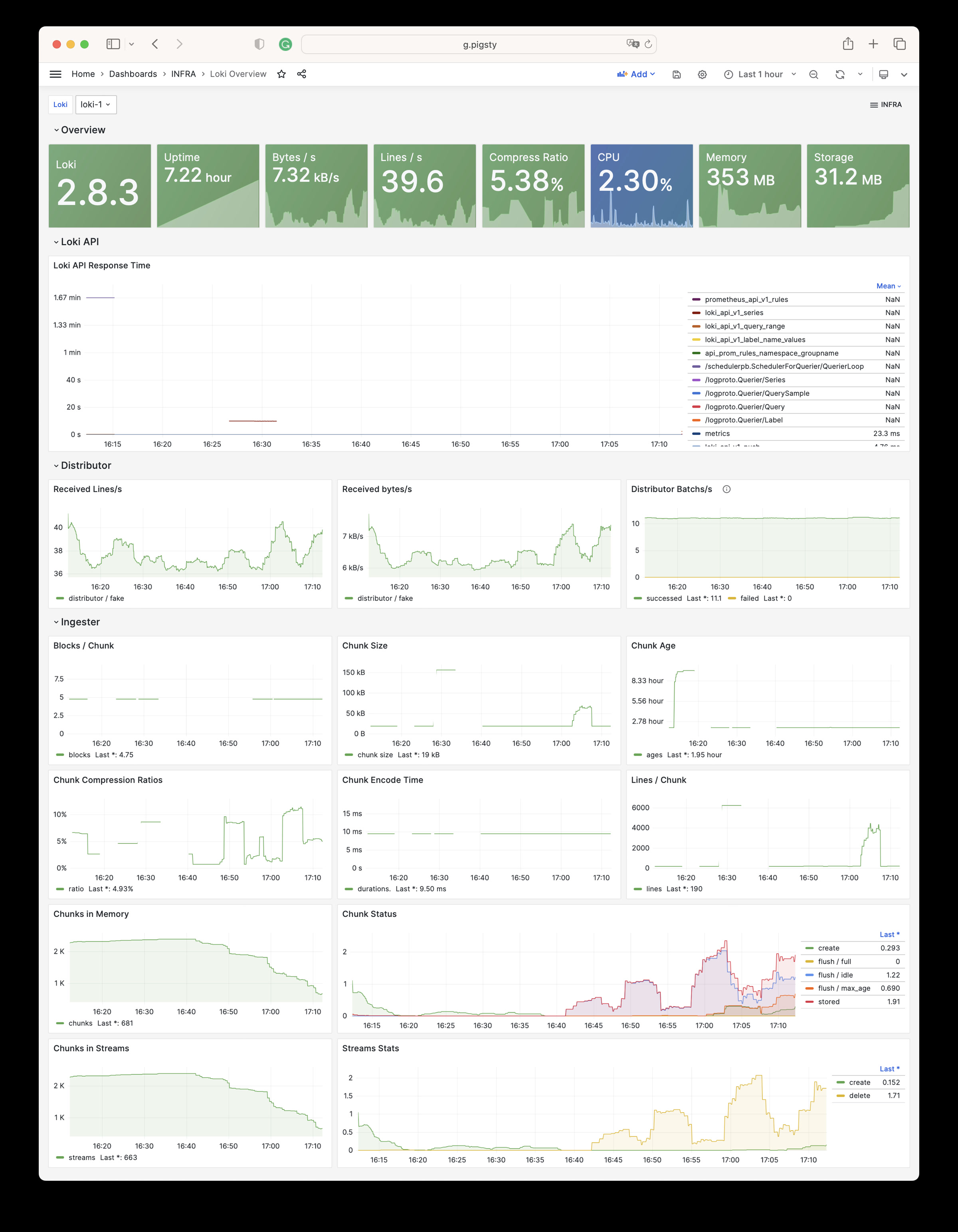

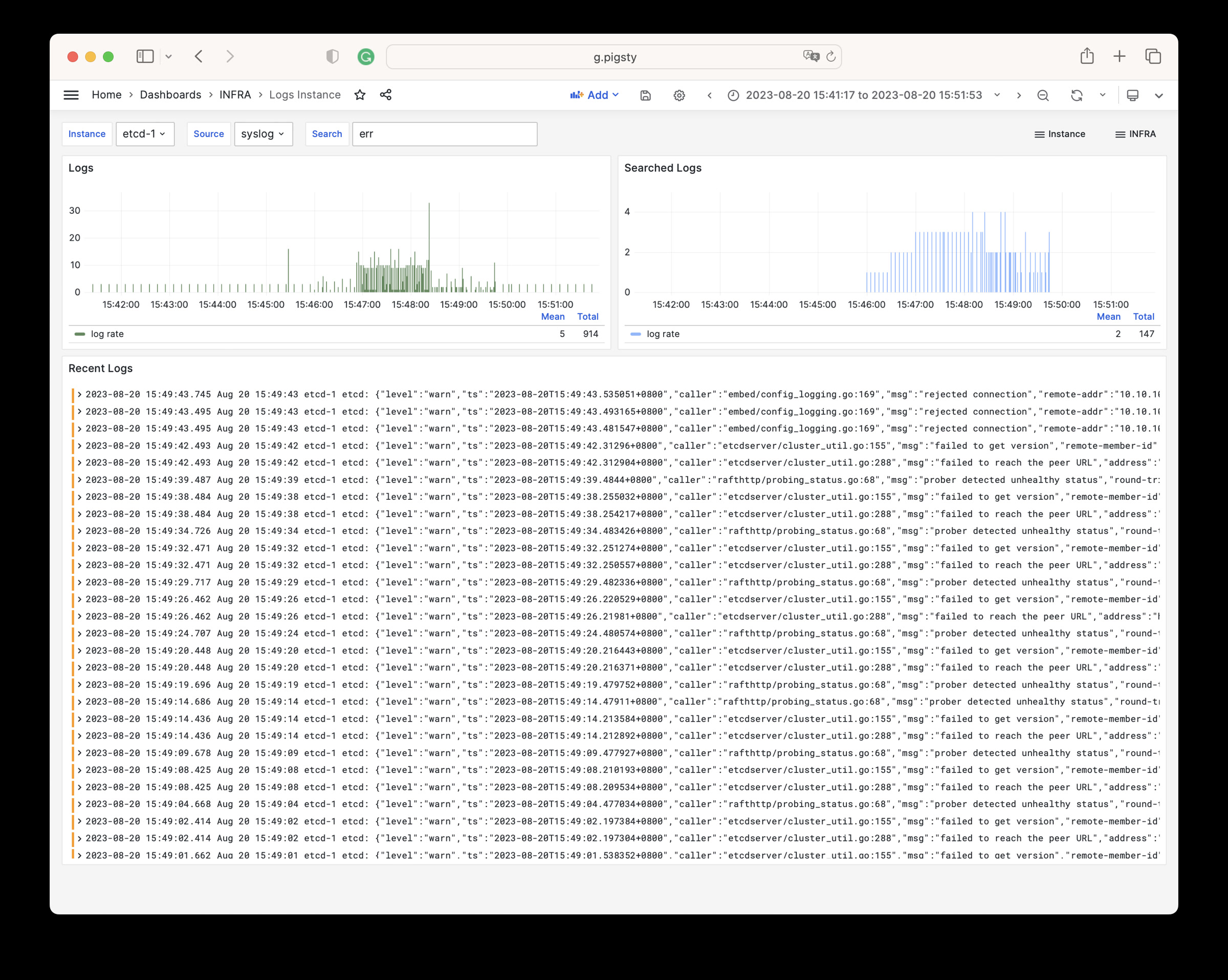

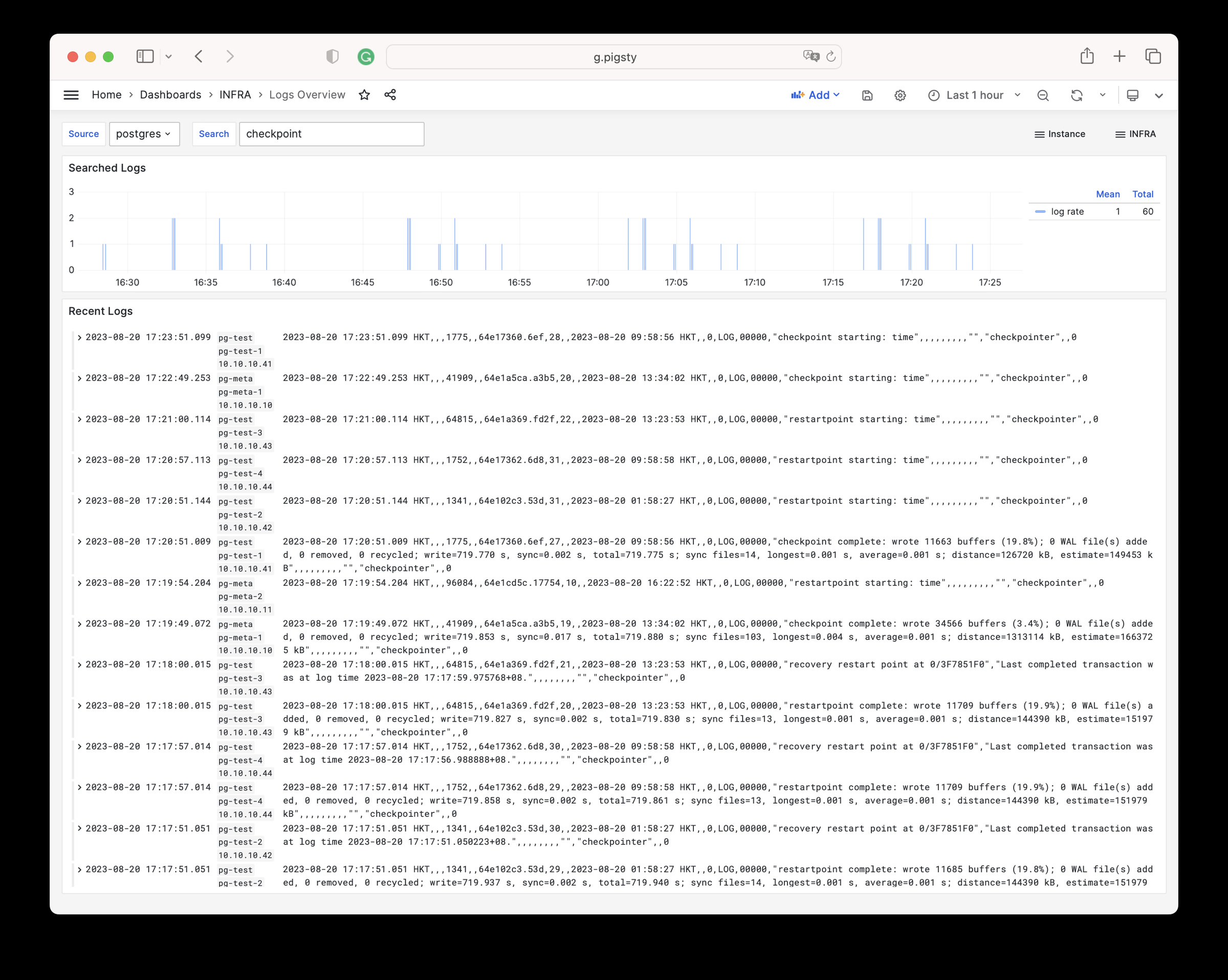

- Loki:集中收集存储日志,便于从 Grafana 中查询。

- Prometheus:监控时序数据库,拉取监控指标,存储监控数据,计算报警规则。

- AlertManager:聚合告警事件,分发告警通知,告警屏蔽与管理。

- PushGateway:收集一次性任务/跑批任务的监控指标

- BlackboxExporter:探测各个节点 IP 与 VIP 地址的可达性

- DNSMASQ:提供 DNS 解析服务,解析 Pigsty 内部使用到的域名

- Chronyd:提供 NTP 时间同步服务,确保所有节点时间一致

INFRA 模块对于高可用 PostgreSQL 并非必选项,例如在 精简安装 模式下,就不会安装 Infra 模块。

但 INFRA 模块提供了运行生产级高可用 PostgreSQL 集群所需要的支持性服务,通常强烈建议安装启用。

如果您已经有自己的基础设施(Nginx,本地仓库,监控系统,DNS,NTP),您也可以停用 INFRA 模块,并通过 修改配置 来使用现有的基础设施。

架构总览

Infra 模块默认包含以下组件,使用以下默认端口与域名:

| 组件 | 端口 | 默认域名 | 描述 |

|---|---|---|---|

| Nginx | 80/443 |

h.pigsty |

Web服务门户(本地软件仓库) |

| Grafana | 3000 |

g.pigsty |

可视化平台 |

| Prometheus | 9090 |

p.pigsty |

时间序列数据库(收存监控指标) |

| AlertManager | 9093 |

a.pigsty |

告警聚合分发 |

| Loki | 3100 |

- | 日志收集服务器 |

| PushGateway | 9091 |

- | 接受一次性的任务指标 |

| BlackboxExporter | 9115 |

- | 黑盒监控探测 |

| DNSMasq | 53 |

- | DNS 服务器 |

| Chronyd | 123 |

- | NTP 时间服务器 |

在单机上完整安装 Pigsty 功能集,节点上的组件大致如下图所示:

在默认情况下,INFRA 模块的故障 通常 不会影响现有 PostgreSQL 数据库集群的正常运行

在 Pigsty 中,PGSQL 模块会使用到 INFRA 模块上的一些服务,具体来说包括:

-

数据库集群/主机节点的域名,依赖INFRA节点的 DNSMASQ 解析。

- Pigsty 本身不使用这些域名,而使用 IP 地址直连,避免依赖 DNS。

-

在数据库节点软件上安装,需要用到 INFRA 模块提供的 Nginx 本地 yum/apt 软件仓库。

- 用户可以直接指定

repo_upstream与node_repo_modules,直接从互联网上游/其他本地仓库下载/安装软件

- 用户可以直接指定

-

数据库集群/节点的监控指标,会被INFRA节点的 Prometheus 收集抓取。

- 当

prometheus_enabled为false,不会收集监控指标。

- 当

-

数据库节点的日志会被 Promtail 收集,并发往 INFRA节点 上的 Loki(只会发往

infra_portal定义的端点)。- 如果

loki_enabled为false,则不会收集日志。

- 如果

-

数据库节点默认会从 INFRA/ADMIN节点 上的 NTP/Chronyd 服务器同步时间

- 如果是 Infra 节点,会默认配置使用公共 NTP 服务器,

- 其他节点会使用 INFRA/ADMIN 节点上的 NTP/Chronyd 服务器同步时间

- 如果您有专用 NTP 服务器,可以配置

node_ntp_servers使用

-

如果没有专用集群,高可用组件 Patroni 会使用 INFRA 节点上的 etcd 作为高可用DCS。

-

如果没有专用集群,备份组件 pgbackrest 会使用 INFRA 节点上的 minio 作为可选的集中备份仓库。

-

用户会从 Infra/Admin 节点上使用 Ansible 或其他工具发起对数据库节点的 管理:

- 执行集群创建,扩缩容,实例/集群回收

- 创建业务用户、业务数据库、修改服务、HBA修改;

- 执行日志采集、垃圾清理,备份,巡检等

Nginx

Nginx 是 Pigsty 所有 WebUI 类服务的访问入口,默认使用 80 / 443 端口对外提供 HTTP / HTTPS 服务。

带有 WebUI 的基础设施组件可以通过 Nginx 统一对外暴露服务,例如 Grafana,Prometheus,AlertManager,以及 HAProxy 控制台,此外,本地 yum/apt 仓库等静态文件资源也通过 Nginx 对内提供服务。

Nginx 会根据 infra_portal 的定义配置本地 Web 服务器或反向代理服务器,例如默认配置为:

infra_portal:

home : { domain: h.pigsty }

grafana : { domain: g.pigsty ,endpoint: "${admin_ip}:3000" ,websocket: true }

prometheus : { domain: p.pigsty ,endpoint: "${admin_ip}:9090" }

alertmanager : { domain: a.pigsty ,endpoint: "${admin_ip}:9093" }

blackbox : { endpoint: "${admin_ip}:9115" }

loki : { endpoint: "${admin_ip}:3100" }

#minio : { domain: sss.pigsty ,endpoint: "${admin_ip}:9001" ,scheme: https ,websocket: true }

在这里默认记录的 endpoint 会被环境中的其他服务引用,例如,日志会发往 loki 对应的 endpoint 地址,而 Grafana 数据源会注册到 grafana 对应的 endpoint 地址,告警会发送至 alertmanager 对应的 endpoint 地址。

Pigsty 允许对 Nginx 进行丰富的定制,将其作为本地文件服务器,或者反向代理服务器,配置自签名或者真正的 HTTPS 证书。

Pigsty Demo 站点的样例 Nginx 配置

infra_portal: # domain names and upstream servers

home : { domain: home.pigsty.cc ,certbot: pigsty.demo }

grafana : { domain: demo.pigsty.cc ,endpoint: "${admin_ip}:3000", websocket: true ,certbot: pigsty.demo }

prometheus : { domain: p.pigsty.cc ,endpoint: "${admin_ip}:9090" ,certbot: pigsty.demo }

alertmanager : { domain: a.pigsty.cc ,endpoint: "${admin_ip}:9093" ,certbot: pigsty.demo }

blackbox : { endpoint: "${admin_ip}:9115" }

loki : { endpoint: "${admin_ip}:3100" }

postgrest : { domain: api.pigsty.cc ,endpoint: "127.0.0.1:8884" }

pgadmin : { domain: adm.pigsty.cc ,endpoint: "127.0.0.1:8885" }

pgweb : { domain: cli.pigsty.cc ,endpoint: "127.0.0.1:8886" }

bytebase : { domain: ddl.pigsty.cc ,endpoint: "127.0.0.1:8887" }

jupyter : { domain: lab.pigsty.cc ,endpoint: "127.0.0.1:8888" ,websocket: true }

gitea : { domain: git.pigsty.cc ,endpoint: "127.0.0.1:8889" ,certbot: pigsty.cc }

wiki : { domain: wiki.pigsty.cc ,endpoint: "127.0.0.1:9002" ,certbot: pigsty.cc }

noco : { domain: noco.pigsty.cc ,endpoint: "127.0.0.1:9003" ,certbot: pigsty.cc }

supa : { domain: supa.pigsty.cc ,endpoint: "10.2.82.163:8000" ,websocket: true ,certbot: pigsty.cc }

dify : { domain: dify.pigsty.cc ,endpoint: "10.2.82.163:8001" ,websocket: true ,certbot: pigsty.cc }

odoo : { domain: odoo.pigsty.cc ,endpoint: "127.0.0.1:8069" ,websocket: true ,certbot: pigsty.cc }

mm : { domain: mm.pigsty.cc ,endpoint: "10.2.82.163:8065" ,websocket: true }

web.io:

domain: en.pigsty.cc

path: "/www/web.io"

certbot: pigsty.doc

enforce_https: true

config: |

# rewrite /zh/ to /

location /zh/ {

rewrite ^/zh/(.*)$ /$1 permanent;

}

web.cc:

domain: pigsty.cc

path: "/www/web.cc"

domains: [ zh.pigsty.cc ]

certbot: pigsty.doc

config: |

# rewrite /zh/ to /

location /zh/ {

rewrite ^/zh/(.*)$ /$1 permanent;

}

repo:

domain: pro.pigsty.cc

path: "/www/repo"

index: true

certbot: pigsty.doc

更多信息,请参考以下教程:

本地软件仓库

Pigsty 会在安装时,默认在 Infra 节点是那个创建一个本地软件仓库,以加速后续软件安装。

该软件仓库默认位位于 /www/pigsty 目录,由 Nginx 提供服务,可以访问 http://h.pigsty/pigsty 使用。

Pigsty的 离线软件包 是将已经建立好的软件源目录整个打成压缩包:当Pigsty尝试构建本地源时,如果发现本地源目录 /www/pigsty 已经存在,且带有 /www/pigsty/repo_complete 标记文件,则会认为本地源已经构建完成,从而跳过从原始上游下载软件的步骤,消除了对互联网访问的依赖。

Repo定义文件位于 /www/pigsty.repo,默认可以通过 http://${admin_ip}/pigsty.repo 获取

curl -L http://h.pigsty/pigsty.repo -o /etc/yum.repos.d/pigsty.repo

您也可以在没有Nginx的情况下直接使用文件本地源:

[pigsty-local]

name=Pigsty local $releasever - $basearch

baseurl=file:///www/pigsty/

enabled=1

gpgcheck=0

本地软件仓库相关配置参数位于:配置:INFRA - REPO

Prometheus

Prometheus是监控时序数据库,默认监听9090端口,可以直接通过IP:9090或域名http://p.pigsty访问。

Prometheus是监控用时序数据库,提供以下功能:

- Prometheus默认通过本地静态文件服务发现获取监控对象,并为其关联身份信息。

- Prometheus从Exporter拉取监控指标数据,进行预计算加工后存入自己的TSDB中。

- Prometheus计算报警规则,将报警事件发往Alertmanager处理。

AlertManager是与Prometheus配套的告警平台,默认监听9093端口,可以直接通过IP:9093或域名 http://a.pigsty 访问。

Prometheus的告警事件会发送至AlertManager,但如果需要进一步处理,用户需要进一步对其进行配置,例如提供SMTP服务配置以发送告警邮件。

Prometheus、AlertManager,PushGateway,BlackboxExporter 的相关配置参数位于:配置:INFRA - PROMETHEUS

Grafana

Grafana是开源的可视化/监控平台,是Pigsty WebUI的核心,默认监听3000端口,可以直接通过IP:3000或域名http://g.pigsty访问。

Pigsty的监控系统基于Dashboard构建,通过URL进行连接与跳转。您可以快速地在监控中下钻上卷,快速定位故障与问题。

此外,Grafana还可以用作通用的低代码前后端平台,制作交互式可视化数据应用。因此,Pigsty使用的Grafana带有一些额外的可视化插件,例如ECharts面板。

Loki是用于日志收集的日志数据库,默认监听3100端口,节点上的Promtail向元节点上的Loki推送日志。

Grafana与Loki相关配置参数位于:配置:INFRA - GRAFANA,配置:INFRA - Loki

Ansible

Pigsty默认会在元节点上安装Ansible,Ansible是一个流行的运维工具,采用声明式的配置风格与幂等的剧本设计,可以极大降低系统维护的复杂度。

DNSMASQ

DNSMASQ 提供环境内的DNS解析服务,其他模块的域名将会注册到 INFRA节点上的 DNSMASQ 服务中。

DNS记录默认放置于所有INFRA节点的 /etc/hosts.d/ 目录中。

DNSMASQ相关配置参数位于:配置:INFRA - DNS

Chronyd

NTP服务用于同步环境内所有节点的时间(可选)

NTP相关配置参数位于:配置:NODES - NTP

2 - 集群配置

配置说明

INFRA 主要用于提供 监控 基础设施,对于 PostgreSQL 数据库是 可选项 。

除非您在某些地方手工配置了对 INFRA 节点上 DNS / NTP 服务的依赖,否则 INFRA 模块的故障通常不会影响 PostgreSQL 数据库集群的正常运行。

在大多数情况下,单个 INFRA 节点足以应对绝大部分场景的需求。对于有一定要求的生产环境,建议使用 2~3 个 INFRA 节点以实现高可用。

通常出于提高资源利用率的考虑,PostgreSQL 高可用依赖的 ETCD 模块可以与 INFRA 模块共用节点。

使用 3 个以上的 INFRA 节点没有太大意义,但您可以使用更多的 ETCD 节点(例如 5 个)来提高 DCS 服务的可用性与可靠性。

配置样例

要在节点上安装 INFRA 模块,首先需要在 配置清单 中的 infra 分组加入节点 IP,并为其分配 Infra 实例号 infra_seq。

默认情况下,配置单个 INFRA 节点便足以满足大部分场景下的需求,所有配置模板都默认带有 infra 分组的定义:

all:

children:

infra: { hosts: { 10.10.10.10: { infra_seq: 1 } }}

默认情况下,infra 分组中的 10.10.10.10 IP 占位符会在 配置 过程中被替换为 当前节点首要IP地址。

也就是会在当前节点上安装 INFRA 模块。

然后,使用 infra.yml 剧本在节点上初始化 INFRA 模块即可。

更多节点

配置两个 INFRA 节点,可以将新节点 IP 加入 infra.hosts

all:

children:

infra:

hosts:

10.10.10.10: { infra_seq: 1 }

10.10.10.11: { infra_seq: 2 }

配置三个 INFRA 节点,并配置一些 Infra 集群/节点的参数:

all:

children:

infra:

hosts:

10.10.10.10: { infra_seq: 1 }

10.10.10.11: { infra_seq: 2, repo_enabled: false }

10.10.10.12: { infra_seq: 3, repo_enabled: false }

vars:

grafana_clean: false

prometheus_clean: false

loki_clean: false

Infra 高可用

Infra 模块中的大部分组件都属于 “无状态/相同状态” ,对于这类组件,高可用只需要操心 “负载均衡” 问题。

Infra 组件负载均衡可以通过两种方式实现: Keepalived L2 VIP,或 HAProxy 四层负载均衡。

如果您的网络环境二层互通,则可以使用 Keepalived L2 VIP 实现高可用。

infra:

hosts:

10.10.10.10: { infra_seq: 1 }

10.10.10.11: { infra_seq: 2 }

10.10.10.12: { infra_seq: 3 }

vars:

vip_enabled: true

vip_vrid: 128

vip_address: 10.10.10.8

vip_interface: eth1

infra_portal:

home : { domain: h.pigsty }

grafana : { domain: g.pigsty ,endpoint: "10.10.10.8:3000" , websocket: true }

prometheus : { domain: p.pigsty ,endpoint: "10.10.10.8:9090" }

alertmanager : { domain: a.pigsty ,endpoint: "10.10.10.8:9093" }

blackbox : { endpoint: "10.10.10.8:9115" }

loki : { endpoint: "10.10.10.8:3100" }

除了设置 vip_address 等 VIP 相关参数外,您还需要在 infra_portal 中修改各项 Infra 服务的端点。

Nginx配置

本地仓库配置

DNS配置

NTP配置

3 - 参数列表

INFRA 模块有下列 10 个参数组,共计 60 个关于基础设施组件的参数:

META:Pigsty 元数据,版本号,管理节点CA:自签名公私钥基础设施/CAINFRA_ID:基础设施门户,Nginx/域名配置REPO:本地软件仓库:YUM/APTINFRA_PACKAGE:基础设施软件包NGINX:Nginx 网络服务器与 Certbot 证书DNS:DNSMASQ 域名服务器PROMETHEUS:Prometheus 时序数据库全家桶GRAFANA:Grafana 可观测性全家桶LOKI:Loki 日志服务

| 参数 | 参数组 | 类型 | 层次 | 中文说明 |

|---|---|---|---|---|

version |

META |

string |

G | pigsty 版本字符串 |

admin_ip |

META |

ip |

G | 管理节点 IP 地址 |

region |

META |

enum |

G | 上游镜像区域:default,china,europe |

proxy_env |

META |

dict |

G | 下载包时使用的全局代理环境变量 |

ca_method |

CA |

enum |

G | CA处理方式:create,recreate,copy,默认为没有则创建 |

ca_cn |

CA |

string |

G | CA CN名称,固定为 pigsty-ca |

cert_validity |

CA |

interval |

G | 证书有效期,默认为 20 年 |

infra_seq |

INFRA_ID |

int |

I | 基础设施节号,必选身份参数 |

infra_portal |

INFRA_ID |

dict |

G | 通过Nginx门户暴露的基础设施服务列表 |

repo_enabled |

REPO |

bool |

G/I | 在此基础设施节点上创建软件仓库? |

repo_home |

REPO |

path |

G | 软件仓库主目录,默认为/www |

repo_name |

REPO |

string |

G | 软件仓库名称,默认为 pigsty |

repo_endpoint |

REPO |

url |

G | 仓库的访问点:域名或 ip:port 格式 |

repo_remove |

REPO |

bool |

G/A | 构建本地仓库时是否移除现有上游仓库源定义文件? |

repo_modules |

REPO |

string |

G/A | 启用的上游仓库模块列表,用逗号分隔 |

repo_upstream |

REPO |

upstream[] |

G | 上游仓库源定义:从哪里下载上游包? |

repo_packages |

REPO |

string[] |

G | 从上游仓库下载哪些软件包? |

repo_extra_packages |

REPO |

string[] |

G/C/I | 从上游仓库下载哪些额外的软件包? |

repo_url_packages |

REPO |

string[] |

G | 使用URL下载的额外软件包列表 |

infra_packages |

INFRA_PACKAGE |

string[] |

G | 在基础设施节点上要安装的软件包 |

infra_packages_pip |

INFRA_PACKAGE |

string |

G | 在基础设施节点上使用 pip 安装的包 |

nginx_enabled |

NGINX |

bool |

G/I | 在此基础设施节点上启用 nginx? |

nginx_exporter_enabled |

NGINX |

bool |

G/I | 在此基础设施节点上启用 nginx_exporter? |

nginx_sslmode |

NGINX |

enum |

G | nginx SSL模式?disable,enable,enforce |

nginx_home |

NGINX |

path |

G | nginx 内容目录,默认为 /www,通常和仓库目录一致 |

nginx_port |

NGINX |

port |

G | nginx 监听端口,默认为 80 |

nginx_ssl_port |

NGINX |

port |

G | nginx SSL监听端口,默认为 443 |

nginx_navbar |

NGINX |

index[] |

G | nginx 首页导航链接列表 |

certbot_sign |

NGINX |

bool |

G/A | 是否使用 certbot 自动申请证书?默认为 false |

certbot_email |

NGINX |

string |

G/A | 申请证书时使用的 email,用于接受过期提醒邮件 |

certbot_option |

NGINX |

string |

G/A | 申请证书时额外传入的的配置参数 |

dns_enabled |

DNS |

bool |

G/I | 在此基础设施节点上设置dnsmasq? |

dns_port |

DNS |

port |

G | DNS 服务器监听端口,默认为 53 |

dns_records |

DNS |

string[] |

G | 由 dnsmasq 解析的动态 DNS 记录 |

prometheus_enabled |

PROMETHEUS |

bool |

G/I | 在此基础设施节点上启用 prometheus? |

prometheus_clean |

PROMETHEUS |

bool |

G/A | 初始化Prometheus的时候清除现有数据? |

prometheus_data |

PROMETHEUS |

path |

G | Prometheus 数据目录,默认为 /data/prometheus |

prometheus_sd_dir |

PROMETHEUS |

path |

G | Prometheus 服务发现目标文件目录 |

prometheus_sd_interval |

PROMETHEUS |

interval |

G | Prometheus 目标刷新间隔,默认为 5s |

prometheus_scrape_interval |

PROMETHEUS |

interval |

G | Prometheus 抓取 & 评估间隔,默认为 10s |

prometheus_scrape_timeout |

PROMETHEUS |

interval |

G | Prometheus 全局抓取超时,默认为 8s |

prometheus_options |

PROMETHEUS |

arg |

G | Prometheus 额外的命令行参数选项 |

pushgateway_enabled |

PROMETHEUS |

bool |

G/I | 在此基础设施节点上设置 pushgateway? |

pushgateway_options |

PROMETHEUS |

arg |

G | pushgateway 额外的命令行参数选项 |

blackbox_enabled |

PROMETHEUS |

bool |

G/I | 在此基础设施节点上设置 blackbox_exporter? |

blackbox_options |

PROMETHEUS |

arg |

G | blackbox_exporter 额外的命令行参数选项 |

alertmanager_enabled |

PROMETHEUS |

bool |

G/I | 在此基础设施节点上设置 alertmanager? |

alertmanager_port |

PROMETHEUS |

arg |

G | alertmanager 监听端口号,默认为 9093 |

alertmanager_options |

PROMETHEUS |

arg |

G | alertmanager 额外的命令行参数选项 |

exporter_metrics_path |

PROMETHEUS |

path |

G | exporter 指标路径,默认为 /metrics |

exporter_install |

PROMETHEUS |

enum |

G | 如何安装 exporter?none,yum,binary |

exporter_repo_url |

PROMETHEUS |

url |

G | 通过 yum 安装exporter时使用的yum仓库文件地址 |

grafana_enabled |

GRAFANA |

bool |

G/I | 在此基础设施节点上启用 Grafana? |

grafana_clean |

GRAFANA |

bool |

G/A | 初始化Grafana期间清除数据? |

grafana_admin_username |

GRAFANA |

username |

G | Grafana 管理员用户名,默认为 admin |

grafana_admin_password |

GRAFANA |

password |

G | Grafana 管理员密码,默认为 pigsty |

loki_enabled |

LOKI |

bool |

G/I | 在此基础设施节点上启用 loki? |

loki_clean |

LOKI |

bool |

G/A | 是否删除现有的 loki 数据? |

loki_data |

LOKI |

path |

G | loki 数据目录,默认为 /data/loki |

loki_retention |

LOKI |

interval |

G | loki 日志保留期,默认为 15d |

META

这一小节指定了一套 Pigsty 部署的元数据:包括版本号,管理员节点 IP 地址,软件源镜像上游区域 和下载软件包时使用的 http(s) 代理。

version: v3.4.0 # pigsty 版本号

admin_ip: 10.10.10.10 # 管理节点IP地址

region: default # 上游镜像区域:default,china,europe

proxy_env: # 全局HTTPS代理,用于下载、安装软件包。

no_proxy: "localhost,127.0.0.1,10.0.0.0/8,192.168.0.0/16,*.pigsty,*.aliyun.com,mirrors.*,*.myqcloud.com,*.tsinghua.edu.cn"

# http_proxy: # set your proxy here: e.g http://user:pass@proxy.xxx.com

# https_proxy: # set your proxy here: e.g http://user:pass@proxy.xxx.com

# all_proxy: # set your proxy here: e.g http://user:pass@proxy.xxx.com

version

参数名称: version, 类型: string, 层次:G

Pigsty 版本号字符串,默认值为当前版本:v3.3.0。

Pigsty 内部会使用版本号进行功能控制与内容渲染。

Pigsty使用语义化版本号,版本号字符串通常以字符 v 开头。

admin_ip

参数名称: admin_ip, 类型: ip, 层次:G

管理节点的 IP 地址,默认为占位符 IP 地址:10.10.10.10

由该参数指定的节点将被视为管理节点,通常指向安装 Pigsty 时的第一个节点,即中控节点。

默认值 10.10.10.10 是一个占位符,会在 configure 过程中被替换为实际的管理节点 IP 地址。

许多参数都会引用此参数,例如:

在这些参数中,字符串 ${admin_ip} 会被替换为 admin_ip 的真实取值。使用这种机制,您可以为不同的节点指定不同的中控管理节点。

region

参数名称: region, 类型: enum, 层次:G

上游镜像的区域,默认可选值为:upstream mirror region: default,china,europe,默认为: default

如果一个不同于 default 的区域被设置,且在 repo_upstream 中有对应的条目,将会使用该条目对应 baseurl 代替 default 中的 baseurl。

例如,如果您的区域被设置为 china,那么 Pigsty 会尝试使用中国地区的上游软件镜像站点以加速下载,如果某个上游软件仓库没有对应的中国地区镜像,那么会使用默认的上游镜像站点替代。

同时,在 repo_url_packages 中定义的 URL 地址,也会进行从 repo.pigsty.io 到 repo.pigsty.cc 的替换,以使用国内的镜像源。

proxy_env

参数名称: proxy_env, 类型: dict, 层次:G

下载包时使用的全局代理环境变量,默认值指定了 no_proxy,即不使用代理的地址列表:

proxy_env:

no_proxy: "localhost,127.0.0.1,10.0.0.0/8,192.168.0.0/16,*.pigsty,*.aliyun.com,mirrors.aliyuncs.com,mirrors.tuna.tsinghua.edu.cn,mirrors.zju.edu.cn"

#http_proxy: 'http://username:password@proxy.address.com'

#https_proxy: 'http://username:password@proxy.address.com'

#all_proxy: 'http://username:password@proxy.address.com'

当您在中国大陆地区从互联网上游安装时,特定的软件包可能会被墙,您可以使用代理来解决这个问题。

请注意,如果使用了 Docker 模块,那么这里的代理服务器配置也会写入 Docker Daemon 配置文件中。

请注意,如果在 ./configure 过程中指定了 -x 参数,那么当前环境中的代理配置信息将会被自动填入到生成的 pigsty.yaml 文件中。

CA

Pigsty 使用的自签名 CA 证书,用于支持高级安全特性。

ca_method: create # CA处理方式:create,recreate,copy,默认为没有则创建

ca_cn: pigsty-ca # CA CN名称,固定为 pigsty-ca

cert_validity: 7300d # 证书有效期,默认为 20 年

ca_method

参数名称: ca_method, 类型: enum, 层次:G

CA处理方式:create , recreate ,copy,默认为没有则创建

默认值为: create,即如果不存在则创建一个新的 CA 证书。

create:如果files/pki/ca中不存在现有的CA,则创建一个全新的 CA 公私钥对,否则就直接使用现有的 CA 公私钥对。recreate:总是创建一个新的 CA 公私钥对,覆盖现有的 CA 公私钥对。注意,这是一个危险的操作。copy:假设files/pki/ca目录下已经有了一对CA公私钥对,并将ca_method设置为copy,Pigsty 将会使用现有的 CA 公私钥对。如果不存在则会报错

如果您已经有了一对 CA 公私钥对,可以将其复制到 files/pki/ca 目录下,并将 ca_method 设置为 copy,Pigsty 将会使用现有的 CA 公私钥对,而不是新建一个。

请注意,务必保留并备份好一套部署新生成的 CA 私钥文件。

ca_cn

参数名称: ca_cn, 类型: string, 层次:G

CA CN名称,固定为 pigsty-ca,不建议修改。

你可以使用以下命令来查看节点上的 Pigsty CA 证书: openssl x509 -text -in /etc/pki/ca.crt

cert_validity

参数名称: cert_validity, 类型: interval, 层次:G

签发证书的有效期,默认为 20 年,对绝大多数场景都足够了。默认值为: 7300d

INFRA_ID

Infrastructure identity and portal definition.

#infra_seq: 1 # infra node identity, explicitly required

infra_portal: # infra services exposed via portal

home : { domain: h.pigsty }

grafana : { domain: g.pigsty ,endpoint: "${admin_ip}:3000" ,websocket: true }

prometheus : { domain: p.pigsty ,endpoint: "${admin_ip}:9090" }

alertmanager : { domain: a.pigsty ,endpoint: "${admin_ip}:9093" }

blackbox : { endpoint: "${admin_ip}:9115" }

loki : { endpoint: "${admin_ip}:3100" }

infra_seq

参数名称: infra_seq, 类型: int, 层次:I

基础设施节号,必选身份参数,所以不提供默认值,必须在基础设施节点上显式指定。

infra_portal

参数名称: infra_portal, 类型: dict, 层次:G

通过Nginx门户暴露的基础设施服务列表,默认情况下,Pigsty 会通过 Nginx 对外暴露以下服务:

infra_portal:

home : { domain: h.pigsty }

grafana : { domain: g.pigsty ,endpoint: "${admin_ip}:3000" ,websocket: true }

prometheus : { domain: p.pigsty ,endpoint: "${admin_ip}:9090" }

alertmanager : { domain: a.pigsty ,endpoint: "${admin_ip}:9093" }

blackbox : { endpoint: "${admin_ip}:9115" }

loki : { endpoint: "${admin_ip}:3100" }

每条记录由一个 Key 与一个 Value 字典组成,name 作为键,代表组件名称,值为对象,可以配置以下参数:

name: 必选项,指定 Nginx 服务器的名称- 默认记录:

home,grafana,prometheus,alertmanager,blackbox,loki为固定名称,请勿修改。 - 用作 Nginx 配置文件名称的一部分,对应配置文件为:

/etc/nginx/conf.d/<name>.conf - 没有配置

domain字段的 Nginx Server 不会生成配置文件,仅作为参考引用之用。

- 默认记录:

domain: 可选,当服务需要通过 Nginx 对外暴露时,为必选项,指定使用的域名- 在 Pigsty 自签名 Nginx HTTPS 证书中,域名将被添加到Nginx SSL证书的

SAN字段中 - Pigsty web 页面之间的交叉引用会使用这里的默认域名

- 在 Pigsty 自签名 Nginx HTTPS 证书中,域名将被添加到Nginx SSL证书的

endpoint:通常与path二选一,指定上游服务器地址,设置endpoint表示这是一个反向代理服务器- 在配置中可以使用

${admin_ip}作为占位符,部署时将动态替换为admin_ip - 反向代理服务器默认使用

endpoint.conf作为配置模板 - 反向代理服务器还可以配置

websocket与schema参数

- 在配置中可以使用

path: 通常与endpoint二选一,指定本地文件服务器路径,设置path表示这是一个本地Web服务器- 本地Web服务器默认使用

path.conf作为配置模板 - 本地Web服务器还可以配置

index参数,是否启用文件索引页

- 本地Web服务器默认使用

certbot:Certbot 证书名称,如果配置,会使用 Certbot 申请证书- 如果有多个服务器指定了相同的

certbot,Pigsty 会进行合并申请,最终证书名称为这个certbot的名称

- 如果有多个服务器指定了相同的

cert:Nginx 证书文件路径,如果配置,会覆盖默认的证书路径key:Nginx 证书密钥文件路径,如果配置,会覆盖默认的证书密钥路径websocket:是否启用 WebSocket 支持- 只有反向代理服务器可以配置此参数,如果开启将允许上游使用 WebSocket 连接

schema:上游服务器使用的协议,如果配置,会覆盖默认的协议- 默认为

http,如果配置https则强制使用 HTTPS 连接上游服务器

- 默认为

index:是否启用文件索引页- 只有本地Web服务器可以配置此参数,如果开启将开启

autoindex配置,自动为目录生成索引页

- 只有本地Web服务器可以配置此参数,如果开启将开启

log:Nginx 日志文件路径- 如果指定,访问日志将写入此文件,否则根据服务器类型使用默认的日志文件

- 反向代理服务器,默认使用

/var/log/nginx/<name>.log作为日志文件路径 - 本地Web服务器,使用默认的 Access 日志

conf:Nginx 配置文件路径- 显示指定使用的配置模板文件,位于

roles/infra/templates/nginx或templates/nginx目录 - 未指定本参数时,会使用默认的配置模板,位于

roles/infra/templates/nginx或templates/nginx目录

- 显示指定使用的配置模板文件,位于

config:Nginx 配置代码块- 直接注入到 Nginx Server 配置块中的配置文本

enforce_https:将 HTTP 服务器重定向到 HTTPS 服务器- 全局配置可以通过

nginx_sslmode: enforce来指定 - 此配置不影响默认的

home服务器,home服务器会始终同时监听 80 与 443 端口确保兼容性。

- 全局配置可以通过

REPO

本节配置是关于本地软件仓库的。 Pigsty 默认会在基础设施节点上启用一个本地软件仓库(APT / YUM)。

在初始化过程中,Pigsty 会从互联网上游仓库(由 repo_upstream 指定)下载所有软件包及其依赖项(由 repo_packages 指定)

到 {{ nginx_home }} / {{ repo_name }} (默认为 /www/pigsty),所有软件及其依赖的总大小约为1GB左右。

创建本地软件仓库时,如果仓库已存在(判断方式:仓库目录目录中有一个名为 repo_complete 的标记文件)Pigsty 将认为仓库已经创建完成,跳过软件下载阶段,直接使用构建好的仓库。

如果某些软件包的下载速度太慢,您可以通过使用 proxy_env 配置项来设置下载代理来完成首次下载,或直接下载预打包的 离线软件包,离线软件包本质上就是在同样操作系统上构建好的本地软件源。

repo_enabled: true # 在当前基础设施节点上启用本地软件仓库?

repo_home: /www # 仓库主目录,默认为 `/www`

repo_name: pigsty # 仓库名称,默认为 pigsty

repo_endpoint: http://${admin_ip}:80 # 访问此仓库的端点,可以是域名或IP:端口

repo_remove: true # 移除现有的上游仓库

repo_modules: infra,node,pgsql # 在仓库引导过程中安装上游仓库

#repo_upstream: [] # 从哪里下载软件包

#repo_packages: [] # 下载哪些软件包

#repo_extra_packages: [] # 额外下载的软件包

repo_url_packages: [] # 从URL下载的额外软件包

repo_enabled

参数名称: repo_enabled, 类型: bool, 层次:G/I

是否在当前的基础设施节点上启用本地软件源?默认为: true,即所有 Infra 节点都会设置一个本地软件仓库。

如果您有多个基础设施节点,可以只保留 1 ~ 2 个节点作为软件仓库,其他节点可以通过设置此参数为 false 来避免重复软件下载构建。

repo_home

参数名称: repo_home, 类型: path, 层次:G

本地软件仓库的家目录,默认为 Nginx 的根目录,也就是: /www,我们不建议您修改此目录。如果修改,需要和 nginx_home

repo_name

参数名称: repo_name, 类型: string, 层次:G

本地仓库名称,默认为 pigsty,更改此仓库的名称是不明智的行为。

repo_endpoint

参数名称: repo_endpoint, 类型: url, 层次:G

其他节点访问此仓库时使用的端点,默认值为:http://${admin_ip}:80。

Pigsty 默认会在基础设施节点 80/443 端口启动 Nginx,对外提供本地软件源(静态文件)服务。

如果您修改了 nginx_port 与 nginx_ssl_port,或者使用了不同于中控节点的基础设施节点,请相应调整此参数。

如果您使用了域名,可以在 node_default_etc_hosts、node_etc_hosts、或者 dns_records 中添加解析。

repo_remove

参数名称: repo_remove, 类型: bool, 层次:G/A

在构建本地软件源时,是否移除现有的上游仓库定义?默认值: true。

当启用此参数时,/etc/yum.repos.d 中所有已有仓库文件会被移动备份至/etc/yum.repos.d/backup,在 Debian 系上是移除 /etc/apt/sources.list 和 /etc/apt/sources.list.d,将文件备份至 /etc/apt/backup 中。

因为操作系统已有的源内容不可控,使用 Pigsty 验证过的上游软件源可以提高从互联网下载软件包的成功率与速度。

但在一些特定情况下(例如您的操作系统是某种 EL/Deb 兼容版,许多软件包使用了自己的私有源),您可能需要保留现有的上游仓库定义,此时可以将此参数设置为 false。

repo_modules

参数名称: repo_modules, 类型: string, 层次:G/A

哪些上游仓库模块会被添加到本地软件源中,默认值: infra,node,pgsql

当 Pigsty 尝试添加上游仓库时,会根据此参数的值来过滤 repo_upstream 中的条目,只有 module 字段与此参数值匹配的条目才会被添加到本地软件源中。

模块以逗号分隔,可用的模块列表请参考 repo_upstream 中的定义

repo_upstream

参数名称: repo_upstream, 类型: upstream[], 层次:G

构建本地软件源时,从哪里下载上游软件包?本参数没有默认值,如果用户不在配置文件中显式指定,则会从根据当前节点的操作系统族,从定义于 roles/node_id/vars 中的 repo_upstream_default 变量中加载获取。

对于 EL (7,8,9)系统,默认使用的软件源如下所示:

- { name: pigsty-local ,description: 'Pigsty Local' ,module: local ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'http://${admin_ip}/pigsty' }} # used by intranet nodes

- { name: pigsty-infra ,description: 'Pigsty INFRA' ,module: infra ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://repo.pigsty.io/yum/infra/$basearch' ,china: 'https://repo.pigsty.cc/yum/infra/$basearch' }}

- { name: pigsty-pgsql ,description: 'Pigsty PGSQL' ,module: pgsql ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://repo.pigsty.io/yum/pgsql/el$releasever.$basearch' ,china: 'https://repo.pigsty.cc/yum/pgsql/el$releasever.$basearch' }}

- { name: nginx ,description: 'Nginx Repo' ,module: infra ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://nginx.org/packages/rhel/$releasever/$basearch/' }}

- { name: docker-ce ,description: 'Docker CE' ,module: infra ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://download.docker.com/linux/centos/$releasever/$basearch/stable' ,china: 'https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/stable' ,europe: 'https://mirrors.xtom.de/docker-ce/linux/centos/$releasever/$basearch/stable' }}

- { name: baseos ,description: 'EL 8+ BaseOS' ,module: node ,releases: [ 8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://dl.rockylinux.org/pub/rocky/$releasever/BaseOS/$basearch/os/' ,china: 'https://mirrors.aliyun.com/rockylinux/$releasever/BaseOS/$basearch/os/' ,europe: 'https://mirrors.xtom.de/rocky/$releasever/BaseOS/$basearch/os/' }}

- { name: appstream ,description: 'EL 8+ AppStream' ,module: node ,releases: [ 8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://dl.rockylinux.org/pub/rocky/$releasever/AppStream/$basearch/os/' ,china: 'https://mirrors.aliyun.com/rockylinux/$releasever/AppStream/$basearch/os/' ,europe: 'https://mirrors.xtom.de/rocky/$releasever/AppStream/$basearch/os/' }}

- { name: extras ,description: 'EL 8+ Extras' ,module: node ,releases: [ 8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://dl.rockylinux.org/pub/rocky/$releasever/extras/$basearch/os/' ,china: 'https://mirrors.aliyun.com/rockylinux/$releasever/extras/$basearch/os/' ,europe: 'https://mirrors.xtom.de/rocky/$releasever/extras/$basearch/os/' }}

- { name: powertools ,description: 'EL 8 PowerTools' ,module: node ,releases: [ 8 ] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://dl.rockylinux.org/pub/rocky/$releasever/PowerTools/$basearch/os/' ,china: 'https://mirrors.aliyun.com/rockylinux/$releasever/PowerTools/$basearch/os/' ,europe: 'https://mirrors.xtom.de/rocky/$releasever/PowerTools/$basearch/os/' }}

- { name: crb ,description: 'EL 9 CRB' ,module: node ,releases: [ 9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://dl.rockylinux.org/pub/rocky/$releasever/CRB/$basearch/os/' ,china: 'https://mirrors.aliyun.com/rockylinux/$releasever/CRB/$basearch/os/' ,europe: 'https://mirrors.xtom.de/rocky/$releasever/CRB/$basearch/os/' }}

- { name: epel ,description: 'EL 8+ EPEL' ,module: node ,releases: [ 8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'http://download.fedoraproject.org/pub/epel/$releasever/Everything/$basearch/' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/epel/$releasever/Everything/$basearch/' ,europe: 'https://mirrors.xtom.de/epel/$releasever/Everything/$basearch/' }}

- { name: pgdg-common ,description: 'PostgreSQL Common' ,module: pgsql ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://download.postgresql.org/pub/repos/yum/common/redhat/rhel-$releasever-$basearch' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/postgresql/repos/yum/common/redhat/rhel-$releasever-$basearch' , europe: 'https://mirrors.xtom.de/postgresql/repos/yum/common/redhat/rhel-$releasever-$basearch' }}

- { name: pgdg-el8fix ,description: 'PostgreSQL EL8FIX' ,module: pgsql ,releases: [ 8 ] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://download.postgresql.org/pub/repos/yum/common/pgdg-centos8-sysupdates/redhat/rhel-8-x86_64/' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/postgresql/repos/yum/common/pgdg-centos8-sysupdates/redhat/rhel-8-x86_64/' , europe: 'https://mirrors.xtom.de/postgresql/repos/yum/common/pgdg-centos8-sysupdates/redhat/rhel-8-x86_64/' } }

- { name: pgdg-el9fix ,description: 'PostgreSQL EL9FIX' ,module: pgsql ,releases: [ 9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://download.postgresql.org/pub/repos/yum/common/pgdg-rocky9-sysupdates/redhat/rhel-9-x86_64/' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/postgresql/repos/yum/common/pgdg-rocky9-sysupdates/redhat/rhel-9-x86_64/' , europe: 'https://mirrors.xtom.de/postgresql/repos/yum/common/pgdg-rocky9-sysupdates/redhat/rhel-9-x86_64/' }}

- { name: pgdg13 ,description: 'PostgreSQL 13' ,module: pgsql ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://download.postgresql.org/pub/repos/yum/13/redhat/rhel-$releasever-$basearch' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/postgresql/repos/yum/13/redhat/rhel-$releasever-$basearch' ,europe: 'https://mirrors.xtom.de/postgresql/repos/yum/13/redhat/rhel-$releasever-$basearch' }}

- { name: pgdg14 ,description: 'PostgreSQL 14' ,module: pgsql ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://download.postgresql.org/pub/repos/yum/14/redhat/rhel-$releasever-$basearch' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/postgresql/repos/yum/14/redhat/rhel-$releasever-$basearch' ,europe: 'https://mirrors.xtom.de/postgresql/repos/yum/14/redhat/rhel-$releasever-$basearch' }}

- { name: pgdg15 ,description: 'PostgreSQL 15' ,module: pgsql ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://download.postgresql.org/pub/repos/yum/15/redhat/rhel-$releasever-$basearch' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/postgresql/repos/yum/15/redhat/rhel-$releasever-$basearch' ,europe: 'https://mirrors.xtom.de/postgresql/repos/yum/15/redhat/rhel-$releasever-$basearch' }}

- { name: pgdg16 ,description: 'PostgreSQL 16' ,module: pgsql ,releases: [ 8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://download.postgresql.org/pub/repos/yum/16/redhat/rhel-$releasever-$basearch' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/postgresql/repos/yum/16/redhat/rhel-$releasever-$basearch' ,europe: 'https://mirrors.xtom.de/postgresql/repos/yum/16/redhat/rhel-$releasever-$basearch' }}

- { name: pgdg17 ,description: 'PostgreSQL 17' ,module: pgsql ,releases: [ 8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://download.postgresql.org/pub/repos/yum/17/redhat/rhel-$releasever-$basearch' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/postgresql/repos/yum/17/redhat/rhel-$releasever-$basearch' ,europe: 'https://mirrors.xtom.de/postgresql/repos/yum/17/redhat/rhel-$releasever-$basearch' }}

- { name: pgdg-extras ,description: 'PostgreSQL Extra' ,module: extra ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://download.postgresql.org/pub/repos/yum/common/pgdg-rhel$releasever-extras/redhat/rhel-$releasever-$basearch' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/postgresql/repos/yum/common/pgdg-rhel$releasever-extras/redhat/rhel-$releasever-$basearch' , europe: 'https://mirrors.xtom.de/postgresql/repos/yum/common/pgdg-rhel$releasever-extras/redhat/rhel-$releasever-$basearch' }}

- { name: pgdg13-nonfree ,description: 'PostgreSQL 13+' ,module: extra ,releases: [7,8,9] ,arch: [x86_64 ] ,baseurl: { default: 'https://download.postgresql.org/pub/repos/yum/non-free/13/redhat/rhel-$releasever-$basearch' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/postgresql/repos/yum/non-free/13/redhat/rhel-$releasever-$basearch' ,europe: 'https://mirrors.xtom.de/postgresql/repos/yum/non-free/13/redhat/rhel-$releasever-$basearch' }}

- { name: pgdg14-nonfree ,description: 'PostgreSQL 14+' ,module: extra ,releases: [7,8,9] ,arch: [x86_64 ] ,baseurl: { default: 'https://download.postgresql.org/pub/repos/yum/non-free/14/redhat/rhel-$releasever-$basearch' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/postgresql/repos/yum/non-free/14/redhat/rhel-$releasever-$basearch' ,europe: 'https://mirrors.xtom.de/postgresql/repos/yum/non-free/14/redhat/rhel-$releasever-$basearch' }}

- { name: pgdg15-nonfree ,description: 'PostgreSQL 15+' ,module: extra ,releases: [7,8,9] ,arch: [x86_64 ] ,baseurl: { default: 'https://download.postgresql.org/pub/repos/yum/non-free/15/redhat/rhel-$releasever-$basearch' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/postgresql/repos/yum/non-free/15/redhat/rhel-$releasever-$basearch' ,europe: 'https://mirrors.xtom.de/postgresql/repos/yum/non-free/15/redhat/rhel-$releasever-$basearch' }}

- { name: pgdg16-nonfree ,description: 'PostgreSQL 16+' ,module: extra ,releases: [ 8,9] ,arch: [x86_64 ] ,baseurl: { default: 'https://download.postgresql.org/pub/repos/yum/non-free/16/redhat/rhel-$releasever-$basearch' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/postgresql/repos/yum/non-free/16/redhat/rhel-$releasever-$basearch' ,europe: 'https://mirrors.xtom.de/postgresql/repos/yum/non-free/16/redhat/rhel-$releasever-$basearch' }}

- { name: pgdg17-nonfree ,description: 'PostgreSQL 17+' ,module: extra ,releases: [ 8,9] ,arch: [x86_64 ] ,baseurl: { default: 'https://download.postgresql.org/pub/repos/yum/non-free/17/redhat/rhel-$releasever-$basearch' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/postgresql/repos/yum/non-free/17/redhat/rhel-$releasever-$basearch' ,europe: 'https://mirrors.xtom.de/postgresql/repos/yum/non-free/17/redhat/rhel-$releasever-$basearch' }}

- { name: timescaledb ,description: 'TimescaleDB' ,module: extra ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://packagecloud.io/timescale/timescaledb/el/$releasever/$basearch' }}

- { name: wiltondb ,description: 'WiltonDB' ,module: mssql ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://repo.pigsty.io/yum/mssql/el$releasever.$basearch', china: 'https://repo.pigsty.cc/yum/mssql/el$releasever.$basearch' , origin: 'https://download.copr.fedorainfracloud.org/results/wiltondb/wiltondb/epel-$releasever-$basearch/' }}

- { name: ivorysql ,description: 'IvorySQL' ,module: ivory ,releases: [7,8,9] ,arch: [x86_64 ] ,baseurl: { default: 'https://repo.pigsty.io/yum/ivory/el$releasever.$basearch', china: 'https://repo.pigsty.cc/yum/ivory/el$releasever.$basearch' }}

- { name: groonga ,description: 'Groonga' ,module: groonga ,releases: [ 8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://packages.groonga.org/almalinux/$releasever/$basearch/' }}

- { name: mysql ,description: 'MySQL' ,module: mysql ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://repo.mysql.com/yum/mysql-8.0-community/el/$releasever/$basearch/', china: 'https://mirrors.tuna.tsinghua.edu.cn/mysql/yum/mysql-8.0-community-el7-$basearch/'}}

- { name: mongo ,description: 'MongoDB' ,module: mongo ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/8.0/$basearch/' , 'https://mirrors.aliyun.com/mongodb/yum/redhat/$releasever/mongodb-org/8.0/$basearch/' }}

- { name: redis ,description: 'Redis' ,module: redis ,releases: [7 ] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://rpmfind.net/linux/remi/enterprise/$releasever/remi/$basearch/' }}

- { name: redis ,description: 'Redis' ,module: redis ,releases: [ 8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://rpmfind.net/linux/remi/enterprise/$releasever/redis72/$basearch/' }}

- { name: grafana ,description: 'Grafana' ,module: grafana ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://rpm.grafana.com' }}

- { name: kubernetes ,description: 'Kubernetes' ,module: kube ,releases: [7,8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://pkgs.k8s.io/core:/stable:/v1.31/rpm/', china: 'https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.31/rpm/' }}

- { name: gitlab ,description: 'Gitlab' ,module: gitlab ,releases: [ 8,9] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://packages.gitlab.com/gitlab/gitlab-ee/el/$releasever/$basearch' }}

对于 Debian (11,12)或 Ubuntu (20.04,22.04),默认使用的软件源如下所示:

- { name: pigsty-local ,description: 'Pigsty Local' ,module: local ,releases: [11,12,20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'http://${admin_ip}/pigsty ./' }}

- { name: pigsty-pgsql ,description: 'Pigsty PgSQL' ,module: pgsql ,releases: [11,12,20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://repo.pigsty.io/apt/pgsql/${distro_codename} ${distro_codename} main', china: 'https://repo.pigsty.cc/apt/pgsql/${distro_codename} ${distro_codename} main' }}

- { name: pigsty-infra ,description: 'Pigsty Infra' ,module: infra ,releases: [11,12,20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://repo.pigsty.io/apt/infra/ generic main' ,china: 'https://repo.pigsty.cc/apt/infra/ generic main' }}

- { name: nginx ,description: 'Nginx' ,module: infra ,releases: [11,12,20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'http://nginx.org/packages/${distro_name} ${distro_codename} nginx' }}

- { name: docker-ce ,description: 'Docker' ,module: infra ,releases: [11,12,20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://download.docker.com/linux/${distro_name} ${distro_codename} stable' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux//${distro_name} ${distro_codename} stable' }}

- { name: base ,description: 'Debian Basic' ,module: node ,releases: [11,12 ] ,arch: [x86_64, aarch64] ,baseurl: { default: 'http://deb.debian.org/debian/ ${distro_codename} main non-free-firmware' ,china: 'https://mirrors.aliyun.com/debian/ ${distro_codename} main restricted universe multiverse' }}

- { name: updates ,description: 'Debian Updates' ,module: node ,releases: [11,12 ] ,arch: [x86_64, aarch64] ,baseurl: { default: 'http://deb.debian.org/debian/ ${distro_codename}-updates main non-free-firmware' ,china: 'https://mirrors.aliyun.com/debian/ ${distro_codename}-updates main restricted universe multiverse' }}

- { name: security ,description: 'Debian Security' ,module: node ,releases: [11,12 ] ,arch: [x86_64, aarch64] ,baseurl: { default: 'http://security.debian.org/debian-security ${distro_codename}-security main non-free-firmware' ,china: 'https://mirrors.aliyun.com/debian-security/ ${distro_codename}-security main non-free-firmware' }}

- { name: base ,description: 'Ubuntu Basic' ,module: node ,releases: [ 20,22,24] ,arch: [x86_64 ] ,baseurl: { default: 'https://mirrors.edge.kernel.org/ubuntu/ ${distro_codename} main universe multiverse restricted' ,china: 'https://mirrors.aliyun.com/ubuntu/ ${distro_codename} main restricted universe multiverse' }}

- { name: updates ,description: 'Ubuntu Updates' ,module: node ,releases: [ 20,22,24] ,arch: [x86_64 ] ,baseurl: { default: 'https://mirrors.edge.kernel.org/ubuntu/ ${distro_codename}-backports main restricted universe multiverse' ,china: 'https://mirrors.aliyun.com/ubuntu/ ${distro_codename}-updates main restricted universe multiverse' }}

- { name: backports ,description: 'Ubuntu Backports' ,module: node ,releases: [ 20,22,24] ,arch: [x86_64 ] ,baseurl: { default: 'https://mirrors.edge.kernel.org/ubuntu/ ${distro_codename}-security main restricted universe multiverse' ,china: 'https://mirrors.aliyun.com/ubuntu/ ${distro_codename}-backports main restricted universe multiverse' }}

- { name: security ,description: 'Ubuntu Security' ,module: node ,releases: [ 20,22,24] ,arch: [x86_64 ] ,baseurl: { default: 'https://mirrors.edge.kernel.org/ubuntu/ ${distro_codename}-updates main restricted universe multiverse' ,china: 'https://mirrors.aliyun.com/ubuntu/ ${distro_codename}-security main restricted universe multiverse' }}

- { name: base ,description: 'Ubuntu Basic' ,module: node ,releases: [ 20,22,24] ,arch: [ aarch64] ,baseurl: { default: 'http://ports.ubuntu.com/ubuntu-ports/ ${distro_codename} main universe multiverse restricted' ,china: 'https://mirrors.aliyun.com/ubuntu-ports/ ${distro_codename} main restricted universe multiverse' }}

- { name: updates ,description: 'Ubuntu Updates' ,module: node ,releases: [ 20,22,24] ,arch: [ aarch64] ,baseurl: { default: 'http://ports.ubuntu.com/ubuntu-ports/ ${distro_codename}-backports main restricted universe multiverse' ,china: 'https://mirrors.aliyun.com/ubuntu-ports/ ${distro_codename}-updates main restricted universe multiverse' }}

- { name: backports ,description: 'Ubuntu Backports' ,module: node ,releases: [ 20,22,24] ,arch: [ aarch64] ,baseurl: { default: 'http://ports.ubuntu.com/ubuntu-ports/ ${distro_codename}-security main restricted universe multiverse' ,china: 'https://mirrors.aliyun.com/ubuntu-ports/ ${distro_codename}-backports main restricted universe multiverse' }}

- { name: security ,description: 'Ubuntu Security' ,module: node ,releases: [ 20,22,24] ,arch: [ aarch64] ,baseurl: { default: 'http://ports.ubuntu.com/ubuntu-ports/ ${distro_codename}-updates main restricted universe multiverse' ,china: 'https://mirrors.aliyun.com/ubuntu-ports/ ${distro_codename}-security main restricted universe multiverse' }}

- { name: pgdg ,description: 'PGDG' ,module: pgsql ,releases: [11,12,20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'http://apt.postgresql.org/pub/repos/apt/ ${distro_codename}-pgdg main' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/postgresql/repos/apt/ ${distro_codename}-pgdg main' }}

- { name: timescaledb ,description: 'Timescaledb' ,module: extra ,releases: [11,12,20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://packagecloud.io/timescale/timescaledb/${distro_name}/ ${distro_codename} main' }}

- { name: citus ,description: 'Citus' ,module: extra ,releases: [11,12,20,22 ] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://packagecloud.io/citusdata/community/${distro_name}/ ${distro_codename} main' } }

- { name: pgml ,description: 'PostgresML' ,module: pgml ,releases: [ 22 ] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://apt.postgresml.org ${distro_codename} main' }}

- { name: wiltondb ,description: 'WiltonDB' ,module: mssql ,releases: [ 20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://repo.pigsty.io/apt/mssql/ ${distro_codename} main', china: 'https://repo.pigsty.cc/apt/mssql/ ${distro_codename} main' , origin: 'https://ppa.launchpadcontent.net/wiltondb/wiltondb/ubuntu/ ${distro_codename} main' }}

- { name: groonga ,description: 'Groonga Debian' ,module: groonga ,releases: [11,12 ] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://packages.groonga.org/debian/ ${distro_codename} main' }}

- { name: groonga ,description: 'Groonga Ubuntu' ,module: groonga ,releases: [ 20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://ppa.launchpadcontent.net/groonga/ppa/ubuntu/ ${distro_codename} main' }}

- { name: mysql ,description: 'MySQL' ,module: mysql ,releases: [11,12,20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://repo.mysql.com/apt/${distro_name} ${distro_codename} mysql-8.0 mysql-tools', china: 'https://mirrors.tuna.tsinghua.edu.cn/mysql/apt/${distro_name} ${distro_codename} mysql-8.0 mysql-tools' }}

- { name: mongo ,description: 'MongoDB' ,module: mongo ,releases: [11,12,20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://repo.mongodb.org/apt/${distro_name} ${distro_codename}/mongodb-org/8.0 multiverse', china: 'https://mirrors.aliyun.com/mongodb/apt/${distro_name} ${distro_codename}/mongodb-org/8.0 multiverse' }}

- { name: redis ,description: 'Redis' ,module: redis ,releases: [11,12,20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://packages.redis.io/deb ${distro_codename} main' }}

- { name: haproxyd ,description: 'Haproxy Debian' ,module: haproxy ,releases: [11,12 ] ,arch: [x86_64, aarch64] ,baseurl: { default: 'http://haproxy.debian.net/ ${distro_codename}-backports-3.1 main' }}

- { name: haproxyu ,description: 'Haproxy Ubuntu' ,module: haproxy ,releases: [ 20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://ppa.launchpadcontent.net/vbernat/haproxy-3.1/ubuntu/ ${distro_codename} main' }}

- { name: grafana ,description: 'Grafana' ,module: grafana ,releases: [11,12,20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://apt.grafana.com stable main' ,china: 'https://mirrors.tuna.tsinghua.edu.cn/grafana/apt/ stable main' }}

- { name: kubernetes ,description: 'Kubernetes' ,module: kube ,releases: [11,12,20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://pkgs.k8s.io/core:/stable:/v1.31/deb/ /', china: 'https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.31/deb/ /' }}

- { name: gitlab ,description: 'Gitlab' ,module: gitlab ,releases: [11,12,20,22,24] ,arch: [x86_64, aarch64] ,baseurl: { default: 'https://packages.gitlab.com/gitlab/gitlab-ee/${distro_name}/ ${distro_codename} main' }}

repo_packages

参数名称: repo_packages, 类型: string[], 层次:G

字符串数组类型,每一行都是 由空格分隔 的软件包列表字符串,指定将要使用 repotrack 或 apt download 下载到本地的软件包(及其依赖)。

本参数没有默认值,即默认值为未定义状态。如果该参数没有被显式定义,那么 Pigsty 会从 roles/node_id/vars 中定义的 repo_packages_default 变量中加载获取默认值,默认值为:

[ node-bootstrap, infra-package, infra-addons, node-package1, node-package2, pgsql-utility, extra-modules ]

该参数中的每个元素,都会在上述文件中定义的 package_map 中,根据特定的操作系统发行版大版本进行翻译。例如在 EL 系统上会翻译为:

node-bootstrap: "ansible python3 python3-pip python3-virtualenv python3-requests python3-jmespath python3-cryptography dnf-utils modulemd-tools createrepo_c sshpass"

infra-package: "nginx dnsmasq etcd haproxy vip-manager node_exporter keepalived_exporter pg_exporter pgbackrest_exporter redis_exporter redis minio mcli pig"

infra-addons: "grafana grafana-plugins loki logcli promtail prometheus alertmanager pushgateway blackbox_exporter nginx_exporter pev2 certbot python3-certbot-nginx"

extra-modules: "docker-ce docker-compose-plugin ferretdb2 duckdb restic juicefs vray grafana-infinity-ds"

node-package1: "lz4 unzip bzip2 zlib yum pv jq git ncdu make patch bash lsof wget uuid tuned nvme-cli numactl grubby sysstat iotop htop rsync tcpdump perf flamegraph chkconfig"

node-package2: "netcat socat ftp lrzsz net-tools ipvsadm bind-utils telnet audit ca-certificates readline vim-minimal keepalived chrony openssl openssh-server openssh-clients"

pgsql-utility: "patroni patroni-etcd pgbouncer pgbackrest pgbadger pg_activity pg_timetable pgFormatter pg_filedump pgxnclient timescaledb-tools timescaledb-event-streamer pgcopydb"

而在 Debian 系统上会被翻译为对应的 Debian DEB 包名:

node-bootstrap: "ansible python3 python3-pip python3-venv python3-jmespath dpkg-dev sshpass ftp linux-tools-generic"

infra-package: "nginx dnsmasq etcd haproxy vip-manager node-exporter keepalived-exporter pg-exporter pgbackrest-exporter redis-exporter redis minio mcli pig"

infra-addons: "grafana grafana-plugins loki logcli promtail prometheus alertmanager pushgateway blackbox-exporter nginx-exporter pev2 certbot python3-certbot-nginx"

extra-modules: "docker-ce docker-compose-plugin ferretdb2 duckdb restic juicefs vray grafana-infinity-ds"

node-package1: "lz4 unzip bzip2 zlib1g pv jq git ncdu make patch bash lsof wget uuid tuned nvme-cli numactl sysstat iotop htop rsync tcpdump acl chrony"

node-package2: "netcat-openbsd socat lrzsz net-tools ipvsadm dnsutils telnet ca-certificates libreadline-dev vim-tiny keepalived openssl openssh-server openssh-client"

pgsql-utility: "patroni pgbouncer pgbackrest pgbadger pg-activity pg-timetable pgformatter postgresql-filedump pgxnclient timescaledb-tools timescaledb-event-streamer pgcopydb pgloader"

作为一个使用约定,repo_packages 中通常包括了那些与 PostgreSQL 大版本号无关的软件包(例如 Infra,Node 和 PGDG Common 等部分),而 PostgreSQL 大版本相关的软件包(内核,扩展),通常在 repo_extra_packages 中指定,方便用户切换 PG 大版本。

repo_extra_packages

参数名称: repo_extra_packages, 类型: string[], 层次:G/C/I

用于在不修改 repo_packages 的基础上,指定额外需要下载的软件包(通常是 PG 大版本相关的软件包),默认值为空列表。

如果该参数没有被显式定义,那么 Pigsty 会从 roles/node_id/vars 中定义的 repo_extra_packages_default 变量中加载获取默认值,默认值为:

[ pgsql-main ]

该参数中的每个元素,都会在上述文件中定义的 package_map 中,根据特定的操作系统发行版大版本进行翻译。例如在 EL 系统上会翻译为:

postgresql$v postgresql$v-server postgresql$v-libs postgresql$v-contrib postgresql$v-plperl postgresql$v-plpython3 postgresql$v-pltcl postgresql$v-llvmjit pg_repack_$v* wal2json_$v* pgvector_$v*

而在 Debian 系统上会被翻译为对应的 Debian DEB 包名:

postgresql-$v postgresql-client-$v postgresql-plpython3-$v postgresql-plperl-$v postgresql-pltcl-$v postgresql-$v-repack postgresql-$v-wal2json postgresql-$v-pgvector

这里的 $v 会被替换为 pg_version,即当前 PG 大版本号 (默认为 17)。通常用户可以在这里指定 PostgreSQL 大版本相关的软件包,而不影响 repo_packages 中定义的其他 PG 大版本无关的软件包。

repo_url_packages

参数名称: repo_url_packages, 类型: object[] | string[], 层次:G

直接使用 URL 从互联网上下载的软件包,默认为空数组: []

您可以直接在本参数中使用 URL 字符串作为数组元素,也可以使用 Pigsty v3 新引入的对象结构,显式指定 URL 与文件名称。

请注意,本参数会收到 region 变量的影响,如果您在中国大陆地区,Pigsty 会自动将 URL 替换为国内镜像站点,即将 URL 里的 repo.pigsty.io 替换为 repo.pigsty.cc。

INFRA_PACKAGE

这些软件包只会在 INFRA 节点上安装,包括普通的 RPM/DEB 软件包,以及 PIP 软件包。

infra_packages

参数名称: infra_packages, 类型: string[], 层次:G

字符串数组类型,每一行都是 由空格分隔 的软件包列表字符串,指定将要在 Infra 节点上安装的软件包列表。

本参数没有默认值,即默认值为未定义状态。如果用户不在配置文件中显式指定本参数,则 Pigsty 会从根据当前节点的操作系统族,

从定义于 roles/node_id/vars 中的 infra_packages_default 变量中加载获取默认值。

默认值(EL系操作系统):

infra_packages: # 将在基础设施节点上安装的软件包列表

- grafana,loki,logcli,promtail,prometheus,alertmanager,pushgateway,grafana-plugins,restic,certbot,python3-certbot-nginx

- node_exporter,blackbox_exporter,nginx_exporter,pg_exporter,pev2,nginx,dnsmasq,ansible,etcd,python3-requests,redis,mcli

默认值(Debian/Ubuntu):

infra_packages: # 将在基础设施节点上安装的软件包列表

- grafana,grafana-plugins,loki,logcli,promtail,prometheus,alertmanager,pushgateway,restic,certbot,python3-certbot-nginx

- node-exporter,blackbox-exporter,nginx-exporter,pg-exporter,pev2,nginx,dnsmasq,ansible,etcd,python3-requests,redis,mcli

infra_packages_pip

参数名称: infra_packages_pip, 类型: string, 层次:G

Infra 节点上要使用 pip 额外安装的软件包,包名使用逗号分隔,默认值是空字符串,即不安装任何额外的 python 包。

NGINX

Pigsty 会通过 Nginx 代理所有的 Web 服务访问:Home Page、Grafana、Prometheus、AlertManager 等等。

以及其他可选的工具,如 PGWe、Jupyter Lab、Pgadmin、Bytebase 等等,还有一些静态资源和报告,如 pev、schemaspy 和 pgbadger。

最重要的是,Nginx 还作为本地软件仓库(Yum/Apt)的 Web 服务器,用于存储和分发 Pigsty 的软件包。 此外,Pigsty 还可以使用 Certbot 自动申请免费的 Nginx SSL 证书,使用真实域名与HTTPS安全地对公网提供服务。

nginx_enabled: true # 在当前基础设施节点上启用 Nginx?

nginx_exporter_enabled: true # 在当前基础设施节点上启用 nginx_exporter?

nginx_sslmode: enable # Nginx 的 SSL 工作模式?disable,enable,enforce

nginx_home: /www # Nginx 静态文件目录,默认为:`/www`

nginx_port: 80 # Nginx 默认监听的端口(提供HTTP服务),默认为 `80`

nginx_ssl_port: 443 # Nginx SSL 默认监听的端口,默认为 `443`

nginx_navbar: # Nginx 首页上的导航栏内容

- { name: CA Cert ,url: '/ca.crt' ,desc: 'pigsty self-signed ca.crt' }

- { name: Package ,url: '/pigsty' ,desc: 'local yum repo packages' }

- { name: PG Logs ,url: '/logs' ,desc: 'postgres raw csv logs' }

- { name: Reports ,url: '/report' ,desc: 'pgbadger summary report' }

- { name: Explain ,url: '/pigsty/pev.html' ,desc: 'postgres explain visualizer' }

certbot_sign: false # 使用 certbot 自动申请 Nginx SSL 证书?

certbot_email: your@email.com # certbot 邮箱地址,用于接收证书过期提醒邮件

certbot_options: '' # certbot 额外选项

nginx_enabled

参数名称: nginx_enabled, 类型: bool, 层次:G/I

是否在当前的 Infra 节点上启用 Nginx?默认值为: true。

nginx_exporter_enabled

参数名称: nginx_exporter_enabled, 类型: bool, 层次:G/I

在此基础设施节点上启用 nginx_exporter ?默认值为: true。

如果禁用此选项,还会一并禁用 /nginx 健康检查 stub,当您安装使用的 Nginx 版本不支持此功能是可以考虑关闭此开关

nginx_sslmode

参数名称: nginx_sslmode, 类型: enum, 层次:G

Nginx 的 SSL工作模式?有三种选择:disable , enable , enforce, 默认值为 enable,即启用 SSL,但不强制使用。

disable:只监听nginx_port指定的端口服务 HTTP 请求。enable:同时会监听nginx_ssl_port指定的端口服务 HTTPS 请求。enforce:所有链接都会被渲染为默认使用https://- 同时 Nginx

infra_portal中除默认服务器外的其他服务器都会自动将 80 端口重定向到 443 端口。

- 同时 Nginx

nginx_home

参数名称: nginx_home, 类型: path, 层次:G

Nginx服务器静态文件目录,默认为: /www

Nginx服务器的根目录,包含静态资源和软件仓库文件。最好不要随意修改此参数,修改时需要与 repo_home 参数保持一致。

nginx_port

参数名称: nginx_port, 类型: port, 层次:G

Nginx 默认监听的端口(提供HTTP服务),默认为 80 端口,最好不要修改这个参数。

当您的服务器 80 端口被占用时,可以考虑修改此参数,但是需要同时修改 repo_endpoint ,

以及 node_repo_local_urls 所使用的端口并与这里保持一致。

nginx_ssl_port

参数名称: nginx_ssl_port, 类型: port, 层次:G

Nginx SSL 默认监听的端口,默认为 443,最好不要修改这个参数。

nginx_navbar

参数名称: nginx_navbar, 类型: index[], 层次:G

Nginx 首页上的导航栏内容,默认值:

nginx_navbar: # Nginx 首页上的导航栏内容

- { name: CA Cert ,url: '/ca.crt' ,desc: 'pigsty self-signed ca.crt' }

- { name: Package ,url: '/pigsty' ,desc: 'local yum repo packages' }

- { name: PG Logs ,url: '/logs' ,desc: 'postgres raw csv logs' }

- { name: Reports ,url: '/report' ,desc: 'pgbadger summary report' }

- { name: Explain ,url: '/pigsty/pev.html' ,desc: 'postgres explain visualizer' }

每一条记录都会被渲染为一个导航链接,链接到 Pigsty 首页的 App 下拉菜单,所有的 App 都是可选的,默认挂载在 Pigsty 默认服务器下的 http://pigsty/ 。

url 参数指定了 App 的 URL PATH,但是如果 URL 中包含 ${grafana} 字符串,它会被自动替换为 infra_portal 中定义的 Grafana 域名。

所以您可以将一些使用 Grafana 的数据应用挂载到 Pigsty 的首页导航栏中。

certbot_sign

参数名称: certbot_sign, 类型: bool, 层次:G/A

是否使用 certbot 自动申请证书?默认值为 false。

当设置为 true 时,Pigsty 将在 infra.yml 和 install.yml 剧本执行过程中(nginx 角色)中,使用 certbot 从 Let’s Encrypt 自动申请免费的 SSL 证书。

在 infra_portal 中定义的域名,如果定义了 certbot 参数,那么 Pigsty 会使用 certbot 申请 domain 域名证书,证书名为 cerbot 参数的值。

如果有多个服务器/域名指定了相同 certbot 参数,那么 Pigsty 会为这些域名合并申请一个证书,并使用 certbot 参数的值作为证书名。

启用此选项需要您:

- 当前节点可以通过公网域名访问到,DNS 解析已经正确指向当前节点的公网 IP

- 当前节点可以访问到 Let’s Encrypt 的 API 接口

此选项默认关闭,您可以在安装完成后执行 make cert 命令来手动执行,它实际会调用渲染的 /etc/nginx/sign-cert 脚本,使用 certbot 更新或申请证书。

certbot_email

参数名称: certbot_email, 类型: string, 层次:G/A

申请证书时使用的 email 地址,用于接收证书过期提醒邮件。默认值为占位邮件地址:your@email.com。

当 certbot_sign 设置为 true 时,建议提供此参数。Let’s Encrypt 会在证书即将过期时向此邮箱发送提醒邮件。

certbot_option

参数名称: certbot_option, 类型: string, 层次:G/A

申请证书时额外传入的配置参数,默认为空字符串。

您可以通过此参数向 certbot 传递额外的命令行选项,例如 --dry-run ,那么 certbot 将不会真正申请证书,而是进行预览和测试。

DNS

Pigsty 默认会在 Infra 节点上启用 DNSMASQ 服务,用于解析一些辅助域名,例如 h.pigsty a.pigsty p.pigsty g.pigsty 等等,以及可选 MinIO 的 sss.pigsty。

解析记录会记录在 Infra 节点的 /etc/hosts.d/default 文件中。 要使用这个 DNS 服务器,您必须将 nameserver <ip> 添加到 /etc/resolv 中,node_dns_servers 参数可以解决这个问题。

dns_enabled: true # 在当前基础设施节点上启用 DNSMASQ 服务?

dns_port: 53 # DNS 服务器监听端口,默认为 `53`

dns_records: # 由 dnsmasq 解析的动态 DNS 记录

- "${admin_ip} h.pigsty a.pigsty p.pigsty g.pigsty"

- "${admin_ip} api.pigsty adm.pigsty cli.pigsty ddl.pigsty lab.pigsty git.pigsty sss.pigsty wiki.pigsty"

dns_enabled

参数名称: dns_enabled, 类型: bool, 层次:G/I

是否在这个 Infra 节点上启用 DNSMASQ 服务?默认值为: true。

如果你不想使用默认的 DNS 服务器,(比如你已经有了外部的DNS服务器,或者您的供应商不允许您使用 DNS 服务器)可以将此值设置为 false 来禁用它。

并使用 node_default_etc_hosts 和 node_etc_hosts 静态解析记录代替。

dns_port

参数名称: dns_port, 类型: port, 层次:G

DNSMASQ 的默认监听端口,默认是 53,不建议修改 DNS 服务默认端口。

dns_records

参数名称: dns_records, 类型: string[], 层次:G

由 dnsmasq 负责解析的动态 DNS 记录,一般用于将一些辅助域名解析到本地,例如 h.pigsty a.pigsty p.pigsty g.pigsty 等等。这些记录会被写入到基础设置节点的 /etc/hosts.d/default 文件中。

dns_records: # 由 dnsmasq 解析的动态 DNS 记录

- "${admin_ip} h.pigsty a.pigsty p.pigsty g.pigsty"

- "${admin_ip} api.pigsty adm.pigsty cli.pigsty ddl.pigsty lab.pigsty git.pigsty sss.pigsty wiki.pigsty"

PROMETHEUS

Prometheus 被用作时序数据库,用于存储和分析监控指标数据,进行指标预计算,评估告警规则。

prometheus_enabled: true # 在当前基础设施节点上启用 Prometheus?

prometheus_clean: true # 在初始化 Prometheus 的时候清除现有数据?

prometheus_data: /data/prometheus # Prometheus 数据目录,默认为 `/data/prometheus`

prometheus_sd_dir: /etc/prometheus/targets # Prometheus 静态文件服务发现目录

prometheus_sd_interval: 5s # Prometheus 目标刷新间隔,默认为 `5s`

prometheus_scrape_interval: 10s # Prometheus 抓取 & 评估间隔,默认为 `10s`

prometheus_scrape_timeout: 8s # Prometheus 全局抓取超时,默认为 `8s`

prometheus_options: '--storage.tsdb.retention.time=15d' # Prometheus 额外的命令行参数选项

pushgateway_enabled: true # 在当前基础设施节点上启用 PushGateway?

pushgateway_options: '--persistence.interval=1m' # PushGateway 额外的命令行参数选项

blackbox_enabled: true # 在当前基础设施节点上启用 Blackbox_Exporter?

blackbox_options: '' # Blackbox_Exporter 额外的命令行参数选项

alertmanager_enabled: true # 在当前基础设施节点上启用 Alertmanager?

alertmanager_port: 9093 # Alertmanager 监听端口,默认为 `9093`

alertmanager_options: '' # Alertmanager 额外的命令行参数选项

exporter_metrics_path: /metrics # Exporter 指标路径,默认为 `/metrics`

exporter_install: none # 如何安装 Exporter?none,yum,binary

exporter_repo_url: '' # 如果通过 yum 安装 Exporter,则指定 yum 仓库文件地址

prometheus_enabled

参数名称: prometheus_enabled, 类型: bool, 层次:G/I

是否在当前 Infra 节点上启用 Prometheus? 默认值为 true,即所有基础设施节点默认都会安装启用 Prometheus。

例如,如果您有多个元节点,默认情况下,Pigsty会在所有元节点上部署Prometheus。如果您想一台用于Prometheus监控指标收集,一台用于Loki日志收集,则可以在其他元节点的实例层次上将此参数设置为false。

prometheus_clean

参数名称: prometheus_clean, 类型: bool, 层次:G/A

是否在执行 Prometheus 初始化的时候清除现有 Prometheus 数据?默认值为 true。

prometheus_data

参数名称: prometheus_data, 类型: path, 层次:G

Prometheus数据库目录, 默认位置为 /data/prometheus。

prometheus_sd_dir

参数名称: prometheus_sd_dir, 类型: path, 层次:G

Prometheus 静态文件服务发现的对象存储目录,默认值为 /etc/prometheus/targets。

prometheus_sd_interval

参数名称: prometheus_sd_interval, 类型: interval, 层次:G

Prometheus 静态文件服务发现的刷新周期,默认值为 5s。

这意味着 Prometheus 每隔这样长的时间就会重新扫描一次 prometheus_sd_dir (默认为:/etc/prometheus/targets 目录),以发现新的监控对象。

prometheus_scrape_interval

参数名称: prometheus_scrape_interval, 类型: interval, 层次:G

Prometheus 全局指标抓取周期, 默认值为 10s。在生产环境,10秒 - 30秒是一个较为合适的抓取周期。如果您需要更精细的的监控数据粒度,则可以调整此参数。

prometheus_scrape_timeout

参数名称: prometheus_scrape_timeout, 类型: interval, 层次:G

Prometheus 全局抓取超时,默认为 8s。

设置抓取超时可以有效避免监控系统查询导致的雪崩,设置原则是,本参数必须小于并接近 prometheus_scrape_interval ,确保每次抓取时长不超过抓取周期。

prometheus_options

参数名称: prometheus_options, 类型: arg, 层次:G

Prometheus 的额外的命令行参数,默认值:--storage.tsdb.retention.time=15d

默认的参数会为 Prometheus 配置一个 15 天的保留期限来限制磁盘使用量。

pushgateway_enabled

参数名称: pushgateway_enabled, 类型: bool, 层次:G/I

是否在当前 Infra 节点上启用 PushGateway? 默认值为 true,即所有基础设施节点默认都会安装启用 PushGateway。

pushgateway_options

参数名称: pushgateway_options, 类型: arg, 层次:G

PushGateway 的额外的命令行参数,默认值:--persistence.interval=1m,即每分钟进行一次持久化操作。

blackbox_enabled

参数名称: blackbox_enabled, 类型: bool, 层次:G/I

是否在当前 Infra 节点上启用 BlackboxExporter ? 默认值为 true,即所有基础设施节点默认都会安装启用 BlackboxExporter 。

BlackboxExporter 会向节点 IP 地址, VIP 地址,PostgreSQL VIP 地址发送 ICMP 报文测试网络连通性。

blackbox_options

参数名称: blackbox_options, 类型: arg, 层次:G

BlackboxExporter 的额外的命令行参数,默认值:空字符串。

alertmanager_enabled

参数名称: alertmanager_enabled, 类型: bool, 层次:G/I

是否在当前 Infra 节点上启用 AlertManager ? 默认值为 true,即所有基础设施节点默认都会安装启用 AlertManager 。

alertmanager_port

参数名称: alertmanager_port, 类型: port, 层次:G

AlertManager 的监听端口,默认值为 9093。

之所以允许特殊设置 AlertManager 的端口号,是因为 Kafka 的默认端口用到了 9093,容易出现冲突。

alertmanager_options

参数名称: alertmanager_options, 类型: arg, 层次:G

AlertManager 的额外的命令行参数,默认值:空字符串。

exporter_metrics_path

参数名称: exporter_metrics_path, 类型: path, 层次:G

监控 exporter 暴露指标的 HTTP 端点路径,默认为: /metrics ,不建议修改此参数。

exporter_install

参数名称: exporter_install, 类型: enum, 层次:G

(弃用参数)安装监控组件的方式,有三种可行选项:none, yum, binary

指明安装Exporter的方式:

none:不安装,(默认行为,Exporter已经在先前由node_pkg任务完成安装)yum:使用yum(apt)安装(如果启用yum安装,在部署Exporter前执行yum安装node_exporter与pg_exporter)binary:使用拷贝二进制的方式安装(从元节点中直接拷贝node_exporter与pg_exporter二进制,不推荐)

使用yum安装时,如果指定了exporter_repo_url(不为空),在执行安装时会首先将该URL下的REPO文件安装至/etc/yum.repos.d中。这一功能可以在不执行节点基础设施初始化的环境下直接进行Exporter的安装。

不推荐普通用户使用binary安装,这种模式通常用于紧急故障抢修与临时问题修复。

exporter_repo_url

参数名称: exporter_repo_url, 类型: url, 层次:G

(弃用参数)监控组件的 Yum Repo URL

默认为空,当 exporter_install 为 yum 时,该参数指定的Repo会被添加至节点源列表中。

GRAFANA

Pigsty 使用 Grafana 作为监控系统前端。它也可以做为数据分析与可视化平台,或者用于低代码数据应用开发,制作数据应用原型等目的。

grafana_enabled: true # enable grafana on this infra node?

grafana_clean: true # clean grafana data during init?

grafana_admin_username: admin # grafana admin username, `admin` by default

grafana_admin_password: pigsty # grafana admin password, `pigsty` by default

loki_enabled: true # enable loki on this infra node?

loki_clean: false # whether remove existing loki data?

loki_data: /data/loki # loki data dir, `/data/loki` by default

loki_retention: 15d # loki log retention period, 15d by default

grafana_enabled

参数名称: grafana_enabled, 类型: bool, 层次:G/I

是否在Infra节点上启用Grafana?默认值为: true,即所有基础设施节点默认都会安装启用 Grafana。

grafana_clean

参数名称: grafana_clean, 类型: bool, 层次:G/A

是否在初始化 Grafana 时一并清理其数据文件?默认为:true。

该操作会移除 /var/lib/grafana/grafana.db,确保 Grafana 全新安装。

grafana_admin_username

参数名称: grafana_admin_username, 类型: username, 层次:G

Grafana管理员用户名,admin by default

grafana_admin_password

参数名称: grafana_admin_password, 类型: password, 层次:G

Grafana管理员密码,pigsty by default

提示:请务必在生产部署中修改此密码参数!

LOKI

Loki 是Grafana提供的轻量级日志收集/检索平台,它可以提供一个集中查询服务器/数据库日志的地方。

loki_enabled

参数名称: loki_enabled, 类型: bool, 层次:G/I

是否在当前 Infra 节点上启用 Loki ? 默认值为 true,即所有基础设施节点默认都会安装启用 Loki 。

loki_clean

参数名称: loki_clean, 类型: bool, 层次:G/A

是否在安装Loki时清理数据库目录?默认值: false,现有日志数据在初始化时会保留。

loki_data

参数名称: loki_data, 类型: path, 层次:G

Loki的数据目录,默认值为: /data/loki

loki_retention

参数名称: loki_retention, 类型: interval, 层次:G

Loki日志默认保留天数,默认保留 15d 。

4 - 预置剧本

Pigsty 提供了三个与 INFRA 模块相关的剧本:

infra.yml:在 infra 节点上初始化 pigsty 基础设施infra-rm.yml:从 infra 节点移除基础设施组件install.yml:在当前节点上一次性完整安装 Pigsty

infra.yml

INFRA 模块剧本 infra.yml 用于在配置文件的 infra 分组所定义的 Infra节点 上初始化基础设施模块

执行该剧本将完成以下任务

- 配置 Infra节点 的目录与环境变量

- 下载并创建本地软件仓库,加速后续安装。(若使用离线软件包,或检测到已经存在本地软件源,则跳过本阶段)

- 将当前 Infra节点 作为一个 普通节点 纳入 Pigsty 管理

- 部署基础设施组件,包括 Prometheus, Grafana, Loki, Alertmanager, PushGateway,Blackbox Exporter 等

该剧本默认在 infra 分组上执行

- Pigsty 会在配置文件中固定名为

infra的分组上安装INFRA模块 - Pigsty 会在 configure 过程中默认将当前安装节点标记为 Infra节点,并使用 当前节点首要IP地址 替换配置模板中的占位IP地址

10.10.10.10。 - 该节点除了可以发起管理,部署有基础设施,与一个部署普通托管节点并无区别。

剧本注意事项

- 本剧本为幂等剧本,重复执行会 抹除 Infra节点 上的基础设施组件。

- 除非设置

prometheus_clean为false,否则 Prometheus 监控指标时序数据会丢失。 - 除非设置

loki_clean为false,否则 Loki 日志数据会丢失, - 除非设置

grafana_clean为false,否则 Grafana 监控面板与配置修改会丢失

- 除非设置

- 当本地软件仓库

/www/pigsty/repo_complete存在时,本剧本会跳过从互联网下载软件的任务。- 完整执行该剧本耗时约1~3分钟,视机器配置与网络条件而异。

- 不使用离线软件包而直接从互联网原始上游下载软件时,可能耗时5-10分钟,根据您的网络条件而异。

执行演示

可用任务

以下为 infra.yml 剧本中可用的任务列表:

#--------------------------------------------------------------#

# Tasks

#--------------------------------------------------------------#

# ca : create self-signed CA on localhost files/pki

# - ca_dir : create CA directory

# - ca_private : generate ca private key: files/pki/ca/ca.key

# - ca_cert : signing ca cert: files/pki/ca/ca.crt

#

# id : generate node identity

#

# repo : bootstrap a local yum repo from internet or offline packages

# - repo_dir : create repo directory

# - repo_check : check repo exists

# - repo_prepare : use existing repo if exists

# - repo_build : build repo from upstream if not exists

# - repo_upstream : handle upstream repo files in /etc/yum.repos.d

# - repo_remove : remove existing repo file if repo_remove == true

# - repo_add : add upstream repo files to /etc/yum.repos.d

# - repo_url_pkg : download packages from internet defined by repo_url_packages

# - repo_cache : make upstream yum cache with yum makecache

# - repo_boot_pkg : install bootstrap pkg such as createrepo_c,yum-utils,...

# - repo_pkg : download packages & dependencies from upstream repo

# - repo_create : create a local yum repo with createrepo_c & modifyrepo_c

# - repo_use : add newly built repo into /etc/yum.repos.d

# - repo_nginx : launch a nginx for repo if no nginx is serving

#

# node/haproxy/docker/monitor : setup infra node as a common node (check node.yml)

# - node_name, node_hosts, node_resolv, node_firewall, node_ca, node_repo, node_pkg

# - node_feature, node_kernel, node_tune, node_sysctl, node_profile, node_ulimit

# - node_data, node_admin, node_timezone, node_ntp, node_crontab, node_vip

# - haproxy_install, haproxy_config, haproxy_launch, haproxy_reload

# - docker_install, docker_admin, docker_config, docker_launch, docker_image

# - haproxy_register, node_exporter, node_register, promtail

#

# infra : setup infra components

# - infra_env : env_dir, env_pg, env_pgadmin, env_var

# - infra_pkg : infra_pkg_yum, infra_pkg_pip

# - infra_user : setup infra os user group

# - infra_cert : issue cert for infra components

# - dns : dns_config, dns_record, dns_launch

# - nginx : nginx_config, nginx_cert, nginx_static, nginx_launch, nginx_certbot, nginx_reload, nginx_exporter

# - prometheus : prometheus_clean, prometheus_dir, prometheus_config, prometheus_launch, prometheus_reload

# - alertmanager : alertmanager_config, alertmanager_launch

# - pushgateway : pushgateway_config, pushgateway_launch

# - blackbox : blackbox_config, blackbox_launch

# - grafana : grafana_clean, grafana_config, grafana_launch, grafana_provision

# - loki : loki clean, loki_dir, loki_config, loki_launch

# - infra_register : register infra components to prometheus

#--------------------------------------------------------------#

infra-rm.yml

INFRA模块剧本 infra-rm.yml 用于从配置文件 infra 分组定义的 Infra节点 上移除 Pigsty 基础设施

常用子任务包括:

./infra-rm.yml # 移除 INFRA 模块

./infra-rm.yml -t service # 停止 INFRA 上的基础设施服务

./infra-rm.yml -t data # 移除 INFRA 上的存留数据

./infra-rm.yml -t package # 卸载 INFRA 上安装的软件包

install.yml

INFRA模块剧本 install.yml用于在 所有节点 上一次性完整安装 Pigsty。

该剧本在 剧本:一次性安装 中有更详细的介绍。

5 - 管理预案

下面是与 INFRA 模块相关的一些管理任务:

安装Infra模块

使用 infra.yml 剧本在 Infra 节点上安装 INFRA 模块:

./infra.yml # 在 infra 分组上安装 INFRA 模块

卸载Infra模块

使用 infra-rm.yml 剧本从 Infra 节点上卸载 INFRA 模块:

./infra-rm.yml # 从 infra 分组上卸载 INFRA 模块

扩容 Infra 模块

想要扩容现有 Infra 部署,首先修改 infra 分组,添加新的节点 IP,并为其分配不重复的 Infra 实例号 infra_seq。

all:

children:

infra:

hosts:

10.10.10.10: { infra_seq: 1 } # 原有的 1 号节点

10.10.10.11: { infra_seq: 2 } # 新的 2 号节点

然后使用 infra.yml 剧本在新的节点上安装 INFRA 模块:

./infra.yml -l 10.10.10.11 # 在新节点上安装 INFRA 模块

管理本地软件仓库

您可以使用以下剧本子任务,管理 Infra节点 上的本地软件仓库(YUM/APT):

./infra.yml -t repo #从互联网或离线包中创建本地软件仓库

./infra.yml -t repo_dir # 创建本地软件仓库

./infra.yml -t repo_check # 检查本地软件仓库是否已经存在?

./infra.yml -t repo_prepare # 如果存在,直接使用已有的本地软件仓库

./infra.yml -t repo_build # 如果不存在,从上游构建本地软件仓库

./infra.yml -t repo_upstream # 添加上游仓库 repo/list 文件

./infra.yml -t repo_remove # 如果 repo_remove == true,则删除现有的仓库文件

./infra.yml -t repo_add # 将上游仓库文件添加到 /etc/yum.repos.d (或 /etc/apt/sources.list.d)

./infra.yml -t repo_url_pkg # 从由 repo_url_packages 定义的互联网下载包

./infra.yml -t repo_cache # 使用 yum makecache / apt update 创建上游软件源元数据缓存

./infra.yml -t repo_boot_pkg # 安装如 createrepo_c、yum-utils 等的引导包...(或 dpkg-)

./infra.yml -t repo_pkg # 从上游仓库下载包 & 依赖项

./infra.yml -t repo_create # 使用 createrepo_c & modifyrepo_c / dpkg-dev 创建本地软件仓库

./infra.yml -t repo_use # 将新建的仓库添加到 /etc/yum.repos.d | /etc/apt/sources.list.d

./infra.yml -t repo_nginx # 如果 nginx 没有运行,启动 nginx 作为文件服务器

其中常用的命令为:

./infra.yml -t repo_upstream # 向 INFRA 节点添加 repo_upstream 中定义的上游软件仓库

./infra.yml -t repo_pkg # 从上游软件仓库下载包及其依赖项。

./infra.yml -t repo_create # 创建/更新本地 yum/apt 仓库

管理Nginx

./infra.yml -t nginx # 重置 Nginx 组件

./infra.yml -t nginx_index # 重新渲染 Nginx 首页内容

./infra.yml -t nginx_config,nginx_reload # 重新渲染 Nginx 配置,对外暴露新的上游服务。

如果用户在 infra_portal 列表中使用了 certbot 字段填入了证书名称,则可以使用以下命令使用 certbot 申请免费 HTTPS 证书:

# 使用 certbot 申请真实域名的免费 HTTPS 证书

./infra.yml -t nginx_certbot,nginx_reload -e certbot_sign=true

管理基础设施组件

您可以使用以下剧本子任务,管理 Infra节点 上的各个基础设施组件

./infra.yml -t infra # 配置基础设施

./infra.yml -t infra_env # 配置管理节点上的环境变量:env_dir, env_pg, env_pgadmin, env_var

./infra.yml -t infra_pkg # 安装INFRA所需的软件包:infra_pkg_yum, infra_pkg_pip

./infra.yml -t infra_user # 设置 infra 操作系统用户组

./infra.yml -t infra_cert # 为 infra 组件颁发证书

./infra.yml -t dns # 配置 DNSMasq:dns_config, dns_record, dns_launch

./infra.yml -t nginx # 配置 Nginx:nginx_config, nginx_cert, nginx_static, nginx_launch, nginx_exporter

./infra.yml -t prometheus # 配置 Prometheus:prometheus_clean, prometheus_dir, prometheus_config, prometheus_launch, prometheus_reload

./infra.yml -t alertmanager # 配置 AlertManager:alertmanager_config, alertmanager_launch

./infra.yml -t pushgateway # 配置 PushGateway:pushgateway_config, pushgateway_launch

./infra.yml -t blackbox # 配置 Blackbox Exporter: blackbox_launch

./infra.yml -t grafana # 配置 Grafana:grafana_clean, grafana_config, grafana_plugin, grafana_launch, grafana_provision

./infra.yml -t loki # 配置 Loki:loki_clean, loki_dir, loki_config, loki_launch

./infra.yml -t infra_register # 将 infra 组件注册到 prometheus

其他常用的任务包括:

./infra.yml -t nginx_index # 重新渲染 Nginx 首页内容

./infra.yml -t nginx_config,nginx_reload # 重新渲染 Nginx 配置,对外暴露新的上游服务。

./infra.yml -t prometheus_conf,prometheus_reload # 重新生成 Prometheus 主配置文件,并重载配置

./infra.yml -t prometheus_rule,prometheus_reload # 重新拷贝 Prometheus 规则 & 告警,并重载配置

./infra.yml -t grafana_plugin # 从互联网上下载 Grafana 插件,通常需要科学上网

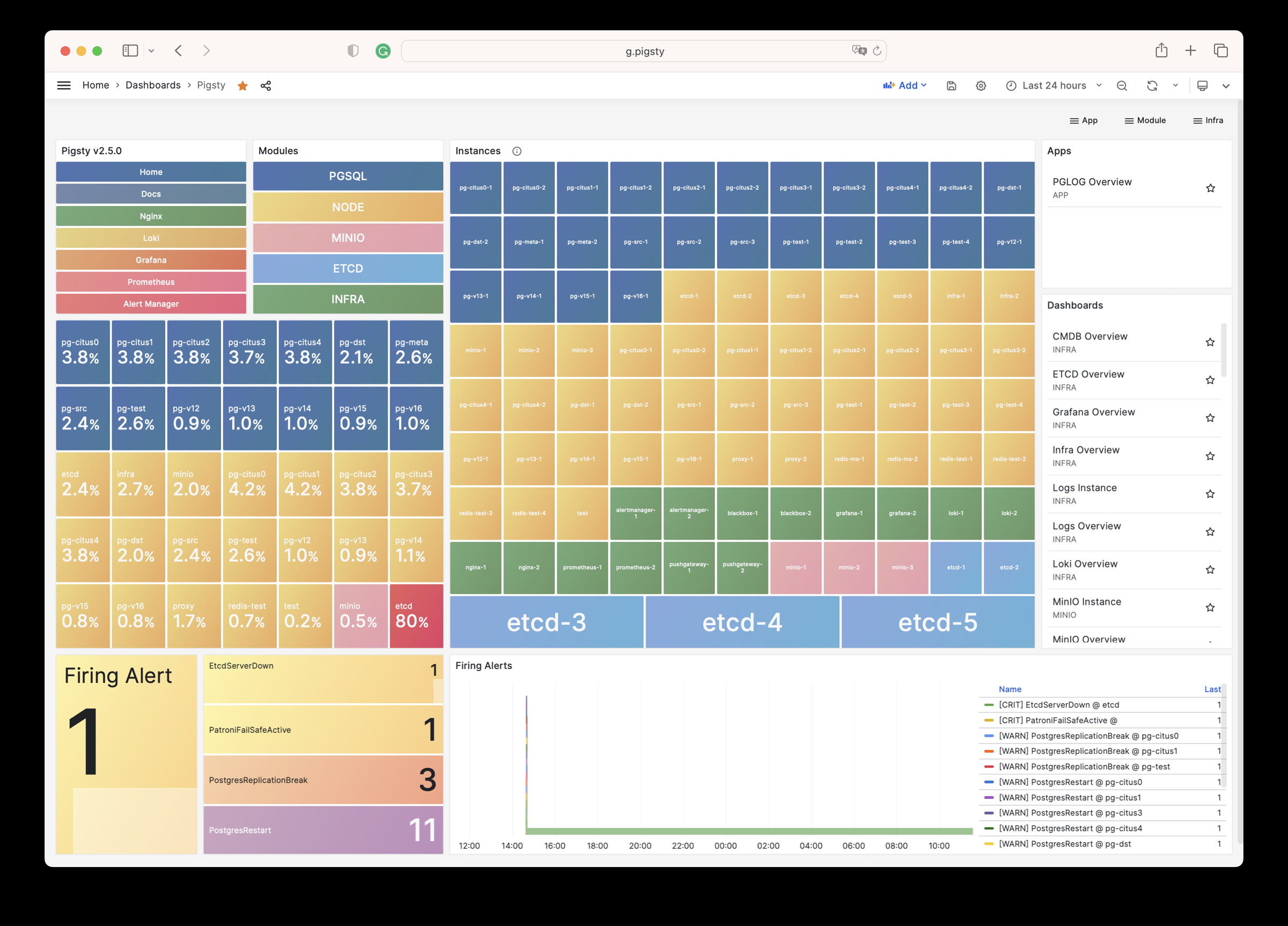

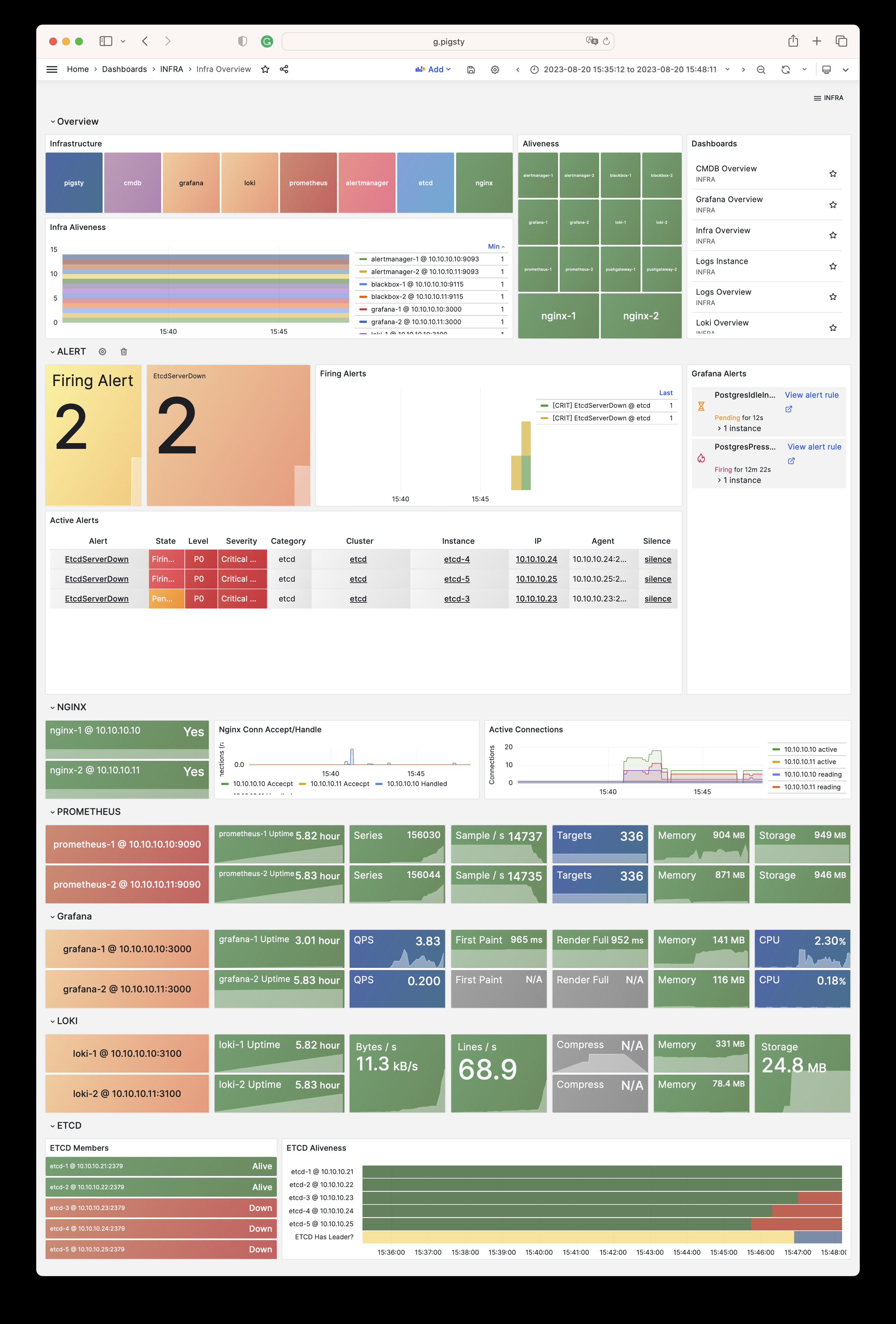

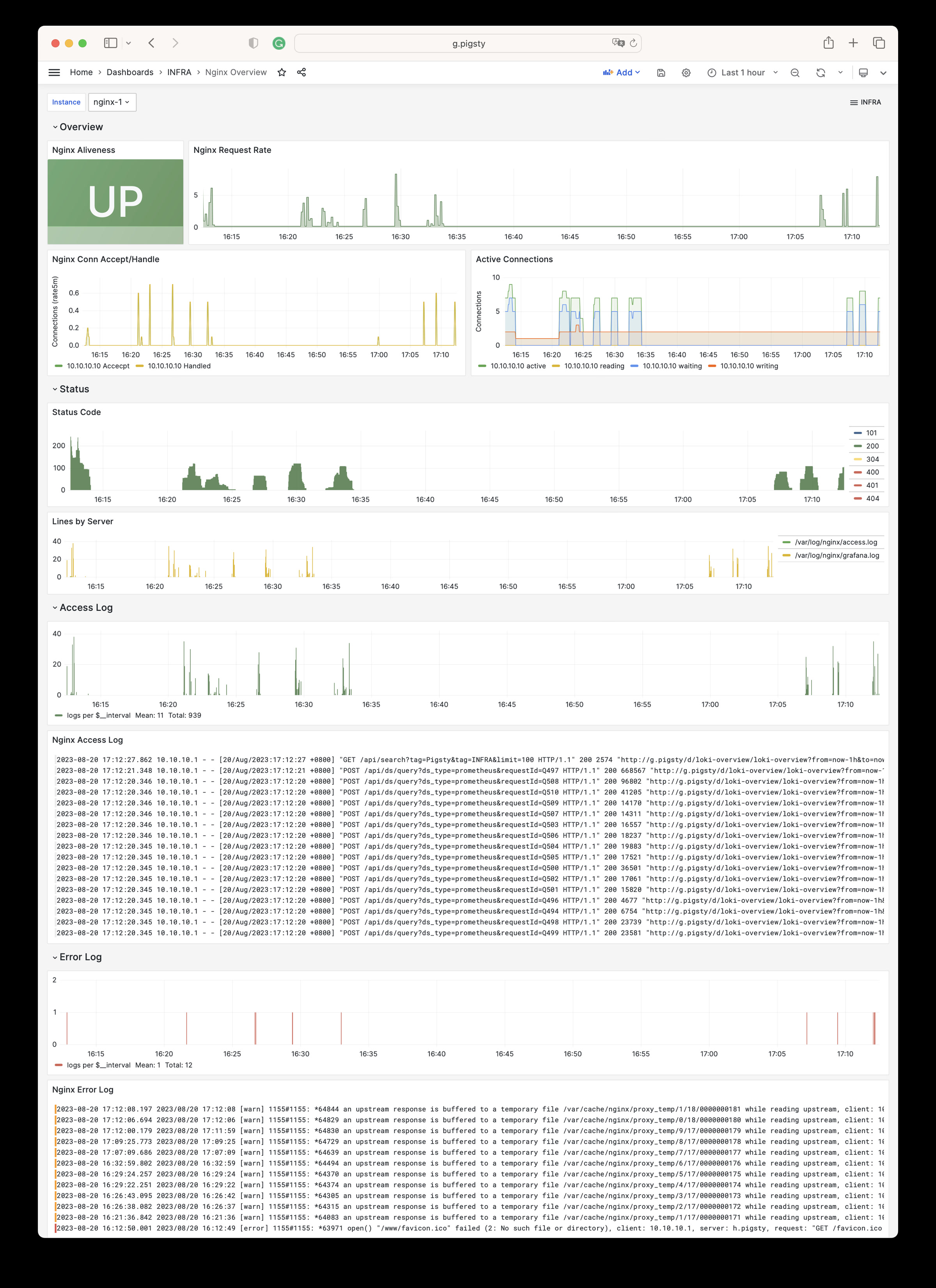

6 - 监控告警

监控面板

Pigsty 针对 Infra 模块提供了以下监控面板

Pigsty Home

Pigsty 监控系统主页

INFRA Overview

Pigsty 基础设施自监控概览

Nginx Overview

Nginx 监控指标与日志

Grafana Overview

Grafana 监控指标与日志

Prometheus Overview

Prometheus 监控指标与日志

Loki Overview

Loki 监控指标与日志

Logs Instance

查阅单个节点上的日志信息

Logs Overview

查阅全局日志信息

CMDB Overview

CMDB 可视化

告警规则

Pigsty 针对 INFRA 模块提供了以下两条告警规则:

InfraDown: 基础设施组件出现宕机AgentDown: 监控Agent代理出现宕机

您可以按需在 files/prometheus/rules/infra.yml 中修改或添加新的基础设施告警规则。

################################################################

# Infrastructure Alert Rules #

################################################################

- name: infra-alert

rules:

#==============================================================#

# Infra Aliveness #

#==============================================================#

# infra components (prometheus,grafana) down for 1m triggers a P1 alert

- alert: InfraDown

expr: infra_up < 1

for: 1m

labels: { level: 0, severity: CRIT, category: infra }

annotations:

summary: "CRIT InfraDown {{ $labels.type }}@{{ $labels.instance }}"

description: |

infra_up[type={{ $labels.type }}, instance={{ $labels.instance }}] = {{ $value | printf "%.2f" }} < 1

#==============================================================#

# Agent Aliveness #

#==============================================================#

# agent aliveness are determined directly by exporter aliveness

# including: node_exporter, pg_exporter, pgbouncer_exporter, haproxy_exporter

- alert: AgentDown

expr: agent_up < 1

for: 1m

labels: { level: 0, severity: CRIT, category: infra }

annotations:

summary: 'CRIT AgentDown {{ $labels.ins }}@{{ $labels.instance }}'

description: |

agent_up[ins={{ $labels.ins }}, instance={{ $labels.instance }}] = {{ $value | printf "%.2f" }} < 1

7 - 指标列表

INFRA 指标

INFRA 模块包含有 964 类可用监控指标。

| Metric Name | Type | Labels | Description |

|---|---|---|---|

| alertmanager_alerts | gauge | ins, instance, ip, job, cls, state |

How many alerts by state. |

| alertmanager_alerts_invalid_total | counter | version, ins, instance, ip, job, cls |

The total number of received alerts that were invalid. |

| alertmanager_alerts_received_total | counter | version, ins, instance, ip, status, job, cls |

The total number of received alerts. |

| alertmanager_build_info | gauge | revision, version, ins, instance, ip, tags, goarch, goversion, job, cls, branch, goos |

A metric with a constant ‘1’ value labeled by version, revision, branch, goversion from which alertmanager was built, and the goos and goarch for the build. |

| alertmanager_cluster_alive_messages_total | counter | ins, instance, ip, peer, job, cls |

Total number of received alive messages. |

| alertmanager_cluster_enabled | gauge | ins, instance, ip, job, cls |

Indicates whether the clustering is enabled or not. |

| alertmanager_cluster_failed_peers | gauge | ins, instance, ip, job, cls |

Number indicating the current number of failed peers in the cluster. |

| alertmanager_cluster_health_score | gauge | ins, instance, ip, job, cls |

Health score of the cluster. Lower values are better and zero means ’totally healthy’. |

| alertmanager_cluster_members | gauge | ins, instance, ip, job, cls |

Number indicating current number of members in cluster. |

| alertmanager_cluster_messages_pruned_total | counter | ins, instance, ip, job, cls |

Total number of cluster messages pruned. |

| alertmanager_cluster_messages_queued | gauge | ins, instance, ip, job, cls |

Number of cluster messages which are queued. |

| alertmanager_cluster_messages_received_size_total | counter | ins, instance, ip, msg_type, job, cls |

Total size of cluster messages received. |

| alertmanager_cluster_messages_received_total | counter | ins, instance, ip, msg_type, job, cls |

Total number of cluster messages received. |

| alertmanager_cluster_messages_sent_size_total | counter | ins, instance, ip, msg_type, job, cls |

Total size of cluster messages sent. |

| alertmanager_cluster_messages_sent_total | counter | ins, instance, ip, msg_type, job, cls |

Total number of cluster messages sent. |

| alertmanager_cluster_peer_info | gauge | ins, instance, ip, peer, job, cls |

A metric with a constant ‘1’ value labeled by peer name. |

| alertmanager_cluster_peers_joined_total | counter | ins, instance, ip, job, cls |

A counter of the number of peers that have joined. |

| alertmanager_cluster_peers_left_total | counter | ins, instance, ip, job, cls |

A counter of the number of peers that have left. |

| alertmanager_cluster_peers_update_total | counter | ins, instance, ip, job, cls |

A counter of the number of peers that have updated metadata. |

| alertmanager_cluster_reconnections_failed_total | counter | ins, instance, ip, job, cls |

A counter of the number of failed cluster peer reconnection attempts. |

| alertmanager_cluster_reconnections_total | counter | ins, instance, ip, job, cls |

A counter of the number of cluster peer reconnections. |

| alertmanager_cluster_refresh_join_failed_total | counter | ins, instance, ip, job, cls |

A counter of the number of failed cluster peer joined attempts via refresh. |

| alertmanager_cluster_refresh_join_total | counter | ins, instance, ip, job, cls |

A counter of the number of cluster peer joined via refresh. |

| alertmanager_config_hash | gauge | ins, instance, ip, job, cls |

Hash of the currently loaded alertmanager configuration. |

| alertmanager_config_last_reload_success_timestamp_seconds | gauge | ins, instance, ip, job, cls |

Timestamp of the last successful configuration reload. |

| alertmanager_config_last_reload_successful | gauge | ins, instance, ip, job, cls |

Whether the last configuration reload attempt was successful. |

| alertmanager_dispatcher_aggregation_groups | gauge | ins, instance, ip, job, cls |

Number of active aggregation groups |

| alertmanager_dispatcher_alert_processing_duration_seconds_count | Unknown | ins, instance, ip, job, cls |

N/A |

| alertmanager_dispatcher_alert_processing_duration_seconds_sum | Unknown | ins, instance, ip, job, cls |

N/A |

| alertmanager_http_concurrency_limit_exceeded_total | counter | ins, instance, method, ip, job, cls |

Total number of times an HTTP request failed because the concurrency limit was reached. |

| alertmanager_http_request_duration_seconds_bucket | Unknown | ins, instance, method, ip, le, job, cls, handler |

N/A |

| alertmanager_http_request_duration_seconds_count | Unknown | ins, instance, method, ip, job, cls, handler |

N/A |

| alertmanager_http_request_duration_seconds_sum | Unknown | ins, instance, method, ip, job, cls, handler |

N/A |

| alertmanager_http_requests_in_flight | gauge | ins, instance, method, ip, job, cls |

Current number of HTTP requests being processed. |

| alertmanager_http_response_size_bytes_bucket | Unknown | ins, instance, method, ip, le, job, cls, handler |

N/A |

| alertmanager_http_response_size_bytes_count | Unknown | ins, instance, method, ip, job, cls, handler |

N/A |

| alertmanager_http_response_size_bytes_sum | Unknown | ins, instance, method, ip, job, cls, handler |

N/A |

| alertmanager_integrations | gauge | ins, instance, ip, job, cls |

Number of configured integrations. |

| alertmanager_marked_alerts | gauge | ins, instance, ip, job, cls, state |

How many alerts by state are currently marked in the Alertmanager regardless of their expiry. |

| alertmanager_nflog_gc_duration_seconds_count | Unknown | ins, instance, ip, job, cls |

N/A |

| alertmanager_nflog_gc_duration_seconds_sum | Unknown | ins, instance, ip, job, cls |

N/A |

| alertmanager_nflog_gossip_messages_propagated_total | counter | ins, instance, ip, job, cls |

Number of received gossip messages that have been further gossiped. |

| alertmanager_nflog_maintenance_errors_total | counter | ins, instance, ip, job, cls |

How many maintenances were executed for the notification log that failed. |

| alertmanager_nflog_maintenance_total | counter | ins, instance, ip, job, cls |

How many maintenances were executed for the notification log. |

| alertmanager_nflog_queries_total | counter | ins, instance, ip, job, cls |

Number of notification log queries were received. |

| alertmanager_nflog_query_duration_seconds_bucket | Unknown | ins, instance, ip, le, job, cls |

N/A |