云计算

- 云计算泥石流:文章导航

- 阿里云rds_duckdb:致敬还是抄袭?

- 花钱买罪受的大冤种:逃离云计算妙瓦底

- OpenAI全球宕机复盘:K8S循环依赖

- WordPress社区内战:论共同体划界问题

- 云数据库:用米其林的价格,吃预制菜大锅饭

- 阿里云:高可用容灾神话破灭

- 云计算泥石流:合订本

- 草台班子唱大戏,阿里云PG翻车记

- 我们能从网易云音乐故障中学到什么?

- 蓝屏星期五:甲乙双方都是草台班子

- Ahrefs不上云,省下四亿美元

- 删库:Google云爆破了大基金的整个云账户

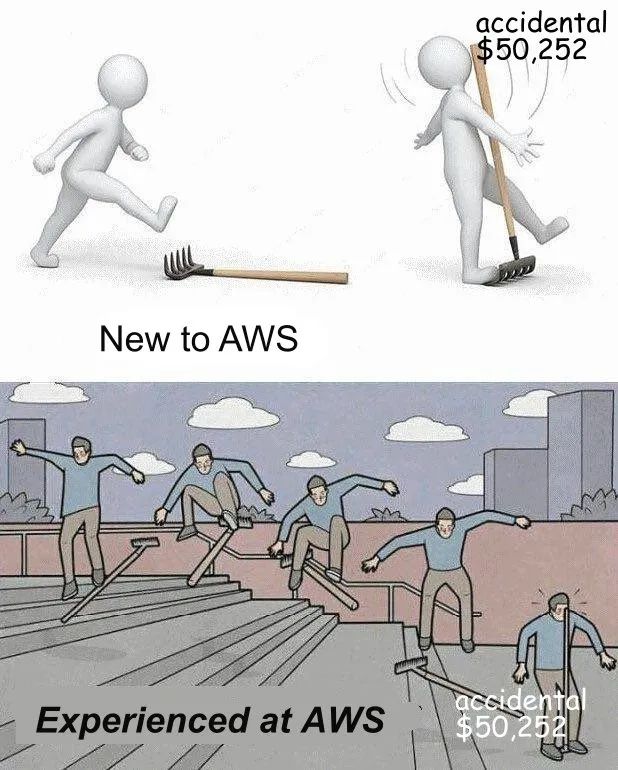

- 云上黑暗森林:打爆云账单,只需要S3桶名

- Cloudflare圆桌访谈与问答录

- 我们能从腾讯云大故障中学到什么?

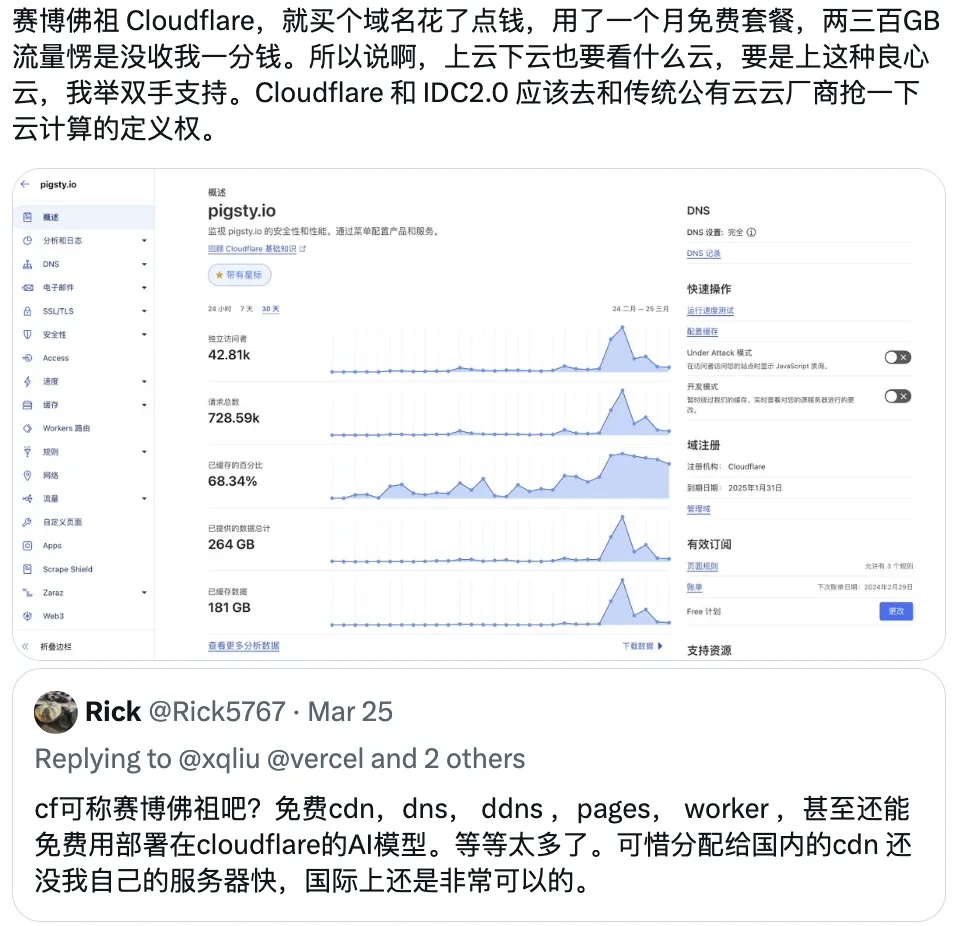

- 吊打公有云的赛博佛祖 Cloudflare

- 罗永浩救不了牙膏云?

- 剖析阿里云服务器算力成本

- 云下高可用秘诀:拒绝复杂度自慰

- 扒皮云对象存储:从降本到杀猪

- 半年下云省千万,DHH下云FAQ

- 从降本增笑到真的降本增效

- 重新拿回计算机硬件的红利

- 我们能从阿里云全球故障中学到什么?

- 薅阿里云羊毛,打造数字家园

- 云计算泥石流:用数据解构公有云

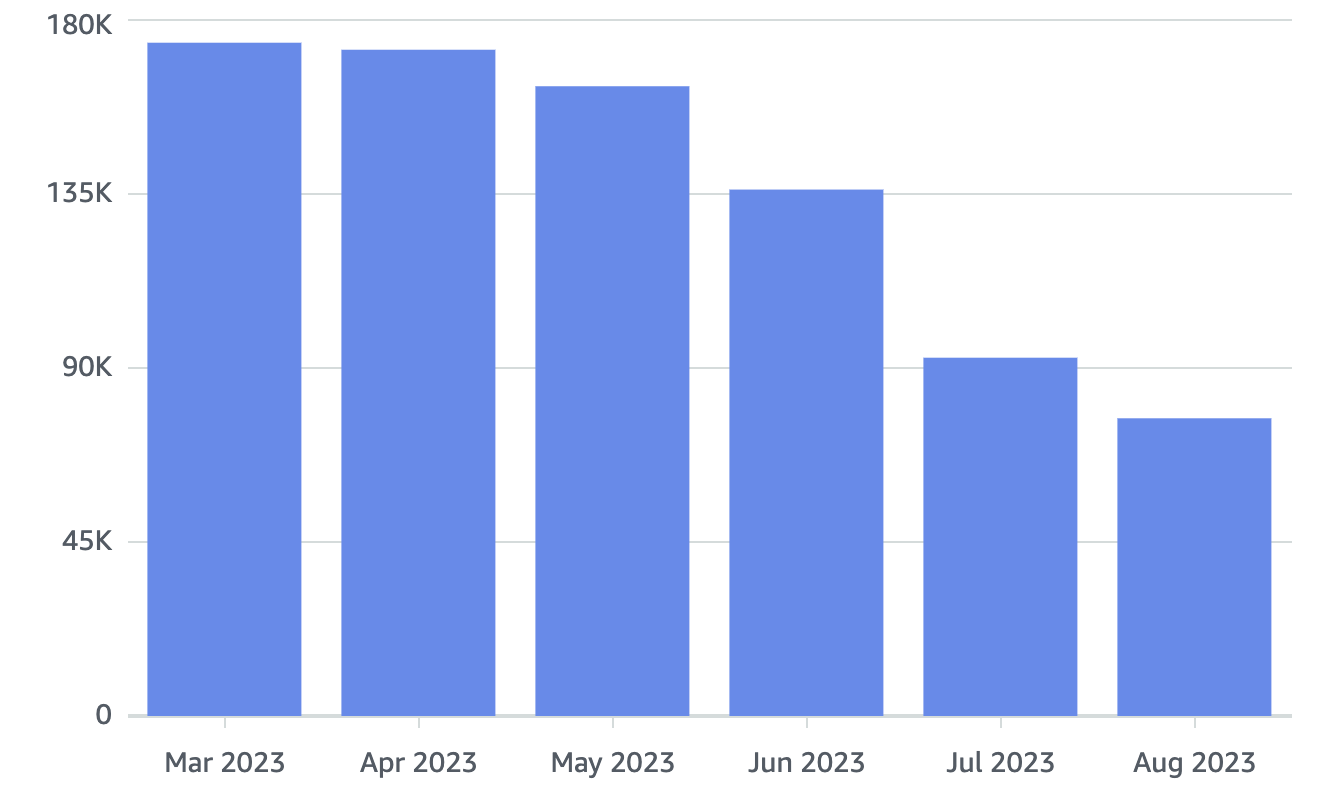

- DHH:下云省下千万美元,比预想的还要多!

- 下云奥德赛:该放弃云计算了吗?

- FinOps终点是下云

- 云计算为啥还没挖沙子赚钱?

- 云SLA是不是安慰剂?

- 云盘是不是杀猪盘?

- 垃圾腾讯云CDN:从入门到放弃?

- 驳《再论为什么你不应该招DBA》

- 范式转移:从云到本地优先

- 云数据库是不是智商税

- DBA还是一份好工作吗?

- 云RDS:从删库到跑路

- DBA会被云淘汰吗?

云计算泥石流:文章导航

世人常道云上好,托管服务烦恼少。我言云乃杀猪盘,溢价百倍实厚颜。

赛博地主搞垄断,坐地起价剥血汗。运维外包嫖开源,租赁电脑炒概念。

世人皆趋云上游,不觉开销似水流。云租天价难为持,开源自建更稳实。

下云先锋把路趟,引领潮流一肩扛。不畏浮云遮望眼,只缘身在最前线。

曾几何时,“上云“近乎成为技术圈的政治正确,整整一代应用开发者的视野被云遮蔽。就让我们用实打实的数据分析与亲身经历,讲清楚公有云租赁模式的价值与陷阱 —— 在这个降本增效的时代中,供您借鉴与参考。

下云案例篇

人仰马翻篇

基础资源篇

记一次阿里云 DCDN 加速仅 32 秒就欠了 1600 的问题处理(扯皮)

商业模式篇

RDS批判篇

滑稽肖像篇

性学家,化学家,软件行业里的废话文学家 【转载】

牙膏云?您可别吹捧云厂商了【转载】

互联网技术大师速成班 【转载】

互联网故障背后的草台班子们【转载】

云厂商眼中的客户:又穷又闲又缺爱【转载】

商业评论篇

阿里云降价背后折射出的绝望【转载】

门内的国企如何看门外的云厂商【转载】

卡在政企客户门口的阿里云【转载】

腾讯云阿里云做的真的是云计算吗?【转载】

主题分类

亚马逊

- 2024-01-13 花钱买罪受的大冤种:逃离云计算妙瓦底

- 2024-05-23 Ahrefs不上云,省下四亿美元

- 2024-04-30 云上黑暗森林:打爆云账单,只需要S3桶名

- 2024-03-26 Redis不开源是“开源”之耻,更是公有云之耻

- 2024-03-14 RDS阉掉了PostgreSQL的灵魂

- 2023-12-27 扒皮对象存储:从降本到杀猪

- 2023-11-17 重新拿回计算机硬件的红利

- 2023-10-29 是时候放弃云计算了吗?

- 2023-07-07 下云奥德赛

阿里云

- 2024-11-11 支付宝崩了?双十一整活王又来了

- 2024-10-07 记一次阿里云 DCDN 加速仅 32 秒就欠了 1600 的问题处理(扯皮) 转

- 2024-09-17 阿里云:高可用容灾神话的破灭

- 2024-09-15 阿里云故障预报:本次事故将持续至20年后?

- 2024-09-10 阿里云新加坡可用区C故障,网传机房着火

- 2024-08-20 草台班子唱大戏,阿里云RDS翻车记

- 2024-07-02 阿里云又挂了,这次是光缆被挖断了?

- 2024-04-22 云计算:菜就是一种原罪

- 2024-04-20 taobao.com 证书过期

- 2024-04-02 牙膏云?您可别吹捧云厂商了

- 2024-04-01 罗永浩救不了牙膏云

- 2024-03-21 迷失在阿里云的年轻人

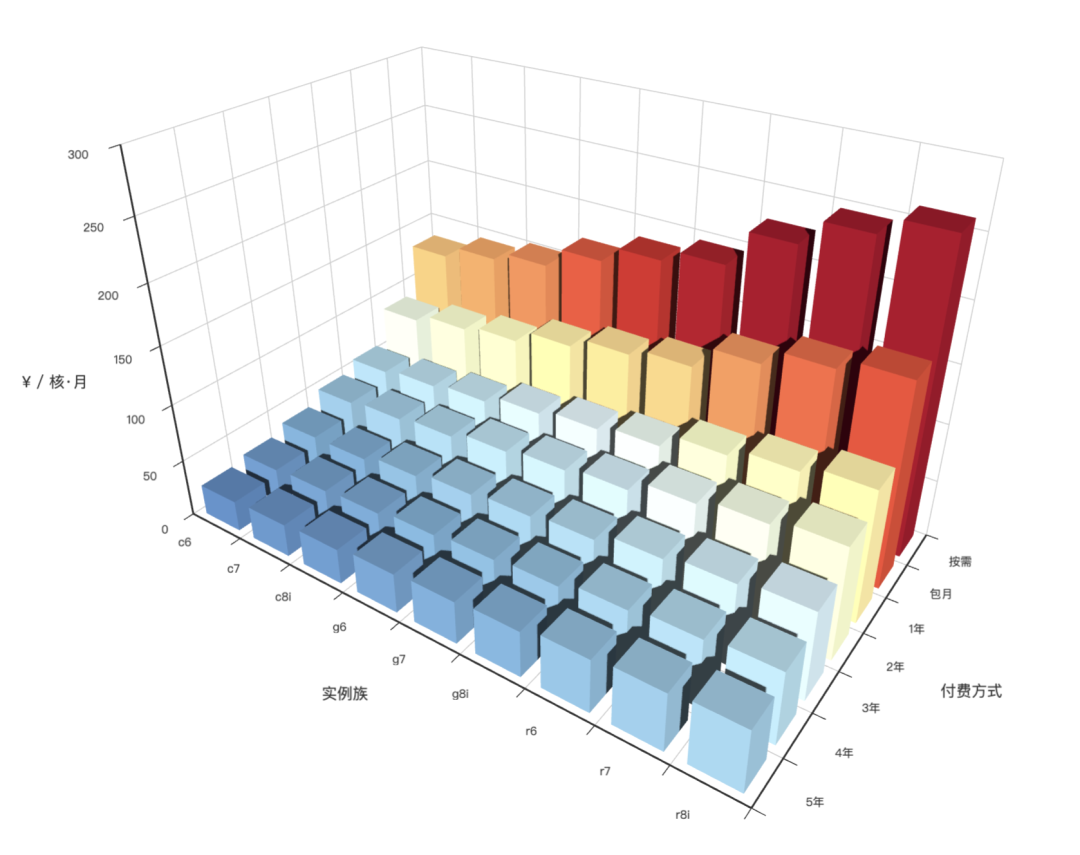

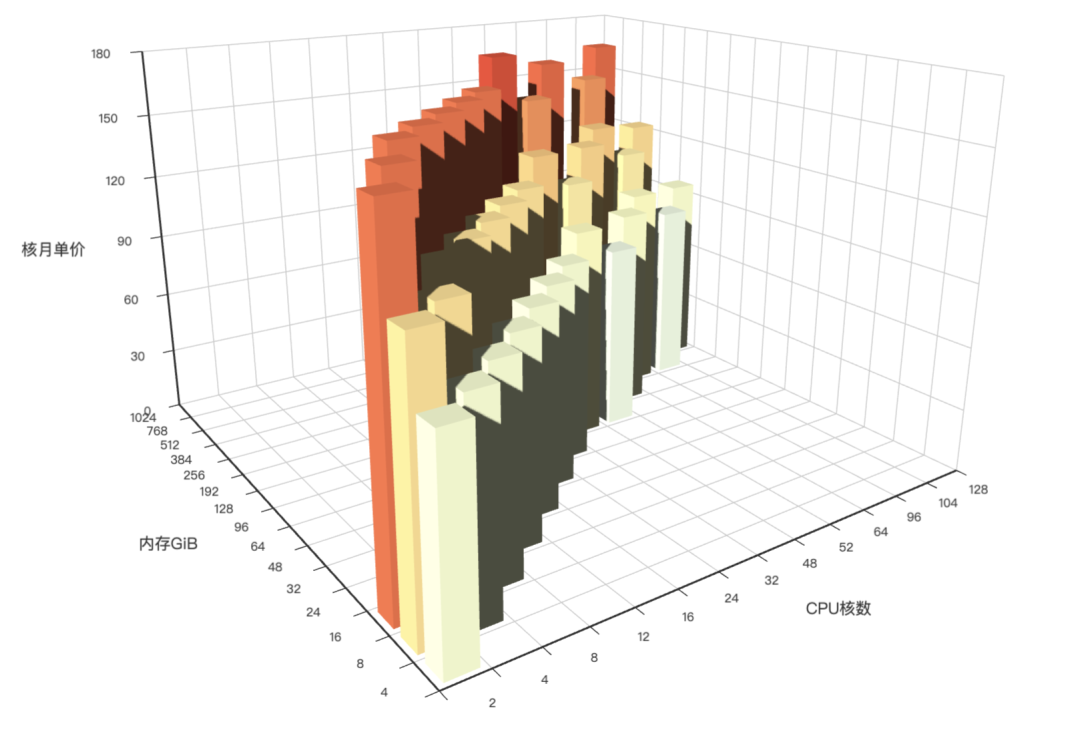

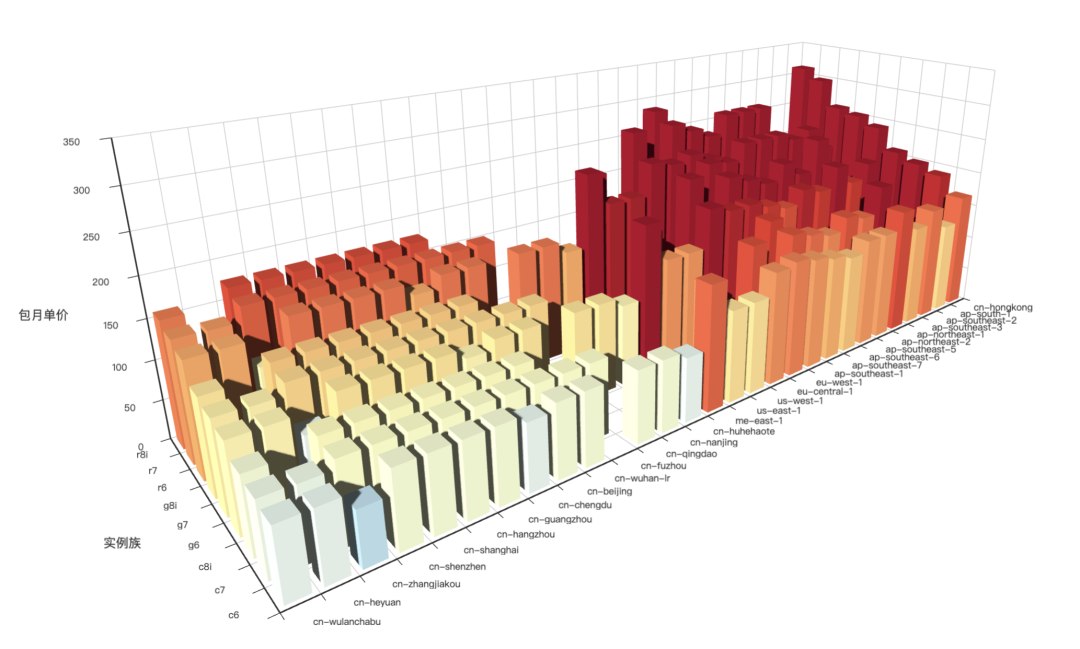

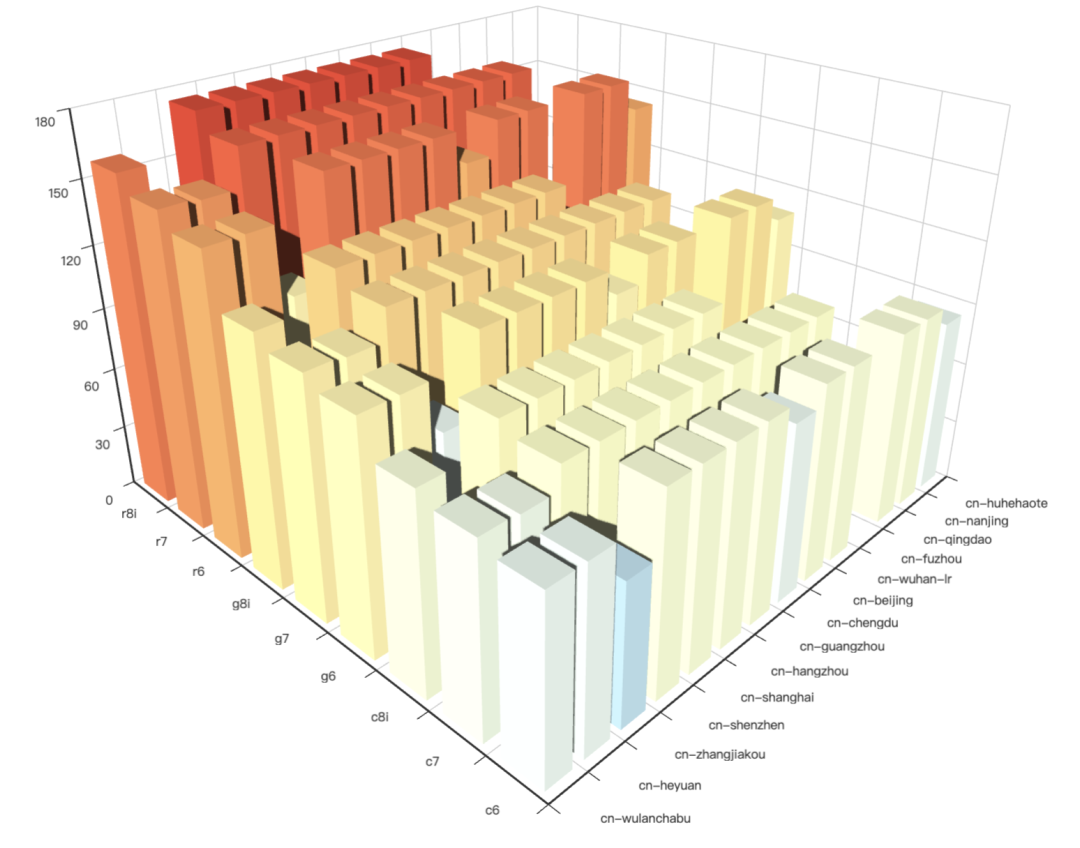

- 2024-03-10 剖析云算力成本,阿里云真的降价了吗?

- 2023-11-29 从降本增笑到真的降本增效

- 2023-11-27 阿里云周爆:云数据库管控又挂了

- 2023-11-14 我们能从阿里云史诗级故障中学到什么

- 2023-11-12 【阿里】云计算史诗级大翻车来了

- 2023-11-09 阿里云的羊毛抓紧薅,五千的云服务器三百拿

- 2023-11-06 云厂商眼中的客户:又穷又闲又缺爱 马工

腾讯云

- 2024-04-17 腾讯真的走通云原生之路了吗? 马工

- 2024-04-14 我们能从腾讯云故障复盘中学到什么?

- 2024-04-12 云SLA是安慰剂还是厕纸合同?

- 2024-04-09 腾讯云:颜面尽失的草台班子

- 2024-04-08 【腾讯】云计算史诗级二翻车来了

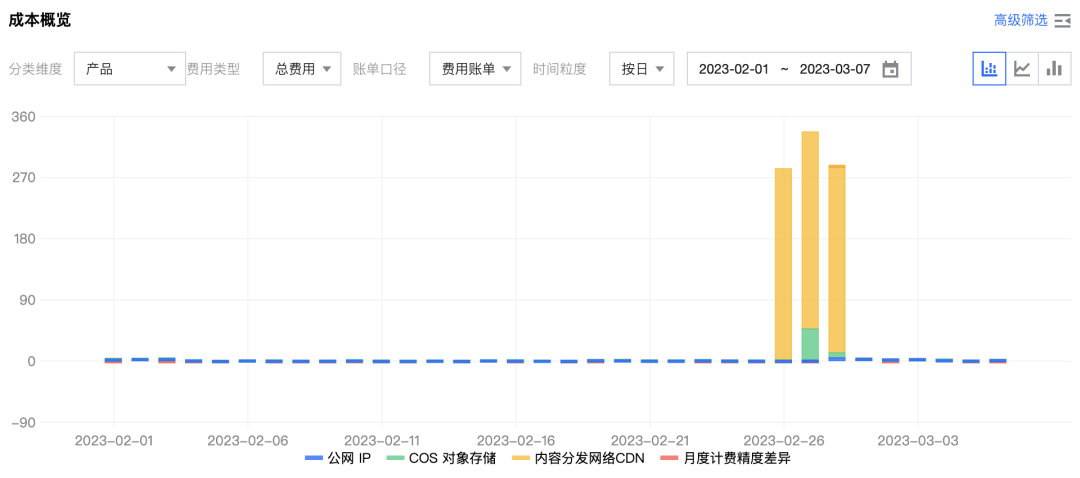

- 2023-03-08 垃圾腾讯云CDN:从入门到放弃

Cloudflare

- 2024-04-23 赛博菩萨Cloudflare圆桌访谈与问答录

- 2024-04-03 吊打公有云的赛博佛祖 Cloudflare

- 2024-05-11 删库:Google云爆破了大基金的整个云账户

Microsoft

- 2024-07-23 全球Windows蓝屏:甲乙双方都是草台班子

Oracle OCI

- 2025-03-29 Oracle云大翻车:6百万用户认证数据泄漏

其他

- 2025-03-24 今日大瓜:赛博佛祖与赛博菩萨大打出手

- 2024-12-14 OpenAI全球宕机复盘:K8S循环依赖

- 2024-11-10 草台回旋镖:Apple Music证书过期服务中断

- 2024-10-04 沸沸扬扬!!!某国内互联网公司,信息泄露导致国外用卡被盗刷。。。

- 2024-10-03 本号收到美团投诉:美团没有存储外卡CVV等敏感信息

- 2024-10-02 某平台CVV泄露:你的信用卡被盗刷了吗?

- 2024-08-21 这次轮到WPS崩了

- 2024-09-19 我们能从网易云音乐故障中学到什么?

- 2024-08-15 GitHub全站故障,又是数据库上翻的车?

DHH

- 2025-03-30 DHH下云:S3晚搬一天,就多花四万

- 2024-10-19 DHH:下云超预期,能省一个亿

- 2024-09-07 先优化碳基BIO核,再优化硅基CPU核

- 2024-01-16 单租户时代:SaaS范式转移

- 2024-01-10 拒绝用复杂度自慰,下云也保稳定运行

- 2023-12-22 半年下云省千万:DHH下云FAQ答疑

- 2023-10-29 是时候放弃云计算了吗?

- 2023-07-07 下云奥德赛

DBA vs RDS

- 2024-08-20 草台班子唱大戏,阿里云RDS翻车记

- 2024-03-14 RDS阉掉了PostgreSQL的灵魂

- 2024-02-02 DBA会被云淘汰吗?

- 2023-03-01 驳《再论为什么你不应该招DBA》

- 2023-02-03 范式转移:从云到本地优先

- 2023-01-31 云数据库是不是杀猪盘

- 2023-01-31 你怎么还在招聘DBA? 马工

- 2022-05-16 云RDS:从删库到跑路

开源软件

- 2024-10-17 WordPress社区内战:论共同体划界问题

- 2024-08-30 ElasticSearch又重新开源了???

- 2024-03-26 Redis不开源是“开源”之耻,更是公有云之耻

- 2023-02-03 范式转移:从云到本地优先

云计算泥石流专栏原文

- 2025-05-13 深度分析:迪奥数据泄露事件,云配置失当的锅?

- 2025-04-29 10万用户的软件,因腾讯云欠费2元灰飞烟灭?

- 2025-04-15 AWS 东京可用区故障:影响13项服务

- 2025-04-16 云计算不能做成云算计之一:云行贿必须清理 马工

- 2025-04-16 CVE惨遭断奶,美帝自毁安全长城

- 2025-04-02 Shopify:愚人节真的翻车了

- 2025-03-30 DHH下云:S3晚搬一天,就多花四万

- 2025-03-29 Oracle云大翻车:6百万用户认证数据泄漏

- 2025-03-24 今日大瓜:赛博佛祖与赛博菩萨大打出手

- 2024-01-13 花钱买罪受的大冤种:逃离云计算妙瓦底

- 2024-12-14 OpenAI全球宕机复盘:K8S循环依赖

- 2024-11-11 支付宝崩了?双十一整活王又来了

- 2024-11-10 草台回旋镖:Apple Music证书过期服务中断

- 2024-10-19 DHH:下云超预期,能省一个亿

- 2024-10-17 WordPress社区内战:论共同体划界问题

- 2024-10-07 记一次阿里云 DCDN 加速仅 32 秒就欠了 1600 的问题处理(扯皮) 转

- 2024-09-17 阿里云:高可用容灾神话的破灭

- 2024-09-15 阿里云故障预报:本次事故将持续至20年后?

- 2024-09-14 阿里云盘灾难级BUG:能看别人照片?

- 2024-09-10 阿里云新加坡可用区C故障,网传机房着火

- 2024-08-21 这次轮到WPS崩了

- 2024-08-20 草台班子唱大戏,阿里云RDS翻车记

- 2024-09-19 我们能从网易云音乐故障中学到什么?

- 2024-08-15 GitHub全站故障,又是数据库上翻的车?

- 2024-07-23 全球Windows蓝屏:甲乙双方都是草台班子

- 2024-07-02 阿里云又挂了,这次是光缆被挖断了?

- 2024-06-22 性学家,化学家,软件行业里的废话文学家 马工

- 2024-05-23 Ahrefs不上云,省下四亿美元

- 2024-05-11 删库:Google云爆破了大基金的整个云账户

- 2024-04-30 云上黑暗森林:打爆云账单,只需要S3桶名

- 2024-04-23 赛博菩萨Cloudflare圆桌访谈与问答录

- 2024-04-22 云计算:菜就是一种原罪

- 2024-04-20 taobao.com证书过期

- 2024-04-17 腾讯真的走通云原生之路了吗? 马工

- 2024-04-14 我们能从腾讯云故障复盘中学到什么?

- 2024-04-12 云SLA是安慰剂还是厕纸合同?

- 2024-04-09 腾讯云:颜面尽失的草台班子

- 2024-04-08 【腾讯】云计算史诗级二翻车来了

- 2024-04-03 吊打公有云的赛博佛祖 Cloudflare

- 2024-04-02 牙膏云?您可别吹捧云厂商了

- 2024-04-01 罗永浩救不了牙膏云

- 2024-03-26 Redis不开源是“开源”之耻,更是公有云之耻

- 2024-03-25 公有云厂商卖的云计算到底是什么玩意? 马工

- 2024-03-21 迷失在阿里云的年轻人

- 2024-03-14 RDS阉掉了PostgreSQL的灵魂

- 2024-03-13 云计算反叛军联盟

- 2024-03-10 剖析云算力成本,阿里云真的降价了吗?

- 2024-02-02 DBA会被云淘汰吗?

- 2024-01-16 单租户时代:SaaS范式转移

- 2024-01-09 互联网技术大师速成班 马工

- 2024-01-04 门内的国企如何看门外的云厂商 Leo

- 2023-12-29 卡在政企客户门口的阿里云 马工

- 2024-01-12 云计算泥石流

- 2024-01-10 拒绝用复杂度自慰,下云也保稳定运行

- 2023-12-27 扒皮对象存储:从降本到杀猪

- 2023-12-22 半年下云省千万:DHH下云FAQ答疑

- 2023-12-06 互联网故障背后的草台班子们 马工

- 2023-11-29 从降本增笑到真的降本增效

- 2023-11-27 阿里云周爆:云数据库管控又挂了

- 2023-11-17 重新拿回计算机硬件的红利

- 2023-11-14 我们能从阿里云史诗级故障中学到什么

- 2023-11-12 【阿里】云计算史诗级大翻车来了

- 2023-11-09 阿里云的羊毛抓紧薅,五千的云服务器三百拿

- 2023-11-06 云厂商眼中的客户:又穷又闲又缺爱 马工

- 2023-10-29 是时候放弃云计算了吗?

- 2023-07-08 云计算泥石流合集 —— 用数据解构公有云

- 2023-07-07 下云奥德赛

- 2023-07-06 FinOps终点是下云

- 2023-06-14 云计算为啥还没挖沙子赚钱?

- 2023-06-12 云SLA是不是安慰剂?

- 2023-04-28 杀猪盘真的降价了吗?

- 2023-03-15 公有云是不是杀猪盘?

- 2023-03-08 垃圾腾讯云CDN:从入门到放弃

- 2023-03-01 驳《再论为什么你不应该招DBA》

- 2023-02-03 范式转移:从云到本地优先

- 2023-01-31 云数据库是不是杀猪盘

- 2023-01-31 你怎么还在招聘DBA? 马工

- 2023-01-30 云数据库是不是智商税

- 2022-05-16 云RDS:从删库到跑路

下云之歌

甜美朋克:https://app.suno.ai/song/98f03bcd-5b4d-428f-a3f1-f581910d2e56

滑稽上口:https://app.suno.ai/song/308069a2-9d97-41c0-b1a9-ce14eb137ffe

新纪元:https://app.suno.ai/song/81e1b275-4652-4442-aa47-3127171d874d

死亡摇滚:https://app.suno.ai/song/6c203c72-0ce7-4b63-a447-a63b31080776

阿里云rds_duckdb:致敬还是抄袭?

看到有人发了一篇《天上的“PostgreSQL” 说 地上的 PostgreSQL 都是“小垃圾”》, 文中大肆宣扬阿里云 RDS 新增了一个 rds_duckdb 插件,可以用来做 OLAP 分析, 并进一步带出“云 RDS PG 高高在上、PostgreSQL 开源只是‘小垃圾’”的极端观点,令人不适。

我对 DuckDB 及衍生的 pg_duckdb 扩展都很熟悉。

其实无论阿里云或其他云厂商在技术层面如何整合开源,只要合法合理合情,我个人都乐见商业与开源互利共赢。

但如果有人在宣传层面贬低开源、抬高云服务,甚至暗示开源价值低下,那我确实有必要出来说两句以正视听。

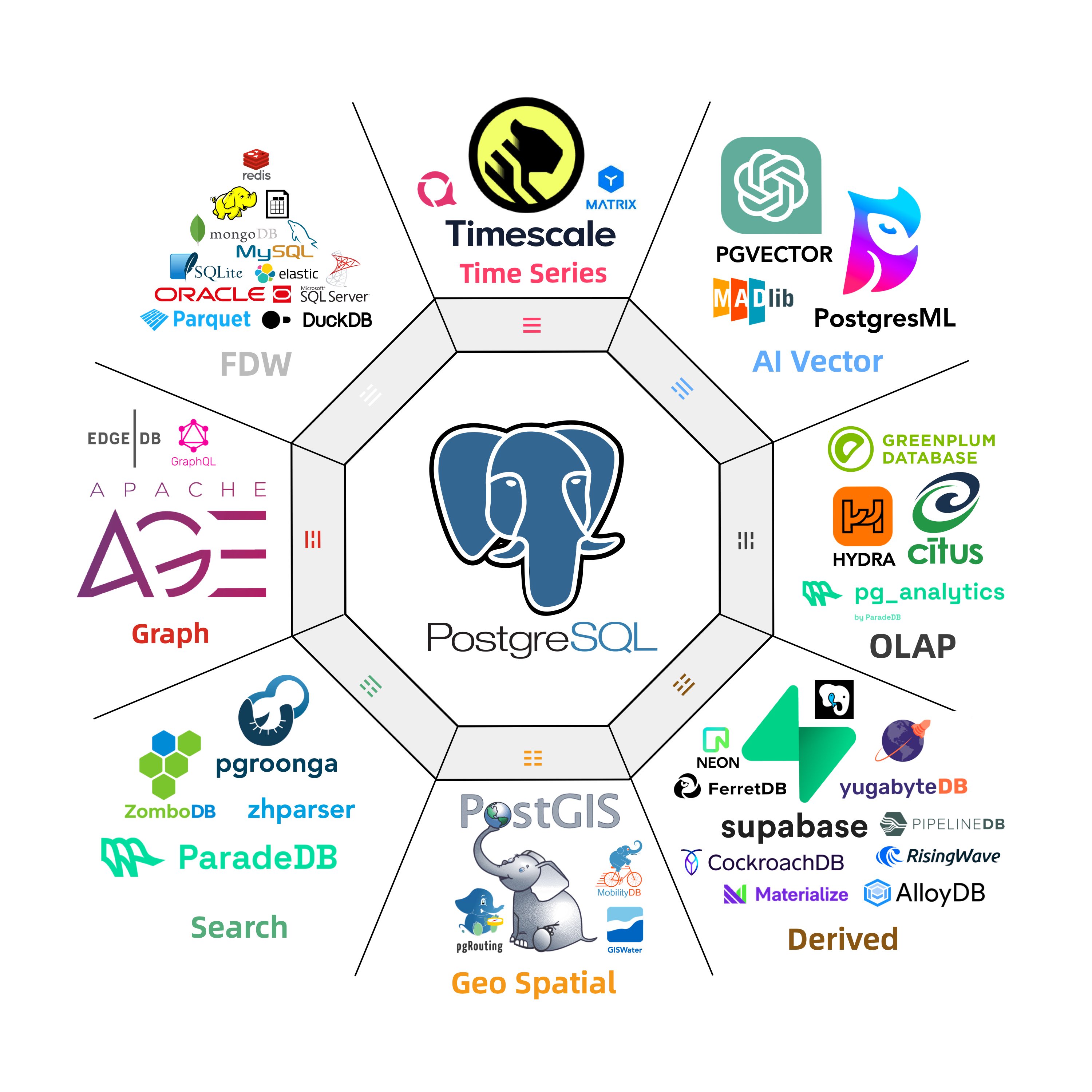

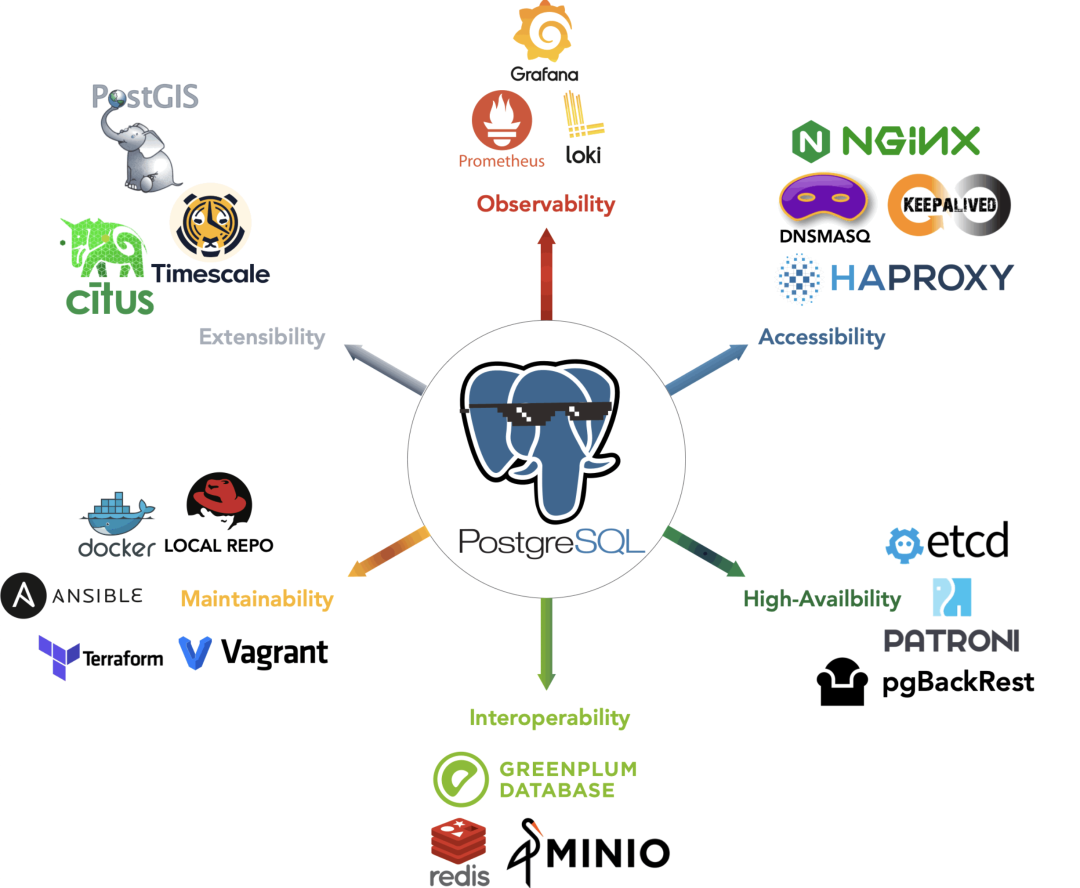

关于 PG DuckDB

DuckDB 是一个高性能的嵌入式 OLAP 分析数据库,我已经关注它很久了。 在一年前我写的《PostgreSQL正在吞噬数据库世界》一文中, 着重介绍了 PG 在 OLAP 领域缝合 DuckDB 实现真HTAP的巨大潜力,并成功点燃了全球 PG 社区缝合 DuckDB 的热情。 PG 社区中涌现出好几个将 DuckDB 整合进 PG 的扩展玩家,而这甚至成为了 2024 年数据库领域的一个标志性事件。

在这些整合 PostgreSQL 与 DuckDB 的项目中,由 DuckDB 官方母公司 MotherDuck 联合 PG OLAP 生态初创企业 Hydra 共同开发的 pg_duckdb,可谓最具潜力。

我在其公开发布的第一时间就着手跟进,不仅为 EL 8/9、Debian 12、Ubuntu 22/24 等多种 Linux 发行版的 x86 和 ARM 架构制作并分发了对应的 RPM/DEB 包,还在日常使用和测试中投入了大量精力。

事实上,在我维护的两百多个 PG 扩展插件包里,与 DuckDB 相关的四个扩展是最令我头痛却又最让我兴奋的:pg_duckdb 以及基于它衍生的 pg_mooncake ——

硕大无朋的依赖,复杂的编译过程,多个 libduckdb 的冲突,兼容不同操作系统、不同大版本的 PostgreSQL,难度可想而知。

但我相信,这种真正实现 “OLTP + OLAP” 深度融合的探索,十分难得,也值得全力投入。

致敬还是抄袭?

当去年十月阿里云宣布在 RDS 上发布 rds_duckdb 时,我最初的想法是“这总算是好事”。

毕竟 pg_duckdb 刚开源两个月,就有云厂商跟进,多少还是在推动产业发展。

但很遗憾的是,rds_duckdb并没有开源,具体实现、细节接口,我们无从得知。

不过,从它展现给外部的功能接口上,多少能看出和 pg_duckdb 的相似之处:

比如,早期第一个公开发布的 pg_duckdb 版本,核心接口非常简单,就是 SET pg_duckdb.execution = on;,然后就可以直接用 DuckDB 引擎来查询 PostgreSQL 表了。

而 rds_duckdb 的核心接口就是 SET rds_duckdb.execution = on;,不过名字从 pg_duckdb 变为 rds_duckdb。

结合 rds_duckdb 公开发布的时间点(pg_duckdb 问世后两个月),再加上它的操作方式与行为表现高度相似,

可以合理猜想:rds_duckdb 至少在接口与理念上借鉴了 pg_duckdb。至于代码层面是否有直接引用,由于 rds_duckdb并未开源,我们也无可考证。

当然,rds_duckdb 自己也“加了点料”,比如支持 PG 12 / 13,多了几个管理 DuckDB 表的小函数(拷贝数据、查看大小等),但技术上并不复杂。

要我说,这个功能现在只能算是一个简易原型,远无法跟后续版本的 pg_duckdb 以及基于它构建的 pg_mooncake 相提并论。

换句话说,以现在这个“半成品”水平,谈不上什么“剽窃”或“抄袭”。“抄袭”也得抄得更像样一点吧?

然而,如果说它是“致敬”,却也让人无从认同。因为无论是在接口文档还是其他地方,我们都看不到任何对 pg_duckdb,甚至对 duckdb 的署名或感谢。

MIT协议的义务

无论是 duckdb 本身还是基于它的 pg_duckdb,使用的都是非常宽松的 MIT 协议。

因此如果使用了 MIT 项目的代码,只要遵循 MIT 协议的基本要求

—— (1)保留原始版权声明;(2)保留 MIT 许可证文本 —— 就能够做到合规。

这个要求说白了就是:人家写了代码白给你用,你别把人家作者名字删了就好了。

不过,让人玩味的是,我在阿里云 RDS 文档里搜索翻找了半天,也没看到任何地方留存着 DuckDB 的版权声明或许可证文本。

假如 rds_duckdb 并未直接使用 pg_duckdb 的代码,倒还可以解释说“我没用你的东西”,而且知识产权只保护具体的代码而不保护想法,所以不提也就算了。

—— 但你肯定用了 MIT 协议的 duckdb 对吧? 那么 DuckDB 的 Credit 和 License 在哪里呢?

尽管法律层面最主要的合规点仍是保留版权和许可证文本,但云厂商提供的是在线服务,“交付物”是一个URL和文档,而非 RPM / DEB 软件包,所以用户能接触到的材料就是官方文档。 如果在说明文档等对外宣传中,有意无意地淡化或隐去所使用的第三方开源项目,使得公众认为“这是完全自研或完全原创”,那么在舆论与道德层面会被质疑为“抄袭”或“剽窃”

类似的例子比比皆是,先前“何同学”开源抄袭风波、抖音美摄案、“高春辉诉阿里云抄袭IPIP 案,” 以及大家耳熟能详的鹅厂。更别提有大把“国产数据库公司”将开源的 PG 换皮套壳魔改称为“100%纯自研”数据库。 都是因为不尊重开源版权、把别人的东西当成“自研”,最终声名狼藉、备受质疑。

在我看来,“从开源社区取经,做成服务售卖”本来不丢人,这在全球范围早就是商业惯例,无可厚非; 可“拿了人家的东西,当成自己的发明”就会令人不齿。 因为这会留下极其恶劣的印象:企业既没有对外披露应有的来源,也没为社区带来多少贡献,却能坐享其成,大肆盈利。 如此一来,开源作者失去了正当的尊重与声望,用户也被蒙在鼓里,这种行为不仅违背了开源精神,更破坏了生态的可持续发展。

对开源的攻讦

说实话,我并不清楚那篇文章的公众号和阿里云之间是否有某种合作关系,也不知道他们为何如此排斥开源。从其近期的文章风格看出,一连串“捧云踩开源”桥段已经不是头一回了: 例如《天上的“PostgreSQL” 说 地上的 PostgreSQL 都是“小垃圾”》将开源的 PostgreSQL 视为垃圾; 《云原生数据库砸了 K8S云自建数据库的饭碗》说K8S自建数据库是垃圾, 以及《开源软件是心怀鬼胎的大骗局 – 开源软件是人类最好的正能量 — 一个人的辩论会》,体现出对开源软件的偏见。

这种对开源的偏见与攻讦,实在令人费解。要知道,恰恰是因为开源,一系列核心基础软件才得以百花齐放、加速迭代。 Deepseek 的成功也好,PostgreSQL 越做越大也好,都是脚踏实地站在前人开源巨人的肩膀之上。 各大云厂商的“云数据库”大多直接或间接基于开源数据库衍生、优化、深度整合,没了开源,他们的产品根基都将不复存在。

然而时至今日,云厂商从开源社区赚得盆满钵满,却很少进行对等贡献或反馈,导致围绕“云厂商白嫖开源”这个问题,矛盾正逐渐加剧。 但公道自在人心,也并不是所有云厂商都只知道白拿不还:比如 AWS RDS 在这两年投入资源推动 PG 生态的 pgvector 成为向量数据库扩展的事实标准,开源了 log_fdw、pgcollection、pgtle 等多款插件,对社区的反哺社区也是看在眼里的。

再看看阿里云 RDS,似乎仍在拘泥于“从开源社区和初创公司碗里捞食”的老思路,吃相不体面也就罢了,问题在于致敬都致不到精髓,产品做得简陋不堪,难以给用户真正的信心。 实际上,对用户来说,不能容忍的不是“云厂商利用开源”,而是“云厂商家大业大,却端出一个半吊子东西敷衍了事”,搞得像个草台班子,丢的还是阿里云自己的脸。

参考阅读

花钱买罪受的大冤种:逃离云计算妙瓦底

本文来自真实咨询案例,如有雷同,纯属云厂商和钱包缘分太深。

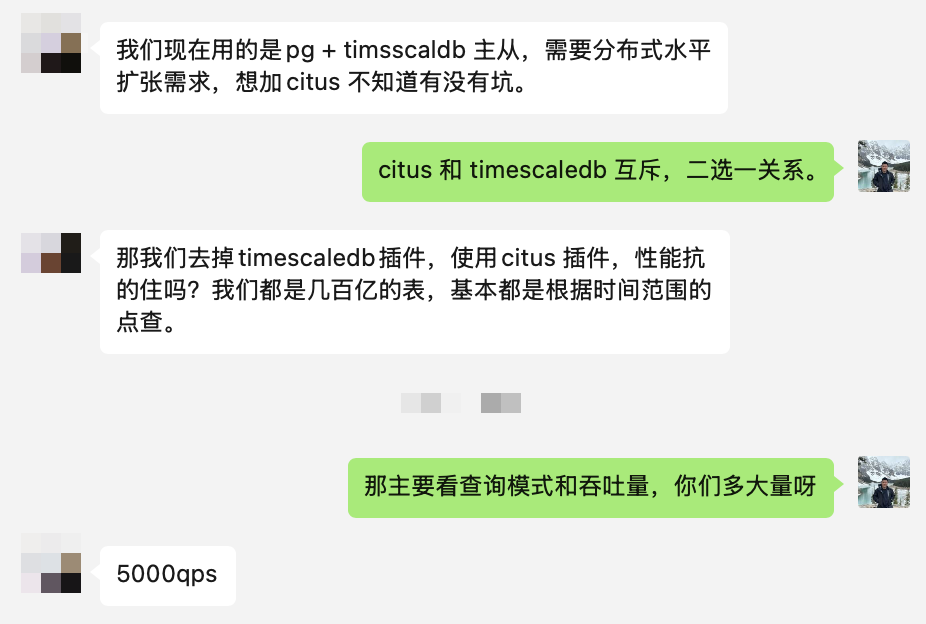

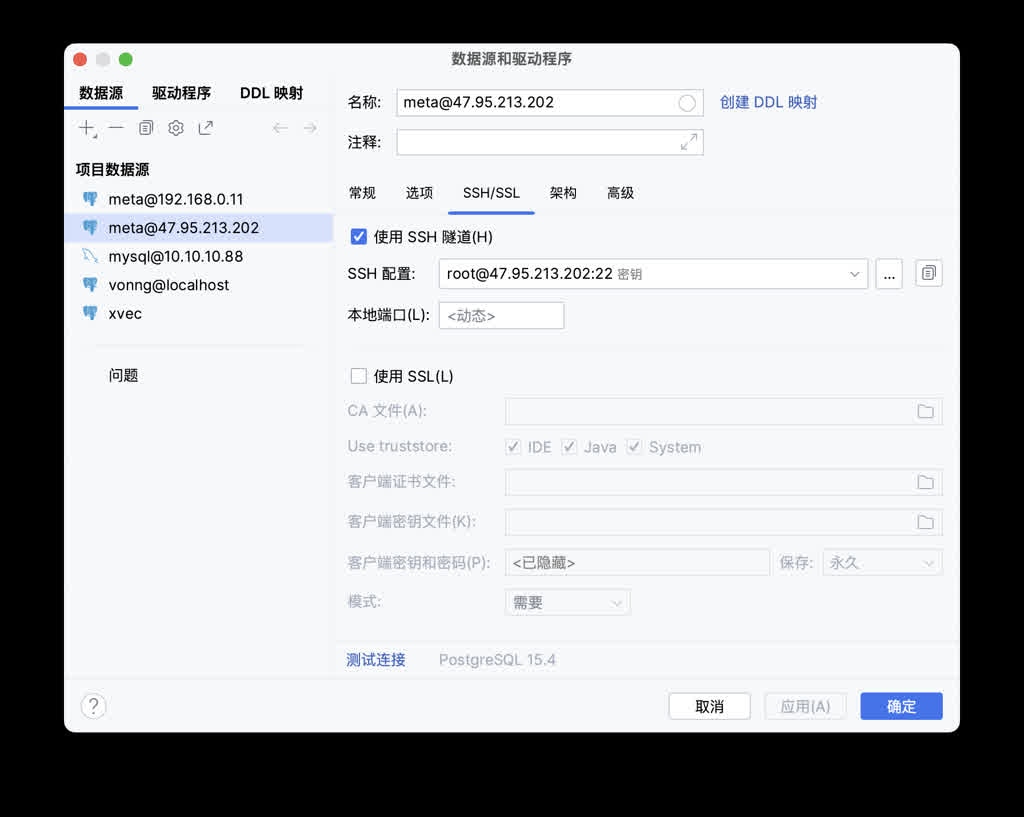

昨天一用户来咨询,问我 PostgreSQL 分布式数据库扩展 Citus 有没有什么坑,Pigsty 支持不。我想好家伙都要上分布式了,那你这数据量应该挺大了?

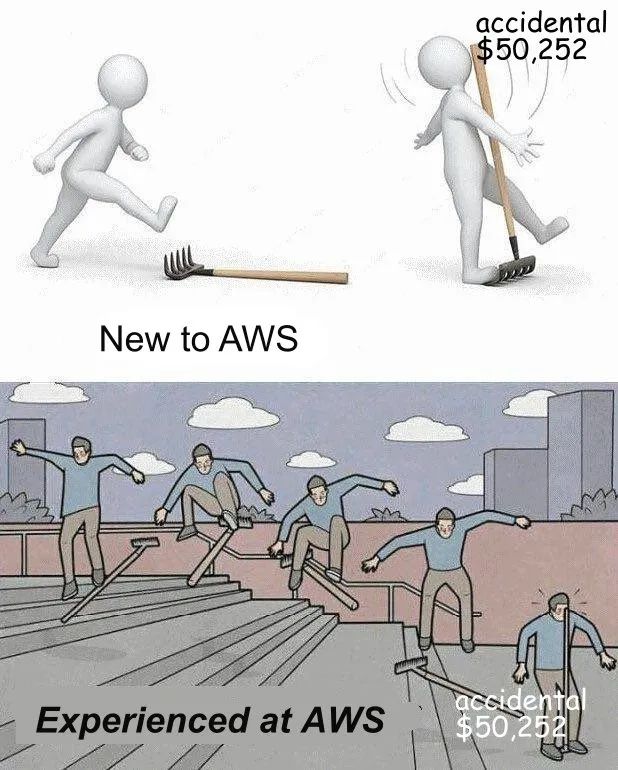

结果令人啼笑皆非,原来他并不是因为数据大到要冲破服务器柜门,而是栽在了 AWS EBS 云盘 的“杀猪盘”套路里。

捂着钱包慌忙上分布式数据库

“Citus 分布式数据库坑多吗?Pigsty 支持不?” 前两天一个用户火急火燎地跑来咨询,开口就提“Citus”。 我心想:都要上分布式了,想必有着海量数据、疯狂QPS,一定是个狠角色?估计得几百 TB 起步吧?

开源分布式扩展 Citus 在 PostgreSQL 生态里确实名气不小,尤其被微软收购后,在 Azure 上干脆还被包装成了 CosmosDB / Hyperscale PG。让 PG 原地升级成分布式数据库,一听就很酷炫。

但是老朋友们也知道,我是不太待见分布式数据库的。(《分布式数据库是伪需求吗》), 分布式数据库的基本原则就是如果你的问题能在经典主从范围内搞定就不要去折腾分布式,所以例行公事我也要问问这到底得多大负载。

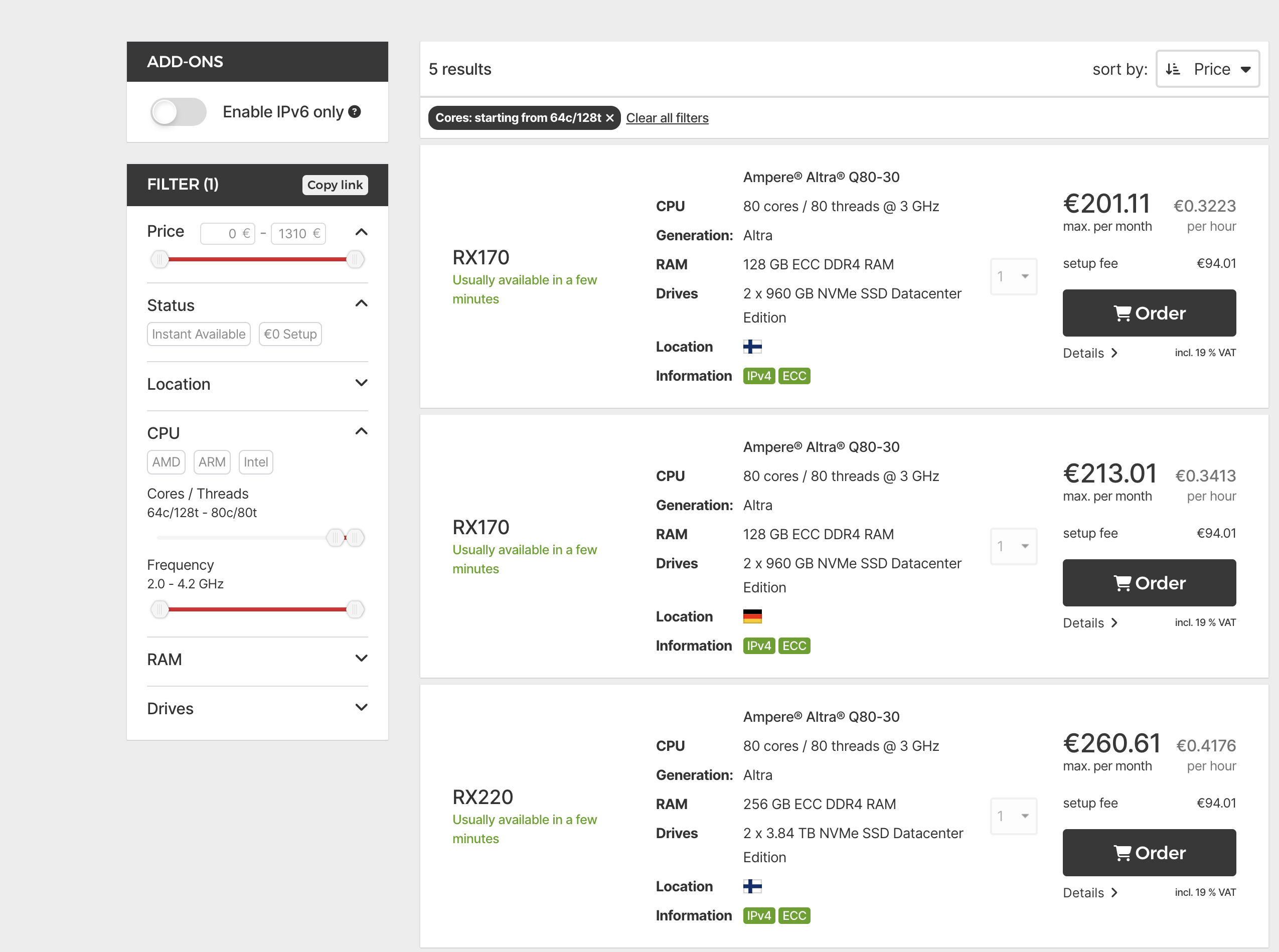

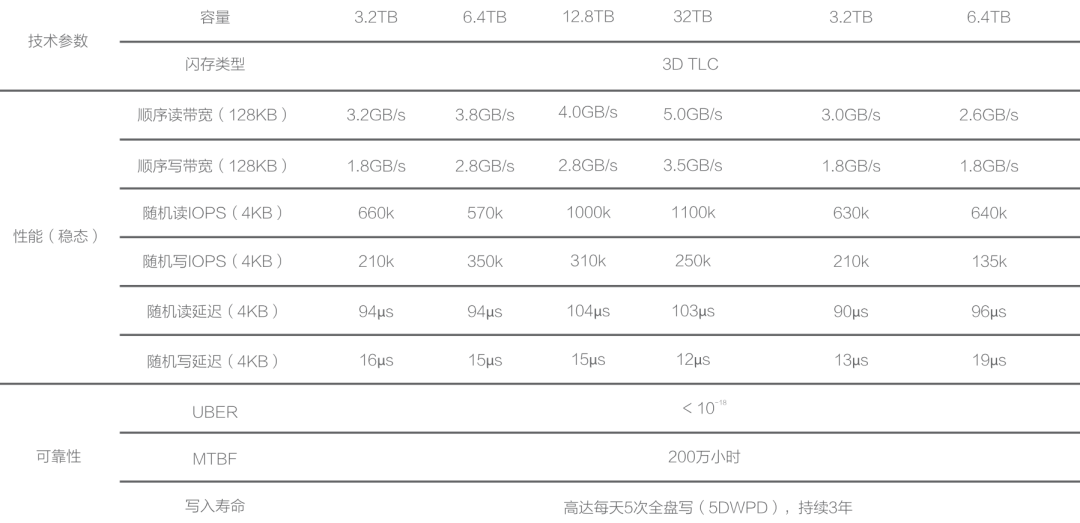

一问具体指标,数据量 60TB,用 TimescaleDB 扩展压缩后到 14 TB; QPS 5K,查询基本都是点查,扫个 100 条数据最多 —— 嗨,典型的 “数据量不算小,吞吐量不算大”。 不过这种量级在 2015 年也许要分布式,但在单卡 64 TB 的 2025 年,随便来个万把块的 NVMe SSD 跑个原生 PG 不就轻松解决了?单机 PG 随便每秒百万点查点写更是不在话下 —— 那么用分布式是什么原因?磁盘装不下了吗?

用户说:“因为会有突发流量,我要扩容加个从库,需要好几天。”

这就让我有点不解了:14TB 数据,万兆网卡环境下一次同步备份也就四五个小时吧? 我用 2016 年的破旧硬件限速跑,拖个3TB的从库都用不了半小时。 再说,加从库耗时主要取决于 I/O 能力,要是 I/O 卡脖子了,那扩容分布式也没用啊,分布式不也得做分区再平衡,能解决啥问题?

于是用户又说:“网络没问题,主要是磁盘成本太贵了:现在架构是一主三从,一个从库就一份数据,总共四份“。

用户还特意强调了两次 “磁盘太贵” 这就更让我纳闷了 —— 这年头企业级大容量 NVMe SSD 已经便宜的跟白菜价一样了,200 ¥/TB/年,你有这十几T数据,和折腾TimescaleDB,Citus人力,没钱买硬盘? 然后我看既然拖从库慢不是因为网络问题,那基本那就是磁盘问题了?磁盘这么慢,不会还在用 HDD机械硬盘吧,HDD 能贵到哪里去?

当然,因为用户都会手工拖从库,玩转 PG 扩展了,数据量也挺大,还敢上分布式,我已经默认他不是只会用云数据库,控制台点点点的菜鸟了。 但我还是想到了一种可能性 —— 难道说 …… 你买的是公有云厂商的 天价杀猪盘 ?

这位哥们说到:“是,我们现在用的 AWS 自建”。

嗨,破案了,又是一个花钱买罪受的杀猪盘受害用户。

天价杀猪盘,让动作都走形了

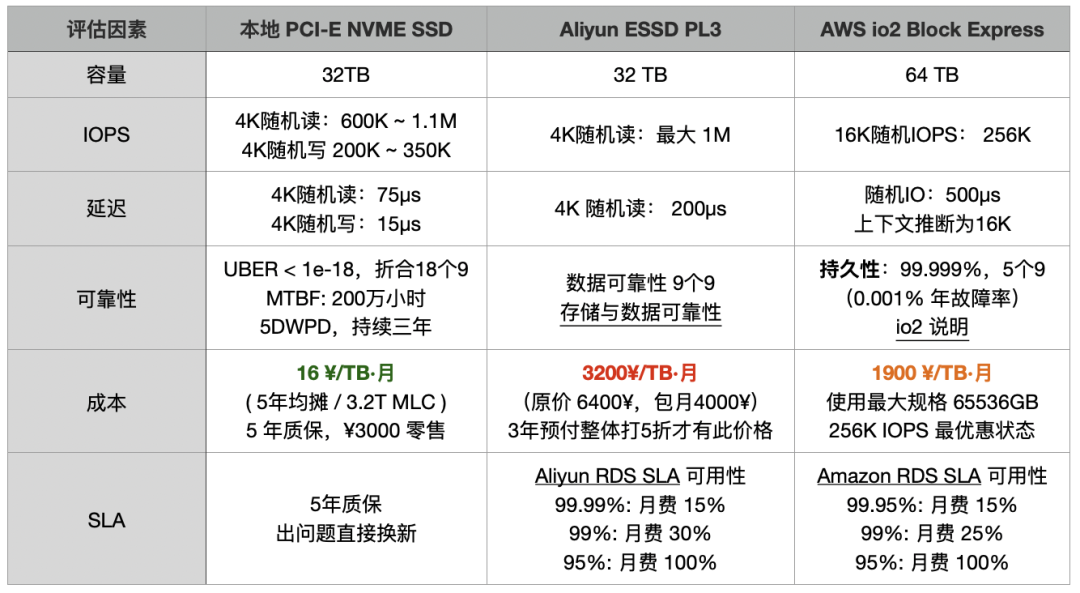

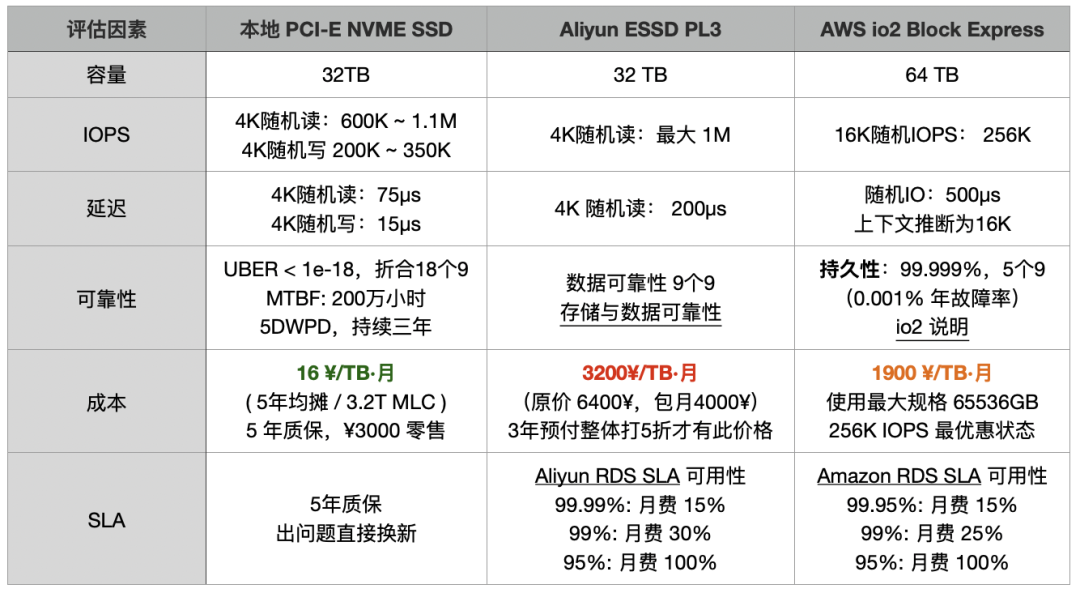

深聊后发现,用户在云上的开销非常惊人,光数据库毛估每年就有 200 万人民币,而得到的却是一个拉 14 TB 从库都要花几天的乞丐盘 —— 换算下来吞吐也就不到 100 MB/s。 一块 Gen5 NVMe SSD 的 12 GB/s 带宽,3M IOPS 性能,可以把这种乞丐盘轰杀成渣,而只要百分之一不到的成本。

按照 AWS EBS io2 盘折后价,大约 1900 元 / TB / 月,14 TB 数据 × 4 份 × 12 个月,光存储就差不多一年 128 万; 再加上 EC2 主机费用(通常云数据库存储/计算费用配比估算比例 2:1),每年 200 万往上走。 更离谱的是,花这么多钱换来的,却是性能奇差无比的存储。“交了保护费还得挨打”,可不就是这么回事?

为了省这笔云盘费,他们宁可把业务架构改得七零八落,或让运维花几天时间加从库,把无底洞似的人力和时间成本都扔进去。最后甚至想靠分布式数据库来“自救”,以为这样能省点存储费。 但结果呢?依然被“杀猪盘”牢牢锁死。每年烧掉 200 万,换来又慢又折腾的服务,还要自己额外承担把业务拆成拼图的成本。

那么追本溯源,分布式数据库的需求是怎么来的?—— 不是因为业务或数据真的需要分布式,而是云上块存储价格昂贵、性能太差。 这问题光靠换个“分布式数据库”或者用个 S3数据库是治不了本的。要真正治本,得先问:为什么云上的块存储会这么离谱?

其实,在 公有云是不是杀猪盘? 中,我早就告诉过你们答案。

花钱买罪受,别当云上大冤种

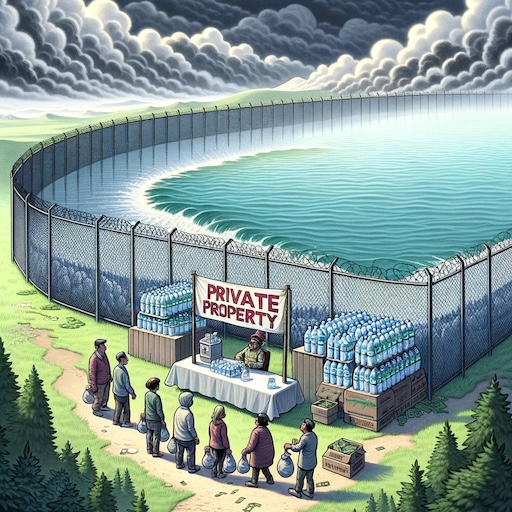

大型公有云厂商的核心套路无非是:用极便宜的小微实例和免费额度先把用户诱上云,再依托数据库等云 PaaS 技术壁垒锁定用户,用户上了规模之后“跑不掉”,只能留在云上持续出血,也就是所谓“杀猪盘”。

当然肯定会有人会说:大公有云厂商都有 Serverless ,或者弹性存储共享存储的云数据库服务嘛,肯定是这位客户 用云的姿势不对。 但实际上,去看看那些云数据库的荒谬定价吧!《云数据库是不是智商税》 ,用云数据库的成本只会比纯用资源的价格更震撼 —— 毕竟,PaaS 50% - 70% 的毛利可不是从天上掉下来的。

正如《下云奥德赛:是时候放弃云计算了吗?》中 DHH 所说: “在几个关键例子上,云的成本都极其高昂 —— 无论是大型物理机数据库、大型 NVMe 存储,或者只是最新最快的算力。 租生产队的驴所花的钱是如此高昂,以至于几个月的租金就能与直接购买它的价格持平。在这种情况下,你应该直接直接把这头驴买下来!”

以及 “被锁死困在亚马逊的云里,在实验新东西(比如固态硬盘)时,不得不忍受高昂到荒诞的定价带来的羞辱,这已经构成对核心价值观无法容忍的侵犯”。 我认为这一案例就是很典型的花钱买罪受 —— 花了每年两百万的巨款,得到的却是性能不堪入目的破烂。

而更关键地是,你以为云厂商会为你的业务负责到底吗? 用户耗费巨款买到的,除了加价一百倍卖给你的硬件,基本就是《草台班子唱大戏,阿里云RDS翻车记》这个案例里提供的售后支持 —— 你以为可以把锅甩给云厂商就能高枕无忧?真出了事,回旋镖还得打在自己头上。

别让这种付费续集不断上映

只要具备自建 PG 的能力,把数据库搬回自建机房或换家不捆绑 PaaS 的平价云,哪怕在 AWS 上直接选自带 Host Storage 的实例,都能把成本降好几个档次。 让我们做个小学三年级的算术:这类数据库实例放在云下,买几台托管物理机,一次性投入几十万、每年维护几万,五年甚至六七年都能用。

当然你要问搞不定怎么办?有不少 PG 专业供应商都提供技术咨询与技术支持服务,比如我就可以提供成熟且经过大规模实战验证的PG RDS解决方案, 在这一例中,我可以保证用20~40万一次性的硬件投入彻底解决每年两百万天价账单,同时性能还能比云上的乞丐盘高出 N 倍不止,而只收取只 15-40 万的咨询费。

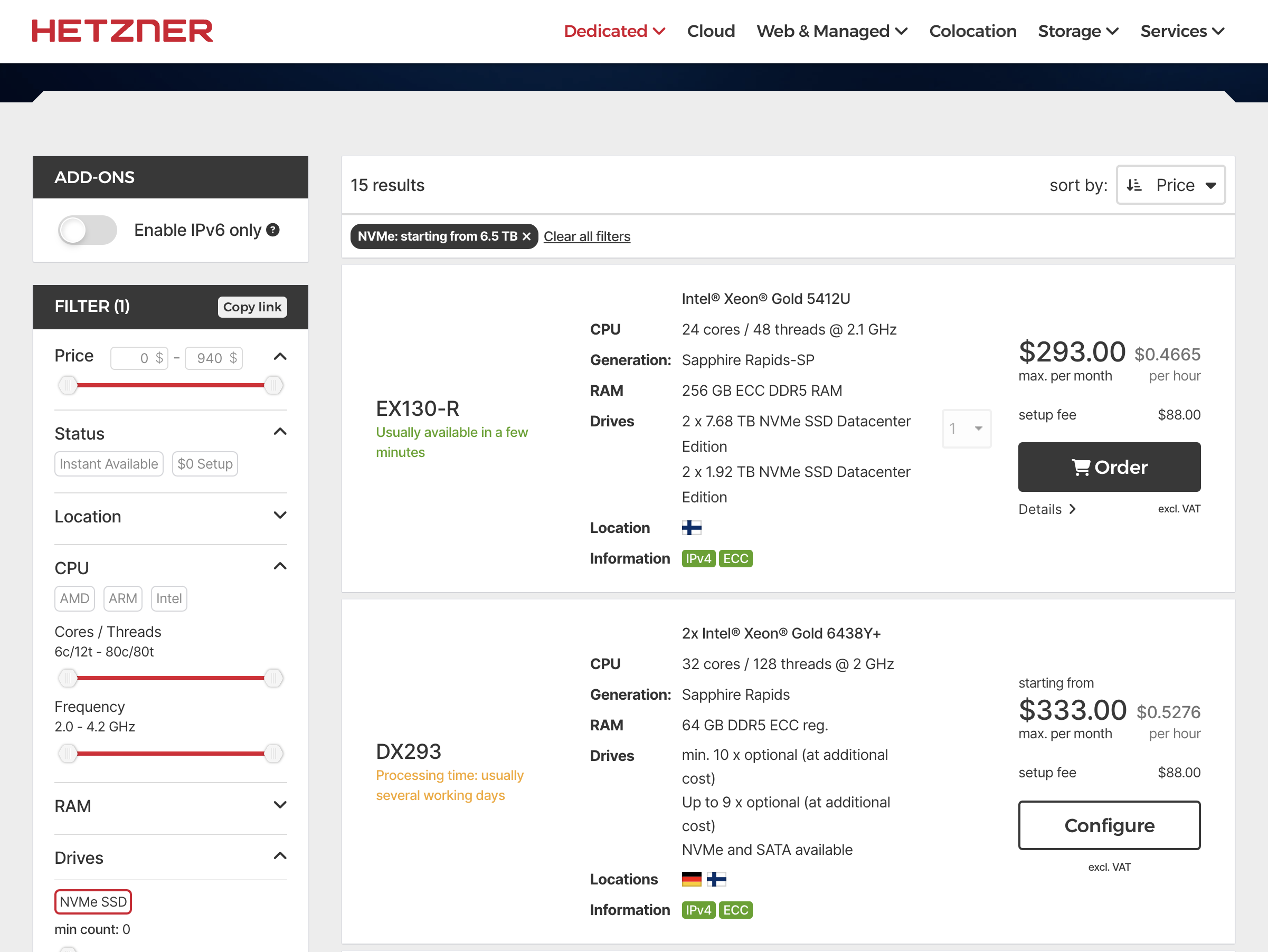

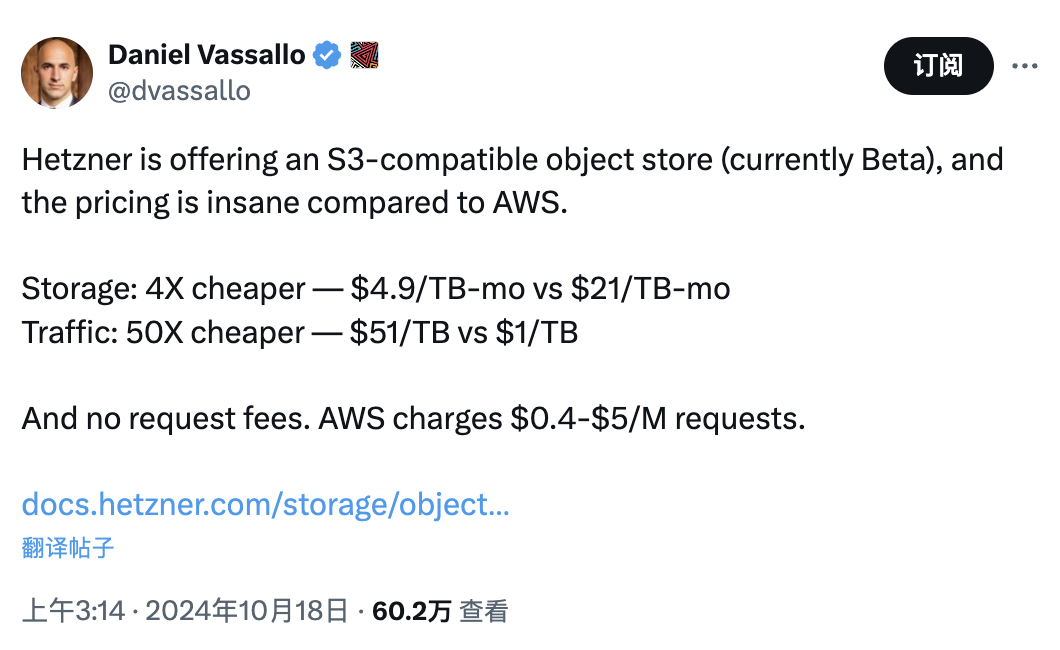

即使你不得不在云上跑,我也强烈建议选择那些没有 PaaS 绑定的平价云 —— 在保留云端“弹性”这一核心优势的同时,却能把成本打到每月万把块。 毕竟 Hetzner、Linode、DigitalOcean 都提供物美价廉(通常+15%毛利,很合理)的全托管专属服务器, 这些平价云的价格,足以让习惯了十倍百倍溢价的传统云计算妙瓦底的用户瞠目结舌。

嘿,我的意思是,你觉得 AWS 会为这样的规格每月收你多少钱?

开源 RDS 解决下云关键难题

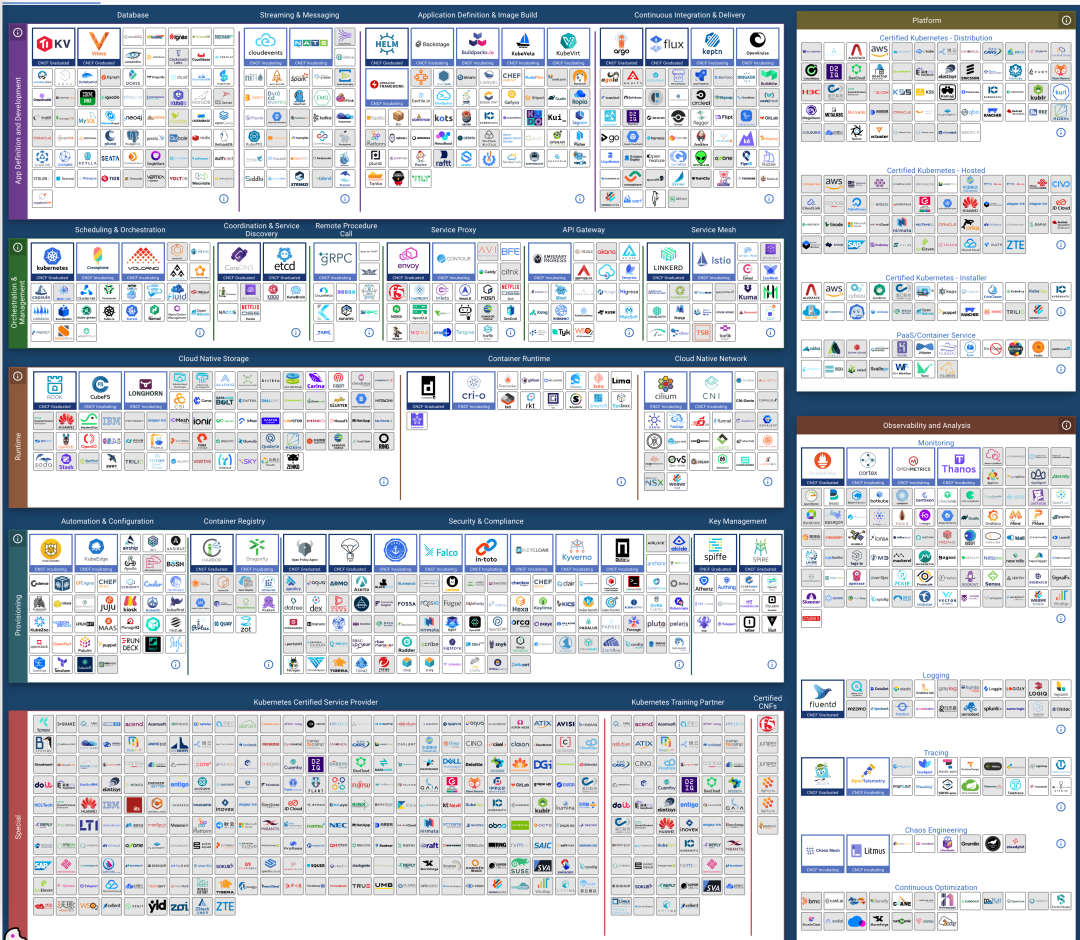

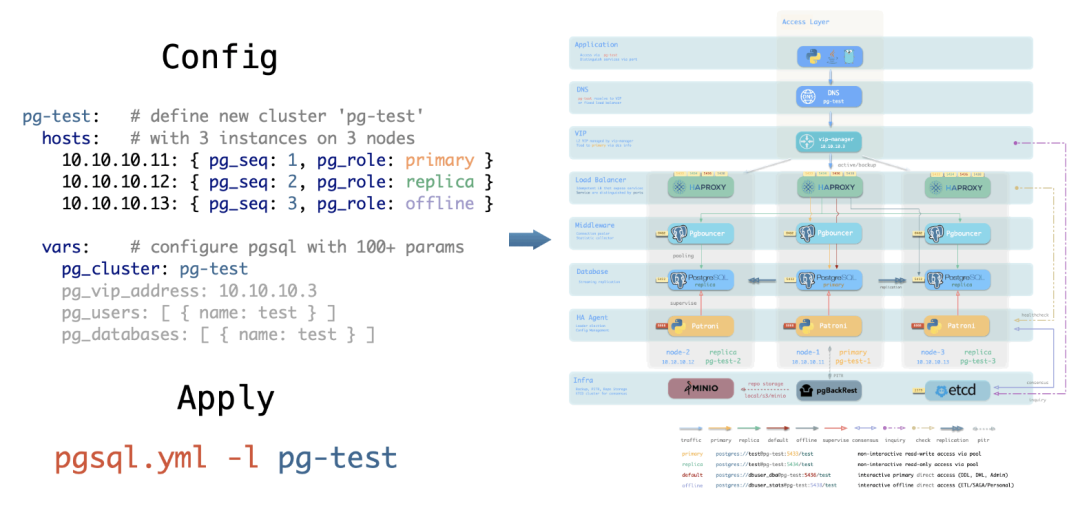

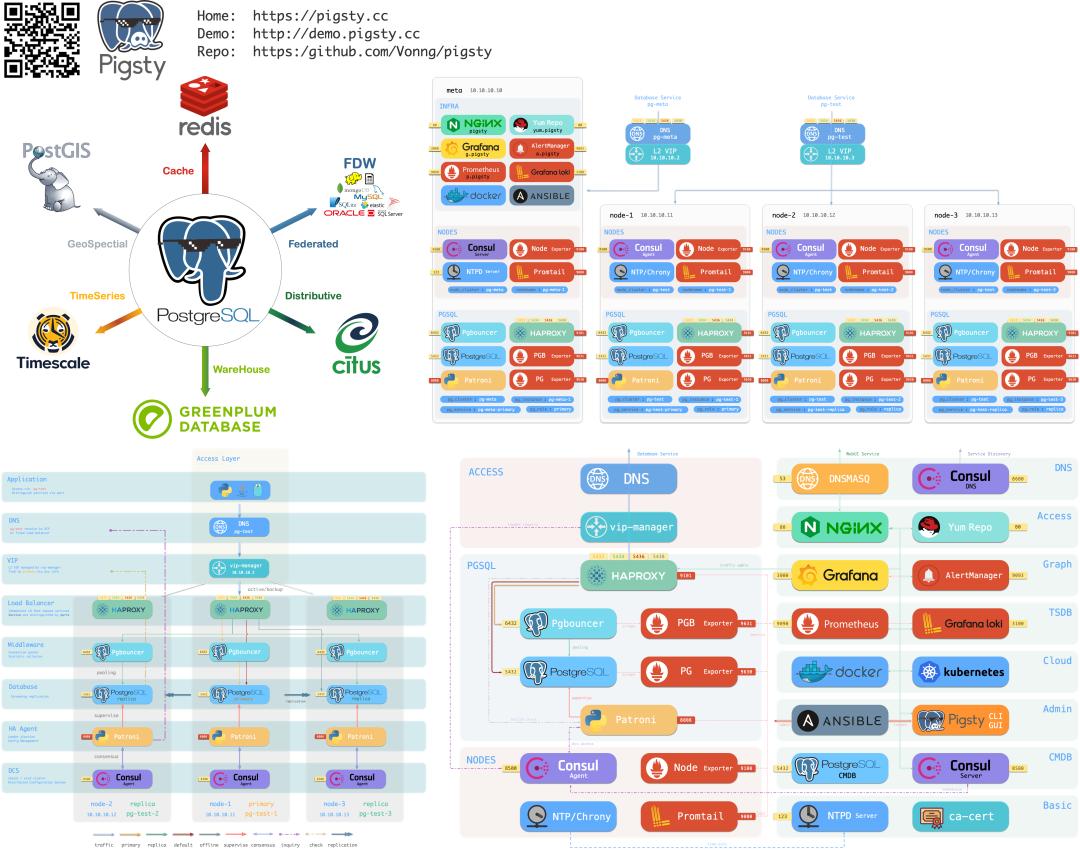

数据库是下云的关键卡点,微软 CEO 纳德拉说:你看到的这些 App 与应用不过都是数据库的漂亮封装而已 —— 所以下云最大的卡点就在于:能否在自己的服务器上跑好 PostgreSQL?应该怎么样解决这个问题?

当业务规模增长超出“云计算适用光谱”后,只有拥有了数据库自建的能力,用户才真正有自由去重新选择; 才能把所有云厂商当作纯资源供应商 —— 哪家收保护费,就能立马迁到另一家,实现真正的 “自由” 与 “自主可控”。

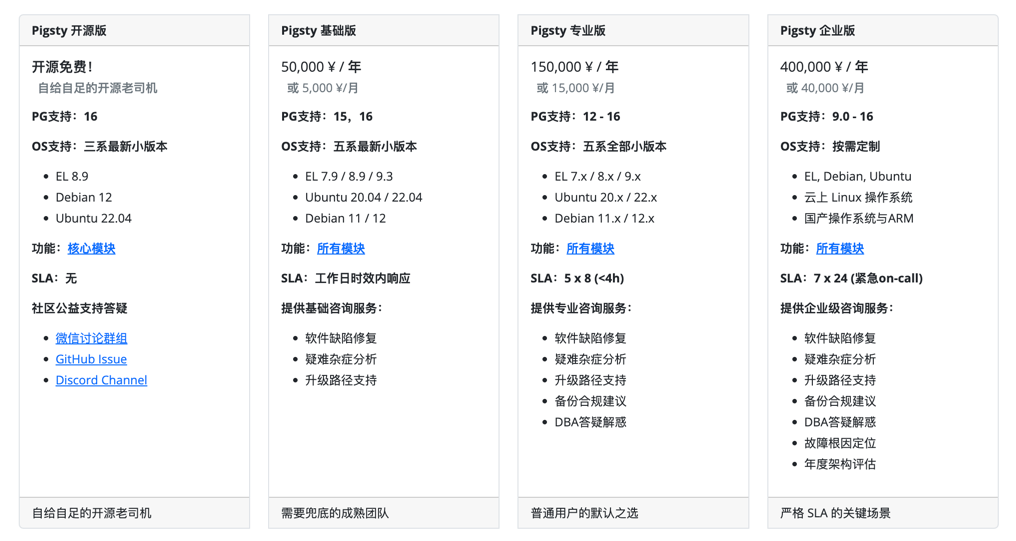

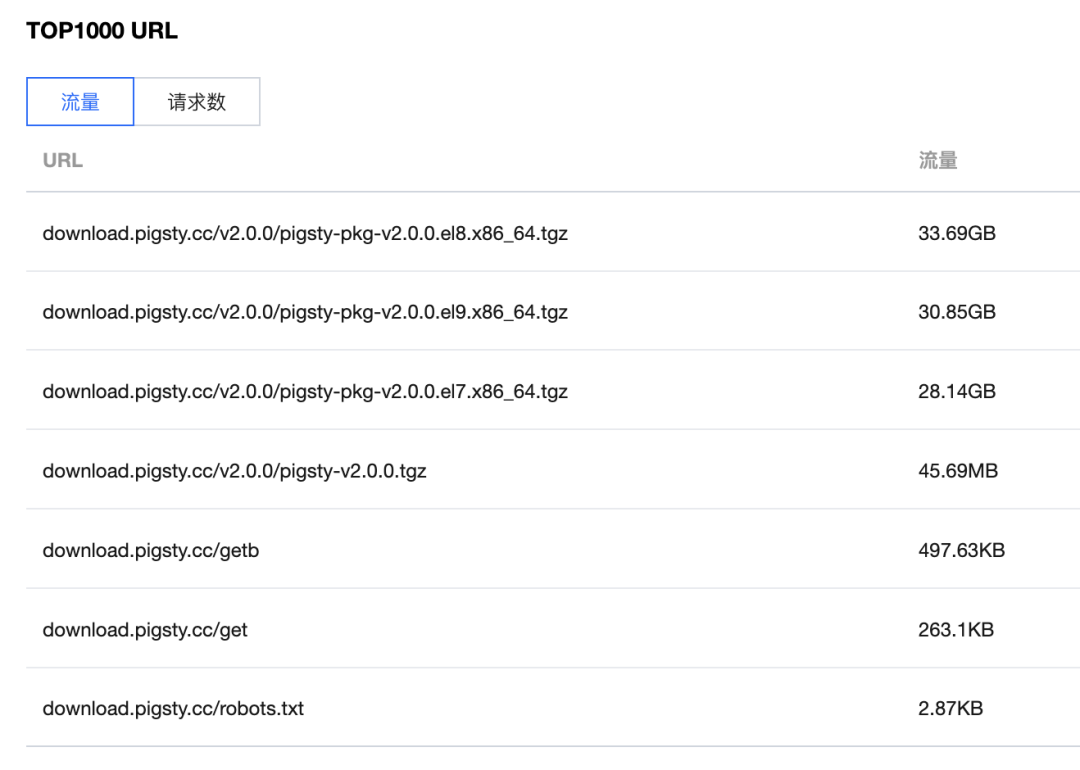

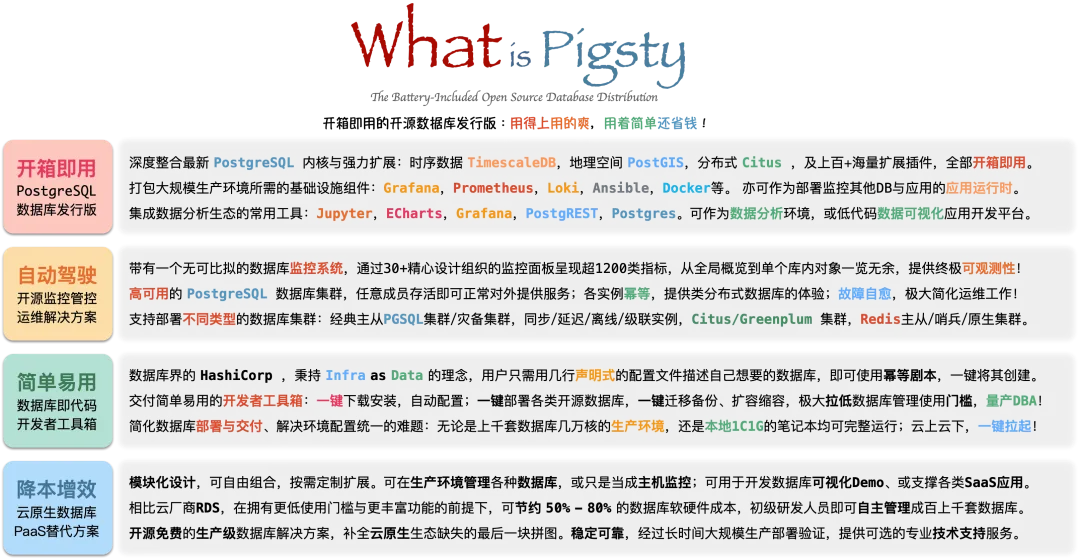

我一直主张,云数据库能力应该民主化普及到所有用户,而不是只能从几个垄断的赛博封建领主以天价租赁。 因此我做了开源版的 RDS for PostgreSQL:Pigsty,让你不依赖 DBA 专家,也能在物理机/虚拟机上一键拉起比 RDS 更强劲的 PostgreSQL, 并充分利用新硬件的高性能、低成本。解决下云的关键卡点。

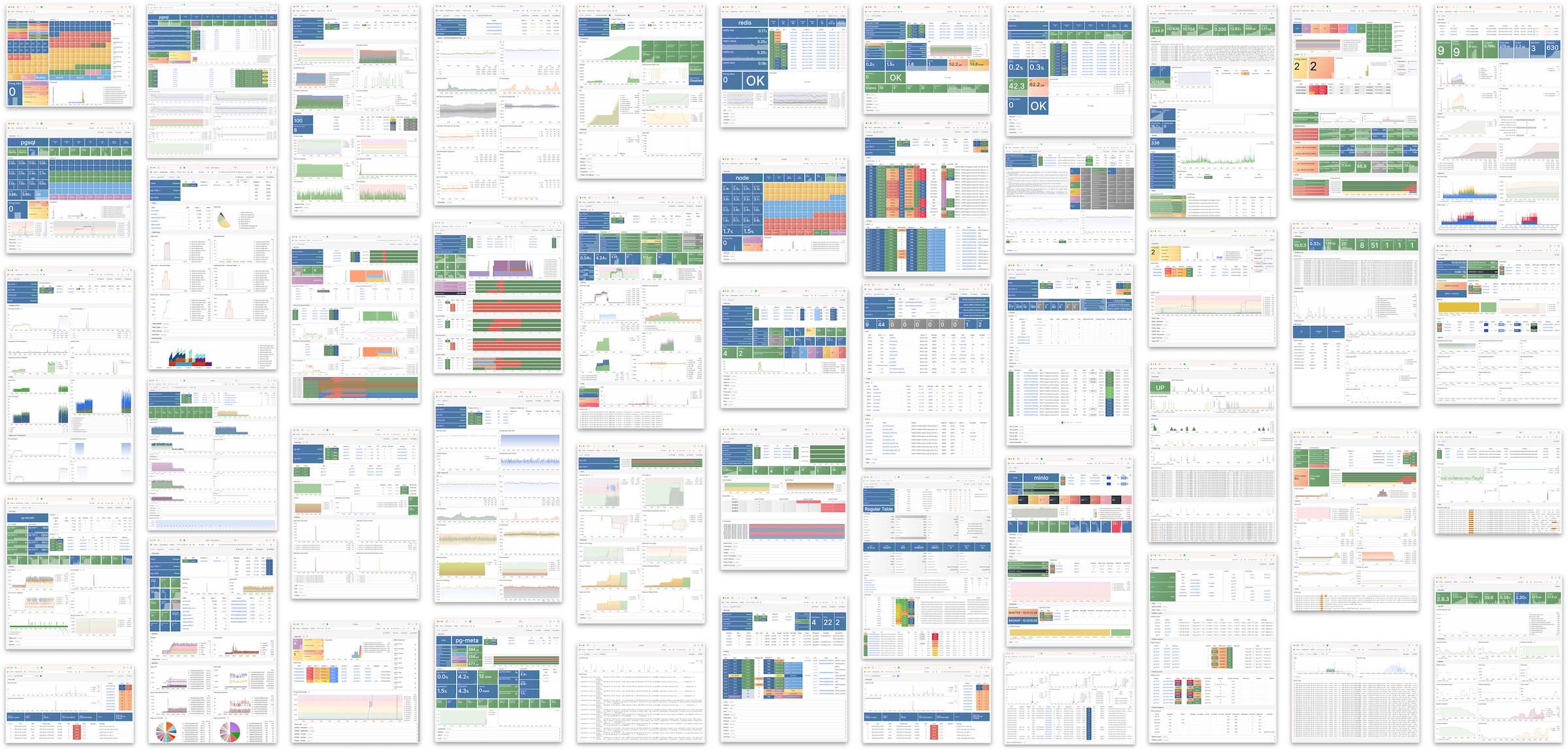

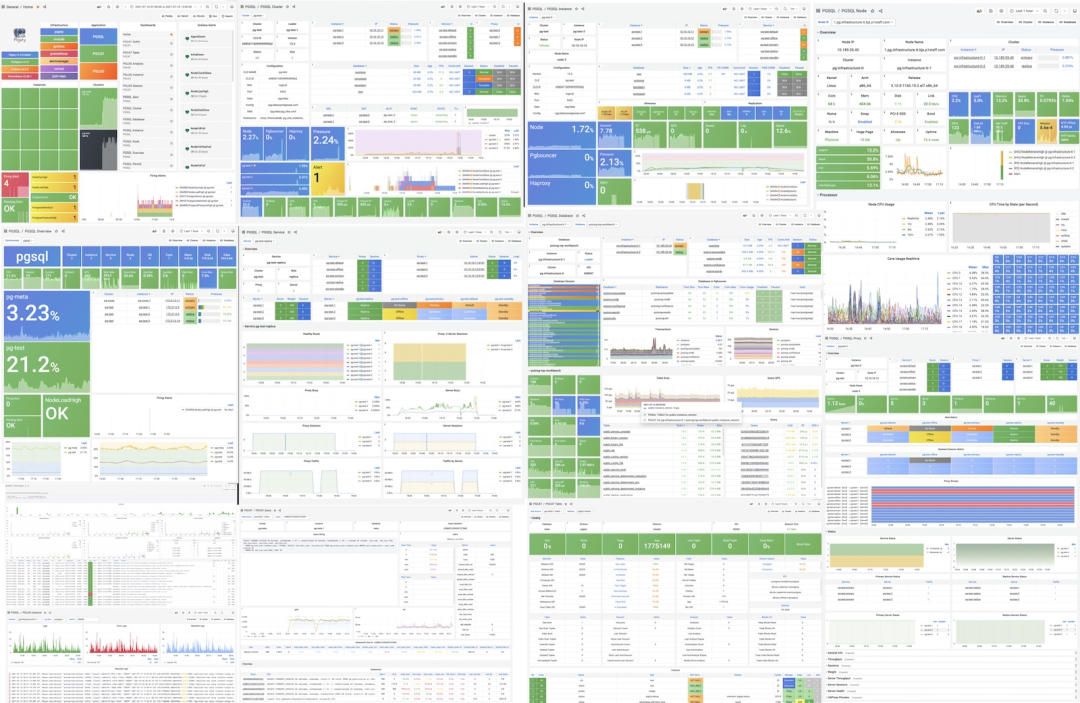

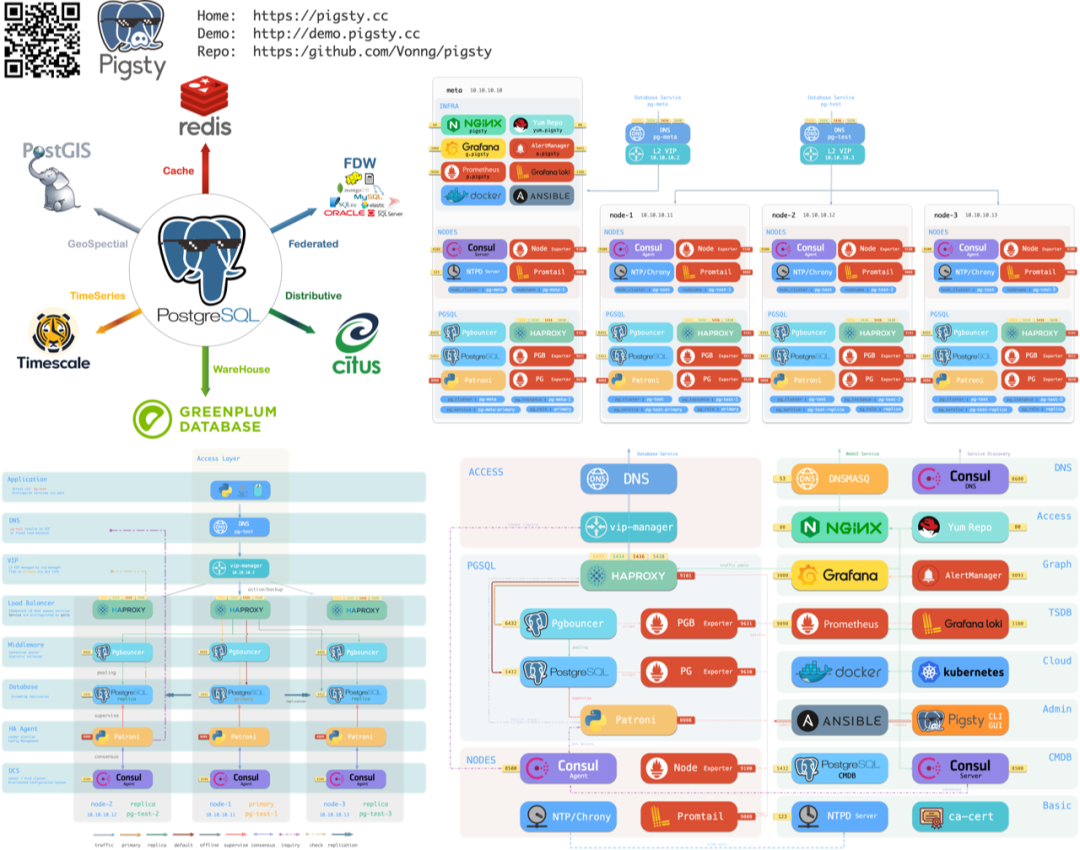

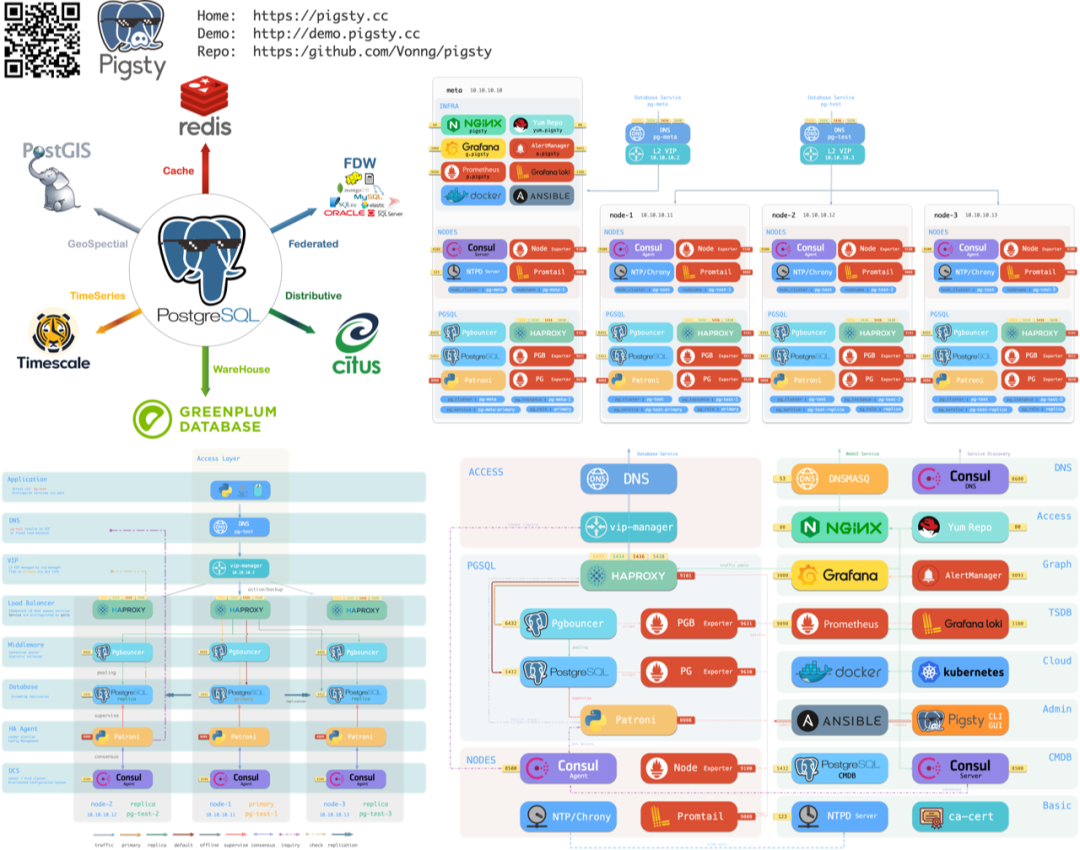

Pigsty 包含 PG 生态里独一无二的 351 个扩展插件,远胜云上那些可怜的几十个阉割版插件,还提供免配置开箱即用的 高可用架构 与业内领先的监控系统。 它已在互联网、金融、新能源、军工、制造业等行业广泛应用,目前在 OSSRANK 全球 PostgreSQL 生态开源榜单里排第 22 名。

Pigsty 采用 AGPLv3 开源协议,开源免费。如果有人觉得“还是希望付费买个安心”,我们也提供明码标价的商业咨询服务兜底。—— 用公道的价格解决实际问题,不玩那些花里胡哨的“杀猪盘”套路。

真正的“弹性”从来都不是“把钱丢给云厂商,自己却一脸懵逼”,而是知道什么时候该花钱、怎么花钱。愿所有数据库用户都能别做大冤种,让自己的时间与金钱花费的更有意义。

OpenAI全球宕机复盘:K8S循环依赖

12月11日,OpenAI 出现了全球范围内的不可用故障,影响了 ChatGPT,API,Sora,Playground 和 Labs 等服务。影响范围从 12 月 11 日下午 3:16 至晚上 7:38 期间,持续时间超过四个小时,产生显著影响。

根据 OpenIA 在事后发布的故障报告,此次故障的直接原因是新部署了一套监控,压垮了 Kubernetes 控制面。然后因为控制面故障导致无法直接回滚,进一步放大的故障影响,导致了长时间的不可用。

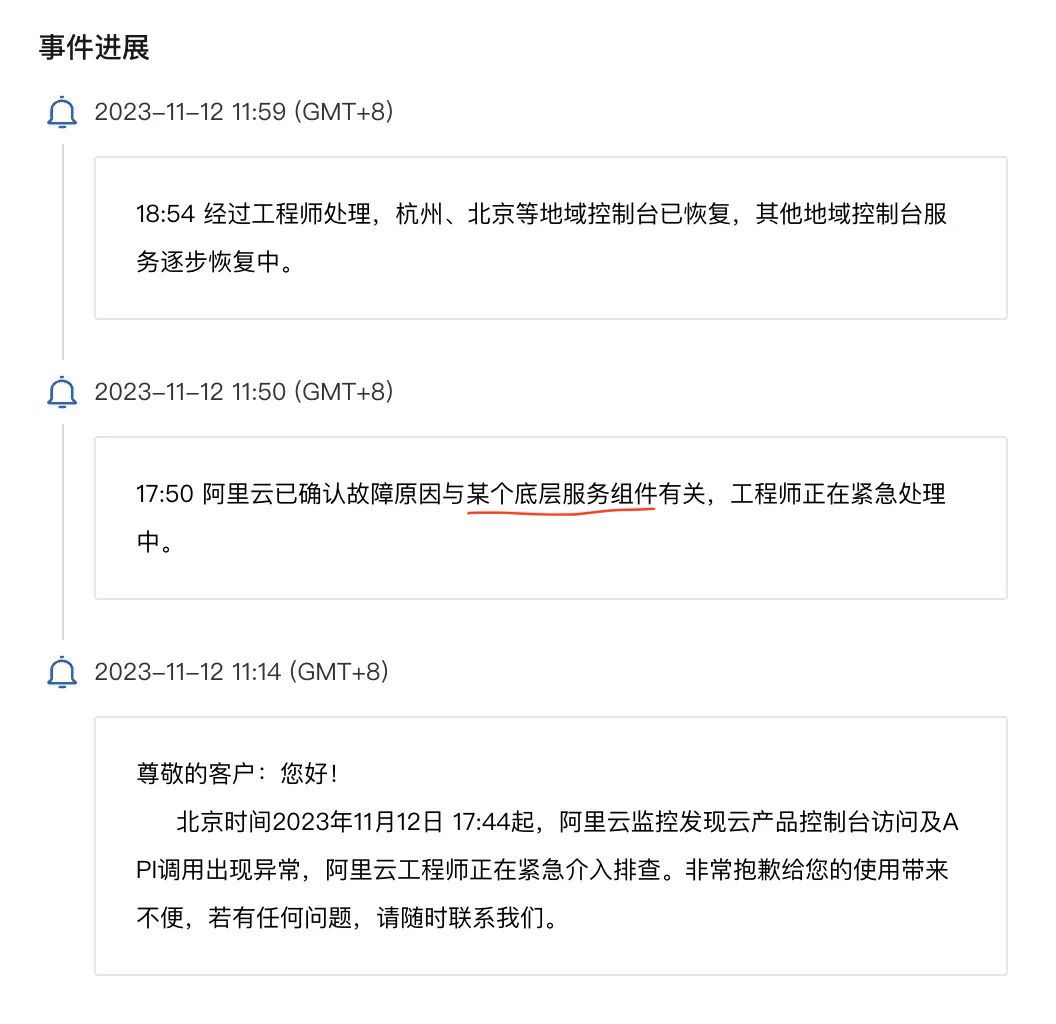

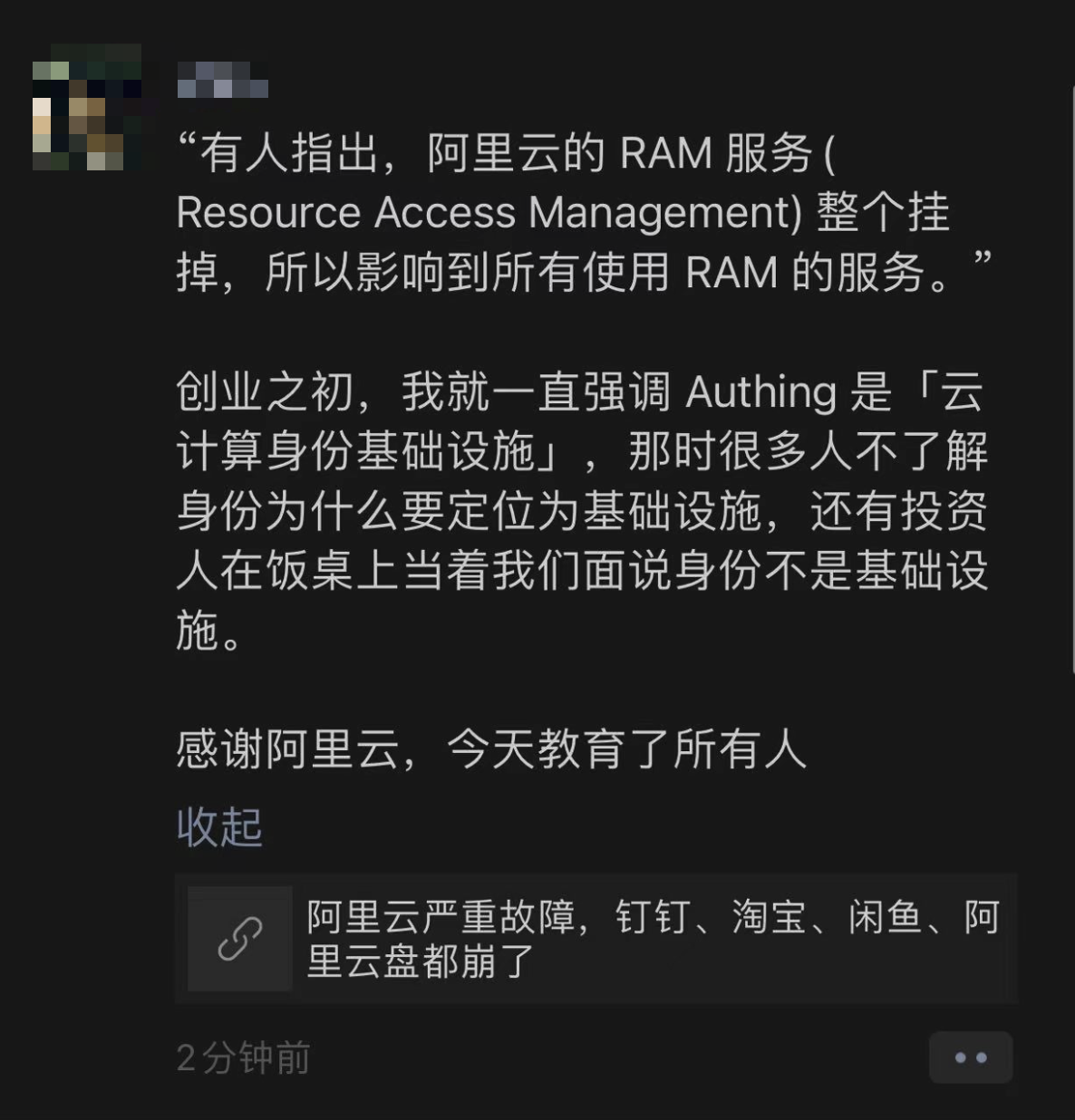

其实这个故障和去年双十一 阿里云全球史诗故障 非常类似。都是全球控制面不可用,根因都是循环依赖(以及测试/发布灰度不足)。无非是阿里云是 OSS 和 IAM 之间循环依赖,OpenAI 是 DNS 和 K8S 的循环依赖。

循环依赖是架构设计中的大忌,就像在基础设施中放了炸药包,容易被一些临时性偶发性故障点爆。这次故障再次为我们敲响警钟。当然这次故障的原因还可以进一步深挖,比如测试/灰度不足,以及 架构杂耍:

比如 K8S 官方建议的 最大集群规模是 5000 节点,而我还清晰记得 OpenAI 发表过一篇吹牛文章:《我们是如何通过移除一个组件来让K8S跑到7500节点的》 —— 不仅不留冗余,还要超载压榨50%,最终,这次还真就在集群规模上翻了大车。

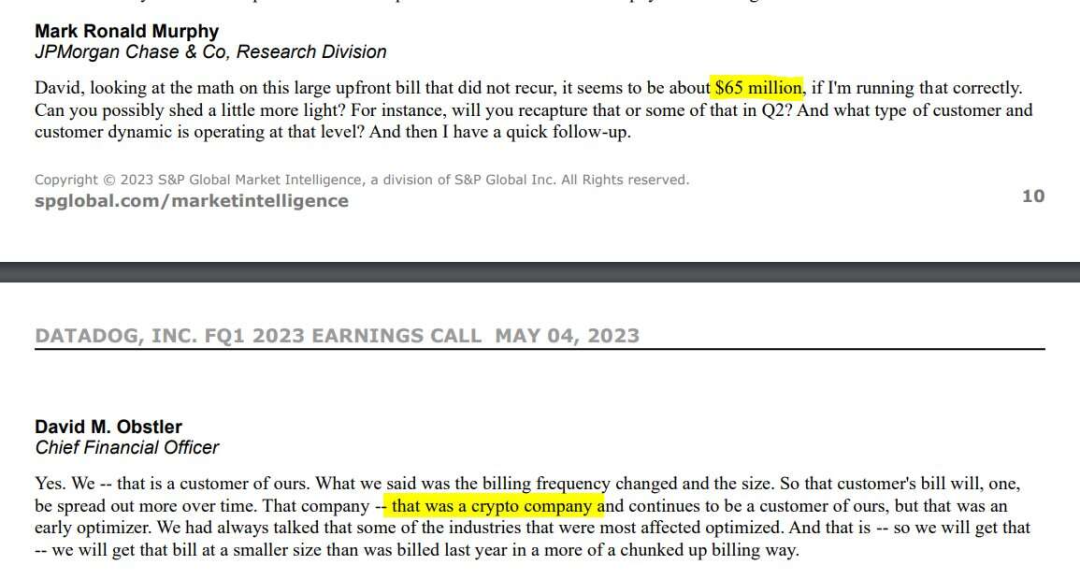

OpenAI 是 AI 领域的当红炸子鸡,其产品的实力与受欢迎程度毋庸置疑。这是依然无法掩盖其在基础设施上的薄弱 —— 基础设施想要搞好确实不容易,这也是为什么 AWS 和 DataDog 这样的公司赚得钵满盆翻的核心原因。

OpenAI 在去年在 PostgreSQL 数据库与 pgBouncer 连接池 上也翻过大车。这两年来的在基础设施可靠性上的表现也说不上亮眼。这次故障再次说明,即使是万亿级独角兽,在非专业领域上,也照样是个草台班子。

参考阅读

故障复盘原文

API、ChatGPT 和 Sora 出现问题

https://status.openai.com/incidents/ctrsv3lwd797

OpenAI故障报告复盘

本文详细记录了 2024 年 12 月 11 日发生的一次故障,当时所有 OpenAI 服务均出现了严重的停机问题。根源在于我们部署了新的遥测服务(telemetry service),意外导致 Kubernetes 控制平面负载过重,从而引发关键系统的连锁故障。我们将深入解析故障根本原因,概述故障处理的具体步骤,并分享我们为防止类似事件再次发生而采取的改进措施。

影响

在太平洋时间 2024 年 12 月 11 日下午 3:16 至晚上 7:38 之间,所有 OpenAI 服务均出现了严重降级或完全不可用。这起事故源于我们在所有集群中推出的新遥测服务配置,并非由安全漏洞或近期产品发布所致。从下午 3:16 开始,各产品性能均出现大幅下降。

- ChatGPT: 在下午 5:45 左右开始大幅恢复,并于晚上 7:01 完全恢复。

- API: 在下午 5:36 左右开始大幅恢复,于晚上 7:38 所有模型全部恢复正常。

- Sora: 于晚上 7:01 完全恢复。

根因

OpenAI 在全球范围内运营着数百个 Kubernetes 集群。Kubernetes 的控制平面主要负责集群管理,而数据平面则实际运行工作负载(如模型推理服务)。

为提升组织整体可靠性,我们一直在加强集群级别的可观测性工具,以加深对系统运行状态的可见度。太平洋时间下午 3:12,我们在所有集群部署了一项新的遥测服务,用于收集 Kubernetes 控制平面的详细指标。

由于遥测服务会涉及非常广泛的操作范围,这项新服务的配置无意间让每个集群中的所有节点都执行了高成本的 Kubernetes API 操作,并且该操作成本会随着集群规模的扩大而成倍增加。数千个节点同时发起这些高负载请求,导致 Kubernetes API 服务器不堪重负,进而瘫痪了大型集群的控制平面。该问题在规模最大的集群中最为严重,因此在测试环境并未检测到;另一方面,DNS 缓存也使问题在正式环境中的可见度降低,直到问题在整个集群开始全面扩散后才逐渐显现。

尽管 Kubernetes 数据平面大部分情况下可独立于控制平面运行,但数据平面的 DNS 解析依赖控制平面——如果控制平面瘫痪,服务之间便无法通过 DNS 相互通信。

简而言之,新遥测服务的配置在大型集群中意外地生成了巨大的 Kubernetes API 负载,导致控制平面瘫痪,进而使 DNS 服务发现功能中断。

测试与部署

我们在一个预发布(staging)集群中对变更进行了测试,当时并未发现任何问题。该故障主要对超过一定规模的集群产生影响;再加上每个节点的 DNS 缓存延迟了故障的可见时间,使得变更在正式环境被大范围部署之前并没有暴露出任何明显异常。

在部署之前,我们最关注的是这项新遥测服务本身对系统资源(CPU/内存)的消耗。在部署前也对所有集群的资源使用情况进行了评估,确保新部署不会干扰正在运行的服务。虽然我们针对不同集群调优了资源请求,但并未考虑 Kubernetes API 服务器的负载问题。与此同时,此次变更的监控流程主要关注了服务自身的健康状态,并没有完善地监控集群健康(尤其是控制平面的健康)。

Kubernetes 数据平面(负责处理用户请求)设计上可以在控制平面离线的情况下继续工作。然而,Kubernetes API 服务器对于 DNS 解析至关重要,而 DNS 解析对于许多服务都是核心依赖。

DNS 缓存在故障早期阶段起到了暂时的缓冲作用,使得一些陈旧但可用的 DNS 记录得以继续为服务提供地址解析。但在接下来 20 分钟里,这些缓存逐步过期,依赖实时 DNS 的服务开始出现故障。这段时间差恰好在部署持续推进时才逐渐暴露问题,使得最终故障范围更为集中和明显。一旦 DNS 缓存失效,集群里的所有服务都会向 DNS 发起新请求,进一步加剧了控制平面的负载,使得故障难以在短期内得到缓解。

故障修复

在大多数情况下,监控部署和回滚有问题的变更都相对容易,我们也有自动化工具来检测和回滚故障性部署。此次事件中,我们的检测工具确实正常工作——在客户受影响前几分钟就已经发出了警报。不过要真正修复这个问题,需要先删除导致问题的遥测服务,而这需要访问 Kubernetes 控制平面。然而,API 服务器在承受巨大负载的情况下无法正常处理管理操作,导致我们无法第一时间移除故障性服务。

我们在几分钟内确认了问题,并立即启动多个工作流程,尝试不同途径迅速恢复集群:

- 缩小集群规模: 通过减少节点数量来降低 Kubernetes API 总负载。

- 阻断对 Kubernetes 管理 API 的网络访问: 阻止新的高负载请求,让 API 服务器有时间恢复。

- 扩容 Kubernetes API 服务器: 提升可用资源以应对积压请求,从而为移除故障服务赢得操作窗口。

我们同时采用这三种方法,最终恢复了对部分控制平面的访问权限,从而得以删除导致问题的遥测服务。

一旦我们恢复对部分控制平面的访问权限,系统就开始迅速好转。在可能的情况下,我们将流量切换到健康的集群,同时对仍然存在问题的其他集群进行进一步修复。部分集群仍在修复过程出现资源竞争问题:很多服务同时尝试重新下载所需组件,导致资源饱和并需要人工干预。

此次事故是多项系统与流程在同一时间点相互作用、同时失效的结果,主要体现在:

- 测试环境未能捕捉到新配置对 Kubernetes 控制平面的影响。

- DNS 缓存使服务故障出现了时间延迟,从而让变更在故障完全暴露前被大范围部署。

- 故障发生时无法访问控制平面,导致修复进程十分缓慢。

时间线

- 2024 年 12 月 10 日: 新的遥测服务部署到预发布集群,经测试无异常。

- 2024 年 12 月 11 日 下午 2:23: 引入该服务的代码合并到主分支,并触发部署流水线。

- 下午 2:51 至 3:20: 变更逐步应用到所有集群。

- 下午 3:13: 告警触发,通知到工程师。

- 下午 3:16: 少量客户开始受到影响。

- 下午 3:16: 根因被确认。

- 下午 3:27: 工程师开始把流量从受影响的集群迁移。

- 下午 3:40: 客户影响达到最高峰。

- 下午 4:36: 首个集群恢复。

- 晚上 7:38: 所有集群恢复。

预防措施

为防止类似事故再次发生,我们正在采取如下措施:

1. 更健壮的分阶段部署

我们将继续加强基础设施变更的分阶段部署和监控机制,确保任何故障都能被迅速发现并限制在较小范围。今后所有与基础设施相关的配置变更都会采用更全面的分阶段部署流程,并在部署过程中持续监控服务工作负载以及 Kubernetes 控制平面的健康状态。

2. 故障注入测试

Kubernetes 数据平面需要进一步增强在缺失控制平面的情况下的生存能力。我们将引入针对该场景的测试手段,包括在测试环境有意注入“错误配置”来验证系统检测和回滚能力。

3. 紧急访问 Kubernetes 控制平面

当前我们还没有一套应对数据平面向控制平面施加过大压力时,依旧能访问 API 服务器的应急机制。我们计划建立“破冰”机制(break-glass),确保在任何情况下工程团队都能访问 Kubernetes API 服务器。

4. 进一步解耦 Kubernetes 数据平面和控制平面

我们目前对 Kubernetes DNS 服务的依赖,使得数据平面和控制平面存在耦合关系。我们会投入更多精力,使得控制平面对关键服务和产品工作负载不再是“负载核心”,从而降低对 DNS 的单点依赖。

5. 更快的恢复速度

我们将针对集群启动所需的关键资源引入更完善的缓存和动态限流机制,并定期进行“快速替换整个集群”的演练,以确保在最短时间内实现正确、完整的启动和恢复。

结语

我们对这次事故造成的影响向所有客户表示诚挚的歉意——无论是 ChatGPT 用户、API 开发者还是依赖 OpenAI 产品的企业。此次事件没有达到我们自身对系统可靠性的期望。我们意识到向所有用户提供高度可靠的服务至关重要,接下来将优先落实上述防范措施,不断提升服务的可靠性。感谢大家在此次故障期间的耐心等待。

发表于 23 小时前。2024 年 12 月 12 日 - 17:19 PST

已解决

在 2024 年 12 月 11 日下午 3:16 到晚上 7:38 期间,OpenAI 的服务不可用。大约在下午 5:40 开始,我们观察到 API 流量逐渐恢复;ChatGPT 和 Sora 则在下午 6:50 左右恢复。我们在晚上 7:38 排除了故障,并使所有服务重新恢复正常。

OpenAI 将对本次事故进行完整的根本原因分析,并在此页面分享后续详情。

2024 年 12 月 11 日 - 22:23 PST

监控

API、ChatGPT 和 Sora 的流量大体恢复。我们将继续监控,确保问题彻底解决。

2024 年 12 月 11 日 - 19:53 PST

更新

我们正持续推进修复工作。API 流量正在恢复,我们逐个地区恢复 ChatGPT 流量。Sora 已开始部分恢复。

2024 年 12 月 11 日 - 18:54 PST

更新

我们正努力修复问题。API 和 ChatGPT 已部分恢复,Sora 仍然离线。

2024 年 12 月 11 日 - 17:50 PST

更新

我们正继续研发修复方案。

2024 年 12 月 11 日 - 17:03 PST

更新

我们正继续研发修复方案。

2024 年 12 月 11 日 - 16:59 PST

更新

我们已经找到一个可行的恢复方案,并开始看到部分流量成功返回。我们将继续努力,使服务尽快恢复正常。

2024 年 12 月 11 日 - 16:55 PST

更新

ChatGPT、Sora 和 API 依然无法使用。我们已经定位到问题,并正在部署修复方案。我们正在尽快恢复服务,对停机带来的影响深表歉意。

2024 年 12 月 11 日 - 16:24 PST

已确认问题

我们接到报告称 API 调用出现错误,platform.openai.com 和 ChatGPT 的登录也出现问题。我们已经确认问题,并正在开展修复。

2024 年 12 月 11 日 - 15:53 PST

更新

我们正在继续调查此问题。

2024 年 12 月 11 日 - 15:45 PST

更新

我们正在继续调查此问题。

2024 年 12 月 11 日 - 15:42 PST

调查中

我们目前正在调查该问题,很快会提供更多更新信息。

发表于 2 天前。2024 年 12 月 11 日 - 15:17 PST

本次故障影响了 API、ChatGPT、Sora、Playground 以及 Labs。

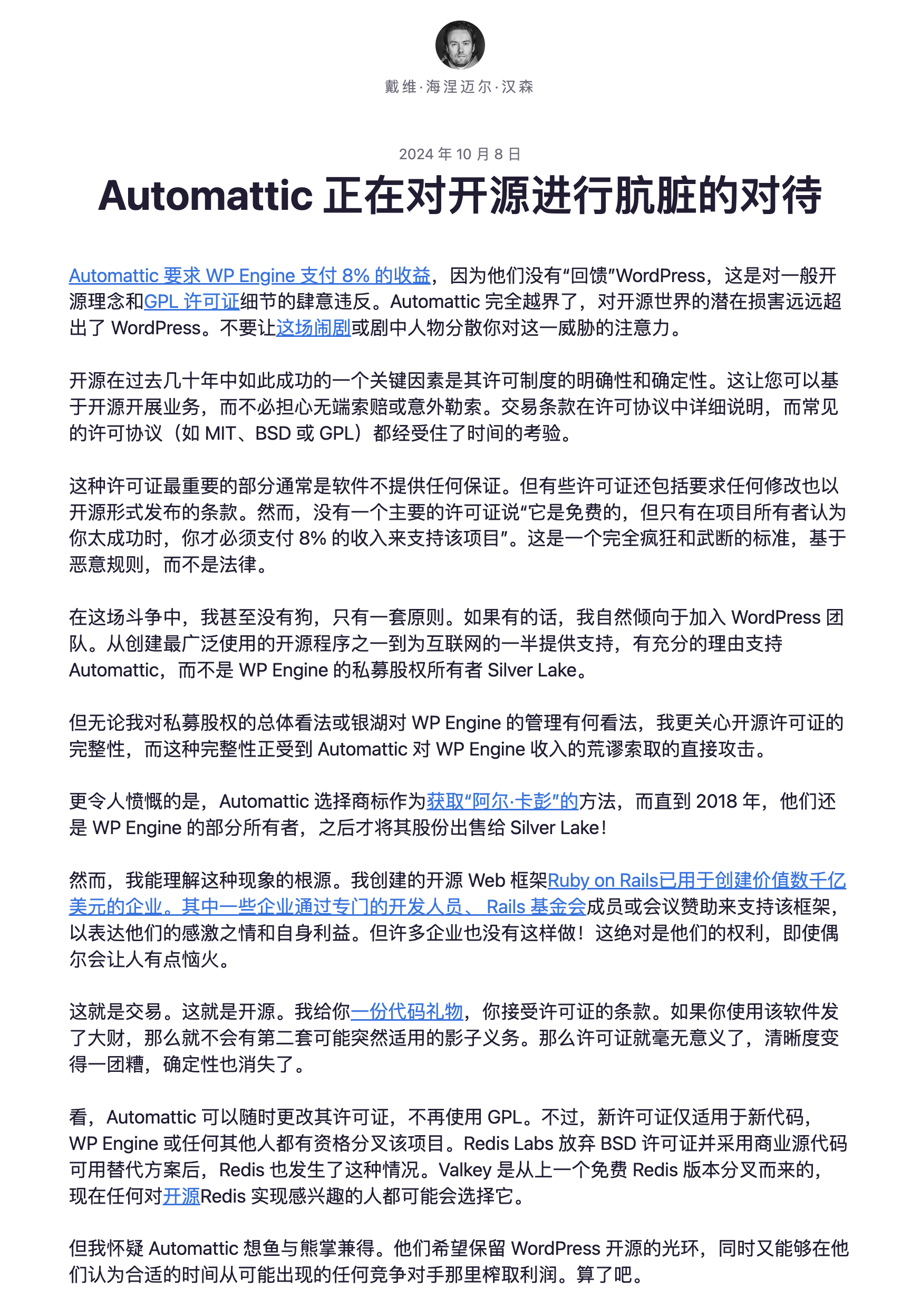

WordPress社区内战:论共同体划界问题

“我想直率地说:多年来,我们就像个傻子一样,他们拿着我们开发的东西大赚了一笔”。 —— Redis Labs 首席执行官 Ofer Bengal 的这句名言,成为 WordPress 社区内战,以及开源社区与商业利益之间的冲突的生动注脚。

我认为这个事件非常有代表性和启发意义 —— 当开源理想与商业利益出现冲突时,应该怎么做?一个开源项目的创始人,应当用什么样的方式来保护自己的利益,并维护社区的健康与可持续发展?这对 PostgreSQL 社区和其他开源软件社区与云厂商之间的冲突又能带来什么启示?

前因后果

最近,WordPress 风波沸沸扬扬,已经有不少文章报道过了。简单来说就是两家 WordPress 社区的两家主要公司发生了公开冲突。一方是 Automattic,另一方是 WP Engine,这俩公司都卖 WP 托管服务,年营收都是五亿美元左右。不过,A 公司的老板 Matt Mullenweg 是 WordPress 项目的联合创始人。

事情的导火索是 WordPress 联合创始人兼 Automattic CEO Matt Mullenweg 在最近的 WordCamp 大会上公开批评 WP Engine ,将 WP Engine 形容为社区的 “癌症”,质疑其对 WordPress 生态系统的贡献。他指出 WP Engine 和 Automattic 每年的收入均约为 5 亿美元,但 WP Engine 每周仅贡献 40 小时的开发资源,而 Automattic 则每周贡献了 3,988 小时。Mullenweg 认为,WP Engine 通过修改版的 GPL 代码盈利,却未能充分回馈社区。

然后,嘴炮很快升级成为了法律纠纷,双方都向对方发出了律师函;然后威吓又进一步升级为行动:Automattic 控制着 Word Press 网站,基础设施,包括扩展插件的 Registry,所以直接把 WP Engine (收购)的一个扩展插件截胡了。更具体来说,是 WP Engine 收购的一个 WP 扩展插件,ACF,有两百多万活跃安装用户,A 公司把这个扩展分叉了,然后占用了 WP 上老扩展的名字。

最后,在各路社交媒体上,都出现了两遍公司的骂战,这我就不放了,反正各种 Drama 满天飞。这里面最有名的要数下云先锋, Ruby on Rails 作者 DHH 得两篇博客了,以下是 DHH 两篇博客原文:

然后是当事人 Mullenweg 的两篇博客回应

老冯评论

我跟 WordPress 没啥利益关系,但作为一个开源社区的创始人,参与者,维护者,我在感情上是同情 Automattic 和其老板 —— WP 项目创始人 Matt Mullenweg ,我能理解他的愤怒沮丧心情,但确实没法苟同他在收到律师函之后的失智冲动举动。

从道德层面来说,WP Engine 白嫖社区却鲜有贡献合不合情理?不合情理。但从法律上来说,你用的 GPL 协议,按这个协议,别人在遵守开源协议的前提下,通过托管服务赚大钱是不是合法的?你向别人收取什一税,截胡插件有什么法律依据?没有!

那么问题在哪里呢?开源本质上是一种礼物馈赠,赠与者不应该对受赠人的回报有什么期待。如果你预期到,受赠人会拿着你送的礼物大赚一笔让你自己心里失衡,或者受赠人干脆用你的礼物回头抢你自己的生意,那你在第一天就不应该送给他。然而问题在于,传统的开源模型中没有办法实现这一点,即歧视性划界问题。

开源软件作为一个礼物,默认的赠送范围是 —— 整个人类世界,Public Domain!因为开源“不允许”歧视性条款。但你只想把你的软件礼物赠送给用户,而不是商业竞争对手,在传统开源协议下有什么办法呢?没有什么好办法,你写了一个很好的软件,使用 Apache 2.0 协议,很好,云厂商和竞争对手把你的软件装到他们的服务器上卖给用户大赚一笔,而你这个开发者得到了商业对手的鼓励(嘲讽) —— 请再接再厉,继续给我们打白工吧。

上古竞于道德,中世逐于智谋,当今争于气力。在上古时代,开源软件的参与者基本上都属于所谓的 “Pro-sumer”(产消合一者)。每个人都从其他人的贡献中获益,参与者也基本上都属于少部分没有经济压力的精英阶层,所以这种模式可以玩得转。繁荣健康的开源软件社区可以容得下一批纯消费者,并期待他们最终会有一部分成为产消合一者。

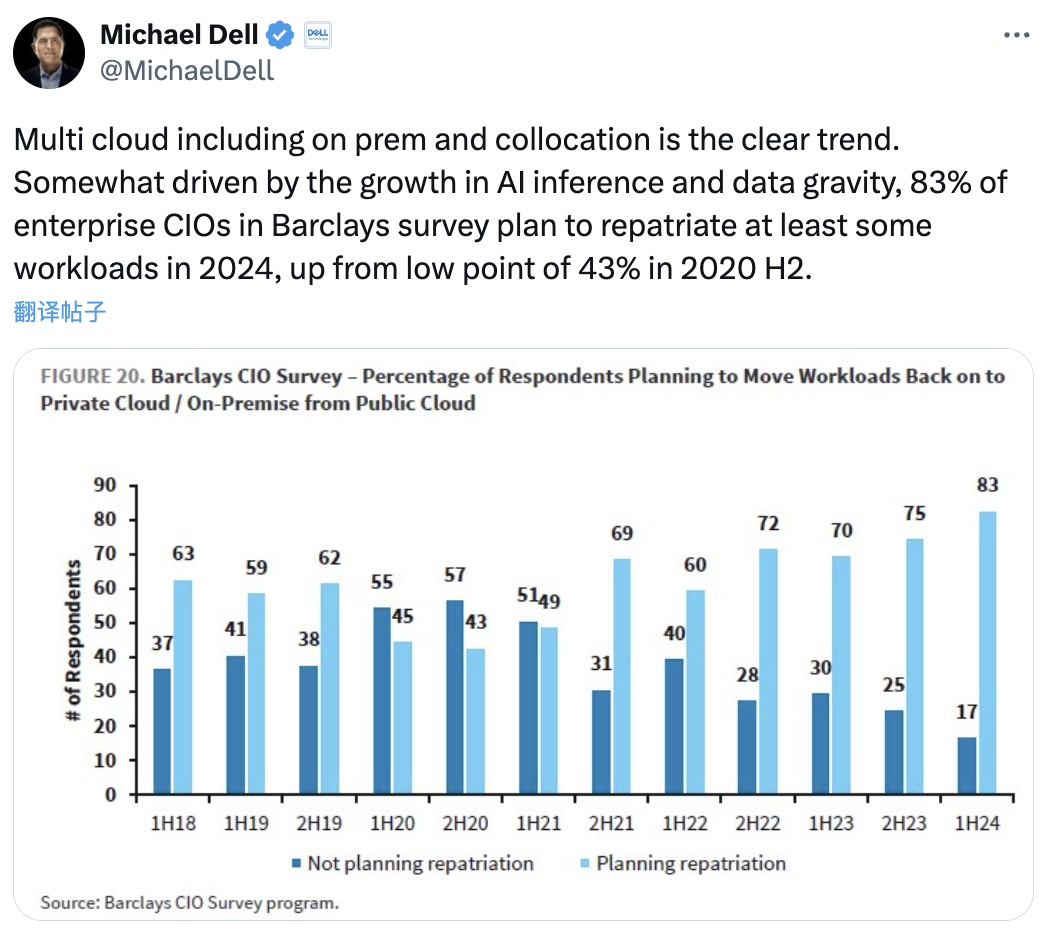

但以经典公有云厂商为代表的托管服务,通过掌控最终的交付环节,攫取捕获了开源生态的大部分价值。如果他们愿意成为体面的开源社区参与者积极回馈,这个模式还可以勉强继续运转下去,否则就会失衡 —— 社区秩序的消费者超出生产者的能力,开源模式就会面临危机。

怎么解决这个问题呢?开源社区其实已经给出了自己的答案。在 Redis不开源是“开源”之耻,更是公有云之耻 中我已经详细分析过了 —— 例如在数据库领域,不难发现近几年头部的数据库公司/社区与云厂商之间的关系都在进一步紧张升级中。 Redis 修改开源协议为 RSAL/SSPL,ElasticSearch 修改协议为 AGPLv3,MongoDB 使用更严苛的 SSPL,MinIO 和 Grafana 也转换到了更严苛的 AGPLv3 上来。Oracle 在 MySQL 开源版上摆烂,包括最为友善的 PostgreSQL 生态,也开始出现了不一样的声音。

我们要知道,开源许可证对于开源社区来说,就像章程一样。切换开源许可证本质上来说,就是一种重新划分社区共同体边界的行为。通过 AGPLv3 / SSPL 或者其他更严苛开源协议中的 “隐性歧视条款”,将不符合开源社区价值观的参与者,通过法律排除在外。

共同体边界的问题,是所有开源社区面临的最重要的问题 —— 它的重要性甚至在 “是否开源” 和 “独裁还是民主” 之上。很多“开源软件”社区/公司非常愿意为更深度地歧视与划界需求,而丧失 “开源” 的名分 —— 例如使用不被 OSI 认定为开源的 “SSPL“ 等协议。

“谁是我们?谁是我们的朋友,谁是我们的敌人?” 这是构建社区,建立秩序的基本问题。为开源软件做贡献的贡献者显然是核心,使用、消费软件的用户可以是社区主体;而架空,白嫖,吸血远大于贡献的竞争对手与“云托管服务”供应商,显然会是社区的敌人。

当然,开源社区/公司应该更精准地划分敌友,扩大自己的朋友圈,孤立自己的敌人:卖服务器的Dell浪潮,提供托管服务器的 Hentzer,Linode,DigitalOcean,世纪互联,甚至是公有云 IaaS 部门,提供接入/CDN 服务的 Cloudflare / Akamai 都可以是朋友,而把社区吭哧吭哧开发的开源软件拿去卖而不做对等回馈的公有云 PaaS 部门 / 团队 / 甚至具体的小组,那显然是竞争对手。让亲者快而仇者痛,这是有效运营的的基本原则。

WordPress 社区内战的例子给了我们一个很好的启示 —— Automattic 本来是占据了情理和大义名分的。但他没有在架构社区立法的阶段处理解决好“开放世界中的敌人” 的问题。反而在商业竞争中公器私用,使用了违法开源社区基本原则的不当手段,导致自己陷入现在这个尴尬的局面中 —— 这就跟肿瘤患者没有及早预防癌症的出现,一怒之下用刀剜开自己的肿瘤一样痛苦。

我相信,开源软件的社区/公司运营者一定能从这个案例学习到不少经验与教训。

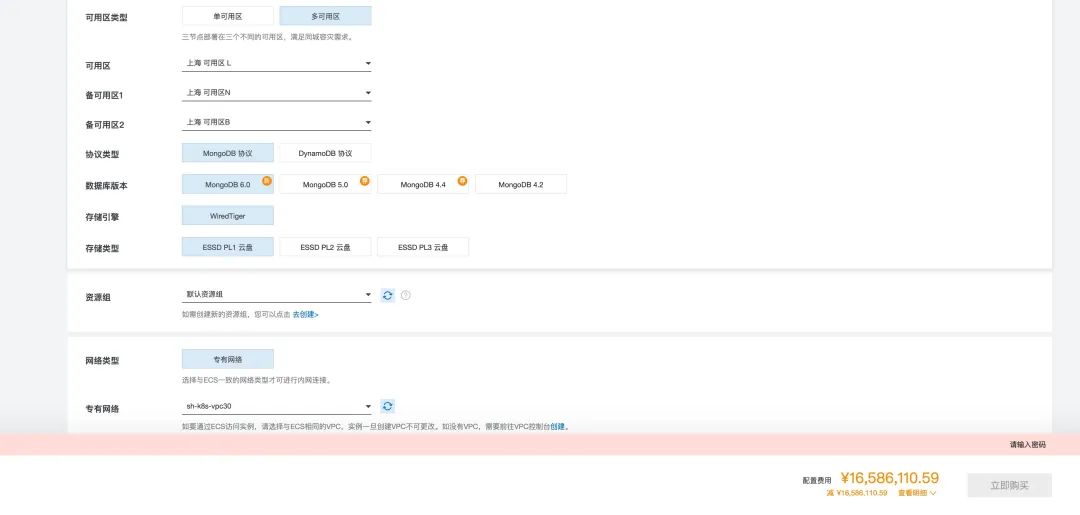

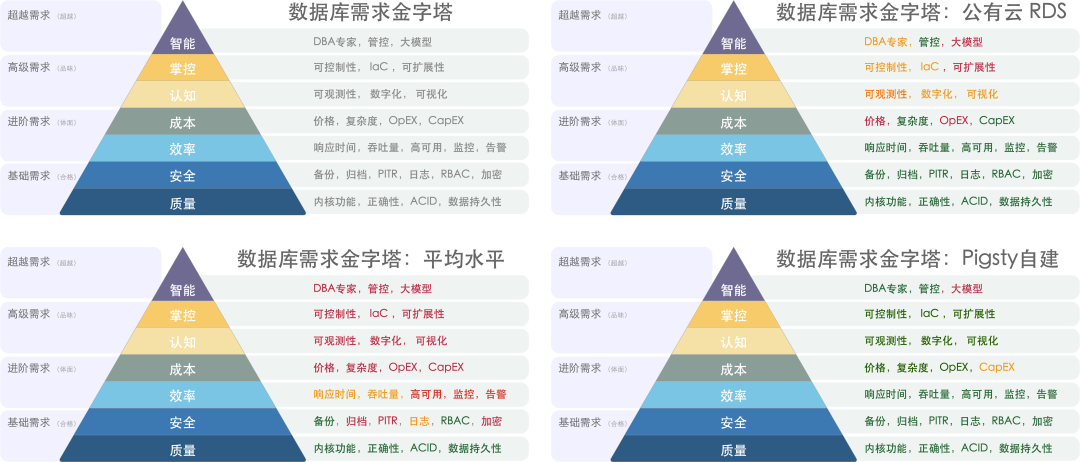

云数据库:用米其林的价格,吃预制菜大锅饭

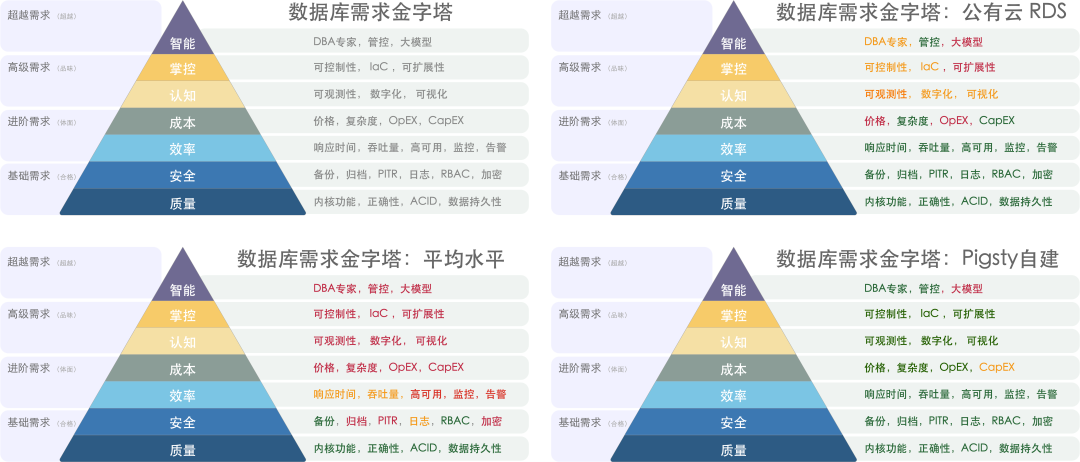

云数据库是不是天价大锅饭

RDS带来的数据库范式转变

质量安全效率成本剖析核算,

下云数据库自建,如何实战!

太长;不看

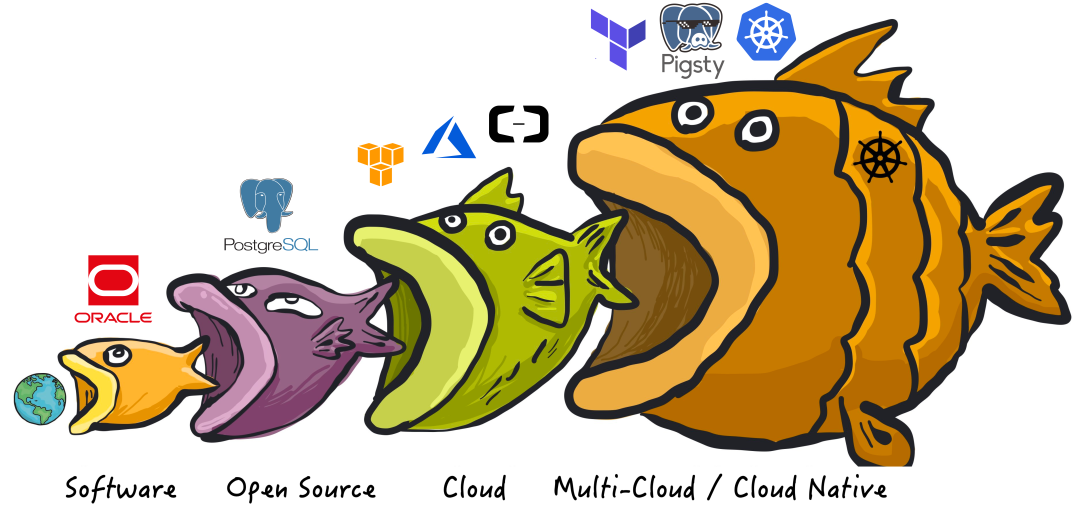

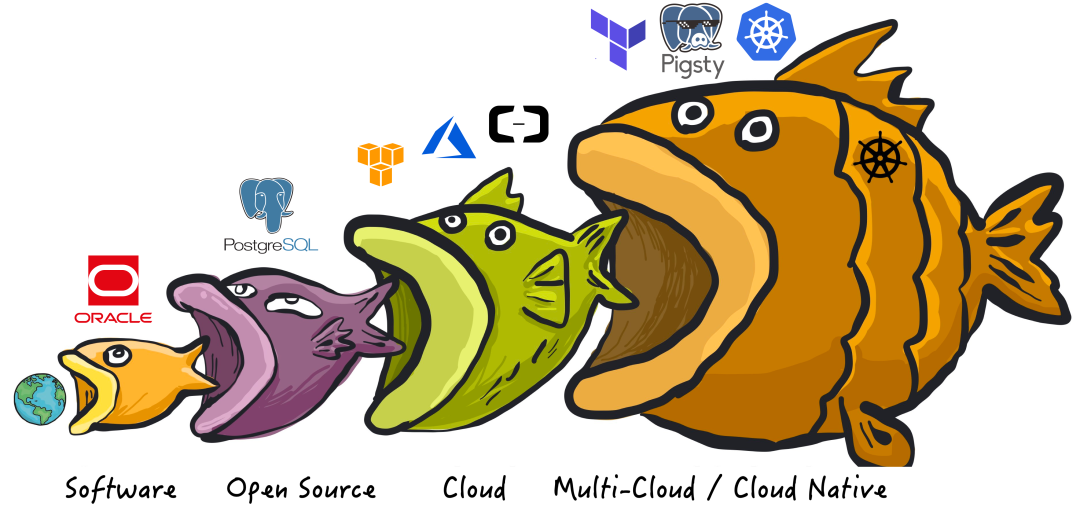

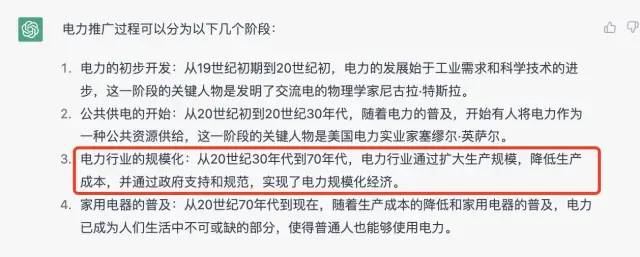

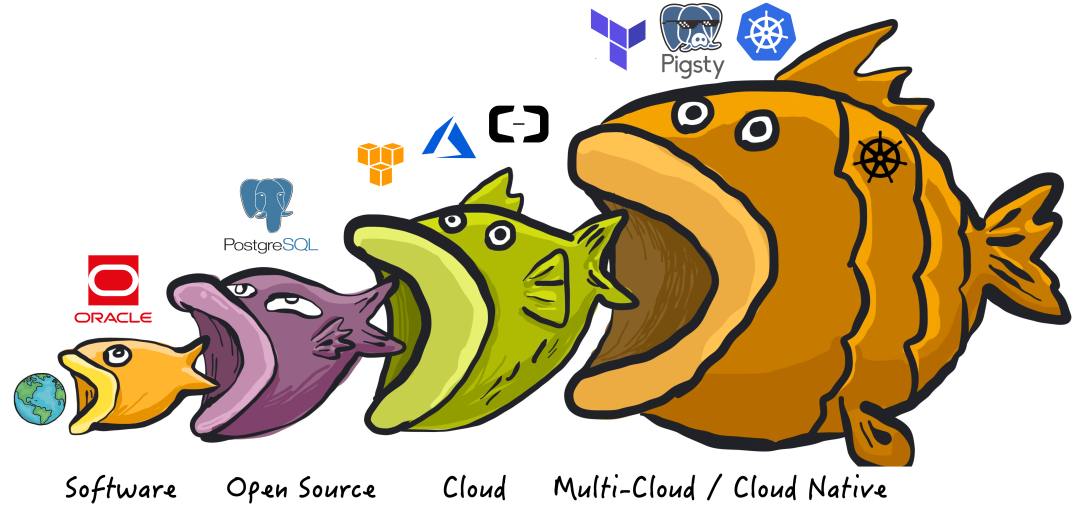

从商业软件到开源软件再到云软件,软件行业的范式出现了嬗变,数据库自然也不例外:云厂商拿着开源数据库内核,干翻了传统企业级数据库公司。

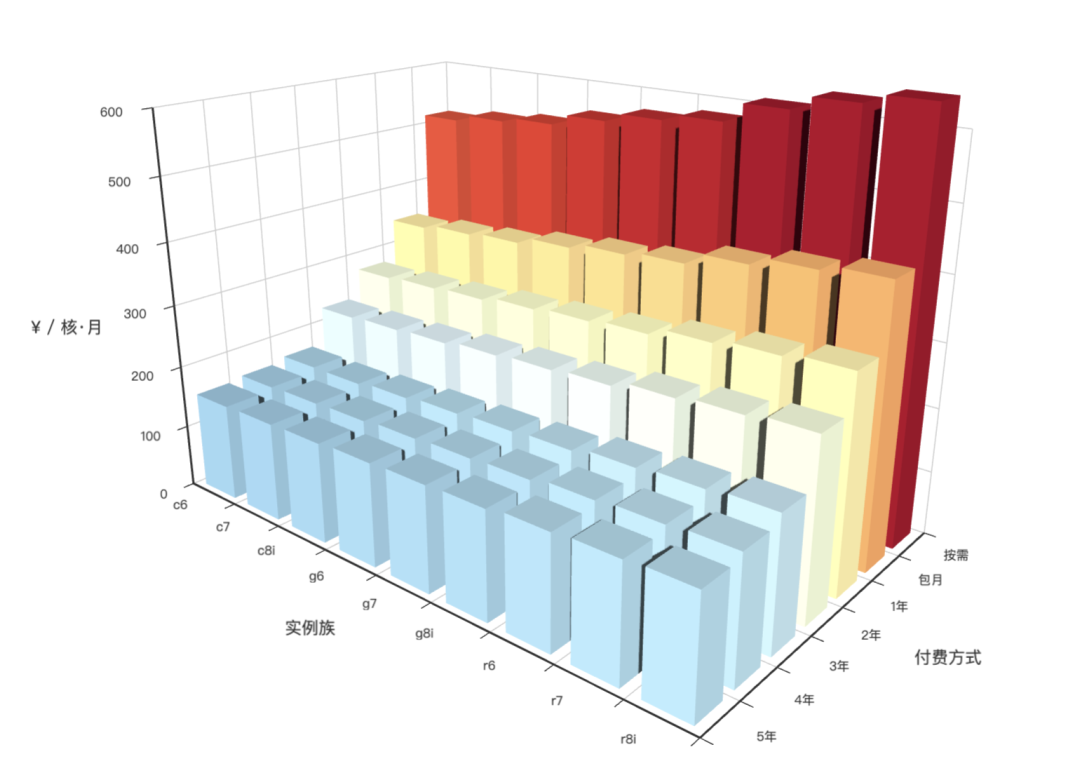

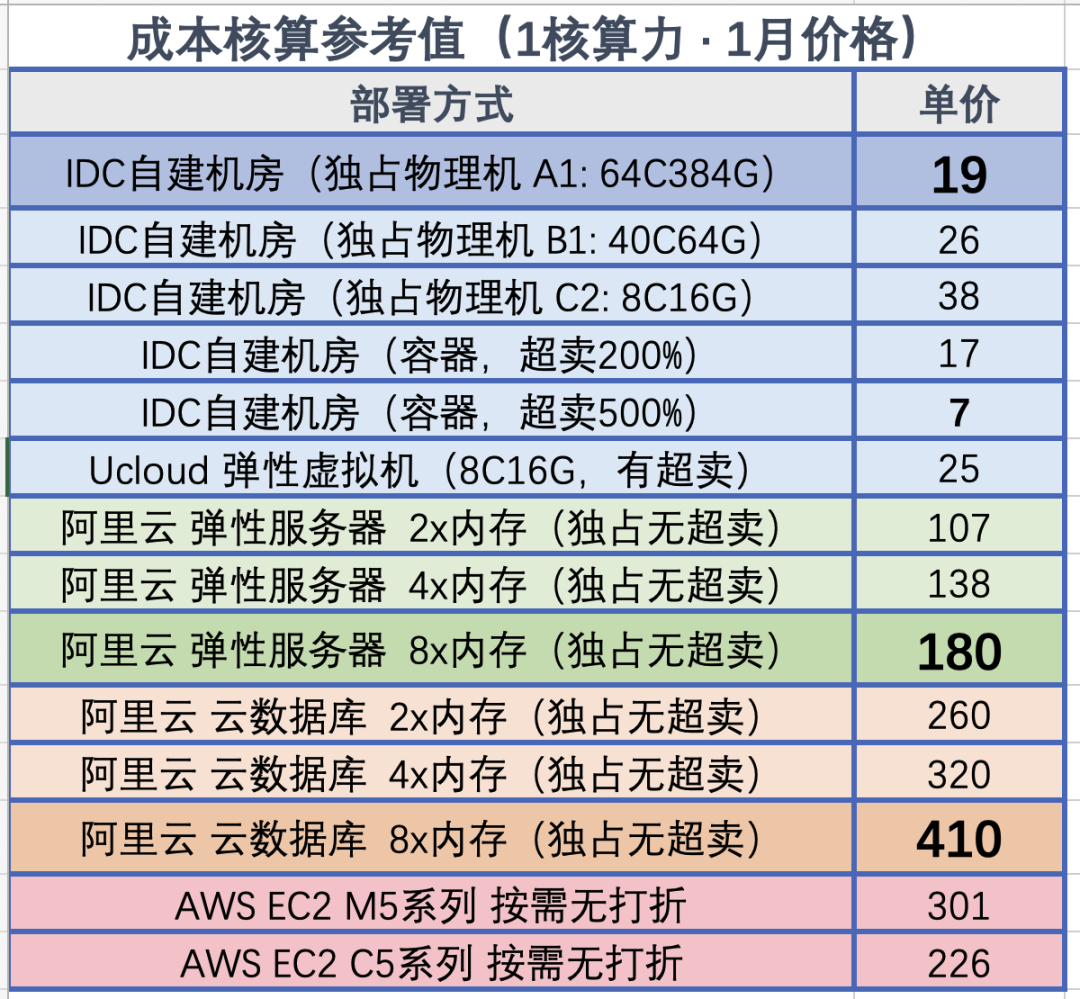

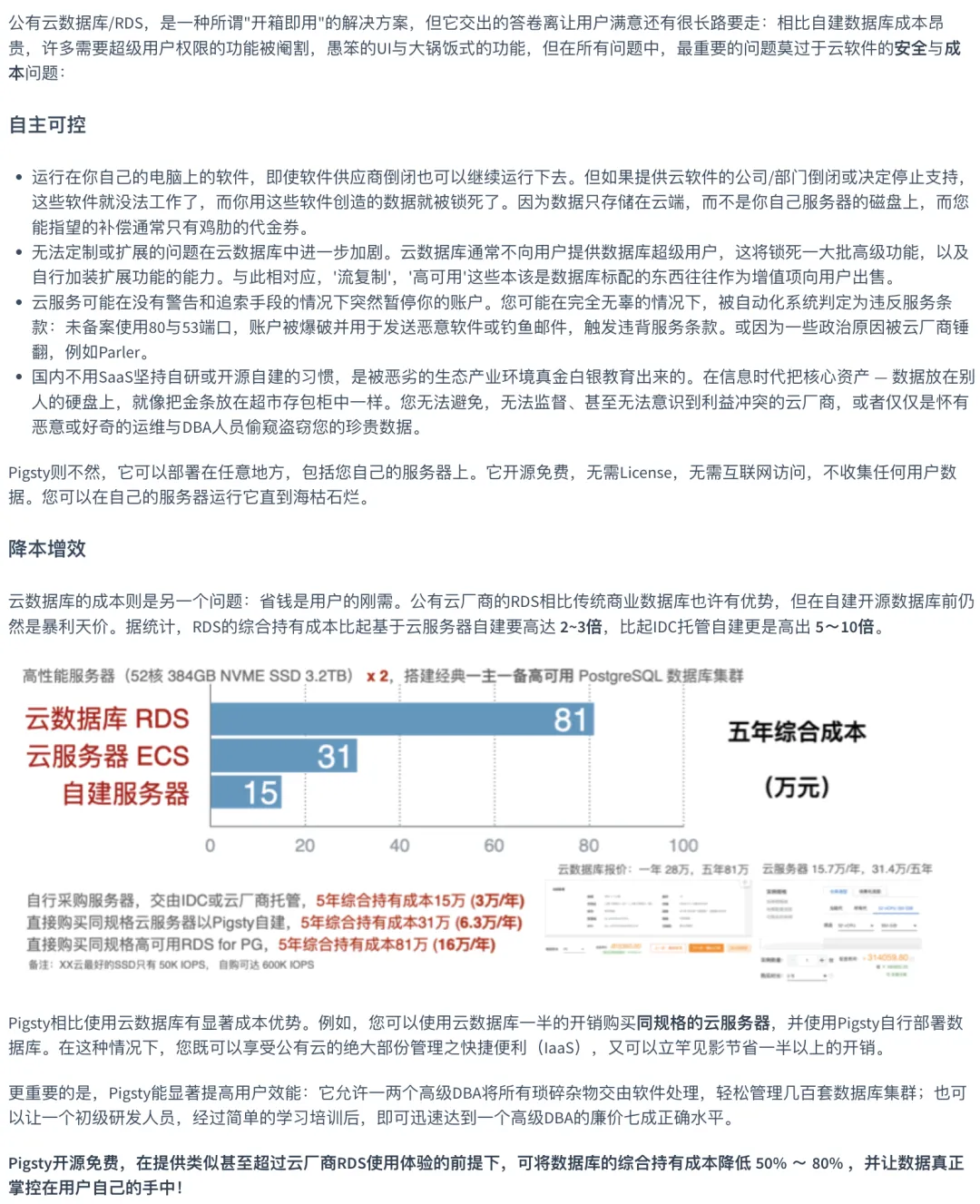

云数据库是一门非常有利可图的生意:可以将成本不到 20¥/核·月的硬件算力卖出十倍到几十倍的溢价,轻松实现 50% - 70% 甚至更高的毛利率。

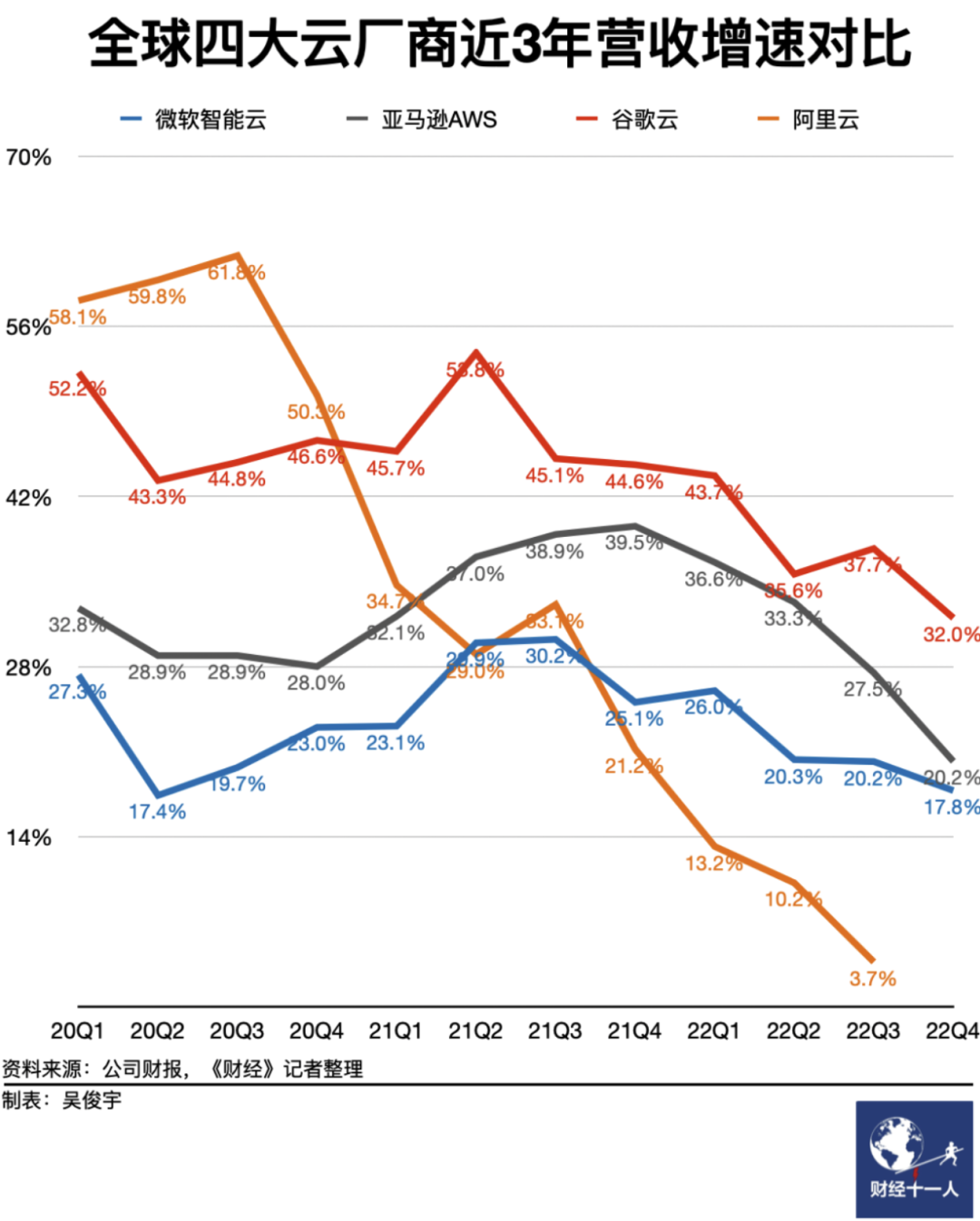

然而,随着硬件遵循摩尔定律发展,云管控软件出现开源平替,这个生意面临着严峻的挑战:云数据库服务丧失了性价比,而下云自建开始成为趋势。

云数据库是天价预制菜,如何理解?

你在家用微波炉加热黄焖鸡米饭料理包花费10元,餐馆老板替你用微波炉加热装碗上桌收费30元,你不会计较什么,房租水电人工服务也都是要钱的。 但如果现在老板端出同样一碗饭跟你收费 1000 元并说:我们提供的不是黄焖鸡米饭,而是可靠质保的弹性餐饮服务,反正十年前就这个价, 你会不会有削一顿这个老板的冲动? 这样的事情就发生在云数据库,以及其他各种云服务上。

对于规模以上的大型算力与大型存储来说,云服务的价格只能用离谱来形容:云数据库的溢价倍率可以达到十几倍到几十倍。 而作为一门生意,云数据库的毛利率可以轻松达到 50% - 70%,与苦哈哈卖资源的 IaaS (10% - 15%) 形成鲜明对比。 不幸地是,云服务并没有提供与高昂价格相对应的优质服务:云数据库服务的质量,安全,性能表现也并不尽人意。

更严峻的问题在于:随着硬件遵循摩尔定律发展,以及云管控软件出现开源平替,云数据库的模式正面临着严峻的挑战: 云数据库服务丧失了性价比,而下云自建开始成为趋势。

云数据库是什么?

云数据库,就是云上的数据库服务,这是一种软件交付的新兴范式:用户不是“拥有软件“,而是“租赁服务”。

与云数据库概念对应的是传统商业数据库(如 Oracle,DB2,SQL Server)与开源数据库(如 PostgreSQL,MySQL)。 这两种交付范式的共同特点是,软件是一种“产品”(数据库内核),用户“拥有”软件的副本,买回来/免费下载下来运行在自己的硬件上;

而云数据库服务(AWS/阿里云/…… RDS)通常会将软硬件资源打成包,把跑在云服务器上的开源数据库内核包装成“服务”: 用户通过云平台提供的数据库 URL 访问并使用数据库服务,并通过云厂商自研的管控软件(平台/PaaS)管理数据库。

数据库软件交付有哪几种范式?

最初,软件吞噬世界,以 Oracle 为代表的商业数据库,用软件取代了人工簿记,用于数据分析与事务处理,极大地提高了效率。不过 Oracle 这样的商业数据库非常昂贵,一核·一月光是软件授权费用就能破万,不是大型机构都不一定用得起,即使像壕如淘宝,上了量后也不得不”去O“。

接着,开源吞噬软件,像 PostgreSQL 和 MySQL 这样”开源免费“的数据库应运而生。软件开源本身是免费的,每核每月只需要几十块钱的硬件成本。大多数场景下,如果能找到一两个数据库专家帮企业用好开源数据库,那可是要比傻乎乎地给 Oracle 送钱要实惠太多了。

开源软件带来了巨大的行业变革,可以说,互联网的历史就是开源软件的历史。尽管如此,开源软件免费,但 专家稀缺昂贵。能帮助企业 用好/管好 开源数据库的专家非常稀缺,甚至有价无市。某种意义上来说,这就是”开源“这种模式的商业逻辑:免费的开源软件吸引用户,用户需求产生开源专家岗位,开源专家产出更好的开源软件。但是,专家的稀缺也阻碍了开源数据库的进一步普及。于是,“云软件”出现了。

然后,云吞噬开源。公有云软件,是互联网大厂将专家使用开源软件的能力产品化对外输出的结果。公有云厂商把开源数据库内核套上壳,包上管控软件跑在托管硬件上,并雇佣共享 DBA 专家提供支持,便成了云数据库服务 (RDS) 。云诚然是有价值的服务,也为很多软件变现提供了新的途径。但云厂商的搭便车行径,无疑对开源软件社区是一种剥削与攫取,而云计算罗宾汉们,也开始集结并组织反击。

经典商业数据库 Oracle,DB2, SQL Server 都卖得很贵,云数据库为什么不能卖高价?

商业软件时代,可以称为软件 1.0 时代,数据库中以 Oracle,SQL Server,IBM 为代表,价格其实非常高昂。

问:你觉得卖的贵,我要 Argue 一下,这不是正常的商业逻辑吗?

卖的贵不是大问题 —— 有只要最好的东西,根本不看价格的客户。然而问题在于云数据库不够好,第一:内核是开源 PG / MySQL,实际上自己做的就是个管控。而且在其营销却宣传中,好像是包治百病的万灵药,存算分离,Serverless,HTAP,云原生,超融合…,RDS 是先进的汽车,而老的数据库是马车……,blah

问:如果不是马车和汽车,那应该是什么?

区别最多算油车和电车,阐述数据库行业与汽车行业的类比。数据库:汽车;DBA:司机;商业数据库:品牌汽车;开源数据库:组装车;云数据库:出租车+出租司机,嘀嘀打车;这种模式是有其适用光谱的。

问:云数据库的适用光谱?

起步阶段,流量极小的简单应用 / 2 毫无规律可循,大起大落的负载 / 3 全球化出海合规场景 ,租售比。小微企业别折腾,上云(但上什么云值得商榷),大企业毫无疑问,下云。更务实的做法是买基线,租尖峰,混合云 —— 主体下云,弹性上云。

问:这么看来,云计算其实是有它的价值与生态位的。

-

《科技封建主义》,垄断巨头对生态造成的伤害。 / 2. 云营销,吹牛是要上税的。

-

抛开宏大叙事不谈,但云数据库的费用可不便宜。…… (弹性/百公里加速),引出成本问题。

为什么有此一说?为什么会觉得贵?

让我们用几个具体的例子来说明。

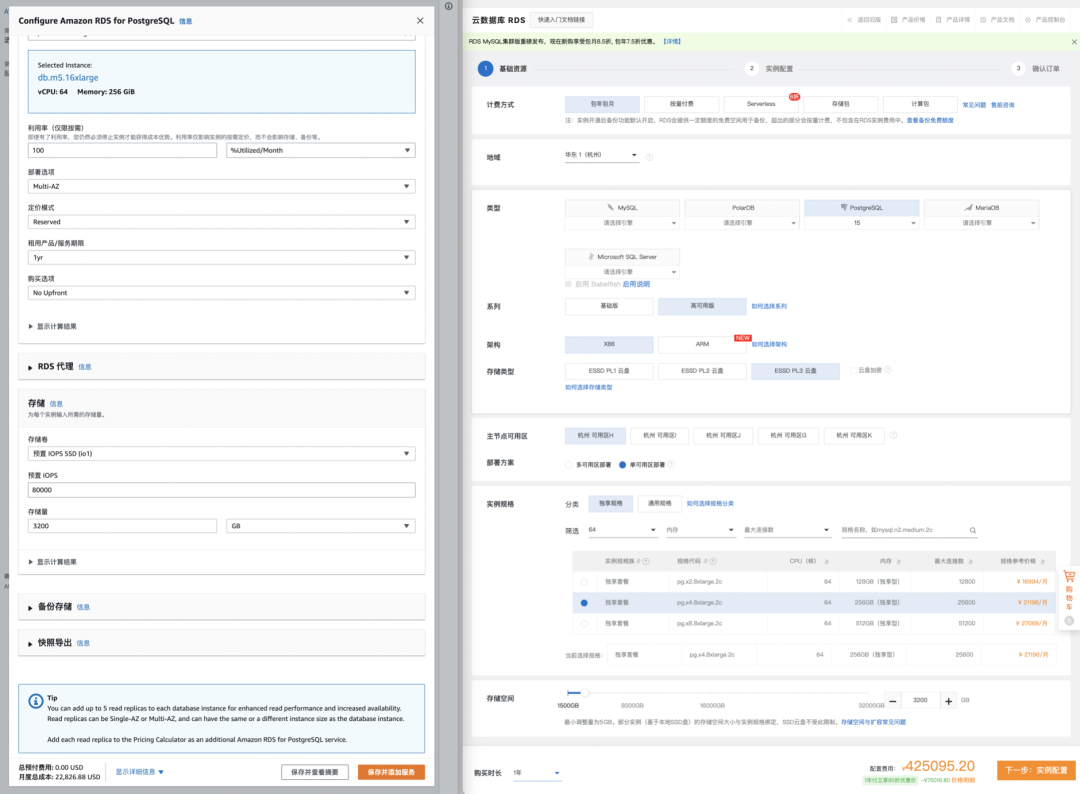

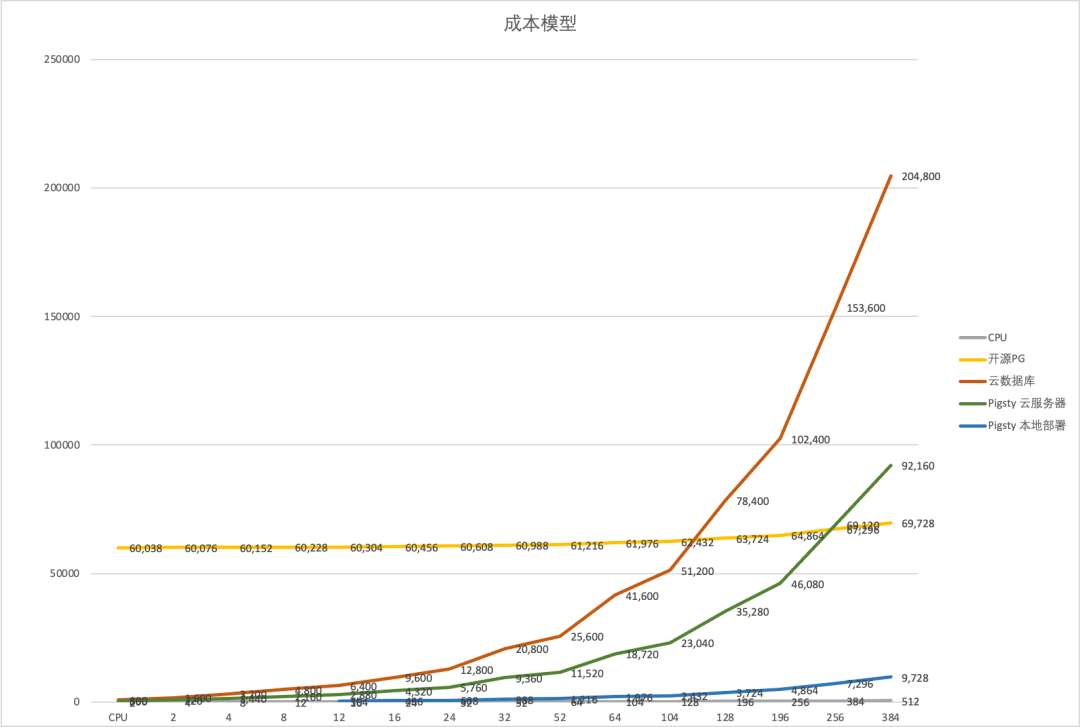

例如在探探时,我们曾经评估过上云后的成本。我们用的服务器整体 TCO 在

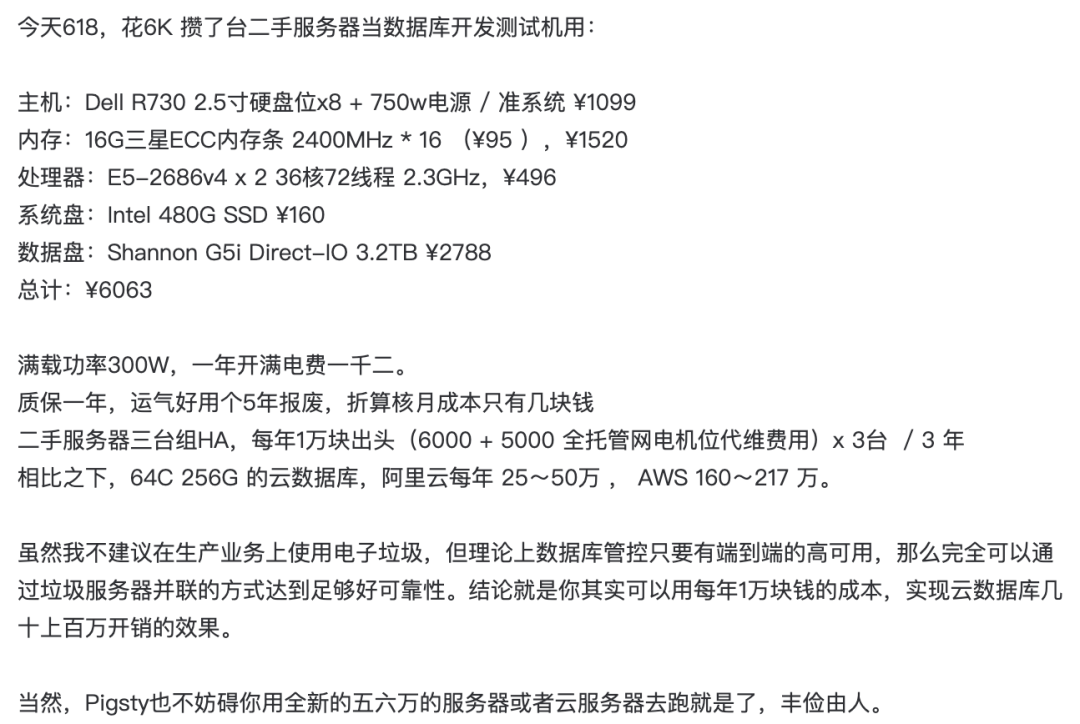

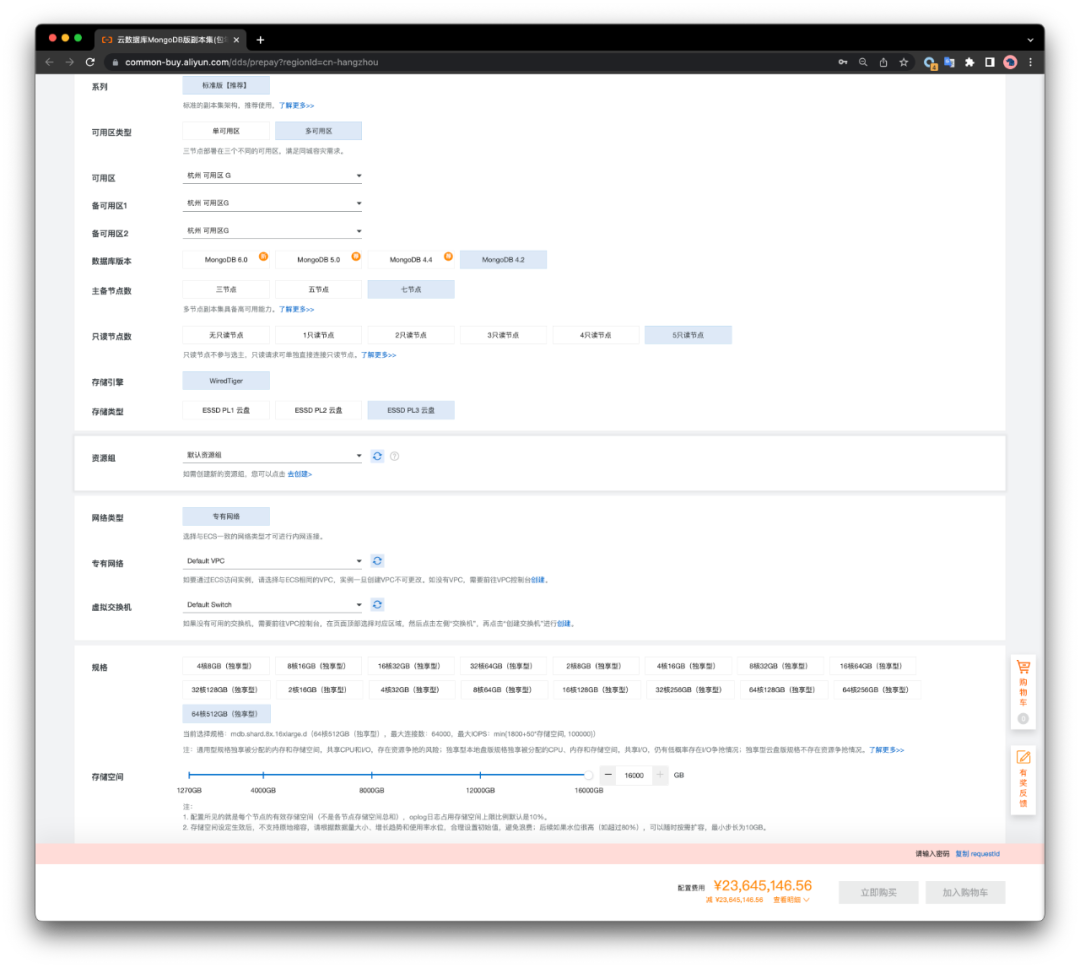

,一台是……5年7.5万,每年TCO 1.5w。两台组个高可用,就是每年3万块钱,阿里云华东1默认可用区,独享的64核256GB实例:pg.x4m.8xlarge.2c,并加装一块3.2TB的ESSD PL3云盘。每年的费用在25万(3年)到75万(按需)不等。 AWS 总体在每年160 ~ 217万元不等。

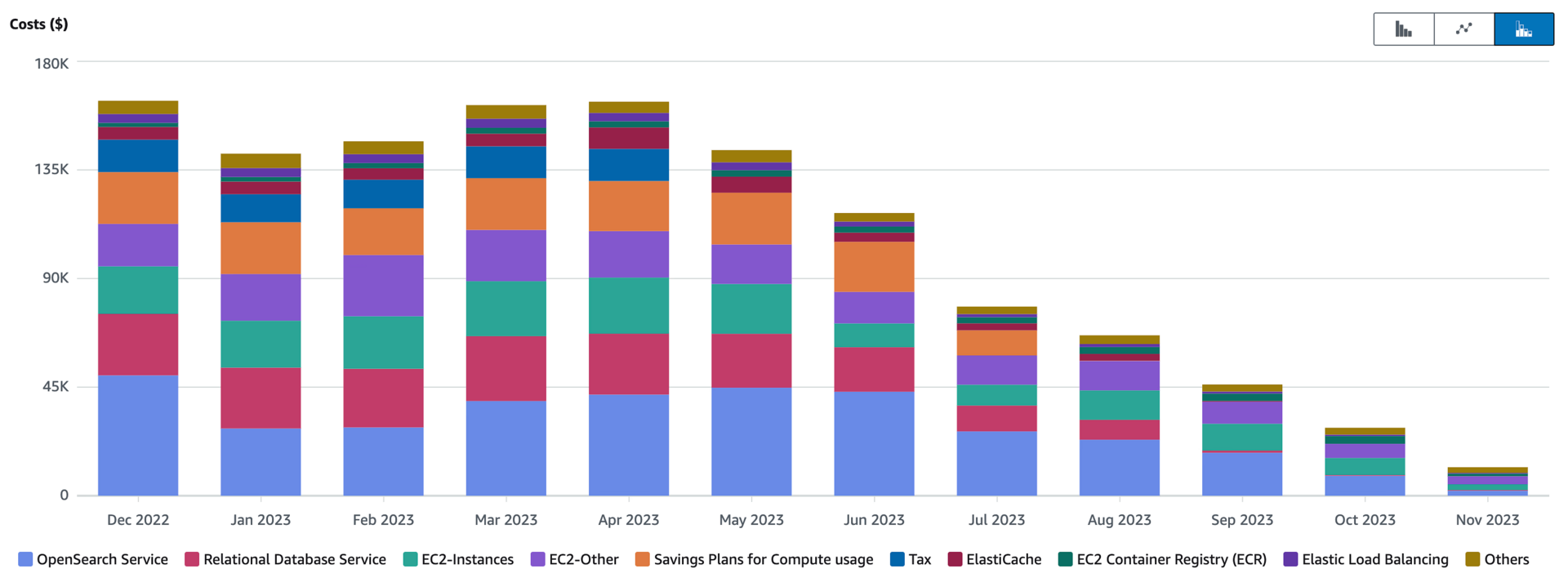

不只是我们,Ruby on Rails 的作者 DHH 在 2023 年分享了他们 37 Signal 公司从云上下来的完整历程。

介绍 DHH 下云的例子,每年 300w 美元年消费。一次性投入60万美元买了服务器自己托管后,年支出降到了 100 万美元,原来的1/3 。五年能省下 700 万美金。下云花了半年时间,也没有使用更多的人手来运营。

特别是考虑到开源替代的出现 ——

德不配位,必有灾殃,

字面意思:用云数据库,实际上是用五星级酒店米其林餐厅的价格,吃大食堂大锅饭预制菜料理包。

例如在 AWS 上,如果你想购买一套高规格的 PostgreSQL 云数据库实例,通常需要你要掏出对应云服务器十倍以上的价钱,考虑到云服务器本身有 5 倍左右的溢价,云服务相比规模自建

RDS带来的数据库范式转变

上期云计算泥石流,我们聊到了老罗在交个朋友淘宝直播间“卖云”:先卖着扫地机器人,然后姗姗来迟的老罗照本宣科念台词卖了四十分钟”云“,随即画风一转,马不停蹄地卖起了 高露洁无水酵素牙膏。这很明显是一场失败的直播尝试:超过千家企业在直播间下单了云服务器,100 ~ 200 块的云服务器客单价加上每家限购一台,也就是撑死了二十万的营收,说不定还没有罗老师出场费高。

我写了一篇文章《罗永浩救不了牙膏云》揶揄直播卖虚拟机的阿里云是个牙膏云,然后我的朋友瑞典马工马上写了一篇《牙膏云?您可别吹捧云厂商了》驳斥说:“任何一家本土云厂商都不配牙膏云这个称号。从利润率,到社会价值,到品牌管理,质量管理和市场教育,公有云厂商们都被牙膏厂全方面吊打”。

云数据库是什么,是一种软件范式转移吗?

最初,软件吞噬世界,以 Oracle 为代表的商业数据库,用软件取代了人工簿记,用于数据分析与事务处理,极大地提高了效率。不过 Oracle 这样的商业数据库非常昂贵,一核·一月光是软件授权费用就能破万,不是大型机构都不一定用得起,即使像壕如淘宝,上了量后也不得不”去O“。

接着,开源吞噬软件,像 PostgreSQL 和 MySQL 这样”开源免费“的数据库应运而生。软件开源本身是免费的,每核每月只需要几十块钱的硬件成本。大多数场景下,如果能找到一两个数据库专家帮企业用好开源数据库,那可是要比傻乎乎地给 Oracle 送钱要实惠太多了。

开源软件带来了巨大的行业变革,可以说,互联网的历史就是开源软件的历史。尽管如此,开源软件免费,但 专家稀缺昂贵。能帮助企业 用好/管好 开源数据库的专家非常稀缺,甚至有价无市。某种意义上来说,这就是”开源“这种模式的商业逻辑:免费的开源软件吸引用户,用户需求产生开源专家岗位,开源专家产出更好的开源软件。但是,专家的稀缺也阻碍了开源数据库的进一步普及。于是,“云软件”出现了。

然后,云吞噬开源。公有云软件,是互联网大厂将自己使用开源软件的能力产品化对外输出的结果。公有云厂商把开源数据库内核套上壳,包上管控软件跑在托管硬件上,并雇佣共享 DBA 专家提供支持,便成了云数据库服务 (RDS) 。这诚然是有价值的服务,也为很多软件变现提供了新的途径。但云厂商的搭便车行径,无疑对开源软件社区是一种剥削与攫取,而捍卫计算自由的开源组织与开发者自然也会展开反击。

云数据库是不是天价大锅饭

问:我们先来聊聊第一个问题,成本,成本不是云数据库所宣称的一项优势吗?

看和谁比,和传统商业数据库 Oracle 比可以,和开源数据库比就不行了 —— 特别是小规模可以(DBA),有点规模就不行了。

问:云是不是可以省下DBA/数据库专家的成本?

是的,好DBA稀缺难找。但用云数据库不代表你就不需要DBA了,你只是省去了系统建设的工作与日常运维性的工作,还有省不掉的部分。第二,我们可以具体算一笔账,在什么规模下,雇佣一个 DBA 相比云数据库是合算的。(聊一聊几种价格的模型)

问:RDS 和DBA 是什么关系?

RDS 和 DBA 提供的核心价值不是数据库产品,而是用好开源数据库内核的能力。…… 只不过一个主要靠DBA老司机,一个主要靠管控软件。一个是雇佣,一个是租赁。我觉得生态里还缺一种模式 —— 拥有管控软件,所以我做的东西就是开源的数据库管控软件。

问:所以云数据库成本上不占优势?

极小规模有优势,标准尺寸或者大规模数据库没有任何成本优势。

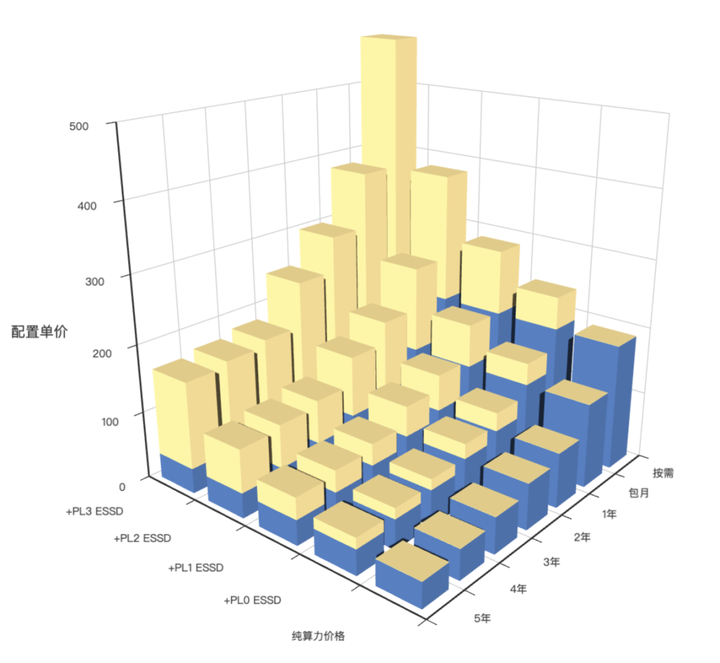

比较成本你要看怎么比。云数据库的计费项:计算+存储,当然还有流量费,数据库代理费,监控费用,备份费用。

大头是计算与存储,计算的单位是……,存储的单位是……(一些关键数字)

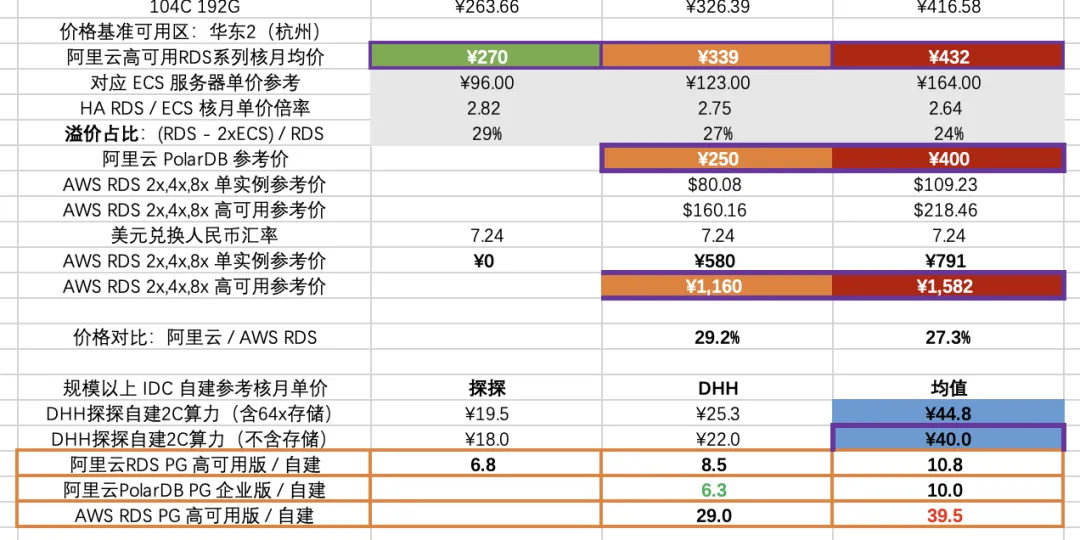

问:实例部分的钱怎么算?

阿里 RDS: 7x-11x,PolarDB: 6x~10x,AWS: 14x ~ 22x

| 双实例高可用版价格 | 4x 核月单价 | 8x 核月单价 |

|---|---|---|

| 高可用RDS系列核月均价 | ¥339 | ¥432 |

| AWS RDS 高可用参考价 | ¥1,160 | ¥1,582 |

| 阿里云 PolarDB 参考价 | ¥250 | ¥400 |

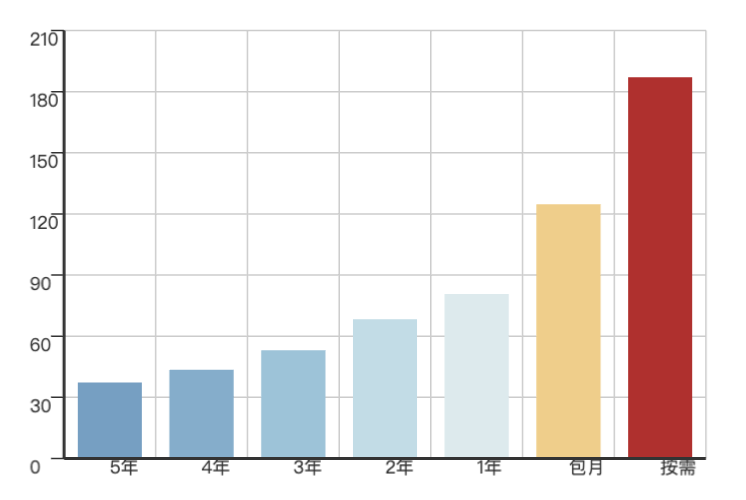

| DHH探探自建1C算力(不含存储) | ¥40 |

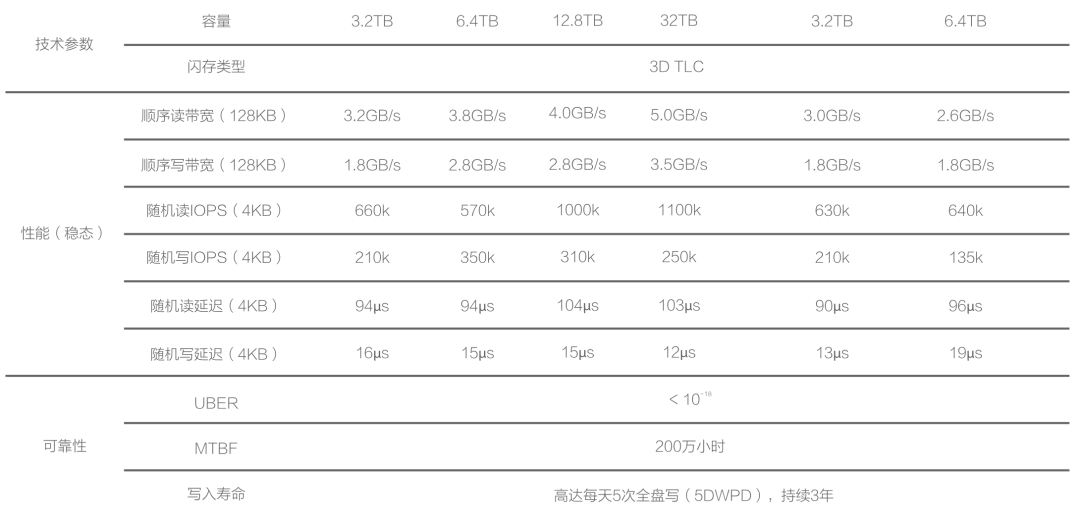

云服务器,云上按量,包月,包年,预付五年的单价分别为 187¥,125¥,81¥,37¥,相比自建的 20¥ 分别溢价了 8x, 5x, 3x, 1x。在配置常用比例的块存储后(1核:64GB, ESSD PL3),单价分别为:571¥,381¥,298¥,165¥,相比自建的 22.4¥ 溢价了 24x, 16x, 12x, 6x 。

问:存储部分的钱怎么算?

先来看零售单价,GB·月单价 ,两分钱,阿里云 ESSD 上分了几个不同的档次,从 1- 4块钱。

1TB 存储·月价格(满折扣):自己买 16 ,AWS 1900,阿里云 3200

问:上面聊了很多成本的问题,但你怎么能盯着成本呢?成本到底有多重要?

在你技术与产品有领先优势的情况下,成本没那么重要。但当技术与产品拉不开差距的时候,即 —— 你卖的是没有不可替代性的大路货标准品,成本就非常重要了。在十年前,云数据库也许是属于产品/技术主导的状态,可以心安理得的吃高毛利。但是在十年后的今天。云不是高科技了,云已经烂大街了。市场已经从价值定价转向了成本定价,成本至关重要。

阿里的主营业务电商,被“廉价”的拼多多打的稀里哗啦,拼多多靠的是什么?就是一个朴实无华的便宜。你淘宝天猫能卖的,我能卖一样还更便宜,这就是核心竞争力。你又不是爱马仕,劳力士,买个包买个表都要配几倍货才卖给你的奢侈品逻辑,在老罗直播间里夹在牙膏和吸尘器中间的大路货云服务器能拼什么,还不是一个便宜?

问:那么什么时候成本不重要呢?

第二个点是经济上行繁荣期,创业公司拼速度的发展期,扣成本可能为时过早。但现在很明显,是经济下行萧条期……。再比如说,如果你的东西足够好,那么用户也可以不在意成本。就好比你去五星级酒店,米其林餐厅吃饭,不会在意他们用的食材是多少成本;对吧,OpenAI ChatGPT 别无分号,仅此一家,爱买不买。但是,去菜市场买菜,那是是会看成本的。云数据库,云服务器,云盘,都是“食材”,而不是菜品,是要核算成本,比价的。(黄焖鸡米饭的故事)

质量安全效率成本剖析核算

问:性价比里的价格成本聊透了,那我们来聊聊质量、安全、效率

云数据库很贵,所以在卖的时候都有一些话术。虽然我们贵,但是我们好呀!数据库是基础软件里的皇冠明珠,凝聚着无数无形知识产权BlahBlah。因此软件的价格远超硬件非常合理…… 但是,云数据库真的好吗?

问:在功能上,云数据库怎么样?

只能 OLTP 的 MySQL 咱就不说了,但是 RDS PostgreSQL 还是可以聊一聊的。尽管 PostgreSQL 是世界上最先进的开源关系型数据库,但其独一无二的特点在于其极致可扩展性,与繁荣的扩展生态!不幸地是,《云 RDS 阉割掉了 PostgreSQL 的灵魂》 —— 用户无法在 RDS 上自由加装扩展,而一些强力扩展也注定不会出现在 RDS 中。使用 RDS 无法发挥出 PostgreSQL 真正的实力,而这是一个对云厂商来说无法解决的缺陷。

问:在功能扩展上,云上的 PostgreSQL 数据库有什么缺陷?

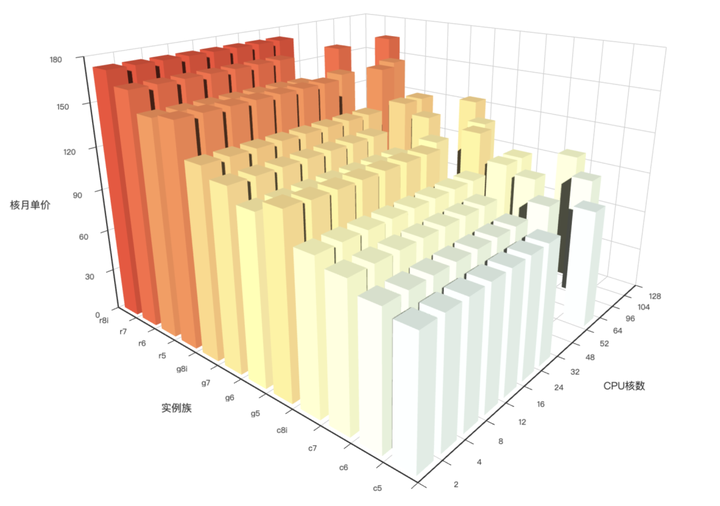

Contrib 模块作为 PostgreSQL 本体的一部分,已经包含了 73 个扩展插件,在 PG 自带的 73 个扩展中,阿里云保留了23个阉割了49个;AWS 保留了 49 个,阉割了 24 个。PostgreSQL 官方仓库 PGDG 收录的约 100 个扩展,Pigsty 作为PG发行版自身维护打包了20个强力扩展插件,在 EL/Deb 平台上总共可用的扩展已经达到了 234 个 —— AWS RDS 只提供了 94 个扩展,阿里云 RDS 提供了 104 个扩展。

在重要的扩展中,情况更严重。AWS与阿里云缺失的扩展有:(时序 TimescaleDB,分布式 Citus,列存 Hydra,全文检索 BM25,OLAP PG Analytics,消息队列 pgq,甚至一些基本的重要组件都没有提供,比如做 CDC 的 WAL2JSON),版本跟进跟进速度也不理想。

问:云数据库为什么不能提供这些扩展?

云厂商的口径是:安全性,稳定性,但这根本说不通。云上的扩展都是从 PostgreSQL 官方仓库 PGDG 下载打好包,测试好的 rpm / deb 包来用的。需要云厂商测试什么东西?但我认为更重要的一个问题是开源协议的问题,AGPLv3 带来的挑战。开源社区面对云厂商白嫖的挑战,已经开始集体转向了,越来越多的开源软件使用更为严格,歧视云厂商的软件许可证。比如 XXX 都用 AGPL 发布,云厂商就不可能提供,否则就要把自己的摇钱树 —— 管控软件开源。

这个我们后面可以单独开一期来聊这个事情。

问:上面提到了安全性的问题,那么云数据库真的安全吗?

1、多租户安全挑战(恶意的邻居,Kubecon 案例);2. 公网的更大攻击面(SSH爆破,SHGA);

3、糟糕的工程实践(AK/SK,Replicator密码,HBA修改); 4 没有保密性、完整性兜底。

5、缺乏可观测性,因此难以发现安全问题,更难取证,就更别提追索了。

问:云数据库的可观测性一团稀烂,怎么说?

信息、数据、情报对于管理来说什么至关重要。但是云上提供的这个监控系统吧,质量只能说一言难进。早在 2017 年的时候我就调研过世面上所有 PostgreSQL 数据库监控系统 …… 指标数量,图表数量,信息含量。可观测性理念,都一塌糊涂。监控的颗粒度也很低(分钟级),想要5秒级别的?不好意思请加钱。

AustinDatabase 号主今天刚发了一篇《上云后给我一个 不裁 DBA的理由》聊到这个问题:在阿里云上想开 Ticket 找人分析问题,客服会疯狂给你推荐 DAS(数据库自动驾驶服务),请加钱,每月每实例 6K 的天价。

没有足够好的监控系统,你怎么定责,怎么追索?(比如硬件问题,超卖,IO抢占导致的性能雪崩,主从切换,给客户造成了损失)

问:除了安全和可观测性的问题,相当一部分用户更在意的是质量可靠性

云数据库并不提供对可靠性兜底,没有SLA 条款兜底这个。

只有可用性的 SLA ,还是很逊色的 SLA,赔付比例跟玩一样。 营销混淆:将 SLA 混淆为真实可靠性战绩。

乞丐版的标准版数据库甚至连 WAL 归档与 PITR 都没有,就单纯给你回滚到特定备份,用户也没有能力自助解决问题。

著名的双十一大故障,草台班子理论,降本增笑。中亭团伙……可观测性团队的草台班子,都是刚毕业的在维护。

业务连续性上的战绩并不理想:RTO,RPO ,嘴上说的都是 =0 =0,实际…… 。腾讯云硬盘故障导致初创公司数据丢失的案例。

问:云数据库真的好吗?(性能维度)

我们先来聊一聊性能吧。刚才聊了云盘的价格,没说云盘的性能,EBS 块存储的典型性能,IOPS,延迟,本地盘。更重要的是这个高等级的云盘还不是你想用就给你用的。如果你买的量低于1.2TB,他们是不卖给你 ESSD PL3 的。而次一档的 ESSD PL2 的 IOPS 吞吐量就只有 ESSD PL3 的 1/10 。

第二个问题是资源利用率。RDS 管控 2GB 的管控……,什么都不干内存吃一半。Java管控,日志Agent。

高可用版的云数据库,有个备库,但是不给你读。吃你一倍的资源对不对,你想要只读实例要额外单独申请。

最后,资源利用率提高的是云厂商白赚,好处让云厂商赚走了,代价让用户承担了。

问:其他的点呢?比如可维护性?

每个操作要发验证码短信,100套 PostgreSQL 集群怎么办?ClickOps小农经济,企业用户和开发者真正的正道是 IaC,但是在这一点上比较拉垮。K8S Pigsty 都做的很好,原生内置了 IaC。RPA 机器人流程自动化。糟糕的API 设计,举个例子,几种不同风格的错误代码,实例状态表,(驼峰法,蛇形法,全大写,两段式)体现出了低劣的软件工程质量水平。

业务连续性,RTO RPO,比如做 PITR 是通过创建一个新的按量付费的实例来实现的。那原来的实例怎么办?怎么回滚?怎么保障恢复的时长?这种合格DBA都应该知道的东西,云RDS 为什么不知道?

云数据库有没有啥出彩之处?

问:云数据库就没有一些做的出色的地方吗?

有,弹性,公有云的弹性就是针对其商业模式设计的:启动成本极低,维持成本极高。低启动成本吸引用户上云,而且良好的弹性可以随时适配业务增长,可是业务稳定后形成供应商锁定,尾大不掉,极高的维持成本就会让用户痛不欲生了。这种模式有一个俗称 —— 杀猪盘。这个模式发展到极致就是 Serverless。 云厂商的假 Serverless。

问:还有一个与弹性经常一起说的,叫敏捷?

敏捷,以前算是云数据库的独门优势,但现在也没有了。第一,真正的 Serverless ,Neon,Supabase,Vercel 免费套餐,赛博菩萨。第二,Pigsty 管控,上线一套新数据库也是5分钟。云厂商的极致弹性,秒级扩容其实骗人的,几百秒也是秒……

问:聊聊 Serverless,这会是未来吗?为什么说这是榨钱术?

云厂商的 RDS Serverles,本质上是一种弹性计费模式,而不是什么技术创新。真正技术创新的 Serverless RDS,可以参考 Neon:

- Scale to Zero,

- 无需事先配置,直接连上去自动创建实例并使用。

RDS Serverless 是一种营销宣传骗术。只是计费模式的区别,是一个恶劣的笑话。按照云厂商的营销策略,我拿个共享的 PG 集群,来一个租户就新建一个 Database 给他,不做资源隔离随便用,然后按实际的 Query 数量或者 Query Time 收费,这也可以叫 Serverless。

然后按照这个定义,云厂商的各种产品一下子就全部都变成 Serverless 了。然后 Serverless 这个词的实质意思就被篡夺了,变成平庸无聊的计费技术。真正做的好的 Serverless ,应该去看看赛博菩萨 Cloudflare。

这里我还提一点,Serverless 说是要解决极致弹性的问题,但弹性本身其实没有多重要

问:为什么弹性不重要,传统企业如何应对弹性问题?

弹性峰值能到平时的几十上百倍,我觉得弹性有价值。否则以现在物理资源的价格,直接超配十倍也没多少钱……云厂商的弹性溢价差不多就是十几倍。大甲方的思路很清晰,有这个钱租赁,我干嘛不超配10倍。小用户用 serverless 可以理解。弹性转折点,40 QPS 。唯一场景就是那些 MicroSaaS。但那些 MicroSaas 可以直接用免费套餐的 Vercel,Neon,Supabase,Cloudflare……

传统企业如何解决?我们有 15 % 的 机器 Buffer 池,如果不够用,把低利用率的从库摘几台就够了,机器到位,PG 5分钟上线。服务器到上架IDC大概两周左右,现在 IDC 上架已经到 半天 / 一天了。

问:所以云数据库整体来看,到底怎么样?

刚才,我们已经从质量、效率、安全、成本剖析了云数据库的方方面面。基本上除了弹性,表现都很一般,而唯一能称得上出彩的弹性,其实也没他们说的那么重要。我对云数据库的总体评价就是 —— 预制菜,能不能吃?能吃,也吃不死人,但你也不要指望这种大锅饭能好吃到哪里去。

草台班子,也没有什么品牌形象。例如 IBM DeveloperWorks。 《破防了,谁懂啊家人们:记一次mysql问题排查》

下云数据库自建,如何实战!

问:什么时候应该用云数据库,什么时候不应该。或者说,什么规模应该上云,什么规模应该下云?

光谱的两端,DBaaS 替代,开源自建。经典阈值,团队水平。

平均水准的技术团队:100 ~ 300 万年消费,云上 KA。没有任何懂的人,服务器厂商给出的估算规模是1000万。

优秀的技术团队:一台物理机 ~ 一个机柜的量,下云,年消费几万到几十万。

问:小企业用数据库有什么其他选择?

Neon,Supabase,Vercel,或者在赛博佛祖 Cloudflare 上直接托管。

问:你给云数据库旷旷一顿批判,那是你站在头部甲方,顶级DBA的视角去看的。一般企业没有这个条件,怎么办?

做好三件事:管控软件的开源替代怎么解决,硬件资源的置备供给怎么解决,人怎么解决?

问:开源管控软件,怎么说?

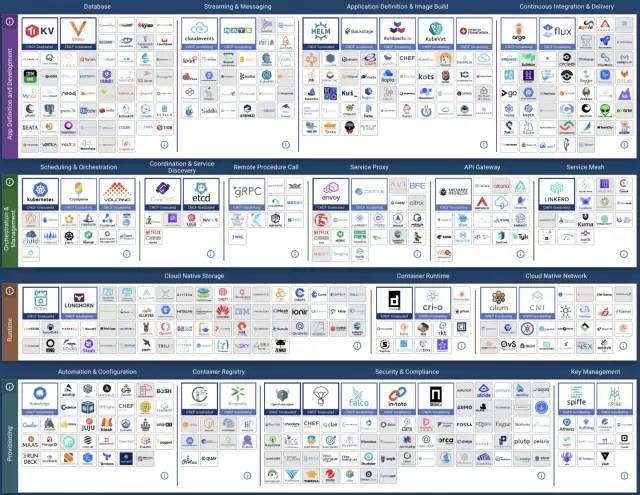

举个例子,管理服务器的开源管控软件, KVM / Proxmox / OpenStack,新一代的就是 Kubernetes。对象存储的开源平替 MinIO,或者物美价廉的 Cloudflare R2

问:硬件资源如何置备管理?

IDC:Deft,Equinix,世纪互联。IDC 也可以按月付费,人家很清楚,一点儿都不贪心,30% 的毛利明明白白。

服务器 + 30% 毛利,按月付费,机柜成本:4000 ~ 6000 ¥/月(42U 10A/20A),可放十几台服务器。网络带宽:独享带宽(通用) 100 块 / MB·月 ;专用带宽可达 20 ¥ / MB·月

你也可以考虑长租公有云厂商的云服务器,租五年的话,比 IDC 贵一倍。请选择那些带有本地 NVMe 存储的实例自建,不要使用EBS云盘。

问:还有个问题,在线的问题如何解决?云上的网络/可用区不是一般 IDC 可以解决的吧?

Cloudflare 解决问题。

问:人怎么解决,DBA ?

当下的经济形势与就业率背景,用合理的价格想要找到能用的人并不难。初级的运维与DBA遍地都是,高级的专家找咨询公司,比如我就提供这样的服务。

问:专业的人做专业的事情,从云上集中管理到云下自建,是不是一种开倒车的行为?

云厂商是不是最专业的人在做这件事,我相当怀疑。1. 云厂商摊子铺得太大,每个具体领域投入的人并不多;2. 云厂商的精英员工流失严重,自己出来创业的很不少。3. 自建绝不是开是历史倒车,而是历史前进的一个过程。历史发展本来就是钟摆式往复,螺旋式发展上升的一个过程。

问:云数据库的问题解决了,但是想要从云上下来,需要解决的问题不止数据库,其他的东西怎么办?对象存储,虚拟化,容器

留待下一期来讨论!

阿里云:高可用容灾神话破灭

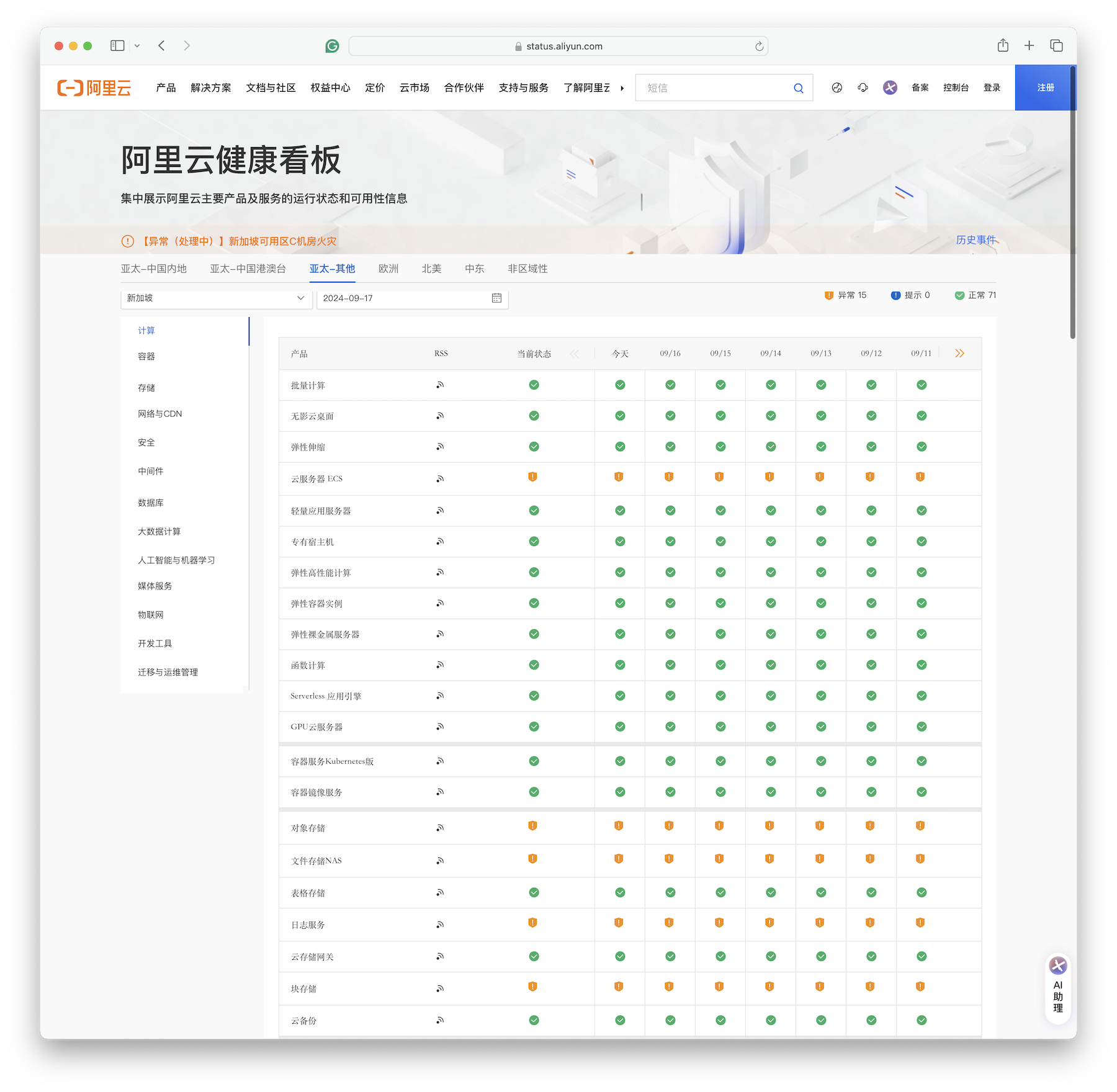

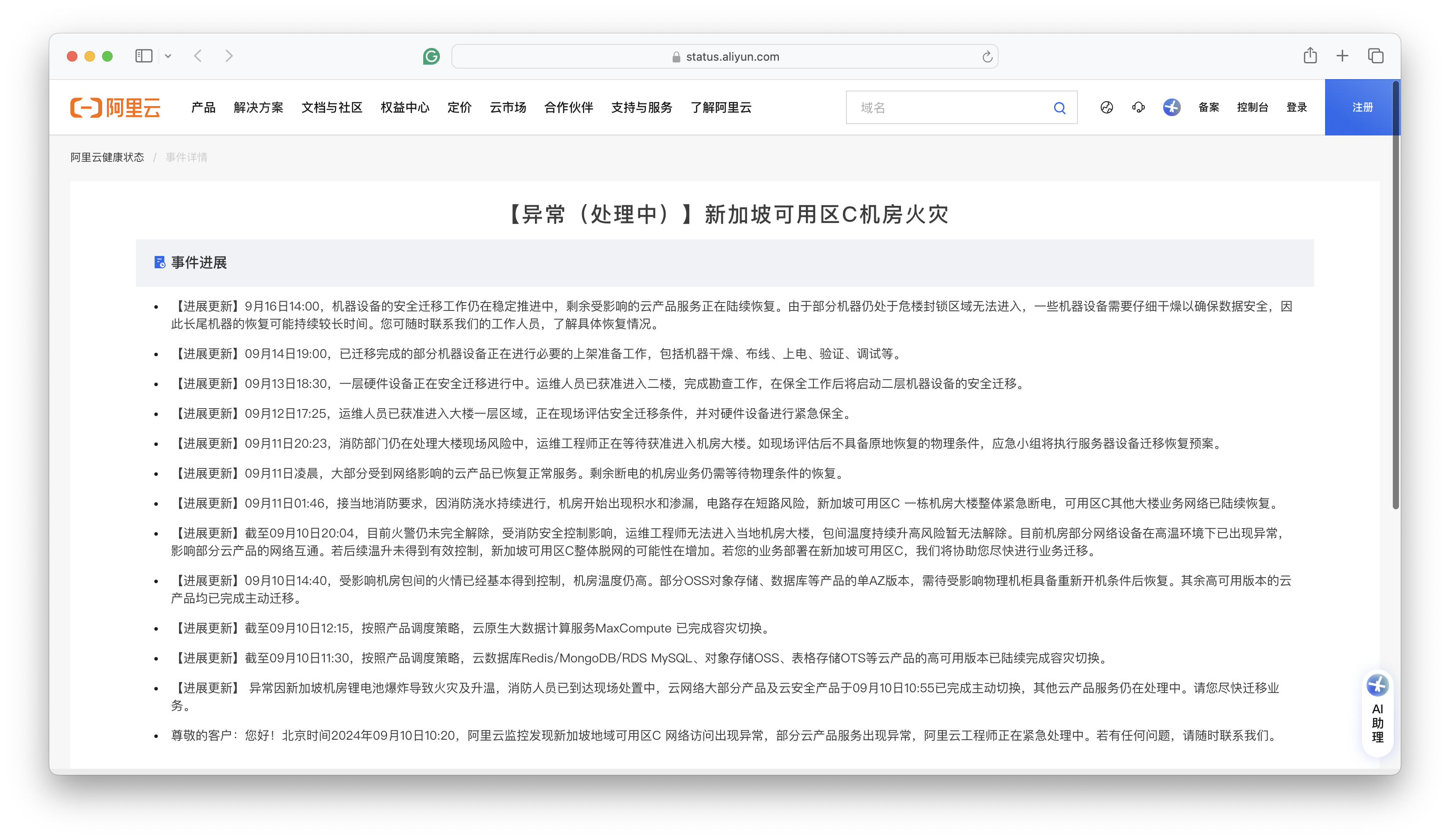

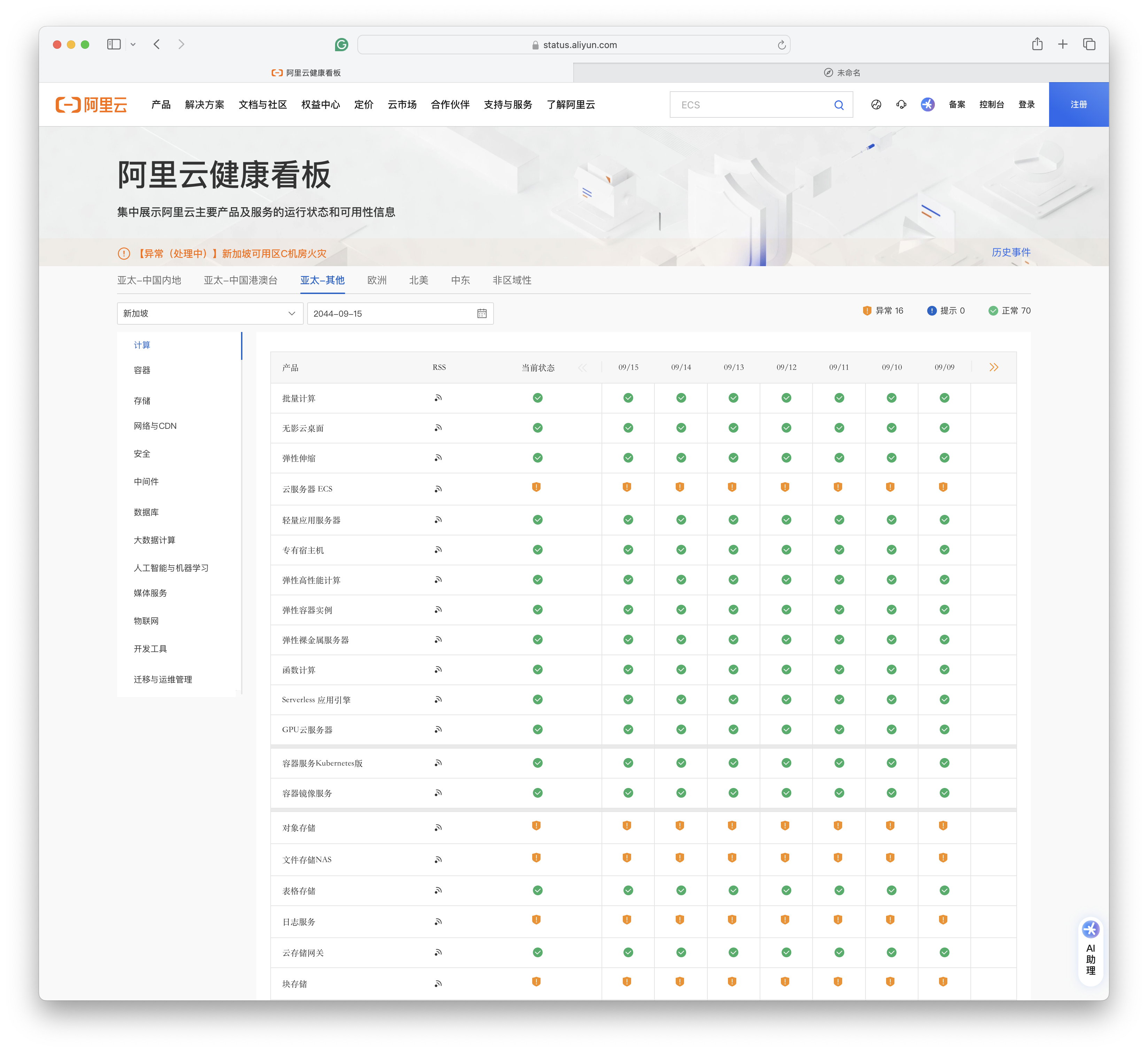

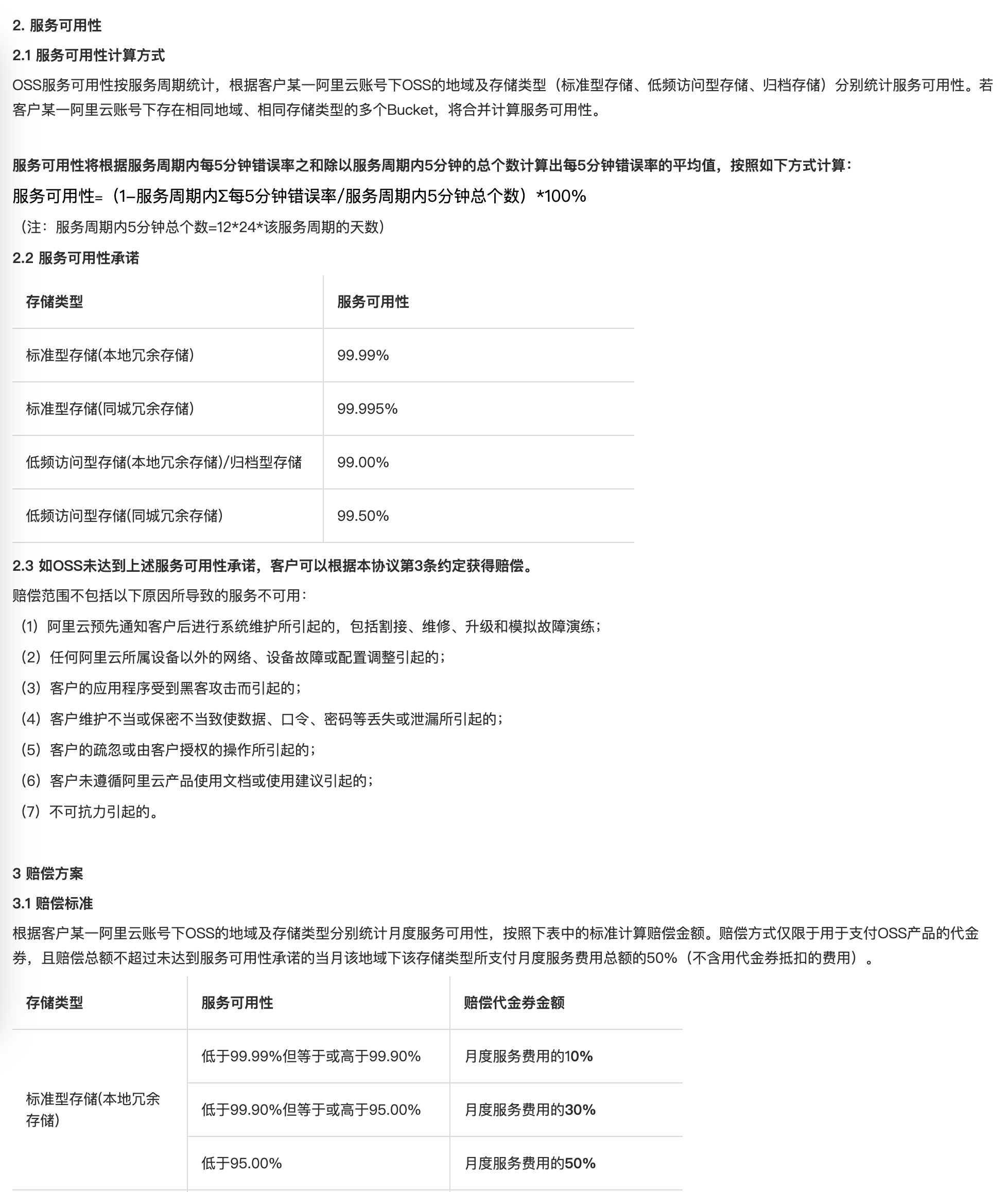

2024年9月10日,阿里云新加坡可用区C数据中心因锂电池爆炸导致火灾,到现在已经过去一周了,仍未完全恢复。 按照月度 SLA 定义的可用性计算规则(7天+/30天≈75%),服务可用性别说几个9了,连一个8都不剩了,而且还在进一步下降中。 当然,可用性八八九九已经是小问题了 —— 真正的问题是,放在单可用区里的数据还能不能找回来?

截止至 09-17,关键服务如 ECS, OSS, EBS, NAS, RDS 等仍然处于异常状态

通常来说,如果只是机房小范围失火的话,问题并不会特别大,因为电源和UPS通常放在单独房间内,与服务器机房隔离开。 但一旦触发了消防淋水,问题就大条了:一旦服务器整体性断电,恢复时间基本上要以天计; 如果泡水,那就不只是什么可用性的问题了,要考虑的是数据还能不能找回 —— 数据完整性 的问题了。

目前公告上的说法是,14号晚上已经拖出来一批服务器,正在干燥、一直到16号都没干燥完。从这个“干燥”说法来看,有很大概率是泡水了。 虽然在任何官方公告出来前,我们无法断言事实如何,但根据常理判断,出现数据一致性受损是大概率事件,只是丢多丢少的问题。 所以目测这次新加坡火灾大故障的影响,基本与 2022 年底 香港盈科机房大故障 与2023年双十一全球不可用 故障在一个量级甚至更大。

天有不测风云,人有旦夕祸福。故障的发生概率不会降为零,重要的是我们从中故障能学到什么经验与教训?

容灾实战战绩

整个数据中心着火是一件很倒霉的事,绝大多数用户除了靠异地冷备份逃生外,通常也只能自认倒霉。我们可以讨论锂电池还是铅酸电池更好,UPS电源应该怎么布局这类问题,但因为这些责备阿里云也没什么意义。

但有意义的是,在这次 可用区级故障中,标称自己为 “跨可用区容灾高可用” 的产品,例如云数据库 RDS,到底表现如何?故障给了我们一次用真实战绩来检验这些产品容灾能力的机会。

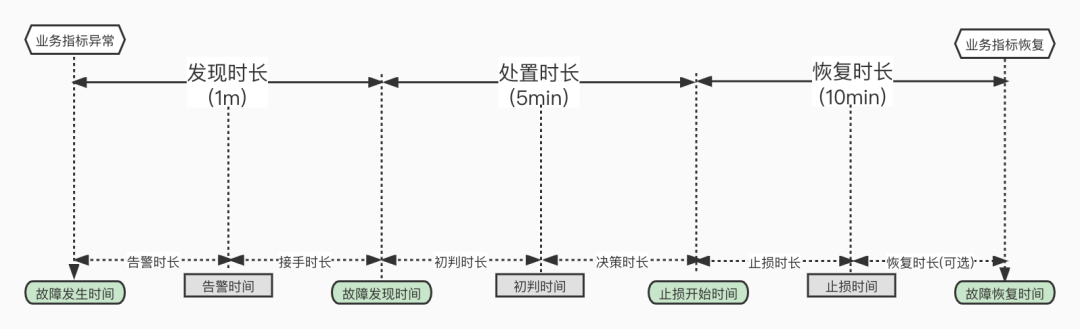

容灾的核心指标是 RTO (恢复耗时)与 RPO(数据损失),而不是什么几个9的可用性 —— 道理很简单,你可以单凭运气做到不出故障,实现 100% 的可用性。但真正检验容灾能力的,是灾难出现后的恢复速度与恢复效果。

| 配置策略 | RTO | RPO |

|---|---|---|

| 单机 + 什么也不做 | 数据永久丢失,无法恢复 | 数据全部丢失 |

| 单机 + 基础备份 | 取决于备份大小与带宽(几小时) | 丢失上一次备份后的数据(几个小时到几天) |

| 单机 + 基础备份 + WAL归档 | 取决于备份大小与带宽(几小时) | 丢失最后尚未归档的数据(几十MB) |

| 主从 + 手工故障切换 | 十分钟 | 丢失复制延迟中的数据(约百KB) |

| 主从 + 自动故障切换 | 一分钟内 | 丢失复制延迟中的数据(约百KB) |

| 主从 + 自动故障切换 + 同步提交 | 一分钟内 | 无数据丢失 |

毕竟,SLA 中的规定的几个9 可用性指标并非真实历史战绩,而是达不到此水平就补偿月消费XX元代金券的承诺。要想考察产品真正的容灾能力,还是要靠演练或真实灾难下的实际战绩表现。

然而实际战绩如何呢?在这次在这次新加坡火灾中,整个可用区 RDS 服务的切换用了多长时间 —— 多AZ高可用的 RDS 服务在 11:30 左右完成切换,耗时 70 分钟(10:20 故障开始),也就是 RTO < 70分。

这个指标相比 2022 年香港C可用区故障 RDS 切换的的 133 分钟,有进步。但和阿里云自己标注的指标(RTO < 30秒)还是差了两个数量级。

至于单可用区的基础版 RDS 服务,官方文档上说 RTO < 15分,实际情况是:单可用区的RDS都要过头七了。RTO > 7天,至于 RTO 和 RPO 会不会变成 无穷大 ∞ (彻底丢完无法恢复),我们还是等官方消息吧。

如实标注容灾指标

阿里云官方文档 宣称:RDS 服务提供多可用区容灾, “高可用系列和集群系列提供自研高可用系统,实现30秒内故障恢复。基础系列约15分钟即可完成故障转移。” 也就是高可用版 RDS 的 RTO < 30s,基础单机版的 RTO < 15min,中规中矩的指标,没啥问题。

我相信在单个集群的主实例出现单机硬件故障时,阿里云 RDS 是可以实现上面的容灾指标的 —— 但既然这里声称的是 “多可用区容灾”,那么用户的合理期待是当整个可用区故障时,RDS 故障切换也可以做到这一点。

可用区容灾是一个合理需求,特别是考虑到阿里云在最近短短一年内出现过好几次整个可用区范围的故障(**甚至还有一次全球/全服务级别的故障。

2024-09-10 新加坡可用区C机房火灾

2024-07-02 上海可用区N网络访问异常

2024-04-27 浙江地域访问其他地域或其他地域访问杭州地域的OSS、SLS服务异常

2024-04-25 新加坡地域可用区C部分云产品服务异常

2023-11-27 阿里云部分地域云数据库控制台访问异常

2023-11-12 阿里云云产品控制台服务异常 (全球大故障)

2023-11-09 中国内地访问中国香港、新加坡地域部分EIP无法访问

2023-10-12 阿里云杭州地域可用区J、杭州地域金融云可用区J网络访问异常

2023-07-31 暴雨影响北京房山地域NO190机房

2023-06-21 阿里云北京地域可用区I网络访问异常

2023-06-20 部分地域电信网络访问异常

2023-06-16 移动网络访问异常

2023-06-13 阿里云广州地域访问公网网络异常

2023-06-05 中国香港可用区D某机房机柜异常

2023-05-31 阿里云访问江苏移动地域网络异常

2023-05-18 阿里云杭州地域云服务器ECS控制台服务异常

2023-04-27 部分北京移动(原中国铁通) 用户网络访问存在丢包现象

2023-04-26 杭州地域容器镜像服务ACR服务异常

2023-03-01 深圳可用区A部分ECS访问Local DNS异常

2023-02-18 阿里云广州地域网络异常

2022-12-25 阿里云香港地域电讯盈科机房制冷设备故障

那么,为什么在实战中,单个RDS集群故障可以做到的指标,在可用区级故障时就做不到了呢?从历史的故障中我们不难推断 —— 数据库高可用依赖的基础设施本身,很可能就是单AZ部署的。包括在先前香港盈科机房故障中展现出来的 :单可用区的故障很快蔓延到了整个 Region —— 因为管控平面本身不是多可用区容灾的。

12月18日10:17开始,阿里云香港Region可用区C部分RDS实例出现不可用的报警。随着该可用区受故障影响的主机范围扩大,出现服务异常的实例数量随之增加,工程师启动数据库应急切换预案流程。截至12:30,RDS MySQL与Redis、MongoDB、DTS等大部分跨可用区实例完成跨可用区切换。部分单可用区实例以及单可用区高可用实例,由于依赖单可用区的数据备份,仅少量实例实现有效迁移。少量支持跨可用区切换的RDS实例没有及时完成切换。经排查是由于这部分RDS实例依赖了部署在香港Region可用区C的代理服务,由于代理服务不可用,无法通过代理地址访问RDS实例。我们协助相关客户通过临时切换到使用RDS主实例的地址访问来进行恢复。随着机房制冷设备恢复,21:30左右绝大部分数据库实例恢复正常。对于受故障影响的单机版实例及主备均在香港Region可用区C的高可用版实例,我们提供了克隆实例、实例迁移等临时性恢复方案,但由于底层服务资源的限制,部分实例的迁移恢复过程遇到一些异常情况,需要花费较长的时间来处理解决。

ECS管控系统为B、C可用区双机房容灾,C可用区故障后由B可用区对外提供服务,由于大量可用区C的客户在香港其他可用区新购实例,同时可用区C的ECS实例拉起恢复动作引入的流量,导致可用区 B 管控服务资源不足。新扩容的ECS管控系统启动时依赖的中间件服务部署在可用区C机房,导致较长时间内无法扩容。ECS管控依赖的自定义镜像数据服务,依赖可用区C的单AZ冗余版本的OSS服务,导致客户新购实例后出现启动失败的现象。

我建议在包括 RDS 在内的云产品应当实事求是,如实标注历史故障案例里的 RTO 和 RPO 战绩,以及真实可用区灾难下的实际表现。不要笼统地宣称 “30秒/15分钟恢复,不丢数据,多可用区容灾”,承诺一些自己做不到的事情。

阿里云的可用区到底是什么?

在这次新加坡C可用区故障,包括之前香港C可用区故障中,阿里云表现出来的一个问题就是,单个数据中心的故障扩散蔓延到了整个可用区中,而单个可用区的故障又会影响整个区域。

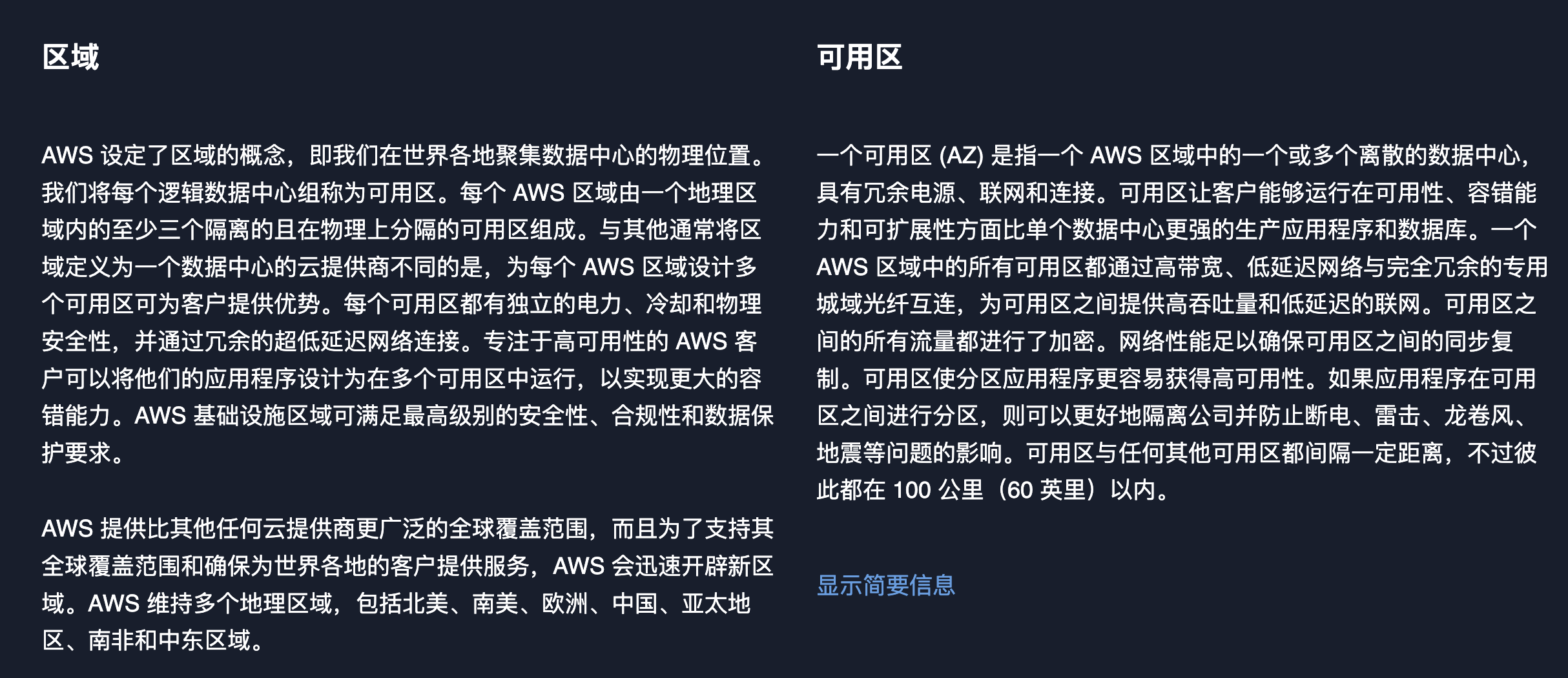

在云计算中, 区域(Region) 与 可用区(AZ) 是一个非常基本的概念,熟悉 AWS 的用户肯定不会感到陌生。 按照 AWS的说法 :一个 区域 包含多个可用区,每个可用区是一个或多个独立的数据中心。

对于 AWS 来说,并没有特别多的 Region,比如美国也就四个区域,但每个区域里 两三个可用区,而一个可用区(AZ)通常对应着多个数据中心(DC)。 AWS 的实践是一个 DC 规模控制在八万台主机,DC之间距离在 70 ~ 100 公里。这样构成了一个 Region - AZ - DC 的三级关系。

不过 阿里云 上似乎不是这样的,它们缺少了一个关键的 数据中心(DC) 的概念。 因此 可用区(AZ) 似乎就是一个数据中心,而 区域(Region) 与 AWS 的上一层 可用区(AZ)概念 对应。

把原本的 AZ 拔高成了 Region,把原本的 DC (或者DC的一部分,一层楼?)拔高成了可用区。

我们不好说阿里云这么设计的动机,一种可能的猜测是:本来可能阿里云国内搞个华北,华东,华南,西部几个区域就行,但为了汇报起来带劲(你看AWS美国才4个Region,我们国内有14个!),就搞成了现在这个样子。

云厂商有责任推广最佳实践

TBD

提供单az对象存储服务的云,可不可以评价为:不是傻就是坏?

云厂商有责任推广好的实践,不然出了问题,也还是“都是云的问题”

你给别人默认用的就是本地三副本冗余,绝大多数用户就会选择你默认的这个。

看有没有告知,没告知,那就是坏,告知了,长尾用户能不能看懂?但其实很多人不懂的。

你都已经三副本存储了,为什么不把一个副本放在另一个 DC 或者另一个 AZ ? 你都已经在存储上收取了上百倍的溢价了,为什么就不不愿意多花一点点钱去做同城冗余,难道是扣数据跨 AZ 复制的那点流量费吗?

做健康状态页请认真一点

TBD

我听说过天气预报,但从未听说过故障预报。但阿里云健康看板为我们提供了这一神奇的能力 —— 你可以选择未来的日期,而且未来日期里还有服务健康状态数据。例如,你可以查看20年后的服务健康状态 ——

未来的 “故障预报” 数据看上去是用当前状态填充的。所以当前处于故障状态的服务在未来的状态还是异常。如果你选择 20 年后,你依然可以看到当前新加坡大故障的健康状态为“异常”。

也许 阿里云是想用这种隐晦的方式告诉用户:新加坡区域单可用区里的数据已经泡汤了,Gone Forever, 乃们还是不要指望找回了。当然,更合理推断是:这不是什么故障预报,这个健康看板是实习生做的。完全没有设计 Review,没有 QA 测试,不考虑边际条件,拍拍脑袋拍拍屁股就上线了。

云是新的故障单点,那怎么办?

TBD

信息安全三要素 CIA:机密性,完整性,可用性,最近阿里都遇上了大翻车。

前有 阿里云盘灾难级BUG 泄漏隐私照片破坏机密性;

后有这次可用区故障故障,击穿多可用区/单可用区可用性神话,甚至威胁到了命根子 —— 数据完整性。

参考阅读

云计算泥石流:合订本

世人常道云上好,托管服务烦恼少。我言云乃杀猪盘,溢价百倍实厚颜。

赛博地主搞垄断,坐地起价剥血汗。运维外包嫖开源,租赁电脑炒概念。

世人皆趋云上游,不觉开销似水流。云租天价难为持,开源自建更稳实。

下云先锋把路趟,引领潮流一肩扛。不畏浮云遮望眼,只缘身在最前线。

曾几何时,“上云“近乎成为技术圈的政治正确,整整一代应用开发者的视野被云遮蔽。就让我们用实打实的数据分析与亲身经历,讲清楚公有云租赁模式的价值与陷阱 —— 在这个降本增效的时代中,供您借鉴与参考。

云基础资源篇

云商业模式篇

-

阿里云降价背后折射出的绝望【转载】

下云奥德赛篇

云故障复盘篇

RDS翻车篇

云厂商画像篇

-

牙膏云?您可别吹捧云厂商了【转载】

-

互联网技术大师速成班 【转载】

-

门内的国企如何看门外的云厂商【转载】

-

卡在政企客户门口的阿里云【转载】

-

互联网故障背后的草台班子们【转载】

-

云厂商眼中的客户:又穷又闲又缺爱【转载】

序

曾几何时,“上云“近乎成为技术圈的政治正确,整整一代应用开发者的视野被云遮蔽。DHH 以及像我这样的人愿意成为这个质疑者,用实打实的数据与亲身经历,讲清楚公有云租赁模式的陷阱。

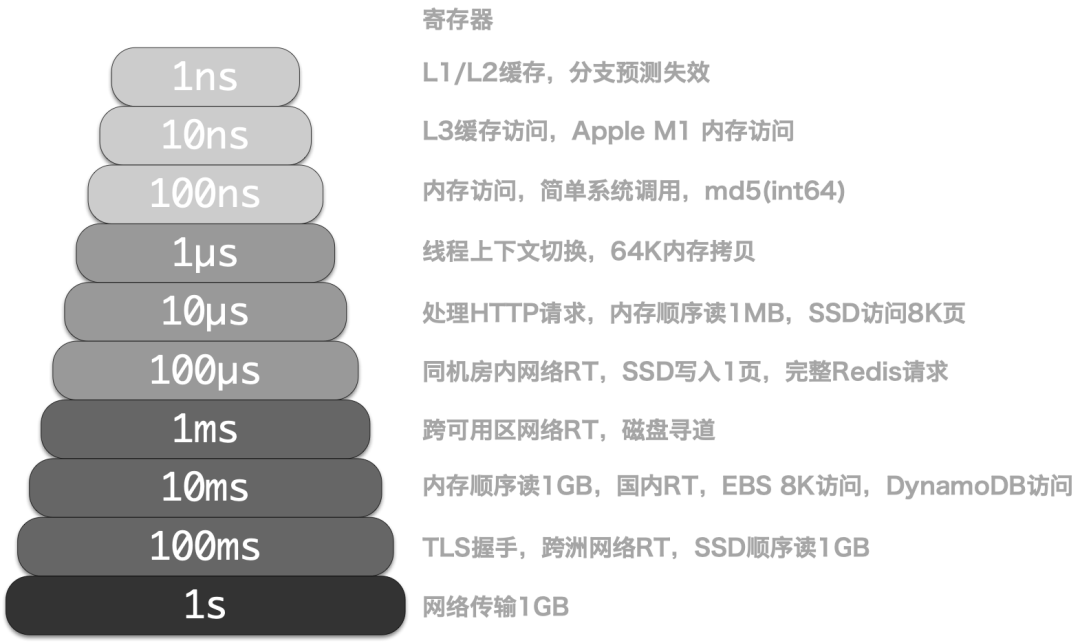

很多开发者并没有意识到,底层硬件已经出现了翻天覆地的变化,性能与成本以指数方式攀升与坍缩。许多习以为常的工作假设都已经被打破,无数利弊权衡与架构方案值得重新思索与设计。

公有云有其存在意义 —— 对那些非常早期、或两年后不复存在的公司,那些完全不在乎花钱、有着极端大起大落不规则负载的公司,对那些需要出海合规,CDN服务的公司而言,公有云仍然是非常值得考虑的选项。

然而对绝大多数已经发展起来,有一定规模与韧性,能在几年内摊销资产的公司而言,真的应该重新审视一下这股云热潮。好处被大大夸张了 —— 在云上跑东西通常和你自己弄一样复杂,却贵得离谱,我们真诚建议您好好算一下账。

最近十年间,硬件以摩尔定律的速度持续演进,IDC2.0与资源云提供了公有云资源的物美价廉替代,开源软件与开源管控调度软件的出现更是让自建能力唾手可及 —— 云下开源自建,在成本,性能,与安全自主可控上都会有显著回报。

我们提倡下云理念,并提供了实践的路径与切实可用的自建替代品 —— 我们将为认同这一结论的追随者提前铺设好意识形态与技术上的道路。不为别的,只是期望所有用户都能拥有自己的数字家园,而不是从科技巨头云领主那里租用农场。这也是一场对互联网集中化与反击赛博地主垄断收租的运动,让互联网 —— 这个美丽的自由避风港与理想乡可以走得更长。

云基础资源篇

EC2 / S3 / EBS 这样的基础资源价格,是所有云服务的定价之锚。基础资源篇的五篇文章,分别剖析了 EC2 算力,S3 对象存储,EBS 云盘,RDS 云数据库,以及 CDN 网络的方方面面:质量、安全、效率,以及大家最关心的一系列问题。

重新拿回计算机硬件的红利

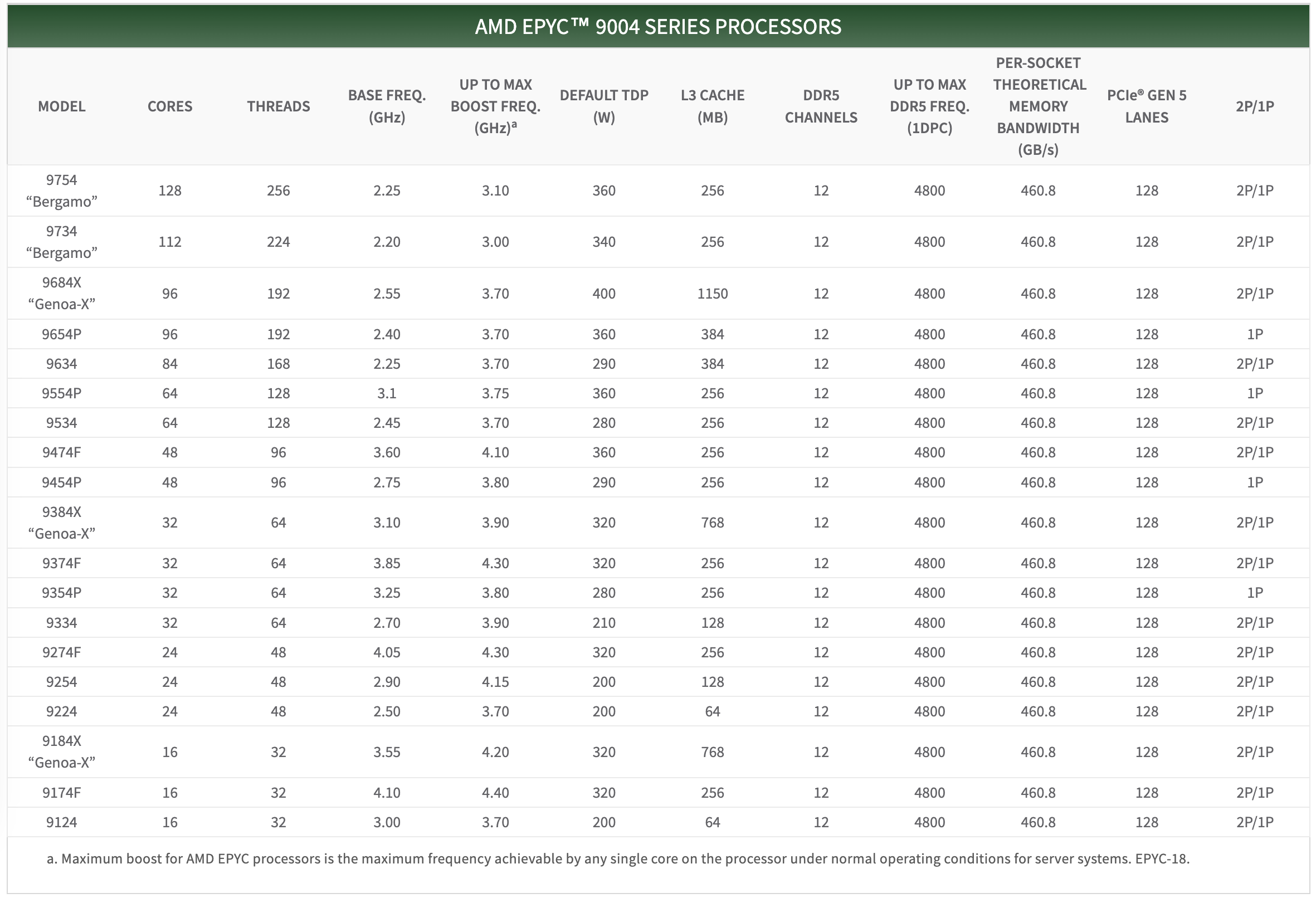

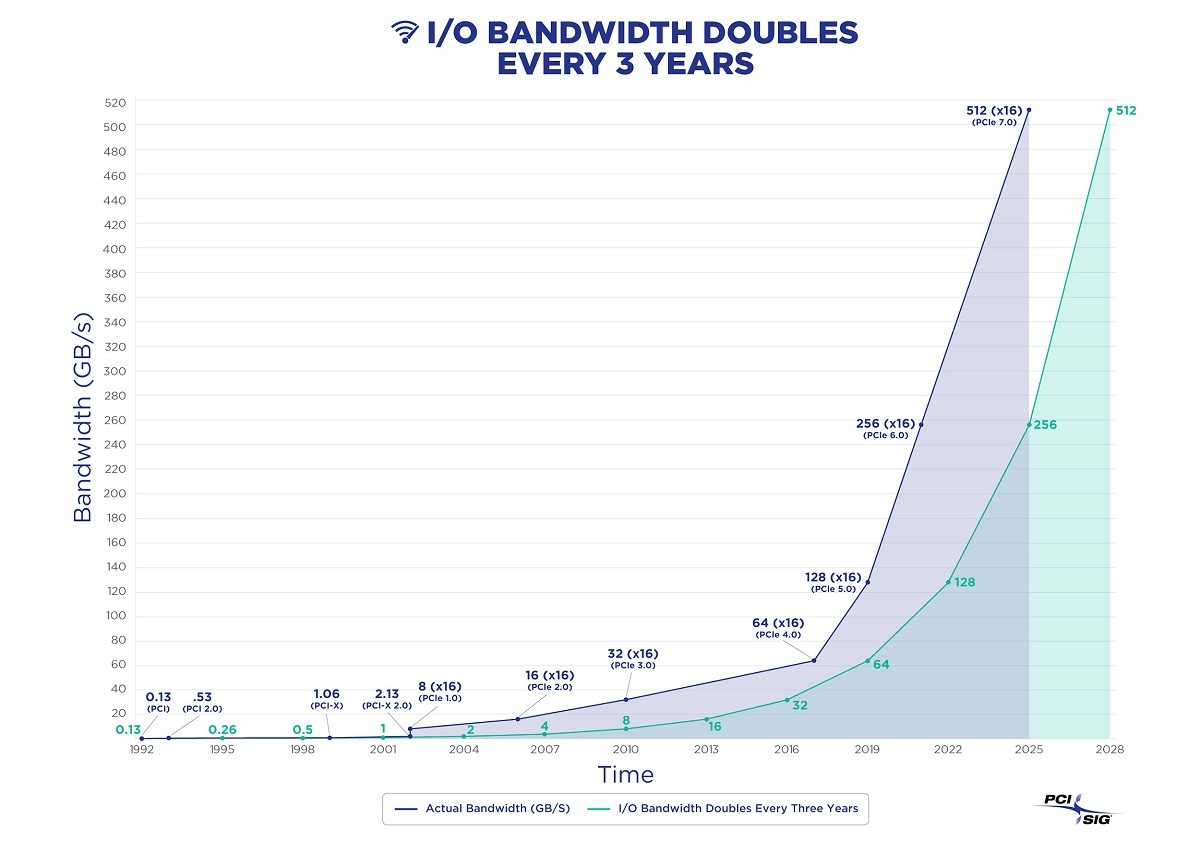

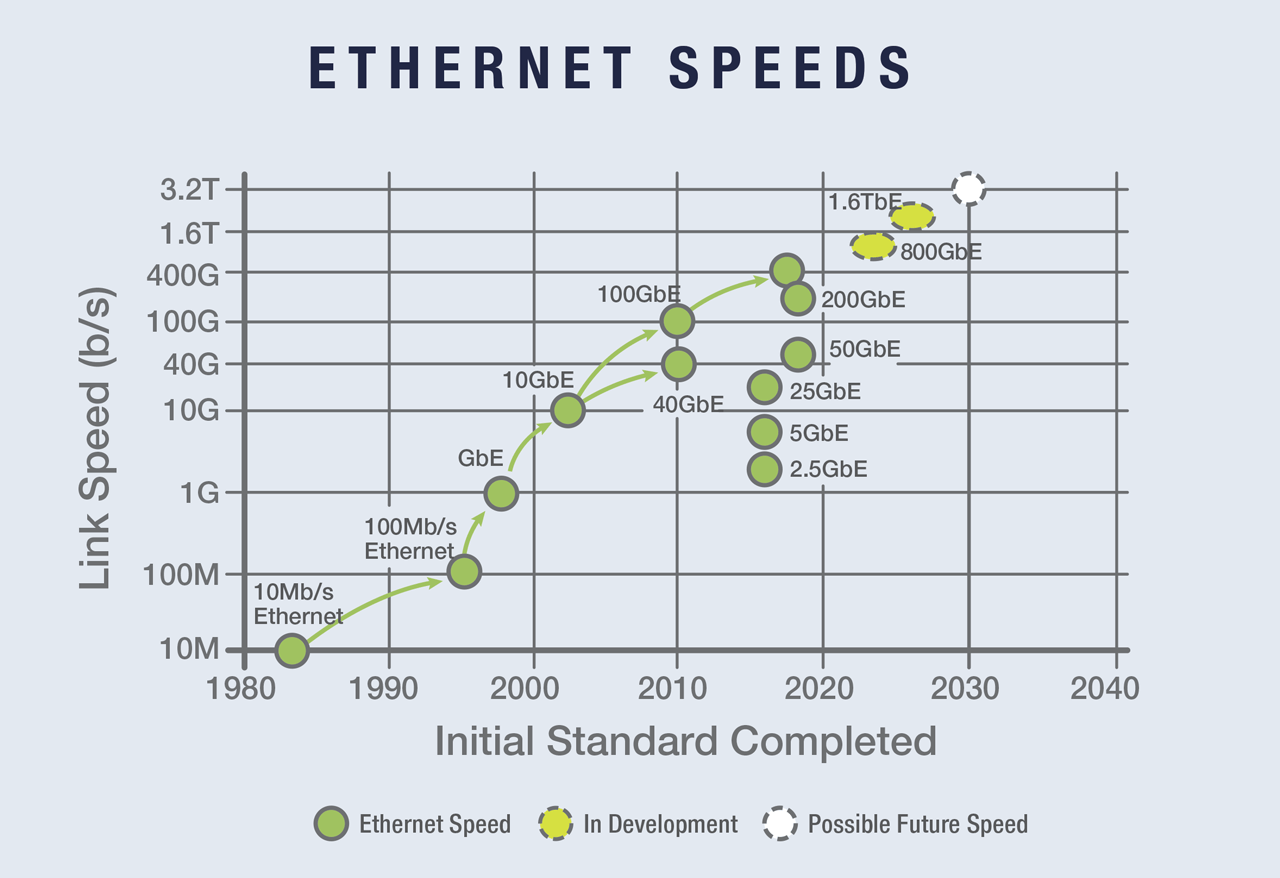

在当下,硬件重新变得有趣起来,AI 浪潮引发的显卡狂热便是例证。但有趣的硬件不仅仅是 GPU —— CPU 与 SSD 的变化却仍不为大多数开发者所知,有一整代软件开发者被云和炒作营销遮蔽了双眼,难以看清这一点。

许多软件与商业领域的问题的根都在硬件上:硬件的性能以指数模式增长,让分布式数据库几乎成为了伪需求。硬件的价格以指数模式衰减,让公有云从普惠基础设施变成了杀猪盘。让我们用算力与存储的价格变迁来说明这一点。

扒皮云对象存储:从降本到杀猪

对象存储是云计算的定义性服务,曾是云上降本的典范。然而随着硬件的发展,资源云(Cloudflare / IDC 2.0)与开源平替(MinIO)的出现,曾经“物美价廉”的对象存储服务失去了性价比,和EBS一样成为了杀猪盘。

硬件资源的价格随着摩尔定律以指数规律下降,但节省的成本并没有穿透云厂商这个中间层,传导到终端用户的服务价格上。 逆水行舟,不进则退,降价速度跟不上摩尔定律就是其实就是变相涨价。以S3为例,在过去十几年里,云厂商S3虽然名义上降价6倍,但硬件资源却便宜了 26 倍,S3 的价格实际上是涨了 4 倍。

云盘是不是杀猪盘?

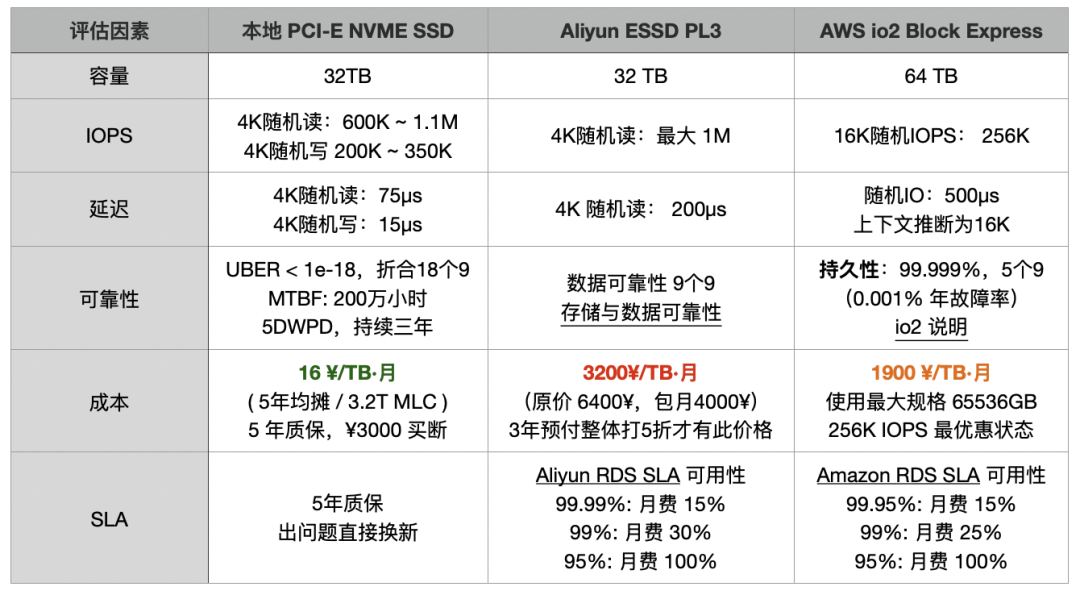

EC2 / S3 / EBS 是所有云服务的定价之锚。如果说 EC2/S3 定价还勉强能算合理,那么 EBS 的定价乃是故意杀猪。公有云厂商最好的块存储服务与自建可用的 PCI-E NVMe SSD 在性能规格上基本相同。然而相比直接采购硬件,AWS EBS 的成本高达 120 倍,而阿里云的 ESSD 则可高达 200 倍。

公有云的弹性就是针对其商业模式设计的:启动成本极低,维持成本极高。低启动成本吸引用户上云,而且良好的弹性可以随时适配业务增长,可是业务稳定后形成供应商锁定,尾大不掉,极高的维持成本就会让用户痛不欲生了。这种模式有一个俗称 —— 杀猪盘。

云数据库是不是智商税

近日,Basecamp & HEY 联合创始人 DHH 的一篇文章引起热议,主要内容可以概括为一句话:“我们每年在云数据库(RDS/ES)上花50万美元,你知道50万美元可以买多少牛逼的服务器吗?我们要下云,拜拜了您呐!“

在专业甲方用户眼中,云数据库只能算 60 分及格线上的大锅饭标品,却敢于卖出十几倍的天价资源溢价。如果用云数据库1年的钱,就够买几台甚至十几台性能更好的服务器自建更好的本地 RDS,那么使用云数据库的意义到底在哪里?在有完整开源平替的情况下,这算不算交了智商税?

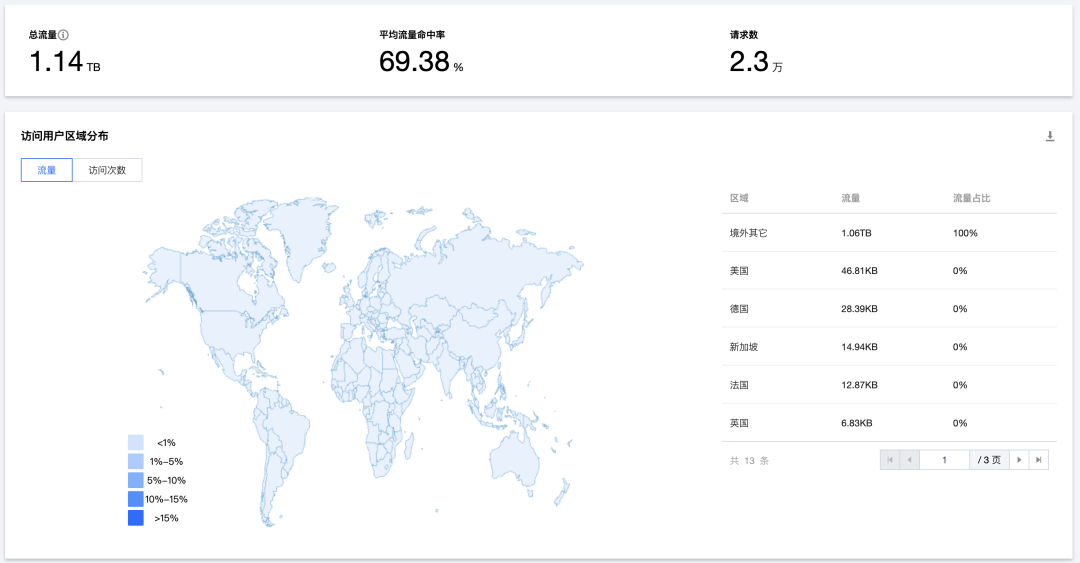

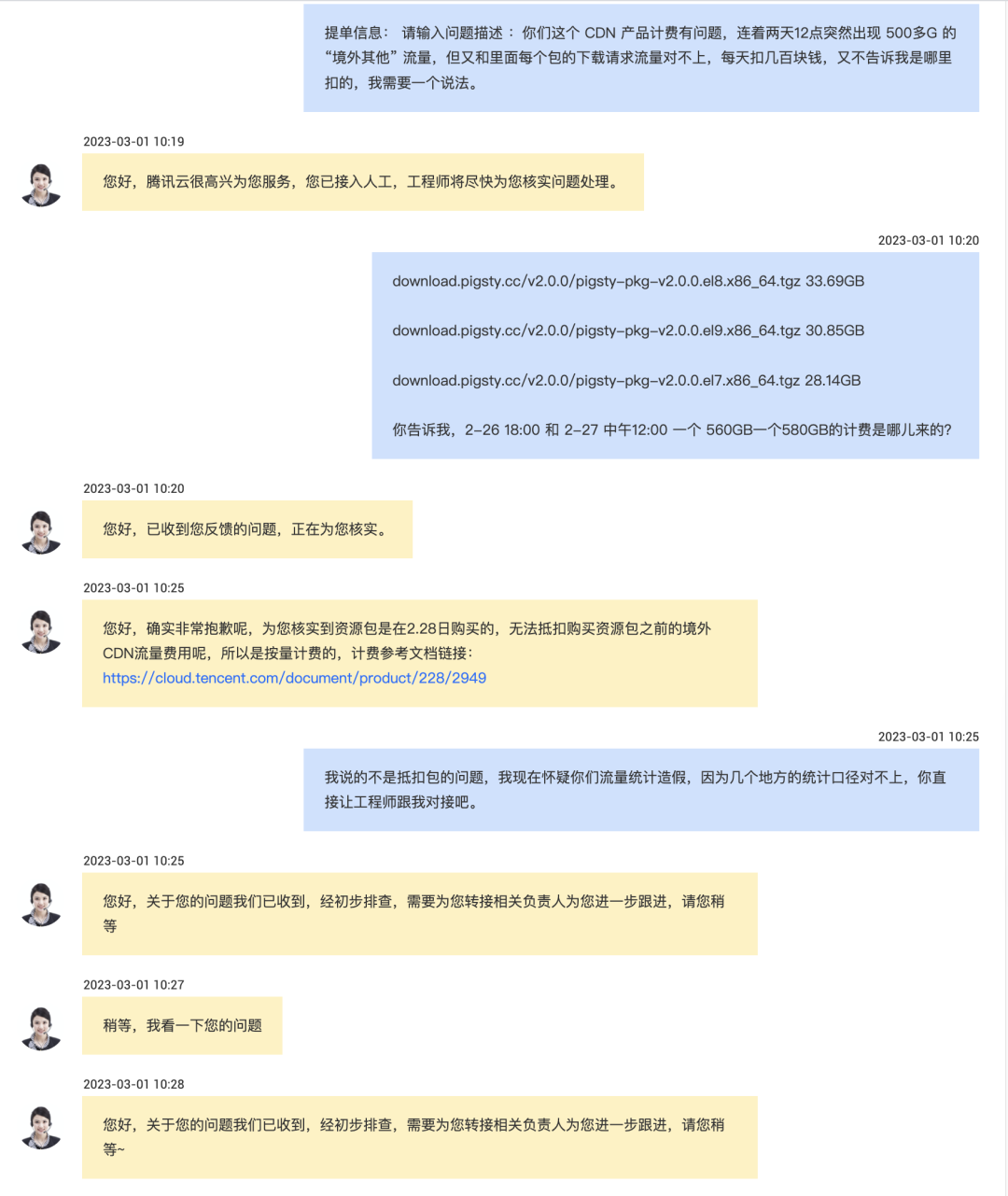

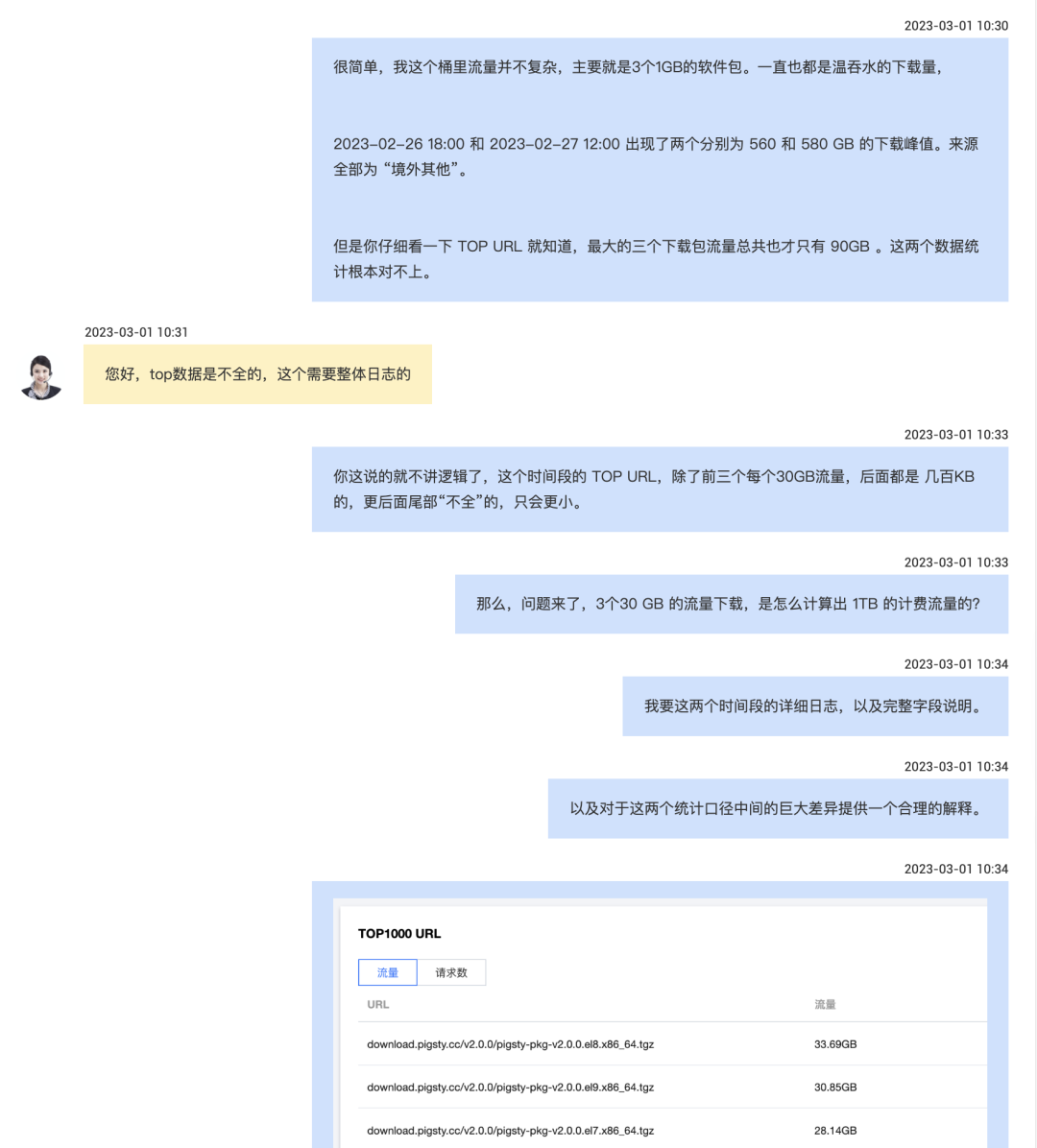

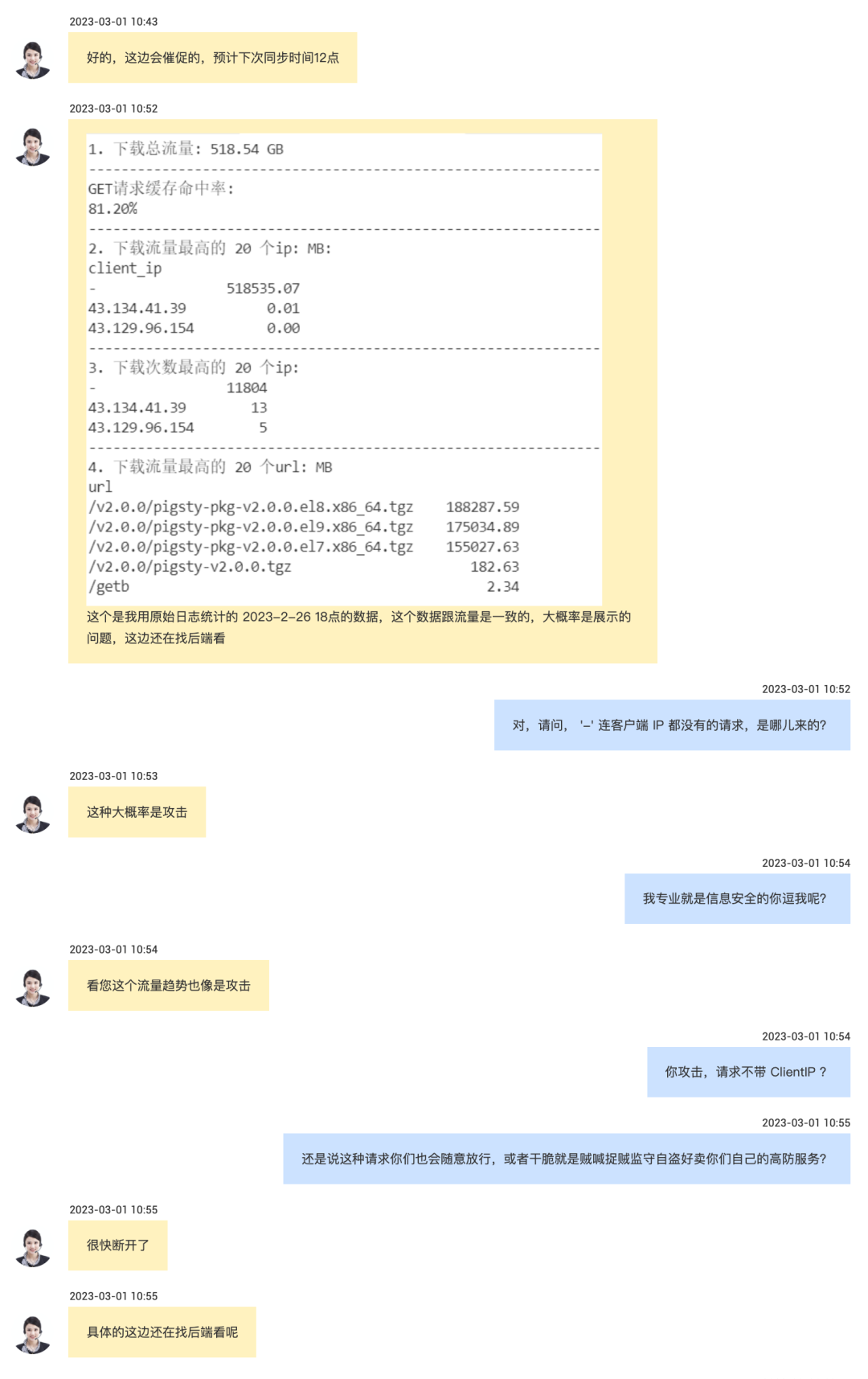

垃圾腾讯云CDN:从入门到放弃

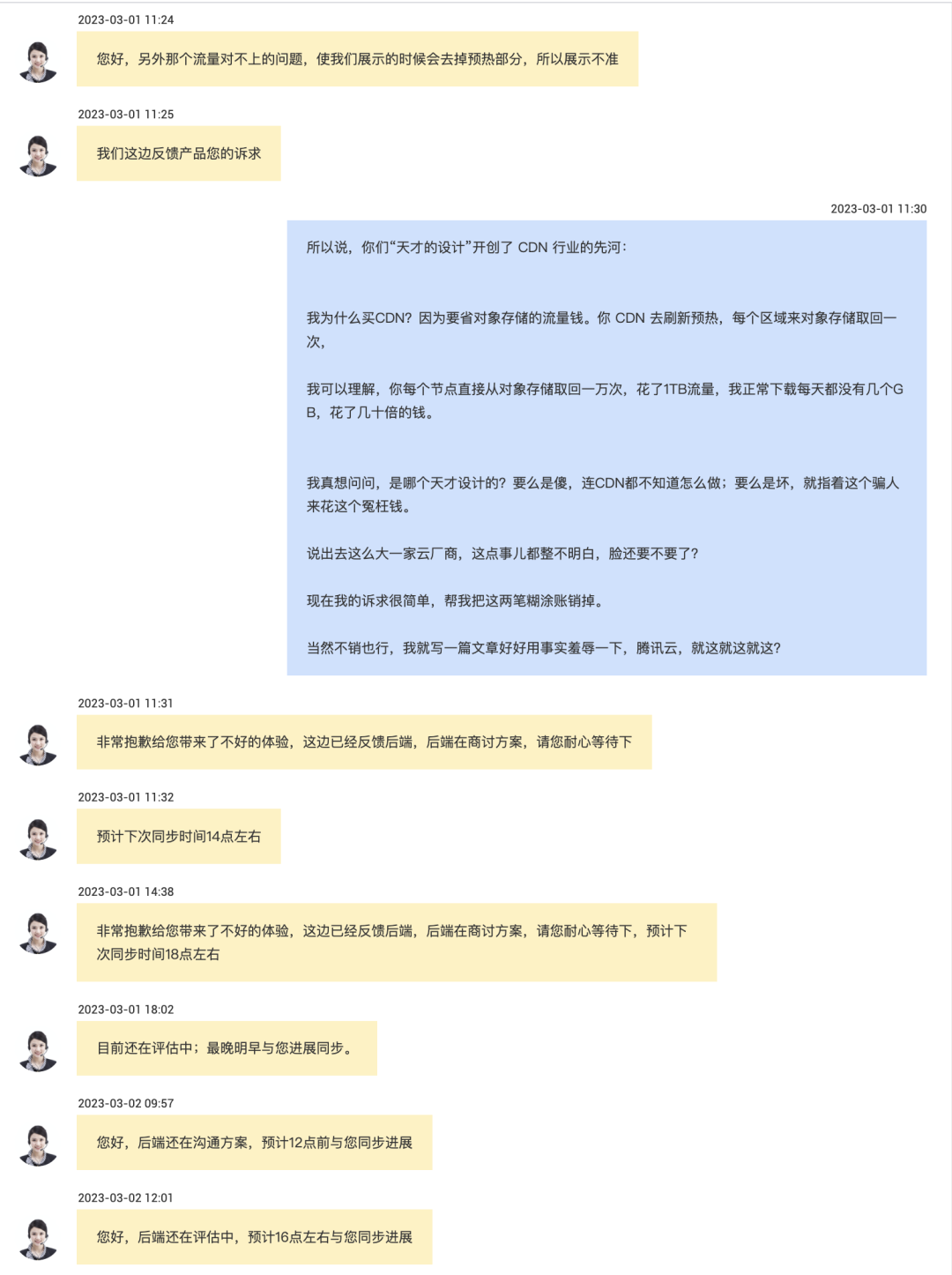

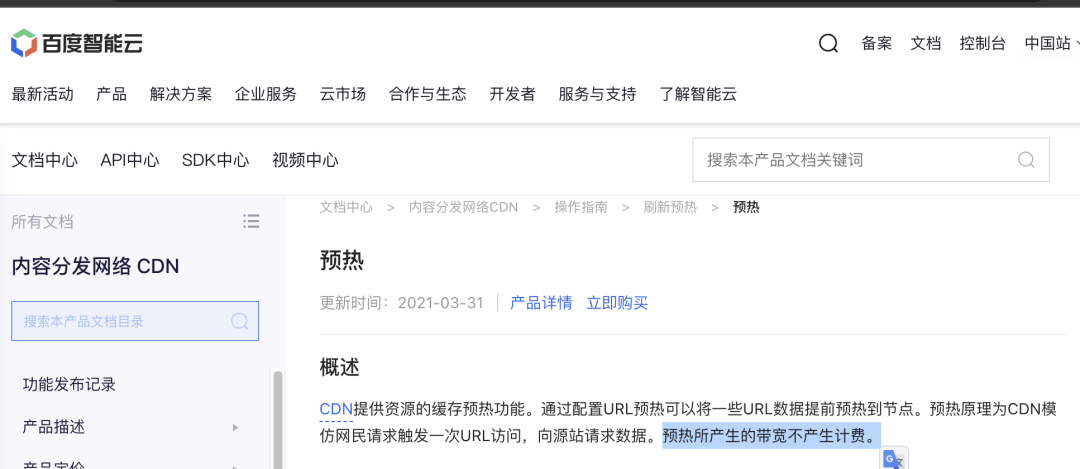

本来我相信至少在 IaaS 的存储,计算,网络三大件上,公有云厂商还是可以有不小优势的。只不过在腾讯云 CDN 上的亲身体验让我的想法动摇了。

云商业模式篇

FinOps终点是下云

FinOps关注点跑偏,公有云是个杀猪盘。自建能力决定议价权,数据库是自建关键。

搞 FinOps 的关注减少浪费资源的数量,却故意无视了房间里的大象 —— 云资源单价。云算力的成本是自建的五倍,块存储的成本则可达百倍以上,堪称终极成本刺客。对于有一定规模企业来说,IDC自建的总体成本在云服务列表价1折上下。下云是原教旨 FinOps 的终点,也是真正 FinOps 的起点。

拥有自建能力的用户即使不下云也能谈出极低的折扣,没有自建能力的公司只能向公有云厂商缴纳高昂的 “无专家税” 。无状态应用与数仓搬迁相对容易,真正的难点是在不影响质量安全的前提下,完成数据库的自建。

云计算为啥还没挖沙子赚钱?

公有云毛利不如挖沙子,杀猪盘为何成为赔钱货?卖资源模式走向价格战,开源替代打破垄断幻梦。服务竞争力逐渐被抹平,云计算行业将走向何方?

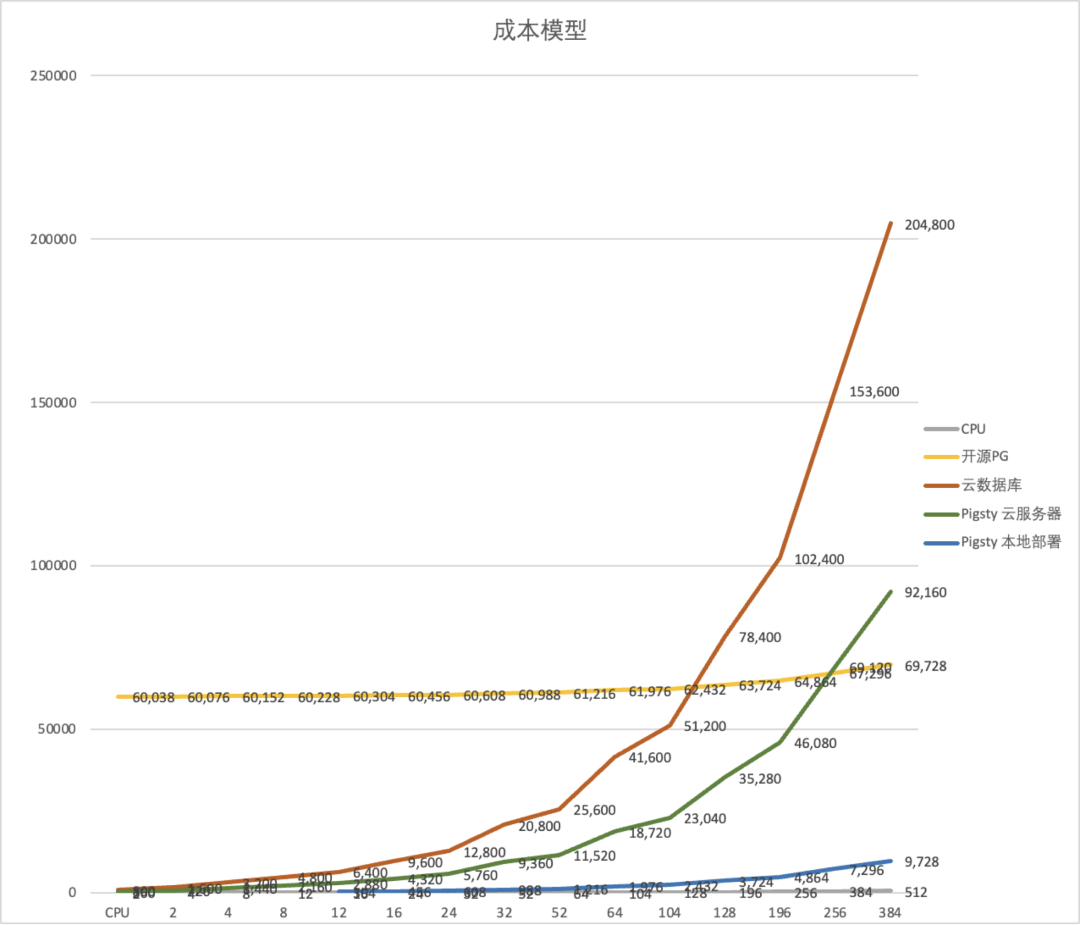

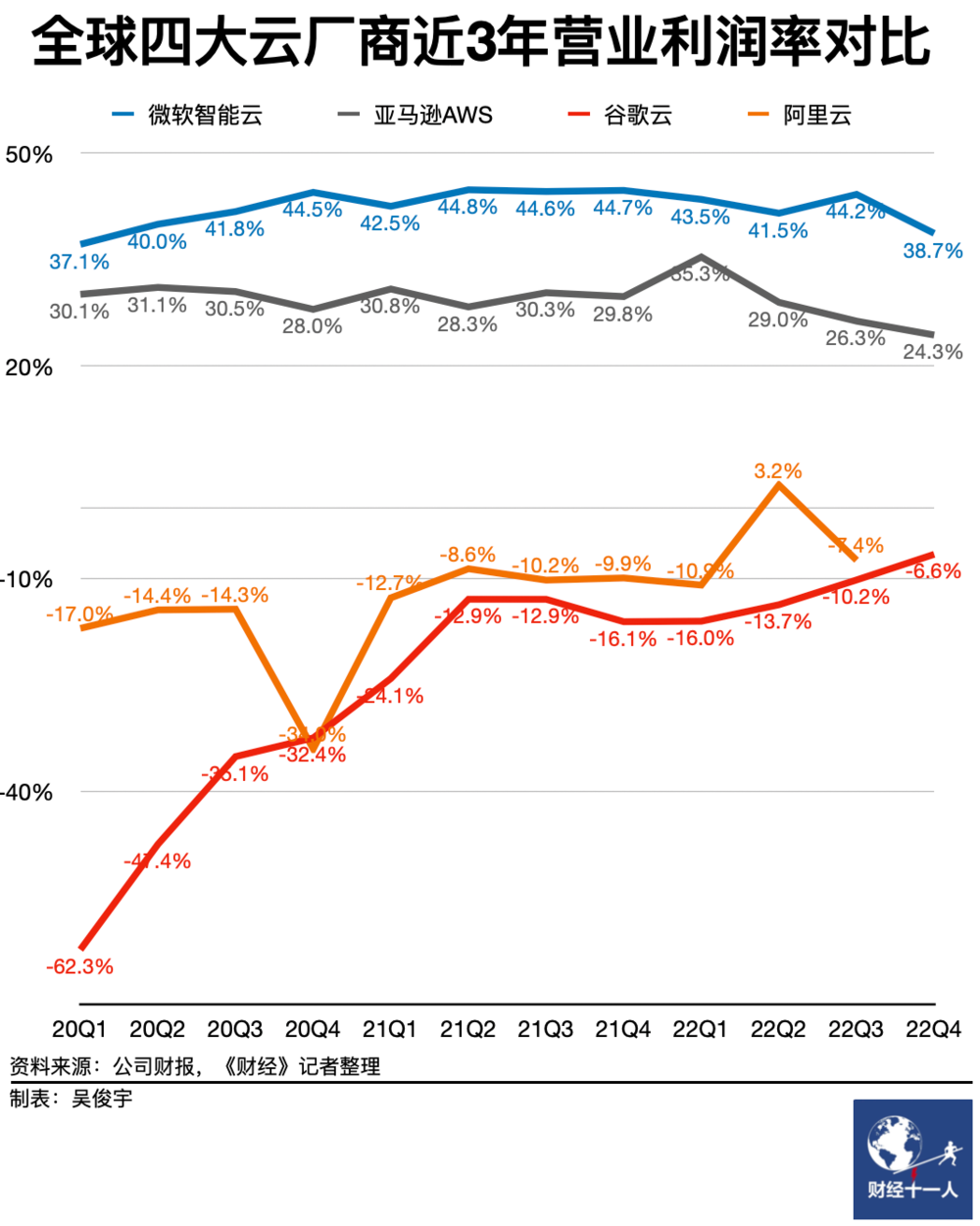

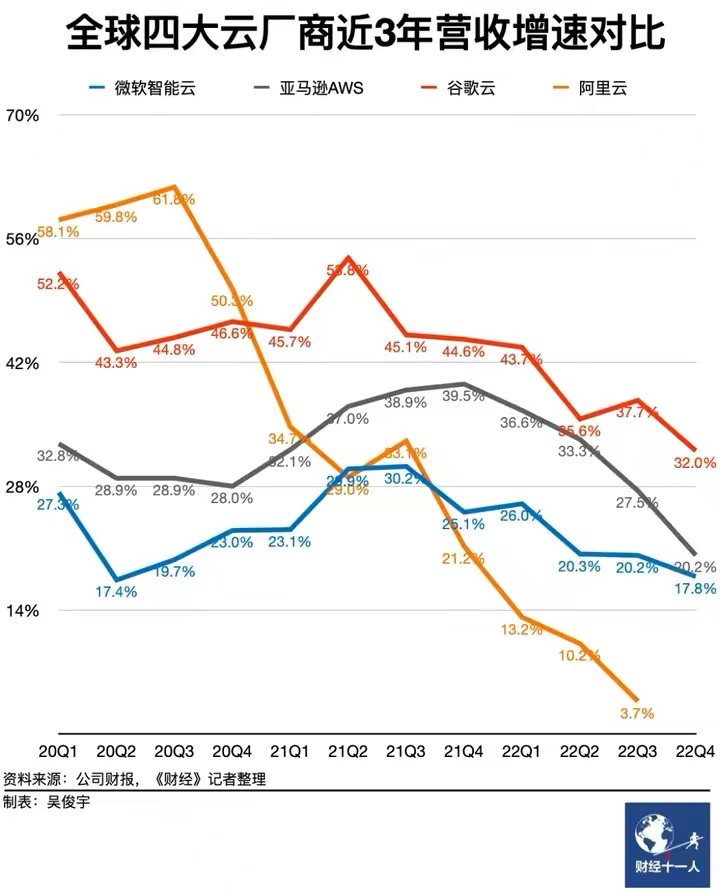

业界标杆的 AWS 与 Azure 毛利就可以轻松到 60% 与 70% 。反观国内云计算行业,毛利普遍在个位数到 15% 徘徊,至于像金山云这样的云厂商,毛利率直接一路干到 2.1%,还不如打工挖沙子的毛利高。这不禁让人好奇,这些本土云厂商是怎么把一门百分之三四十纯利的生意能做到这种地步的?

云SLA是不是安慰剂?

在云计算的世界里,服务等级协议(SLA)曾被视为云厂商对其服务质量的承诺。然而,当我们深入研究这些由一堆9组成的 SLA 时,会发现它们并不能像期望的那样“兜底”:与其说是 SLA 是对用户的补偿,不如说 SLA 是对云厂商服务质量没达标时的“惩罚”。

你以为给自己的服务上了保险可以高枕无忧,但 SLA 对用户来说不是兜底损失的保险单。在最坏的情况下,它是吃不了兜着走的哑巴亏。在最好的情况下,它才是提供情绪价值的安慰剂。

杀猪盘真的降价了吗?

存算资源降价跟不上摩尔定律发展其实就是变相涨价。但能看到有云厂商率先打起了降价第一枪,还是让人欣慰的:云厂商向 PLG 的康庄大道迈出了第一步:用技术优化产品,靠提高效率去降本,而不是靠销售吹逼打折杀猪。

范式转移:从云到本地优先

软件吞噬世界,开源吞噬软件。云吞噬开源,谁来吃云?与云软件相对应的本地优先软件开始如雨后春笋一般出现。而我们,就在亲历见证这次范式转移。

最终会有这么一天,公有云厂商与自由软件世界达成和解,心平气和地接受基础设施供应商的角色定位,为大家提供水与电一般的存算资源。而云软件终将会回归正常毛利,希望那一天人们能记得人们能记得,这不是因为云厂商大发慈悲,而是因为有人带来过开源平替。

下云奥德赛篇

下云奥德赛:是时候放弃云计算了吗?

下云先锋:David Heinemeier Hansson,网名 DHH,37 Signal 联创与CTO,Ruby on Rails 作者,下云倡导者、实践者、领跑者。反击科技巨头垄断的先锋。

DHH 下云已省下了近百万美元云支出,未来的五年还能省下上千万美元。我们跟进了下云先锋的完整进展,挑选了十四篇博文,译为中文,合辑为《下云奥德赛》,记录了他们从云上搬下来的波澜壮阔的完整旅程。

- 10-27 推特下云省掉 60% 云开销

- 10-06 托管云服务的代价

- 09-15 下云后已经省了百万美金

- 06-23 我们已经下云了!

- 05-03 从云遣返到主权云!

- 05-02 下云还有性能回报?

- 04-06 下云所需的硬件已就位!

- 03-23 裁员前不先考虑下云吗?

- 03-11 失控的不仅仅是云成本!

- 02-22 指导下云的五条价值观

- 02-21 下云将给咱省下五千万!

- 01-26 折腾硬件的乐趣重现

- 01-10 “企业级“替代品还要离谱

- 2022-10-19 我们为什么要下云?

半年下云省千万:DHH下云FAQ答疑

DHH 与 X马斯克的下云举措在全球引发了激烈的讨论,有许多的支持者,也有许多的质疑者。面对众人提出的问题与质疑,DHH 给出了自己充满洞见的回复。

- 在硬件上省下的成本,会不会被更大规模的团队薪资抵消掉?

- 为什么你选择下云,而不是优化云账单呢?

- 那么安全性呢?你不担心被黑客攻击吗?

- 不需要一支世界级超级工程师团队来干这些吗?

- 这是否意味着你们在建造自己的数据中心?

- 谁来做码放服务器、拔插网络电缆这些活呢?

- 那么可靠性呢?难道云不是为你做到了这些吗?

- 那国际化业务的性能呢?云不是更快吗?

- 你有考虑到以后更换服务器的成本吗?

- 那么隐私法规和GDPR呢?

- 那么需求激增时怎么办?自动伸缩呢?

- 你在服务合同和授权许可费上花费了多少?

- 如果云这么贵,你们为什么会选择它?

下云高可用的秘诀:拒绝智力自慰

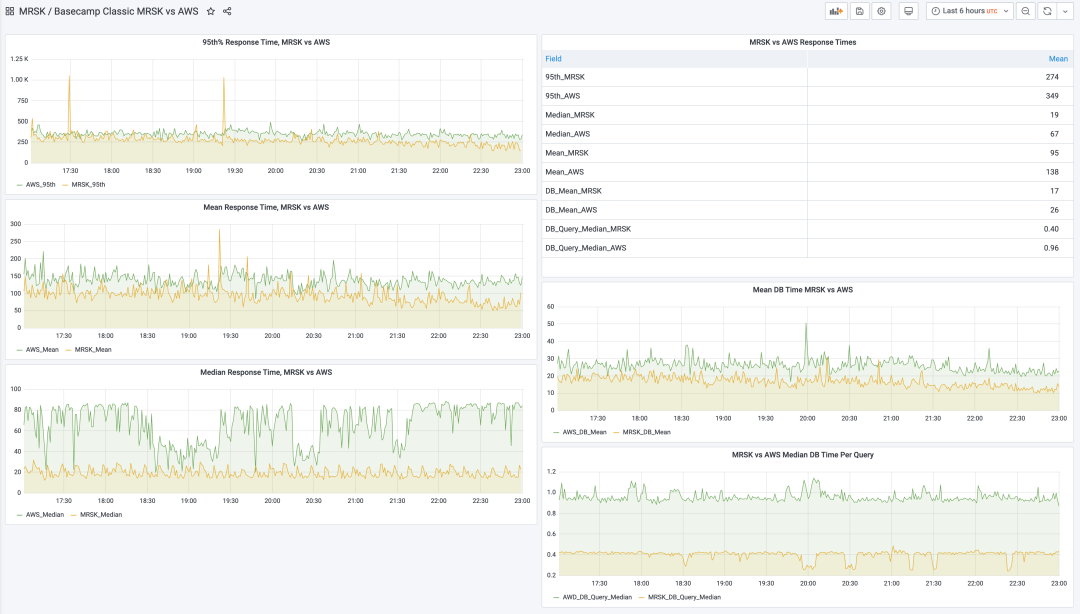

下云之后,DHH 的几个业务都保持了 99.99% 以上的可用性。Basecamp 2 更是连续两年实现 100% 可用性的魔法。相当一部分归功于他们选择了简单枯燥、朴实无华的,复杂度很低,成熟稳定的技术栈 —— 他们并不需要 Kubernetes 大师或花哨的数据库与存储。

程序员极易被复杂度所吸引,就像飞蛾扑火一样。系统架构图越复杂,智力自慰的快感就越大。坚决抵制这种行为,是在云下自建保持稳定可靠的关键所在。

云故障复盘篇

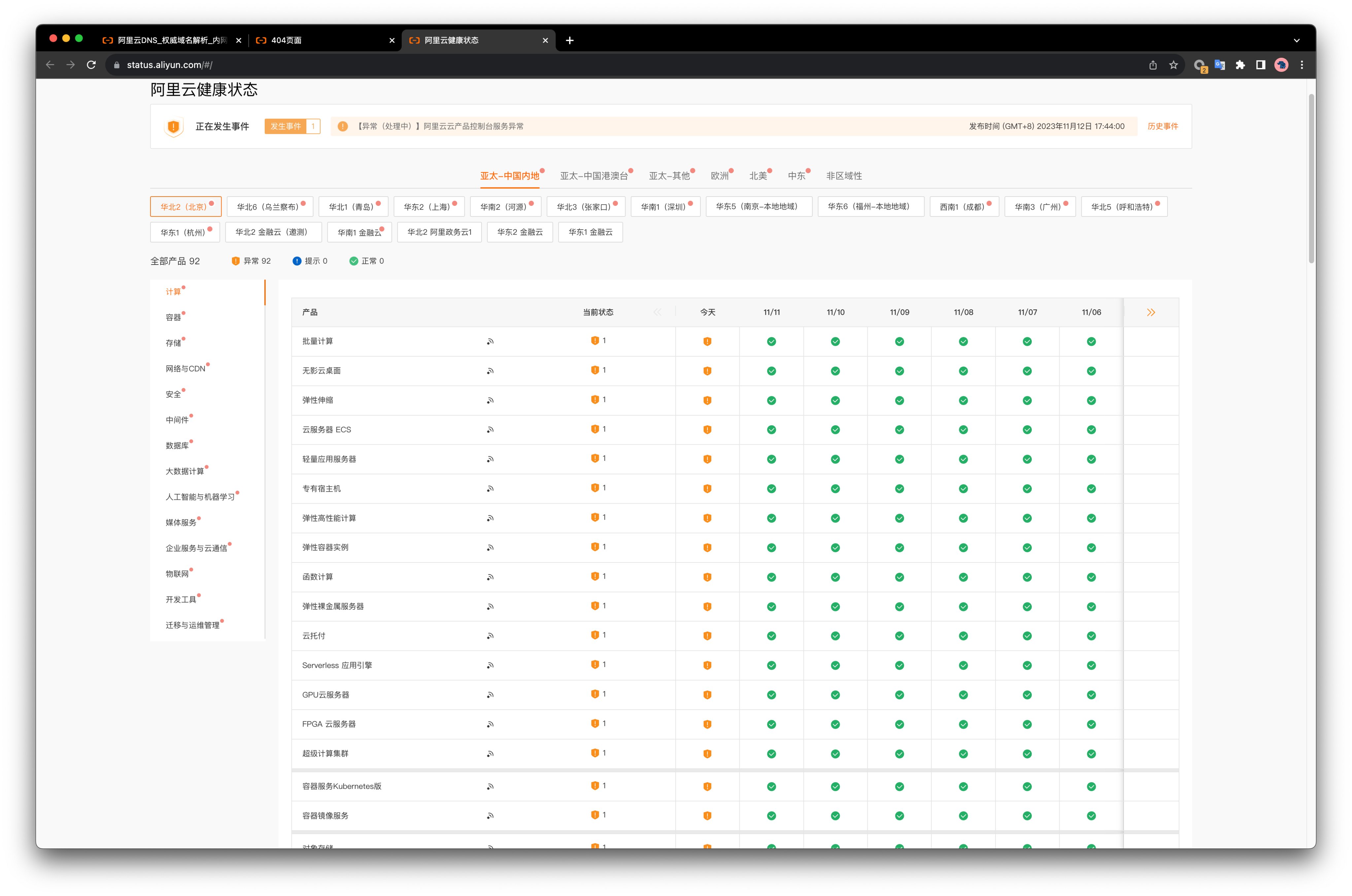

【阿里】云计算史诗级大翻车来了

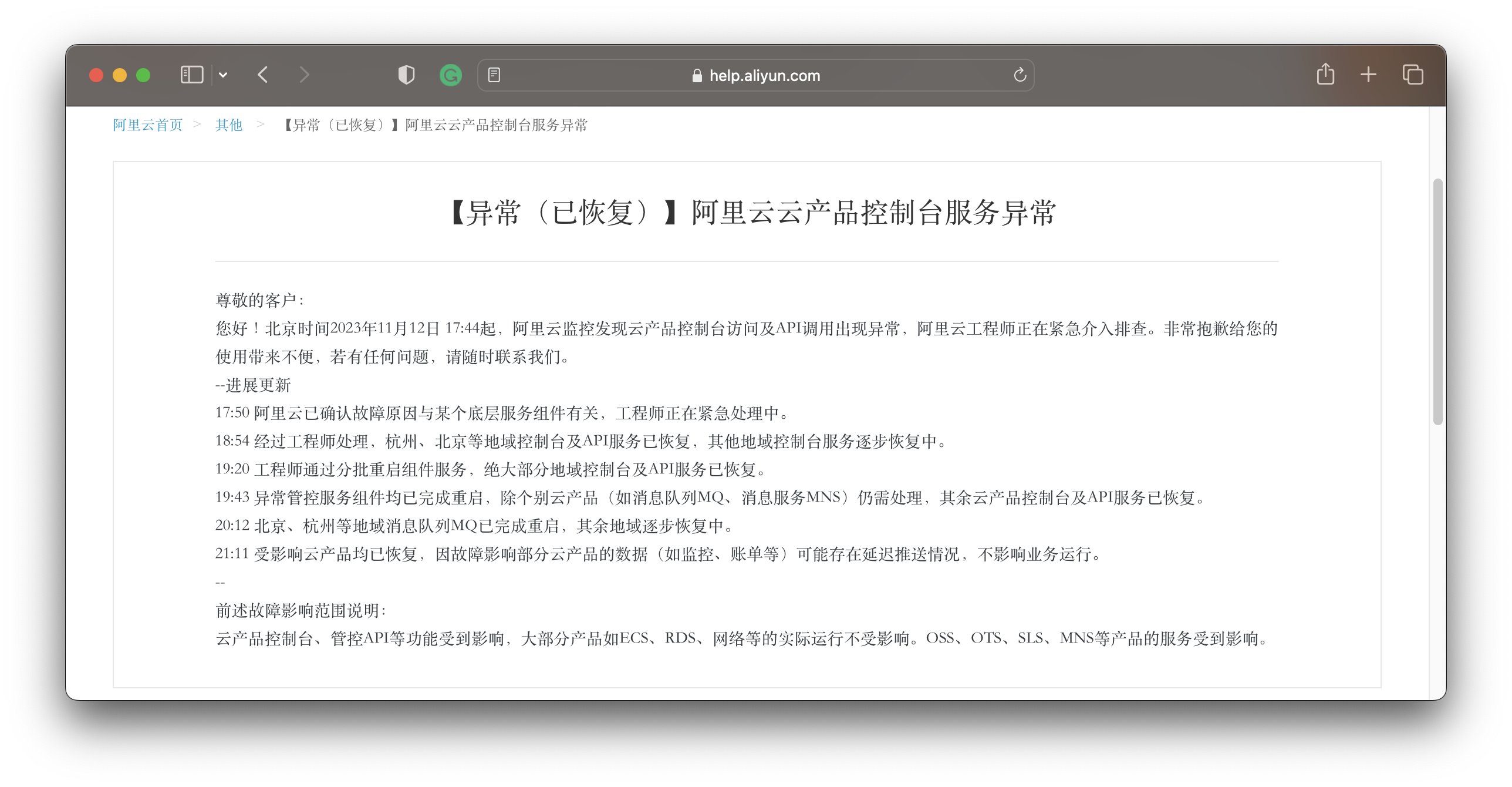

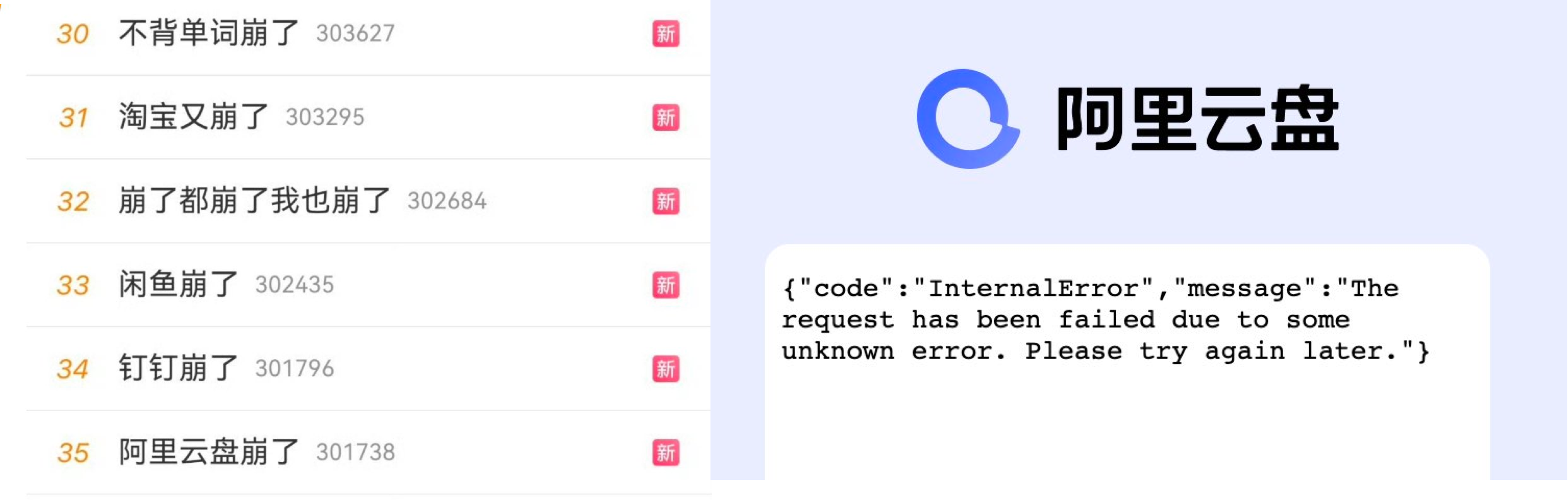

2023年11月12日,也就是双十一后的第一天,阿里云发生了一场史诗级大翻车。根据阿里云官方的服务状态页,全球范围内所有可用区 x 所有服务全部都出现异常,时间从 17:44 到 21: 11,共计3小时27分钟。规模与范围创下全球云计算行业的历史纪录。

阿里云周爆:云数据库管控又挂了

阿里云 11. 12 大故障两周 过去,还没有看到官方的详细复盘报告,结果又来了一场大故障:中美7个区域的数据库管控宕了近两个小时。这一次和 11.12 故障属于让官方发全站公告的显著故障。

11月份还有两次较小规模的局部故障。这种故障频率即使是对于草台班子来说也有些过分了。某种意义上说,阿里云这种周爆可以凭一己之力,毁掉用户对公有云云厂商的托管服务的信心

我们能从阿里云史诗级故障中学到什么

时隔一年阿里云又出大故障,并创造了云计算行业闻所未闻的新记录 —— 全球所有区域/所有服务同时异常。 阿里云不愿意发布故障复盘报告,那我就来替他复盘 —— 我们应当如何看待这一史诗级故障案例,以及,能从中学习到什么经验与教训?

从降本增笑到真的降本增效

双十一刚过,阿里云就出了打破行业纪录的全球史诗级大翻车,然后开始了11月连环炸模式 —— 从月爆到周爆再到日爆。话音未落,滴滴又出现了一场超过12小时,资损几个亿的大故障。

年底正是冲绩效的时间,互联网大厂大事故却是一波接一波。硬生生把降本增效搞成了“降本增笑” —— 这已经不仅仅是梗了,而是来自官方的自嘲。大厂故障频发,处理表现远远有失水准,乱象的背后到底是什么问题?

RDS翻车篇

更好的开源RDS替代:Pigsty

我希望,未来的世界人人都有自由使用优秀服务的事实权利,而不是只能被圈养在几个公有云厂商提供的猪圈(Pigsty)里吃粑粑。这就是我要做 Pigsty 的原因 —— 一个更好的,开源免费的 PostgreSQL RDS替代。让用户能够在任何地方(包括云服务器)上,一键拉起有比云RDS更好的数据库服务。

Pigsty 是对 PostgreSQL 的彻底补完,更是对云数据库的辛辣嘲讽。它本意是“猪圈”,但更是 Postgres In Great STYle 的缩写,即“全盛状态下的 PostgreSQL”。它是一个完全基于开源软件的,可以运行在任何地方的,浓缩了 PostgreSQL 使用管理最佳实践的 Me-Better RDS 开源替代。

驳《再论为什么你不应该招DBA》

云计算鼓吹者马工大放厥词说:云数据库可以取代 DBA。之前在《你怎么还在招聘DBA》,以及回应文《云数据库是不是智商税》中,我们便已交锋过。

当别人把屎盆子扣在这个行业所有人头上时,还是需要人来站出来说几句的。因此撰文以驳斥马工的谬论:《再论为什么你不应该招DBA》。

云RDS:从删库到跑路

用户都已经花钱买了‘开箱即用’的云数据库了,为什么连 PITR 恢复这么基本的兜底都没有呢?因为云厂商心机算计,把WAL日志归档/PITR这些PG的基础功能阉割掉,放进所谓的“高可用”版本高价出售。

云数据库也是数据库,云数据库并不是啥都不用管的运维外包魔法,不当配置使用,一样会有数据丢失的风险。没有开启WAL归档就无法使用PITR,甚至无法登陆服务器获取现存WAL来恢复误删的数据。当故障发生时,用户甚至被断绝了自救的可能性,只能原地等死。

数据库应该放入K8S里吗?

Kubernetes 作为一个先进的容器编排工具,在无状态应用管理上非常趁手;但其在处理有状态服务 —— 特别是 PostgreSQL 和 MySQL 这样的数据库时,有着本质上的局限性。

将数据库放入 K8S 中会导致 “双输” —— K8S 失去了无状态的简单性,不能像纯无状态使用方式那样灵活搬迁调度销毁重建;而数据库也牺牲了一系列重要的属性:可靠性,安全性,性能,以及复杂度成本,却只能换来有限的“弹性”与资源利用率 —— 但虚拟机也可以做到这些!对于公有云厂商之外的用户来说,几乎都是弊远大于利。

把数据库放入Docker是一个好主意吗?

对于无状态的应用服务而言,容器是一个相当完美的开发运维解决方案。然而对于带持久状态的服务 —— 数据库来说,事情就没有那么简单了。我认为就目前而言,将生产环境数据库放入Docker / K8S 中仍然是一个馊主意。

对于云厂商来说,使用容器的利益是自己的,弊端是用户的,他们有动机这样去做,但这样的利弊权衡对普通用户而言并不成立。

云厂商画像篇

这个系列多数来自云计算鼓吹者马工,为本土云厂商与互联网大厂绘制了精准幽默,幽默滑稽的肖像。

卡在政企客户门口的阿里云

互联网故障背后的草台班子们

云厂商眼中的客户:又穷又闲又缺爱

互联网技术大师速成班

门内的国企如何看门外的云厂商

阿里云专辑

云计算泥石流系列原文链接

- 云计算泥石流

- 拒绝用复杂度自慰,下云也保稳定运行

- 扒皮对象存储:从降本到杀猪

- 半年下云省千万:DHH下云FAQ答疑

- 从降本增笑到真的降本增效

- 阿里云周爆:云数据库管控又挂了

- 重新拿回计算机硬件的红利

- 我们能从阿里云史诗级故障中学到什么

- 【阿里】云计算史诗级大翻车来了

- 是时候放弃云计算了吗?

- 云计算泥石流合集 —— 用数据解构公有云

- 下云奥德赛

- FinOps终点是下云

- 云计算为啥还没挖沙子赚钱?

- 云SLA是不是安慰剂?

- 技术反思录:正本清源

- 杀猪盘真的降价了吗?

- 公有云是不是杀猪盘?

- 云数据库是不是智商税

- 垃圾腾讯云CDN:从入门到放弃

- 驳《再论为什么你不应该招DBA》

- 范式转移:从云到本地优先

- 云RDS:从删库到跑路

- 是时候和GPL说再见了 【译】

- 互联网技术大师速成班(转载瑞典马工)

- 门内的国企如何看门外的云厂商 (转载思维南人)

- 卡在政企客户门口的阿里云(转载瑞典马工)

- 互联网故障背后的草台班子们(转载瑞典马工)

- 云厂商眼中的客户:又穷又闲又缺爱(转载瑞典马工)

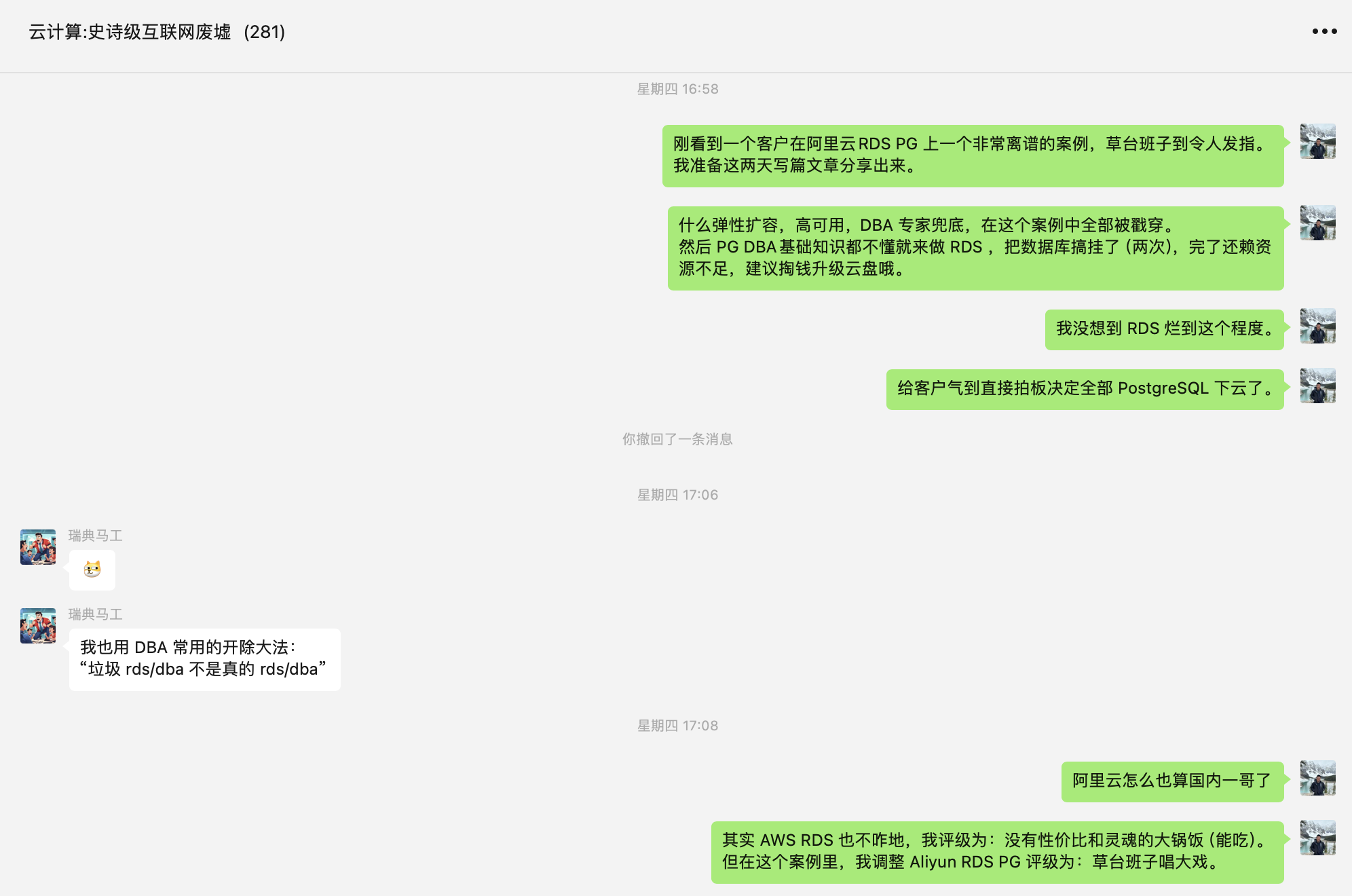

草台班子唱大戏,阿里云PG翻车记

在《云数据库是不是智商税》中,我对云数据库 RDS 的评价是:“用五星酒店价格卖给用户天价预制菜”—— 但正规的预制菜大锅饭也是能吃的 也一般吃不死人,不过最近一次发生在阿里云上的的故障让我改变了看法。

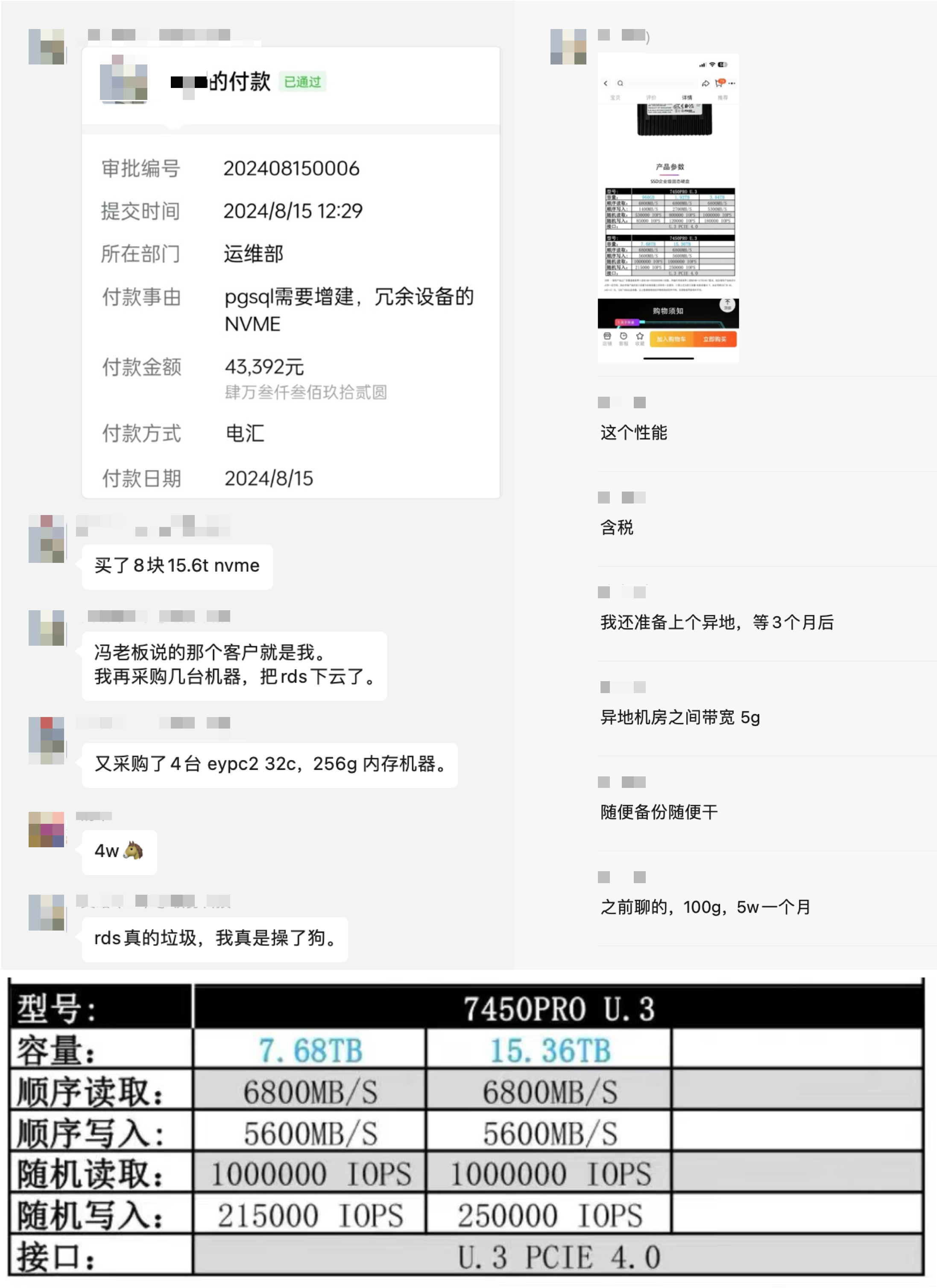

我有一位客户L,这两天跟我吐槽了一个在云数据库上遇到的离谱连环故障:一套高可用 PG RDS 集群,因为扩容个内存,主库从库都挂了,给他们折腾到凌晨。期间建议昏招迭出,给出的复盘也相当敷衍。经过客户L同意后,我将这个案例分享出来,也供大家借鉴参考品评。

事故经过:匪夷所思

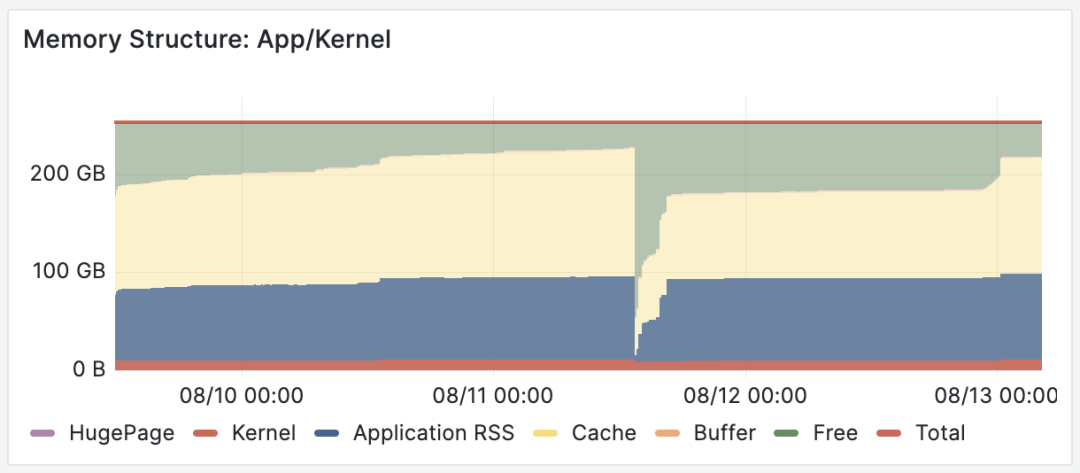

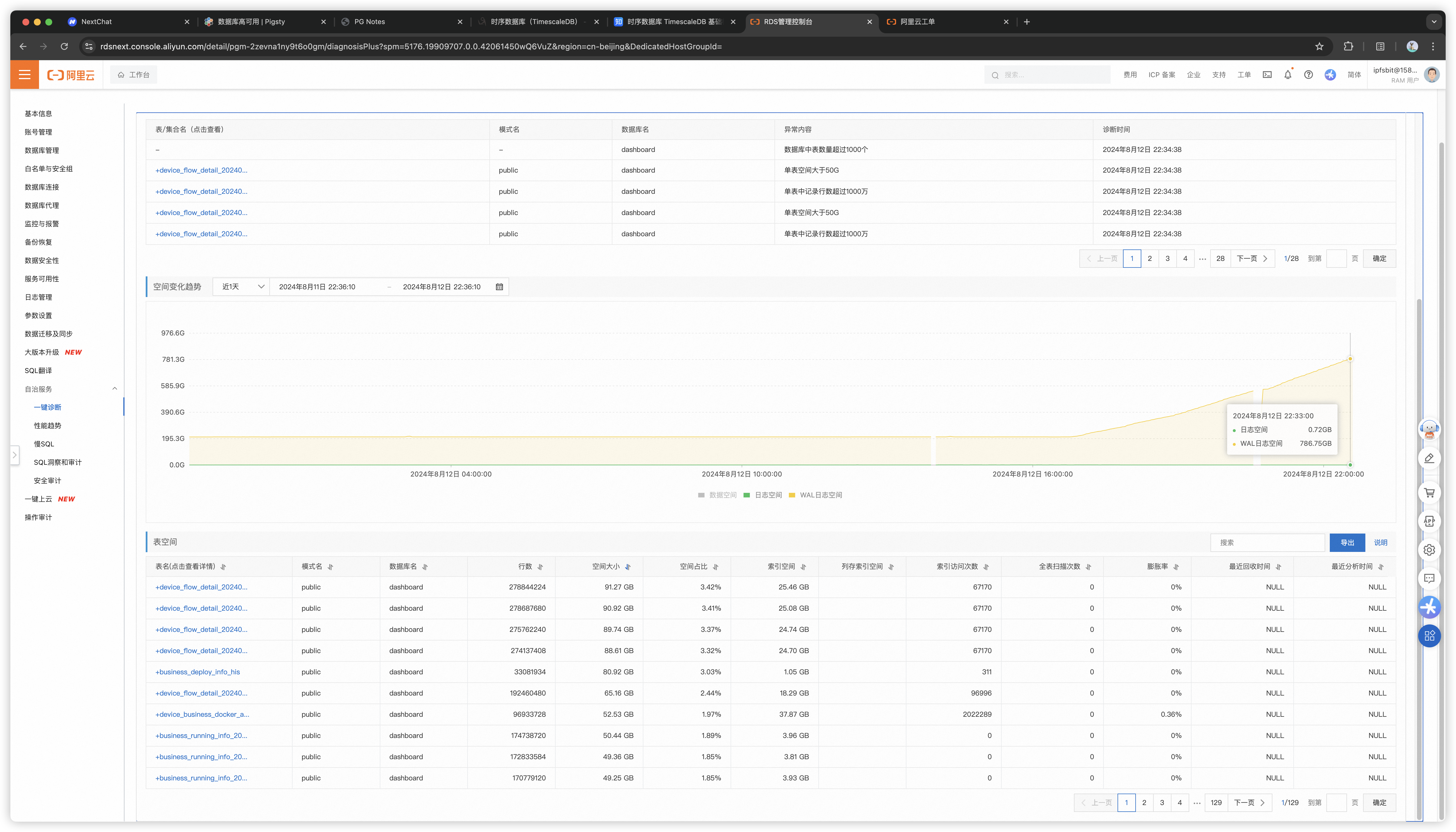

客户L的数据库场景比较扎实,大几TB的数据,TPS两万不到,写入吞吐8000行/每秒,读取吞吐7万行/s。用的是 ESSD PL1 ,16c 32g 的实例,一主一备高可用版本,独享型规格族,年消费六位数。

整个事故经过大致如下:客户L收到 内存告警,提工单,接待的售后工程师诊断:数据量太大,大量行扫描导致内存不足,建议扩容内存。客户同意了,然后工程师扩容内存,扩内存花了三个小时,期间主库和从库挂了,吭哧吭哧手工一顿修。

然后扩容完了之后又遇到 WAL日志堆积 的问题,堆了 800 GB 的WAL日志要把磁盘打满了,又折腾了两个小时到十一点多。售后说是 WAL 日志归档上传失败导致的堆积,失败是因为磁盘IO吞吐被占满,建议扩容 ESSD PL2。

吃过了内存扩容翻车的教训,这次扩容磁盘的建议客户没立即买单,而是找我咨询了下,我看了一下这个故障现场,感觉到匪夷所思:

- 你这负载也挺稳定的,没事扩容内存干啥?

- RDS 不是号称弹性秒级扩容么,怎么升个内存花三个小时?

- 花三个小时就算了,扩个容怎么还把主库从库都搞挂了呢?

- 从库挂了据说是参数没配对,那就算了,那主库是怎么挂了的?

- 主库挂了高可用切换生效了吗?怎么 WAL 又怎么开始堆积了?

- WAL 堆积是有地方卡住了,建议你升级云盘等级/IOPS有什么用?

事后也有一些来自乙方的解释,听到后我感觉更加匪夷所思了:

- 从库挂是因为参数配错拉不起来了

- 主库挂是因为 “为了避免数据损坏做了特殊处理”

- WAL堆积是因为卡BUG了,还是客户侧发现,推断并推动解决的

- 卡 BUG “据称” 是因为云盘吞吐打满了

我的朋友瑞典马工一贯主张,“用云数据库可以替代DBA”。 我认为在理论上有一定可行性 —— 由云厂商组建一个专家池,提供DBA时分共享服务。

但现状很可能是:云厂商没有合格的 DBA,不专业的工程师甚至会给你出馊主意,把跑得好好的数据库搞坏,最后赖到资源不足上,再建议你扩容升配好多赚一笔。

在听闻这个案例之后,马工也只能无奈强辩:“辣鸡RDS不是真RDS”。

内存扩容:无事生非

资源不足是一种常见的故障原因,但也正因如此,有时会被滥用作为推卸责任,甩锅,或者要资源,卖硬件的万金油理由。

内存告警 OOM 也许对其他数据库是一个问题,但对于 PostgreSQL 来说非常离谱。我从业这么多年来,见识过各种各样的故障:数据量大撑爆磁盘我见过,查询多打满CPU我见过,内存比特位反转腐坏数据我见过,但因为独占PG因为读查询多导致内存告警,我没见过。

PostgreSQL 使用双缓冲,OLTP实例在使用内存时,页面都放在一个尺寸固定的 SharedBuffer 中。作为一个 众所周知的最佳实践,PG SharedBuffer 配置通常为物理内存的 1/4 左右,剩下的内存由文件系统 Cache 使用。也就是说通常有六七成的内存是由操作系统来灵活调配的,不够用逐出一些 Cache 就好了。如果说因为读查询多导致内存告警,我个人认为是匪夷所思的。

所以,因为一个本来不一定是问题的问题(假告警?),RDS 售后工程师给出的建议是:内存不够啦,请扩容内存。客户相信了这个建议,选择将内存翻倍。

按道理,云数据库宣传自己的极致弹性,灵活伸缩,还是用 Docker 管理的。难道不应该是原地改一下 MemLimit 和 PG SharedBuffer 参数,重启一下就生效的吗?几秒还差不多。结果这次扩容却折腾了三个小时才完成,并引发了一系列次生故障。

内存不足,两台32G扩64G,按照《剖析阿里云服务器算力成本》我们精算过的定价模型,一项内存扩容操作每年带来的额外收入就能有万把块。如果能解决问题那还好说,但事实上这次内存扩容不但没有解决问题,还引发了更大的问题。

从库宕机:素养堪忧

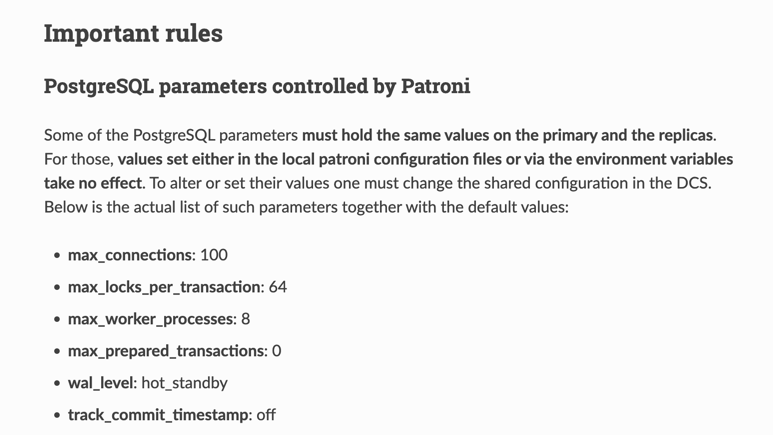

第一个因为内存扩容而发生的次生故障是这样的,备库炸了,为什么炸了,因为PG参数没配置正确:max_prepared_transaction,这个参数为什么会炸?因为在一套 PG 集群中,这个参数必须在主库和从库上保持一致,否则从库就会拒绝启动。

为什么这里出现了主从参数不一致的问题?我推测 是因为 RDS 的设计中,该参数的值是与实例内存成正比设置的,所以内存扩容翻倍后这个参数也跟着翻倍。主从不一致,然后从库重启的时候就拉不起来了。

不管怎样,因为这个参数翻车是一个非常离谱的错误,属于 PG DBA 101 问题,PG文档明确强调了这一点。但凡做过滚动升降配这种基操的 DBA,要么已经在读文档的时候了解过这个问题,要么就在这里翻过车再去看文档。

如果你用 pg_basebackup 手搓从库,你不会遇到这个问题,因为从库的参数默认和主库一致。如果你用成熟的高可用组件,也不会遇到这个问题:开源PG高可用组件 Patroni 就会强制控制这几个参数并在文档中显眼地告诉用户:这几个参数主从必须一致,我们来管理不要瞎改。

在一种情况下你会遇到这种问题:考虑不周的家酿高可用服务组件,或未经充分测试的自动化脚本,自以为是地替你“优化”了这个参数。

主库宕机:令人窒息

如果说从库挂了,影响一部分只读流量那也就算了。主库挂了,对于客户L这种全网实时数据上报的业务可就要血命了。关于主库宕机,工程师给出的说法是:在扩容过程中,因为事务繁忙,主从复制延迟一直追不上,“为了避免数据损坏做了特殊处理”。

这里的说法语焉不详,但按字面与上下文理解的意思应当为:扩容时需要做主从切换,但因为有不小的主从复制延迟,直接切换会丢掉一部分尚未复制到从库上的数据,所以RDS替你把主库流量水龙头关掉了,追平复制延迟后再进行主从切换。

老实说,我觉得这个操作让人窒息。主库 Fencing 确实是高可用中的核心问题,但一套正规的生产 PG 高可用集群在处理这个问题时,标准SOP是首先将集群临时切换到同步提交模式,然后执行 Switchover,自然一条数据也不会丢,切换也只会影响在这一刻瞬间执行的查询,亚秒级闪断。

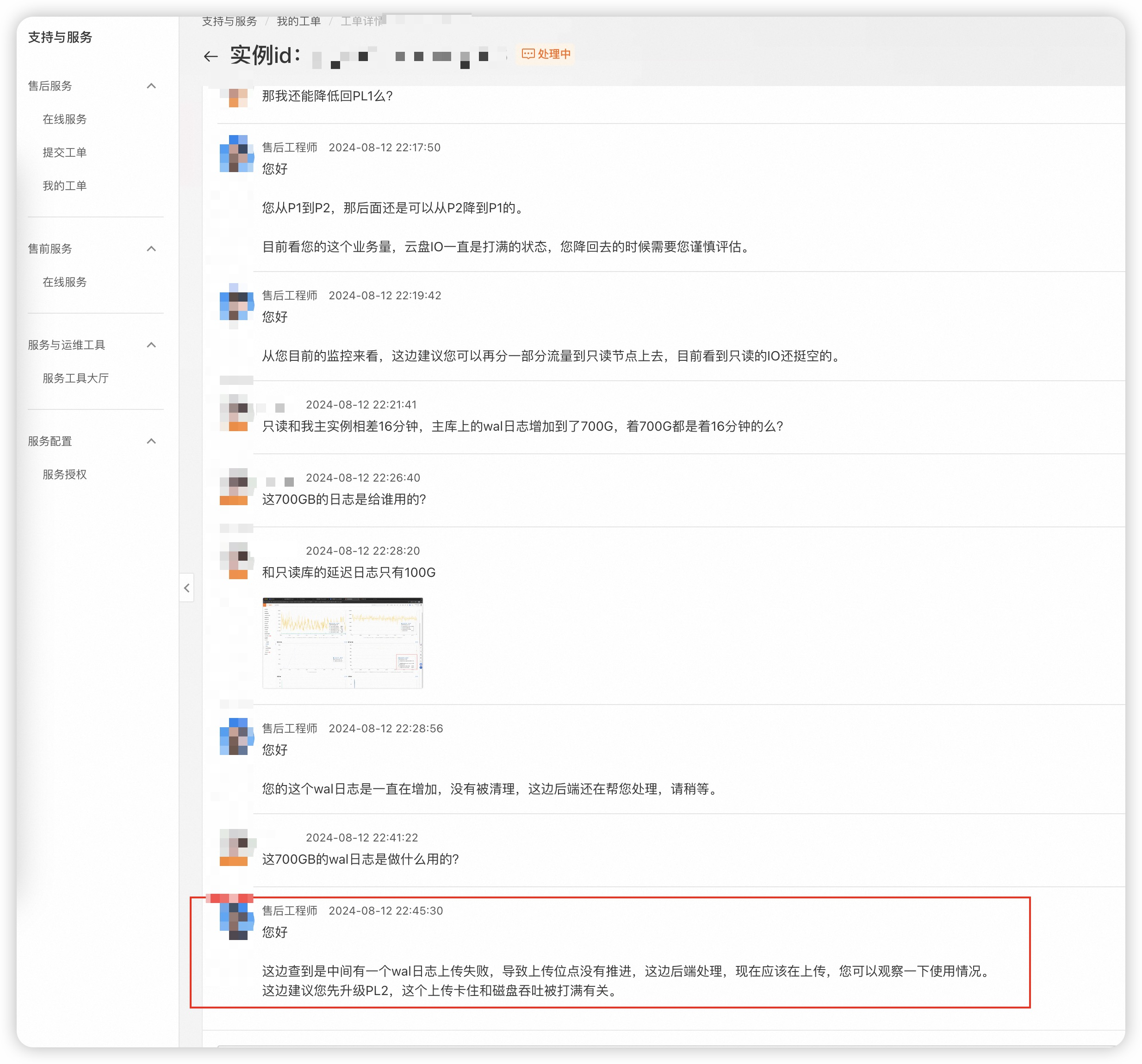

“为了避免数据损坏做了特殊处理” 这句话确实很有艺术性 —— 没错,把主库直接关掉可以实现 Fencing ,也确实不会在高可用切换时因为复制延迟丢数据,但客户数据写不进去了啊!这丢的数据可比那点延迟多多了。这种操作,很难不让人想起那个著名的《善治伛者》笑话:

WAL堆积:专家缺位

主从宕机的问题修复后,又折腾一个半小时,总算是把主库内存扩容完成了。然而一波未平一波又起,WAL日志又开始堆积起来,在几个小时内就堆积到了近 800 GB,如果不及时发现并处理,撑爆磁盘导致整库不可用是早晚的事。

也可以从监控上看到两个坑

RDS 工程师给出的诊断是,磁盘 IO 打满导致 WAL 堆积,建议升级磁盘,从 ESSD PL1 升级到 PL2。不过,这一次客户已经吃过一次内存扩容的教训了,没有立刻听信这一建议,而是找到了我咨询。

我看了情况后感觉非常离谱,负载没有显著变化,IO打满也不是这种卡死不动的情形。那么 WAL 堆积的原因不外乎那几个:复制延迟落后100多GB,复制槽保留了不到1GB,那剩下的大头就是 WAL归档失败。

我让客户给 RDS 提工单处理找根因,最后 RDS 侧确实找到问题是WAL归档卡住了并手工解决了,但距离 WAL 堆积已经过去近六个小时了,并在整个过程中体现出非常业余的素养,给出了许多离谱的建议。

另一个离谱建议:把流量打到复制延迟16分钟的从库上去

阿里云数据库团队并非没有 PostgreSQL DBA 专家,在阿里云任职的 德哥 Digoal 绝对是 PostgreSQL DBA 大师。然而看起来在 RDS 的产品设计中,并没有沉淀下多少 DBA 大师的领域知识与经验;而 RDS 售后工程师表现出来的专业素养,也与一个合格的 PG DBA ,哪怕是 GPT4 机器人都相差甚远。

我经常看到 RDS 的用户遇到了问题,通过官方工单没有得到解决,只能绕过工单,通过在 PG 社区中 直接求助德哥解决 —— 而这确实是比较考验运气与关系的一件事。

扩容磁盘:创收有术

在解决了“内存告警”, “从库宕机”, “主库宕机”, “WAL 堆积” 等连环问题后,已经接近凌晨了。但 WAL 堆积的根因是什么仍然不清楚,工程师的回复是 “与磁盘吞吐被打满有关”,再次建议升级 ESSD 云盘。

在事后的复盘中,工程师提到了 WAL归档失败的原因是 “RDS上传组件BUG”。所以回头看,如果客户真的听了建议升级云盘,也就就白花冤枉钱了。

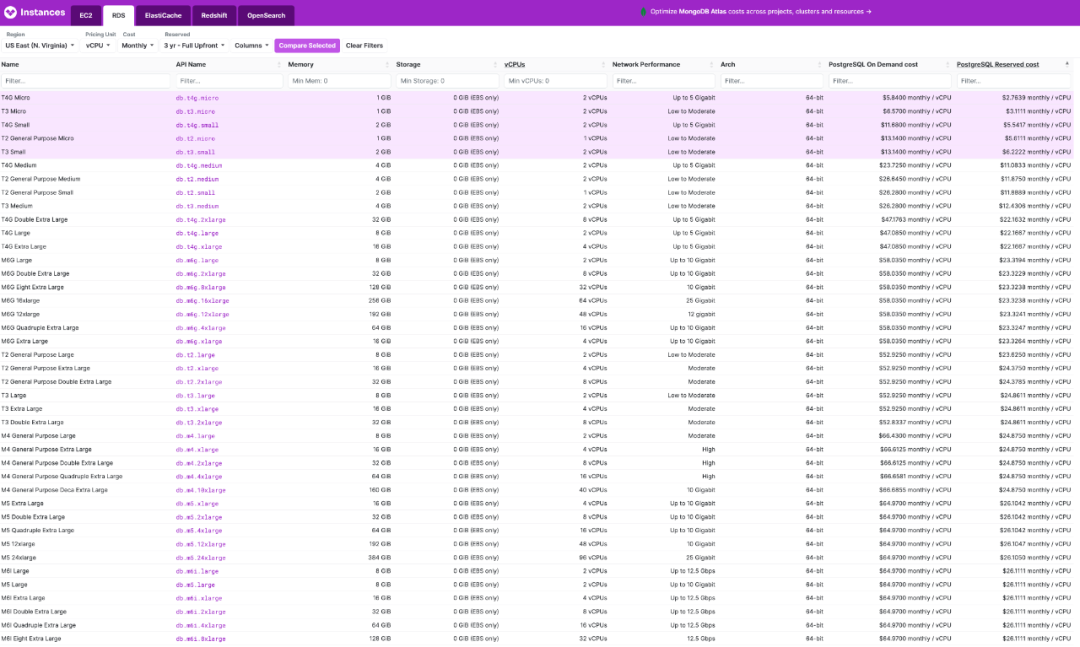

在 《云盘是不是杀猪盘》中我们分析过,云上溢价最狠的基础资源就是 ESSD 云盘。按照 《阿里云存算成本剖析》中给出的数字:客户 5TB 的 ESSD PL1 云盘包月价格 1 ¥/GB,那么每年光是云盘费用就要 12万。

| 单位价格:¥/GiB月 | IOPS | 带宽 | 容量 | 按需价格 | 包月价格 | 包年价格 | 预付三年+ |

|---|---|---|---|---|---|---|---|

| ESSD 云盘 PL0 | 10K | 180 MB/s | 40G-32T | 0.76 | 0.50 | 0.43 | 0.25 |

| ESSD 云盘 PL1 | 50K | 350 MB/s | 20G-32T | 1.51 | 1.00 | 0.85 | 0.50 |

| ESSD 云盘 PL2 | 100K | 750 MB/s | 461G-32T | 3.02 | 2.00 | 1.70 | 1.00 |

| ESSD 云盘 PL3 | 1M | 4 GB/s | 1.2T-32T | 6.05 | 4.00 | 3.40 | 2.00 |

| 本地 NVMe SSD | 3M | 7 GB/s | 最大单卡64T | 0.02 | 0.02 | 0.02 | 0.02 |

如果听从建议 “升级”到 ESSD PL2,没错 IOPS 吞吐能翻一倍,但单价也翻了一倍。单这一项“升级”操作,就能给云厂商带来额外 12 万的收入。

即使是 ESSD PL1 乞丐盘,也带有 50K 的 IOPS,而客户L场景的 20K TPS,80K RowPS 换算成 随机4K 页面 IOPS ,就算退一万步讲不考虑 PG 与 OS 的缓冲区(比如 99% 缓存命中率对于这种业务场景是很正常的),想硬打满它也是一件相当不容易的事情。

我不好判断遇到问题就建议 扩容内存 / 扩容磁盘 这样的做法,到底是出于专业素养不足导致的误诊,还是渴望利用信息不对称创收的邪念,抑或两者兼而有之 —— 但这种趁病要价的做法,确实让我联想起了曾经声名狼藉的莆田医院。

协议赔偿:封口药丸

在《云SLA是不是安慰剂》一文中,我已提醒过用户:云服务的 SLA 根本不是对服务质量的承诺,在最好的情况下它是提供情绪价值的安慰剂,而在最坏的情况下它是吃不了兜着走的哑巴亏。

在这次故障中,客户L收到了 1000 元代金券的补偿提议,对于他们的规模来说,这三瓜两枣差不多能让这套 RDS 多跑几天。在用户看来这基本上属于赤裸裸的羞辱了。

有人说云提供了“背锅”的价值,但那只对不负责的决策者与大头兵才有意义,对于直接背结果的 CXO 来说,整体性的得失才是最重要的,把业务连续性中断的锅甩给云厂商,换来一千块代金券没有任何意义。

当然像这样的事情其实不止一次,两个月前客户L还遇到过另一次离谱的从库宕机故障 —— 从库宕机了,然后控制台上监控根本看不到,客户自己发现了这个问题,提了工单,发起申诉,还是 1000¥ SLA 安慰补偿。

当然这还是因为客户L的技术团队水平在线,有能力自主发现问题并主动发出声索。如果是那种技术能力接近零的小白用户,也许就这么拖着瞒着 糊弄过去了。

还有那种 “SLA” 根本不管的问题 —— 例如也是客户L再之前的一个案例(原话引用):“为了折扣要迁移到另一个新的阿里云账号,新账号起了个同配置的 RDS 逻辑复制,复制了近1个月数据都没同步完,让我们直接放弃新账号迁移,导致浪费几万块钱。” —— 属实是花钱买罪受了,而且根本没地方说理去。

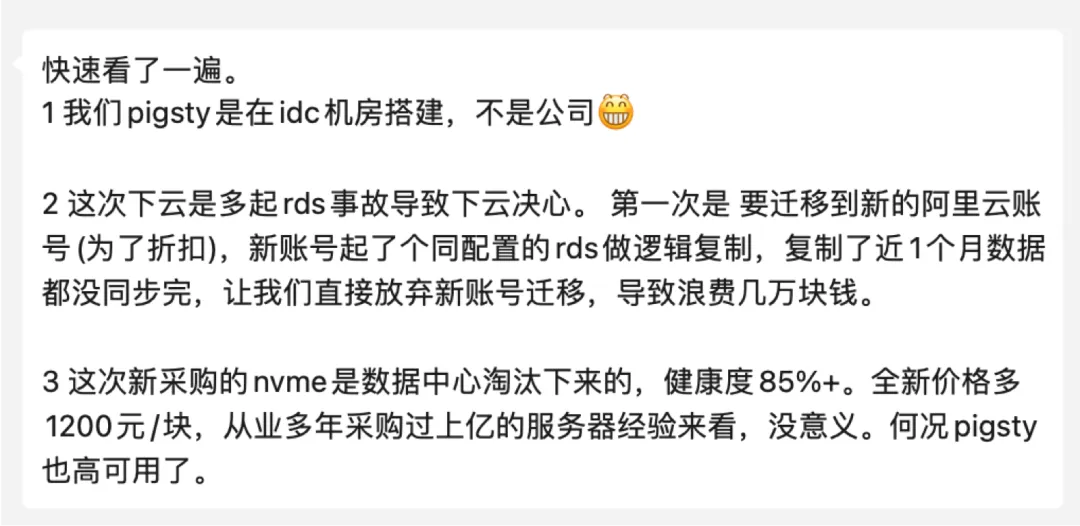

经过几次故障带来的糟心体验,客户L终于在这次事故后难以忍受,拍板决定下云了。

解决方案:下云自建

客户L在几年前就有下云的计划了,在 IDC 里弄了几台服务器,用 Pigsty 自建搭建了几套 PostgreSQL 集群,作为云上的副本双写,跑得非常不错。但要把云上 RDS 下掉,还是免不了要折腾一下,所以一直也就这样两套并行跑着。包括此次事故之前的多次糟心体验,最终让客户L做了下云的决断。

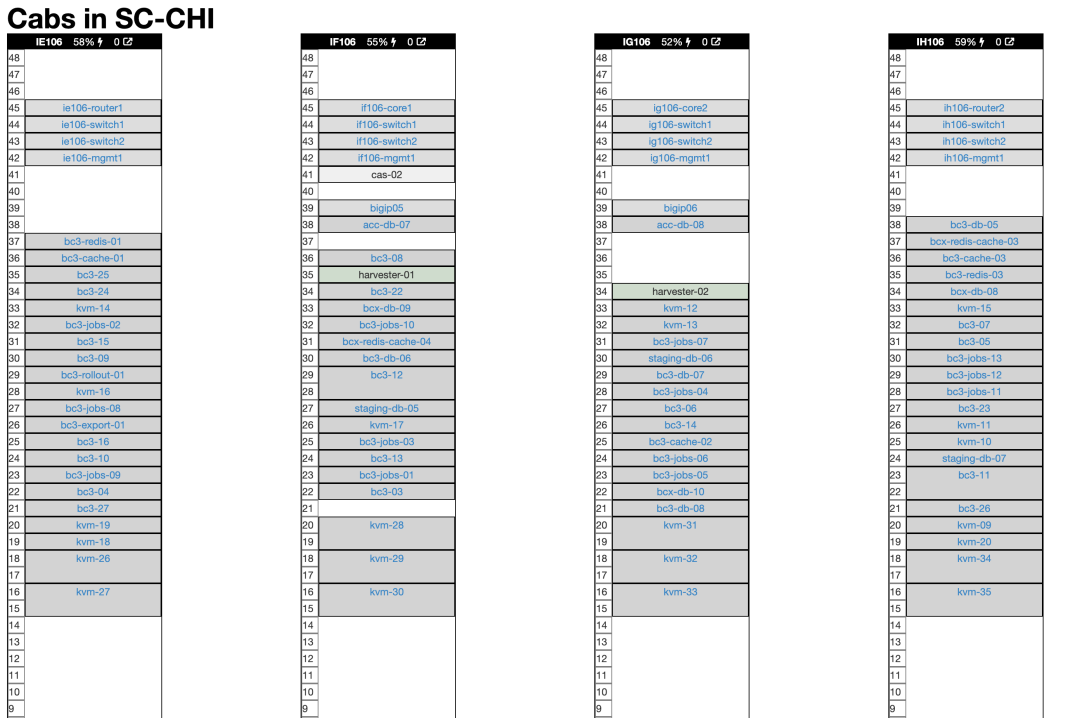

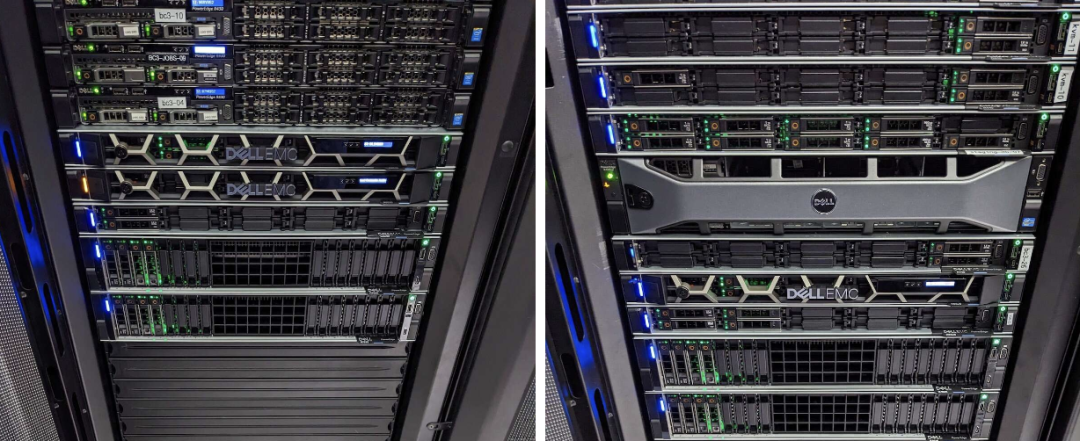

客户L直接下单加购了四台新服务器,以及 8块 Gen4 15TB NVMe SSD。特别是这里的 NVMe 磁盘,IOPS 性能是云上 ESSD PL1 乞丐盘的整整 20倍(1M vs 50K),而 TB·月 单位价格则是云上的 1/166 (1000¥ vs 6¥)。

题外话:6块钱TB月价,我只在黑五打折的 Amazon 上看到过。125 TB 才 44K ¥(全新总共再加 9.6K ¥),,果然是术业有专攻,经手了过亿采购的成本控制大师。

如在《下云奥德赛:是时候放弃云计算了吗》 中 DHH 所说的那样:

“我们明智地花钱:在几个关键例子上,云的成本都极其高昂 —— 无论是大型物理机数据库、大型 NVMe 存储,或者只是最新最快的算力。租生产队的驴所花的钱是如此高昂,以至于几个月的租金就能与直接购买它的价格持平。在这种情况下,你应该直接直接把这头驴买下来!我们将把我们的钱,花在我们自己的硬件和我们自己的人身上,其他的一切都会被压缩。”

对客户L来说,下云带来的好处是立竿见影的:只需要 RDS 几个月费用的一次性投资,就足够超配几倍到十几倍的硬件资源,重新拿回硬件发展的红利,实现惊人的降本增效水平 —— 你不再需要对着账单抠抠搜搜,也不用再发愁什么资源不够。这确实是一个值得思考的问题:如果云下资源单价变为十分之一甚至百分之几,那么云上鼓吹的弹性还剩多大意义?而阻止云上用户下云自建的原因又会是什么呢?

下云自建 RDS 服务最大的挑战其实是人与技能,客户L已经有着一个技术扎实的团队,但确实缺少在 PostgreSQL 上的专业知识与经验。这也是客户L之所以愿意为 RDS 支付高溢价的一个核心原因。但 RDS 在几次事故中体现出来的专业素养,甚至还不如客户本身的技术团队强,这就让继续留在云上变得毫无意义。

广告时间:专家咨询

在下云这件事上, 我很高兴能为客户L提供帮助与支持。 Pigsty 是沉淀了我作为顶级 PG DBA 领域知识与经验的开源 RDS 自建工具,已经帮助无数世界各地的用户自建了自己的企业级 PostgreSQL 数据库服务。尽管它已经将开箱即用,扩展整合,监控系统,备份恢复,安全合规,IaC 这些运维侧的问题解决的很好了。但想要充分发挥 PostgreSQL 与 Pigsty 的完整实力,总归还是需要专家的帮助来落地。

所以我提供了明码标价的 专家咨询服务 —— 对于客户L这样有着成熟技术团队,只是缺乏领域知识的客户,我只收取固定的 5K ¥/月 咨询费用,仅仅相当于半个初级运维的工资。但足以让客户放心使用比云上低一个数量级的硬件资源成本,自建更好的本地 PG RDS 服务 —— 而即使在云上继续运行 RDS,也不至于被“砖家”被嘎嘎割韭菜忽悠。

我认为咨询是一种站着挣钱的体面模式:我没有任何动机去推销内存与云盘,或者说胡话兜售自己的产品(因为产品是开源免费的!)。所以我完全可以站在甲方立场上,给出对甲方利益最优的建议。甲乙双方都不用去干苦哈哈的数据库运维,因为这些工作已经被 Pigsty 这个我编写的开源工具完全自动化掉了。我只需要在零星的关键时刻提供专家意见与决策支持,并不会消耗多少精力与时间,却能帮助甲方实现原本全职雇佣顶级DBA专家才能实现的效果,最终实现双方共赢。

但是我也必须强调,我提倡下云理念,从来都是针对那些有一定数据规模与技术实力的客户,比如这里的客户L。如果您的场景落在云计算舒适光谱中(例如用 1C2G 折扣 MySQL 跑 OA ),也缺乏技术扎实或值得信赖的工程师,我会诚实地建议你不要折腾 —— 99 一年的 RDS 总比你自己的 yum install 强不少,还要啥自行车呢?当然针对这种用例,我确实建议你考虑一下 Neon,Supabase,Cloudflare 这些赛博菩萨们的免费套餐,可能连一块钱都用不着。

而对于那些有一定规模,绑死在云数据库上被不断抽血的客户,你确实可以考虑另外一个选项:自建数据库服务绝非什么高深的火箭科学 —— 你需要做的只是找到正确的工具与正确的人而已。

扩展阅读

云数据库是不是智商税

我们能从网易云音乐故障中学到什么?

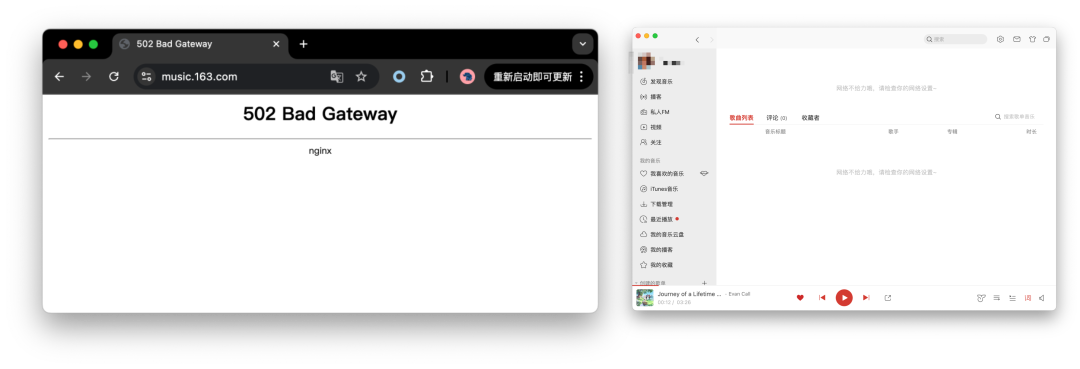

今天下午 14:44 左右,网易云音乐出现 不可用故障,至 17:11 分恢复。网传原因为基础设施/云盘存储相关问题。

故障经过

故障期间,网易云音乐客户端可以正常播放离线下载的音乐,但访问在线资源会直接提示报错,网页版则直接出现 502 服务器报错无法访问。

在此期间,网易 163门户也出现 502 服务器报错,并在一段时间后 302 重定向到移动版主站。期间也有用户反馈网易新闻与其他服务也受到影响。

许多用户都反馈连不上网易云音乐后,以为是自己网断了,卸了APP重装,还有以为公司 IT 禁了听音乐站点的,各种评论很快将此次故障推上微博热搜:

期间截止到 17:11 分,网易云音乐已经恢复,163 主站门户也从移动版本切换回浏览器版本,整个故障时长约两个半小时,P0 事故。

17:16 分,网易云音乐知乎账号发布通知致歉,并表示明天搜“畅听音乐”可以领取 7 天黑胶 VIP 的朋友费。

原因推断

在此期间,出现各种流言与小道消息。总部着火🔥 (老图),TiDB 翻车(网友瞎编),下载《黑神话悟空》打爆网络,以及程序员删库跑路等就属于一眼假的消息。

但也有先前网易云音乐公众号发布的一篇文章《云音乐贵州机房迁移总体方案回顾》,以及两份有板有眼的网传聊天记录,可以作为一个参考。

网传此次故障与云存储有关,网传聊天记录就不贴了,可以参考《网易云音乐宕机,原因曝光!7月份刚迁移完机房,传和降本增效有关。》一文截图,或者权威媒体的引用报道《独家|网易云音乐故障真相:技术降本增效,人手不足排查了半天》。

我们可以找到一些关于网易云存储团队的公开信息,例如,网易自研的云存储方案 Curve 项目被枪毙了。

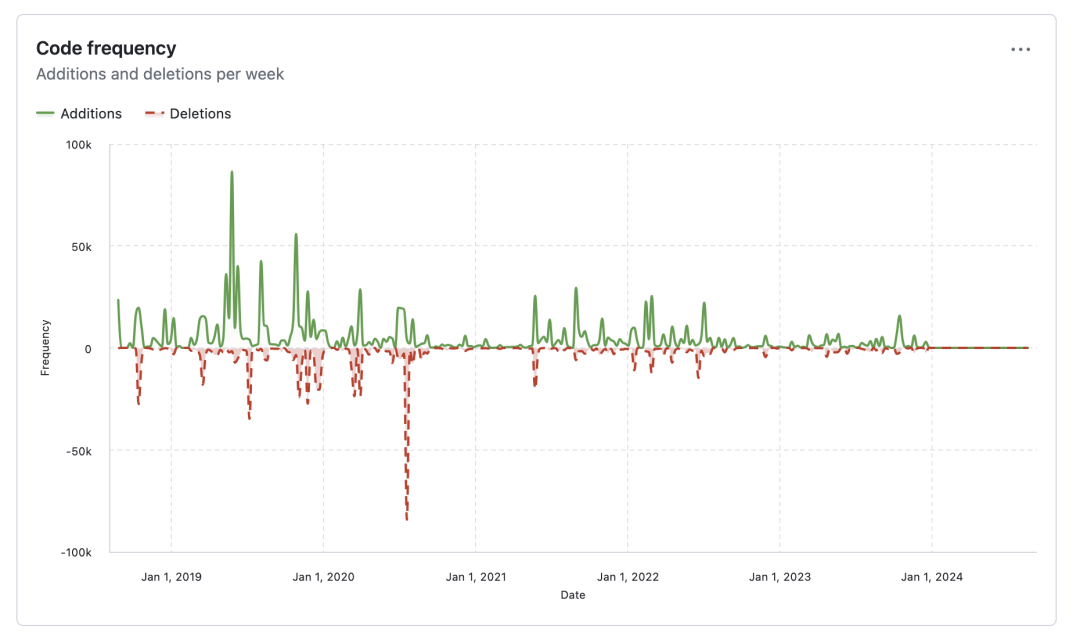

查阅 Github Curve 项目主页,发现项目在 2024 年初后就陷入停滞状态:

最后一个 Release 一直停留在RC没有发布正式版,项目已经基本无人维护,进入静默状态。

Curve 团队负责人还发表过一篇《curve:遗憾告别 未竟之旅》的公众号文章,并随即遭到删除。我对这件事有些印象,因为 Curve 是 PolarDB 推荐的两个开源共享存储方案之一,所以特意调研过这个项目,现在看来……

经验教训

关于裁员与降本增效的老生长谈已经说过很多了,我们又还能从这场事故中学习到什么教训呢?以下是我的观点:

第一个教训是,不要用云盘跑严肃数据库!在这件事上,我确实可以说一句 “ Told you so” 。底层块存储基本都是提供给数据库用的。如果这里出现了故障,爆炸半径与 Debug 难度是远超出一般工程师的智力带宽的。如此显著的故障时长(两个半小时),显然不是在无状态服务上的问题。

第二个教训是 —— 自研造轮子没有问题,但要留着人来兜底。降本增效把存储团队一锅端了,遇到问题找不到人就只能干着急。

第三个教训是,警惕大厂开源。作为一个底层存储项目,一旦启用那就不是简单说换就能换掉的。而网易毙掉 Curve 这个项目,所有这些用 Curve 的基建就成了没人维护的危楼。Stonebraker 老爷子在他的名著论文《What Goes Around Comes Around》中就提到过这一点:

参考阅读

蓝屏星期五:甲乙双方都是草台班子

最近,因为网络安全公司 CrowdStrike 发布的一个配置更新,全球范围内无数 Windows 电脑都陷入蓝屏死机状态,无数的混乱 —— 航司停飞,医院取消手术,超市、游乐园、各行各业歇业。

表:受到影响的行业领域、国家地区与相关机构(CrowdStrike导致大规模系统崩溃事件的技术分析)

| 涉及领域 | 相关机构 |

|---|---|

| 航空运输 | 美国、澳大利亚、英国、荷兰、印度、捷克、匈牙利、西班牙、中国香港、瑞士等部分航空公司出现航班延误或机场服务中断。美国达美航空、美国航空和忠实航空宣布停飞所有航班。 |

| 媒体通信 | 以色列邮政、法国电视频道TF1、TFX、LCI和Canal+Group网络、爱尔兰国家广播公司RTÉ、加拿大广播公司、沃丰达集团、电话和互联网服务提供商Bouygues Telecom等。 |

| 交通运输 | 澳大利亚货运列车运营商Aurizon、西日本旅客铁道公司、马来西亚铁路运营商KTMB、英国铁路公司、澳大利亚猎人线和南部高地线的区域列车等。 |

| 银行与****金融服务 | 加拿大皇家银行、加拿大道明银行、印度储备银行、印度国家银行、新加坡星展银行、巴西布拉德斯科银行、西太平洋银行、澳新银行、联邦银行、本迪戈银行等。 |

| 零售 | 德国连锁超市Tegut、部分麦当劳和星巴克、迪克体育用品公司、英国杂货连锁店Waitrose、新西兰的Foodstuffs和Woolworths超市等。 |

| 医疗服务 | 纪念斯隆凯特琳癌症中心、英国国家医疗服务体系、德国吕贝克和基尔的两家医院、北美部分医院等。 |

| …… | …… |

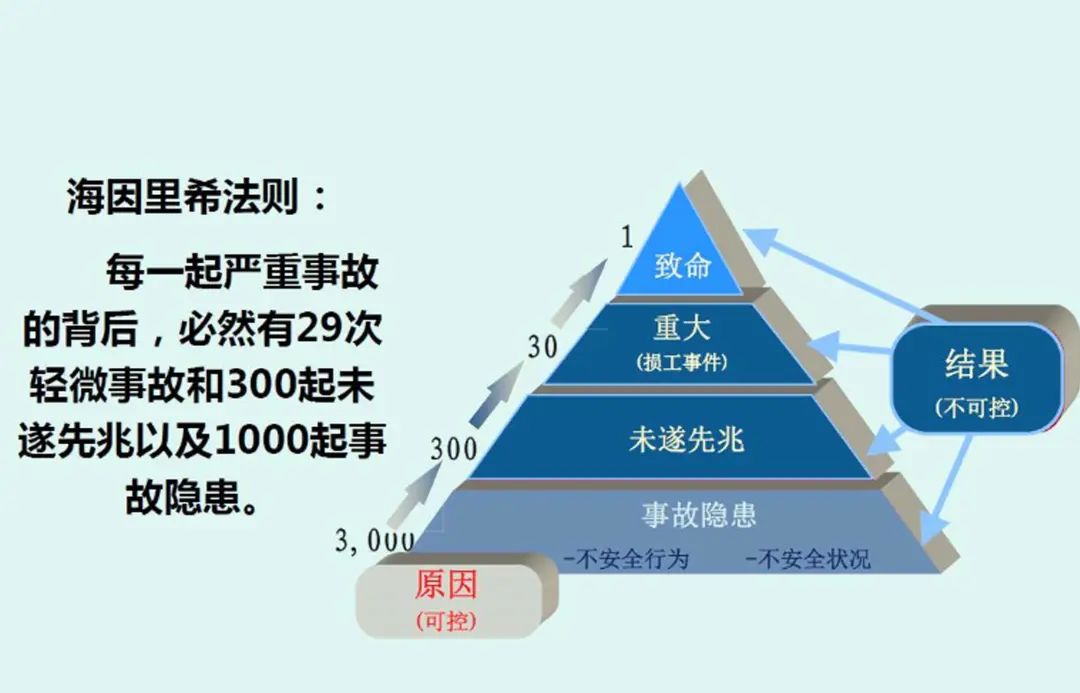

在这次事件中,有许多程序员在津津乐道哪个 sys 文件或者配置文件搞崩了系统 (CrowdStrike官方故障复盘),或者XX公司是草台班子云云 —— 做安全的乙方和甲方工程师撕成一片。但在我看来,这个问题根本不是一个技术问题,而是一个工程管理问题。重要的也不是指责谁是草台班子,而是我们能从中吸取什么教训?

在我看来,这场事故是甲乙方两侧的共同责任 —— 乙方的问题在于:崩溃率如此之高的变更,为什么在没有灰度的情况下迅速发布到了全球?有没有做过灰度测试与验证?甲方的问题在于:将自己的终端安全全部寄托于供应链的可靠之上,为什么能允许这样的变更联网实时推送到自己的电脑上而不加以控制?

控制爆炸半径是软件发布中的一条基本原则,而灰度发布则是软件发布中的基本操作。互联网行业中的许多应用会采用精细的灰度发布策略,比如从 1% 的流量开始滚动上量,一旦发现问题也能立刻回滚,避免一勺烩大翻车的出现。

数据库与操作系统变更同理,作为一个管理过大规模生产环境数据库集群的 DBA,我们在对数据库或者底层操作系统进行变更时,都会极为小心地采取灰度策略:首先在 Devbox 开发环境中测试变更,然后应用到预发/UAT/Staging环境中。跑几天没事后,开始针对生产环境发布:从一两套边缘业务开始,然后按照业务重要性划分的 A、B、C 三级,以及从库/主库的顺序与批次进行灰度变更。

某头部券商运维主管也在群里分享了金融行业的最佳实践 —— 直接网络隔离,禁止从互联网更新,买微软 ELA,在内网搭建补丁服务器,然后几万台终端/服务器从补丁服务器统一更新补丁和病毒库。灰度的方式是每个网点和分支机构/每个业务部门都选择一到两台组成灰度环境,跑一两天没事,进入大灰度环境跑满一周,最后的生产环境分成三波每天更新一次,完成整个发布。如果遇到紧急安全事件 —— 也会使用同样的灰度流程,只是把时间周期从一两周压缩到几个小时而已。

当然,有些乙方安全公司,安全出身的工程师会提出不同的看法:“安全行业不一样,我们要与病毒赶时间“,”病毒研究员发现最新的病毒,然后判断如何最快的全网防御”,“病毒来的时候,我的安全专家判断需要启用,跟你们打招呼来不及”,“蓝屏总好过数字资产丢失或者被人随意控制”。但对甲方来说,安全是一整个体系,配置灰度发布晚一点不是什么大不了的事情,然而集中批量崩溃这种惊吓则是让人难以接受的。

至少对于企业客户来说,更不更新,什么时候更新,这个利弊权衡应该是由甲方来做的,而不是乙方去拍脑袋决定。而放弃这一职责,无条件信任乙方供应商给你空中升级的甲方,也是草台班子。安全软件是合法的,大规模肉鸡软件,即使用户以最大的善意信任供应商没有主观恶意,但在实践中也难以避免因为无心之失与愚蠢傲慢导致的灾难(比如这次蓝屏星期五)。

(美剧《太空部队》名梗:紧急任务遇到Microsoft强制更新)

如果你的系统真的很重要,在接受任何变更与更新前请切记 —— Trust,But Verify。如果供应商不提供 Verify 这个选项,你应该在权力范围内果断 Say No。

我认为这次事件会极大利好 “本地优先软件” 的理念 —— 本地优先不是不更新,不变更,一个版本用到地老天荒,而是能够在无需联网的情况下,在你自己的电脑与服务器上持续运行。用户与供应商依然可以通过补丁服务器,与定期推送的方式升级功能,更新配置,但更新的时间点、方法、规模、策略都应当允许由用户自行指定,而不是由供应商越俎代庖替你决策。我认为这一点才是 “自主可控” 概念的真正实质。

在我们自己的开源 PostgreSQL RDS,数据库管控软件 Pigsty 中,也一直践行着本地优先的原则。每当我们发布一个新版本时,我们会对所有需要安装的软件及其依赖取一个快照,制作成离线软件安装包,让用户在没有互联网访问的环境下,无需容器也可以轻松完成高度确定性的安装。如果用户希望部署更多套数据库集群,他可以期待环境中的版本总是一致的 —— 这意味着,你可以随意移除或添加节点进行新陈代谢,让数据库服务跑到地老天荒。

如果您需要升级软件版本打补丁,将新版本软件包加入本地软件源,使用 Ansible 剧本批量更新即可。您可以选择用老旧 EOL 版本跑到地老天荒,也可以选择在第一时间发布就更新并尝鲜最新特性,您可以按照软件工程最佳实践依次灰度发布,但真想要糙猛快一把梭全量上也随意,我们只提供默认的行为与实践的工具,但说到底,这是用户的自由与选择。

俗话说,物极必反,在 SaaS 与云服务盛行的当下,关键基础设施故障的单点风险与脆弱性愈加凸显。相信在本次事故后,本地优先软件的理念将会在未来得到更多的关注与实践。

Ahrefs不上云,省下四亿美元

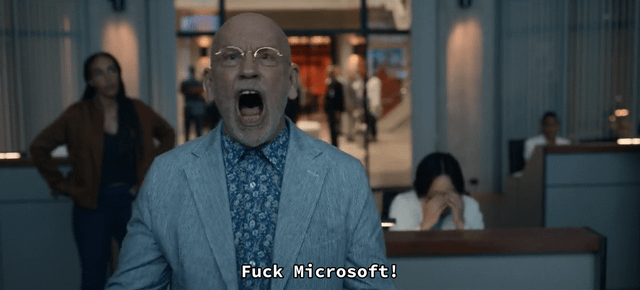

原文:How Ahrefs Saved US$400M in 3 Years by NOT Going to the Cloud

最近云计算在 IT 基础设施领域非常流行,上云成为一种趋势。基础设施即服务云(IaaS)确实有很多优点:灵活、部署敏捷、伸缩简便、在全球多地区都能即时上线,等等等等。

云服务提供商已经成为专业的 IT 服务外包供应商,提供便捷且易用的服务 —— 通过出色的营销、会议、认证和精心挑选的使用案例,他们很容易让人以为,云计算是现代企业 IT 的唯一合理选择。

但是,这些外包云计算服务的成本,有时高到离谱,高到我们担心如果基础设施 100% 依赖云计算,我们的业务是否还能存在。这促使我们基于事实,进行实际的比较。以下是比较结果:

Ahrefs 自有硬件概览

Ahrefs 在新加坡租用了一个托管数据中心 —— 高度同质化的标准基础设施。我们核算了这个数据中心的所有成本,并分摊到每台服务器上,然后与 Amazon Web Services (AWS) 云中进行类似的规格进行了成本对比(因为 AWS 是 IaaS 领导者,所以我们将其用作对比的标杆)

Ahrefs 服务器

我们的硬件相对较新。托管合同始于 2020 年中 —— 即 COVID-19 疫情高峰期。所有设备也都是从那时候起新买的。我们在该数据中心的服务器配置基本都是一致的,唯一的区别是 CPU 有两种代际,但核数相同。我们每台服务器都有很高的核数,2TB 内存和两个 100G 网口,硬盘的话,平均每台服务器有16块 15TB 的硬盘。

为了计算每月成本,我们把所有硬件按五年摊销归零核算,超过五年后继续用算白赚。因此这些设备的 启动成本,会摊销到 60 个月中进行核算。

所有的 持续性成本,例如租金和电费,均以 2022 年 10 月的价格计算。尽管通货膨胀也会有影响,但这里把通胀算上就太复杂了,所以我们先忽略通胀因素。

我们的托管费用由两部分组成:租金,以及 实际消耗的电费。自 2022 年初以来,电价大幅上涨。我们计算的时候使用的是最新的高电价,而不是整个租赁周期的的平均电价,这样计算上会让 AWS 占些便宜。

此外,我们还要支付 IP 网络传输费用,和数据中心与我们驻地之间的暗光纤费用(暗光纤:已经铺设但是没有投入使用的光缆)。

下表显示了我们每台服务器的月度支出。服务器硬件占整体月度支出的 2/3,而数据中心租金和电费 (DC)、互联网服务提供商 (ISP) 的 IP 传输费用、暗光纤 (DF) 和内部网络硬件 (Network HW) 构成了剩余的三分之一。

| 自建成本项 | 每月成本(美元) | 每月成本(人民币) | 百分比 |

|---|---|---|---|

| 服务器 | $ 1,025 | ¥ 7,380 | 66% |

| 数据中心、ISP、DF、网络硬件 | $ 524 | ¥ 3,772.8 | 34% |

| 自建总成本 | $ 1,550 | ¥ 11,160 | 100% |

我们的自有本地硬件成本结构

AWS 成本结构

由于我们托管的数据中心位于新加坡,所以我们使用 AWS 亚太地区(新加坡)区域的价格进行对比。

AWS 的成本结构与托管中心不同。不幸的是,AWS 没有提供与我们服务器核数相匹配的 EC2 服务器实例。因此,我们找到了CPU & 内存正好是一半的相应 AWS 实例,然后将一台 Ahrefs 服务器的成本,与两台这种 EC2 实例的成本进行对比。

译注:EC2 定价正比与核数与内存配比,这样成本对比没有问题

此外我们考虑了长期折扣,因此我们使用 EC2 实例的最低价格 —— 三年预留,与五年摊销的本地自建服务器进行对比。

译注:AWS EC2 三年 Reserved All Upfront 即提供最好的折扣

除了 EC2 实例外,我们还添加了弹性块存储 (EBS) ,它并不是直接附加存储的精准替代品 —— 因为我们在服务器中使用的是大容量且快速的 NVMe 盘。为了简化计算,我们选择了更便宜的 gp3 EBS(尽管这种盘速度比我们的慢很多)。其成本由两部分组成:存储大小和 IOPS 费用。

译注:EBS

gp3延迟在 ms 量级,io2在百微秒量级,本地盘在 55/9µs 量级

We keep two copies of a data chunk on our servers. But we only order usable space in EBS that takes care of the replication for us. So we should consider the price of the gp3 storage size equal to the size of our drives divided by 2: (11TB + 1615TB)/2 ≈ 120TB per server.

因为我们自己用磁盘的时候会复制一份,但是买 EBS 的时候只买实际存储空间,EBS 替你处理数据复制。

所以在成本对比时,我们购买的 gp3 存储大小是本地磁盘的一半:(1TB + 15TB 16块) / 2 ≈ 每台服务器 120TB。

我们还没有把 IOPS 的成本算进来,也忽略了 EBS gp3 的各种限制;例如,gp3 云盘的最大吞吐量/实例为 10GB/s。而单个 PCIe Gen 4 NVMe 驱动器的性能为 6-7GB/s,而我们有 16 个并行工作的磁盘,总吞吐要大的多。因此,这完全不是一个维度上的公平比较。这种比较方法显著低估了 AWS 上的存储成本,并进一步让 AWS 在比较中占尽便宜。

关于网络流量费用,AWS 与托管机房不同,AWS 不是按照带宽来计费的,而是按每GB下行流量来收费。因此,我们大致估算了每台服务器的平均下行流量,然后使用 AWS 网络计费方法进行估算。

将所有三项成本组合起来,我们得到了 AWS 上的的成本分布,如下表所示

| AWS 成本项 | 每月成本(美元) | 每月成本(人民币) | 百分比 |

|---|---|---|---|

| EBS 成本 | $ 11,486 | ¥ 82,699.2 | 65% |

| EC2 成本 | $ 5,607 | ¥ 40,370.4 | 32% |

| 数据传输 | $ 464 | ¥ 3,340.8 | 3% |

| AWS 总成本 | $ 17,557 | ¥ 126,410.4 | 100% |

AWS成本结构

自建与AWS对比

综合以上两个表格不难看出,AWS 上的支出比想象中要高得多。

| 自建成本项 | 月成本 $ | 占比 | AWS 成本项目 | 月消 $ | 占比 | |

|---|---|---|---|---|---|---|

| 服务器 | 1,025 | 66% | EBS 成本 | 11,486 | 65% | |

| DC、ISP、DF、网络硬件 | 524 | 34% | EC2 成本 | 5,607 | 32% | |

| 数据传输 | 464 | 3% | ||||

| 本地总成本 | 1,550 | 100% | AWS 总成本 | 17,557 | 100% |

我们的自建成本与 AWS EC2 月消费对比:一台 AWS 服务器成本粗略等于 11.3 台 Ahrefs 自建服务器

在 AWS 上一台具有相似可用 SSD 空间的替代 EC2 实例的成本,大致相当于托管数据中心中 11.3 台服务器的成本。相应来说,如果上了云,我们这 20 台服务器的机架就只能剩下大约 2 台服务器了!

20 台 Ahrefs 服务器的成本与 2 台 AWS 服务器相当

那么用我们在自建数据中心里实际使用了两年半的这 850 台服务器来计算,算出总成本数字后,不难看到极其惊人的差异!

| 自建本地服务器 | 每月成本 $ | AWS EC2 实例 | 每月成本 $ | |

|---|---|---|---|---|

| 850台服务器每月成本 | 1,317,301 | 850台服务器每月成本 | 14,923,154 | |

| 30个月总成本 | 39,519,025 | 30个月总成本 | 447,694,623 | |

| AWS成本 - 自建成本 | $ 408,175,598 |

850 台服务器用30个月的成本:AWS vs 自建

假设我们在实际的 2.5 年数据中心使用期间运行 850 台服务器。计算后可以看到显著的差异。

如果我们选择在 2020 年使用新加坡区域的 AWS,而不是自建,那我们需要向 AWS 支付 超过 4 亿美元 这样一笔天文数字 ,才能让自己的基础设施跑起来!

有人可能会想,“或许 Ahrefs 能付得起?”

确实,Ahrefs 是一家盈利且自给自足可持续的公司,所以让我们来看一下它的营收,并算一算。尽管我们是一家私有公司,不必公布我们的财务数据。但在《海峡时报》关于 2022 年和 2023 年新加坡增长最快的公司的文章中可以找到一些 Ahrefs 的收入信息。这些文章提供了 Ahrefs 在 2020 年和 2021 年的收入数据。

我们还可以线性外推出 2022 年的收入。这是一个粗略估计,但足以让我们得出一些结论了。

| 年份 | 类型 | 收入, 新币 | SGD/USD | 收入, 美元 |

|---|---|---|---|---|

| 2020 | 实际 | SGD 86,741,880 | 0.7253 | USD 62,913,886 |

| 2021 | 实际 | SGD 115,335,291 | 0.7442 | USD 85,832,524 |

| 2022 | 外推 | ??? | 0.7265 | USD 108,751,162 |

| 总计 | USD 257,497,571 |

Ahrefs 2020 - 2022 营收估计

从上表可以看出,Ahrefs 在过去三年的总收入约为 2.57 亿美元。但我们也计算出,如果将这样一个自建数据中心替换为 AWS,成本约为 4.48 亿美元。因此,公司收入甚至无法覆盖这 2.5 年的 AWS 使用成本。

这是一个令人震惊的结果!

但是我们的利润会去哪儿呢?

正如波音公司 Dr. LJ Hart-Smith 在这份 已有 20 年历史的报告中所述:“如果原厂或总包商无法通过将工作外包来获利,那么谁会受益呢?当然是分包商。”

需要记住的是,我们已经在计算时让 AWS 占尽便宜了 —— 自建数据中心算电费时使用高于平均水平的电价,算EBS 云盘存储价格时只算了空间部分没算 IOPS,并无视了 EBS 其实非常拉垮的慢。而且,这个数据中心并不是我们唯一的成本中心。我们在其他数据中心、服务器、服务、人员、办公室、营销活动上也有支出。

因此,如果我们主要的基础设施放在云上,Ahrefs 几乎无法生存。

其他考虑因素

这篇文章没有考虑其他使比较更加复杂的方面。这些方面包括人员技能、财务控制、现金流、根据负载类型进行的容量规划等。

结论

Ahrefs 省下了约 4 亿美元,因为在过去的两年半中,基础设施没有 100% 上云。这个数字还在增加,因为目前我们又在一个新地方,用新硬件搞了另一个大型托管数据中心。

Ahrefs 利用 AWS 的优势,在世界各地托管我们的前端,但 Ahrefs 基础设施的绝大部分,隐藏在托管数据中心的自有硬件上。如果我们的产品完全依赖 AWS,Ahrefs 将无法盈利,甚至无法存在。

如果我们采用只用云的方式,我们的基础设施成本将高出 10 倍以上。但正因为我们没有这样做,我们可以将节省下来的资金,用于实际的产品改进和开发。而且也带来了更快、更好的结果 —— 因为(考虑到云上的限制),我们的服务器比云计算能提供的速度更快。我们的报表也生成得更快且更全面,因为每份报告所需的时间更短。

基于此,我建议那些对可持续增长感兴趣的 CFO、CEO 和企业主们,仔细考虑并定期重新评估云计算的优势与实际成本。虽然云计算是早期创业公司的自然选择,或者说 100% 吧。但随着公司及其基础设施的增长,完全依赖云计算可能会使公司陷入困境。

而这里就出现了两难:

一旦上了云,想要离开是很复杂的。云计算很方便,但会带来供应商锁定。而且,仅仅是出于更高的成本而放弃云计算基础设施,可能并不是工程团队想要的。他们可能会正确地认为 —— 云计算比传统的砖瓦数据中心和物理服务器环境更容易、更灵活。

对于更成熟阶段的公司,下云迁移到自有基础设施是困难的。在迁移过程中保持公司生存也是一个挑战。但这种痛苦的转变可能是拯救公司的关键,因为它可以避免将收入越来越多的一部分不断支付给云厂商。

大公司,尤其是 FAANG 多年来吸走了大量人才。他们一直在招聘工程师来运营他们庞大的数据中心和基础设施,给小公司留下的机会很少。但最近几个月大科技公司的大规模裁员,带来了重新评估云计算的机会 —— 确实可以考虑一下招聘数据中心领域资深专业人士,并从云上搬迁下来。

如果你要创办一家新公司,考虑一下这种方案:买个机架和服务器,把它们放在你家的地下室。也许这样能从第一天起就提高你们公司的可持续性。

下云老冯评论

很高兴又看到一个难以忍受天价云租金的大客户站出来,发起对云厂商的控诉。 Ahrefs 的经验与我们一致 —— 云上服务器的综合持有成本在是本地自建的 10 倍左右 —— 即使考虑了最好的 Saving Plan 与深度折扣。37 Signal 的 DHH 则提供了另一个更有代表性的下云案例。

同时在 Ahrefs 成本核算中我们不难看出成本的结构化差异 —— 自建的存储成本是服务器成本的一半,而云上的存储成本却是服务器成本的一倍 —— 我有一篇文章专门聊过这个问题 —— 云盘是不是杀猪盘?

在几个关键例子上,云的成本都极其高昂 —— 无论是大型物理机数据库、大型 NVMe 存储,或者只是最新最快的算力。在这些用例上,云上的客户不得不忍受高昂到荒诞的定价带来的羞辱 —— 租生产队的驴所花的钱是如此高昂,以至于几个月甚至几周的租金就能与直接购买它的价格持平。在这种情况下,你应该直接直接把这头驴买下来,而不是给赛博领主交租!

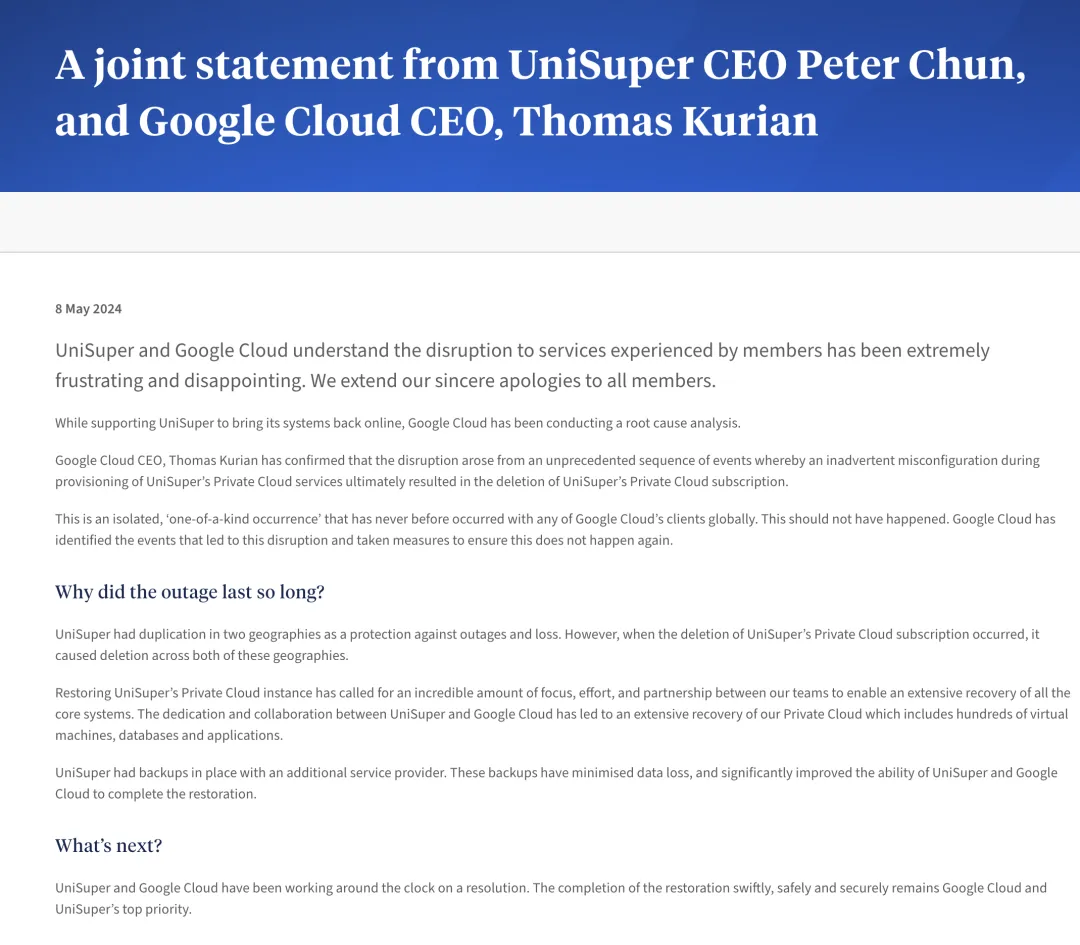

删库:Google云爆破了大基金的整个云账户

由于“前所未有的配置错误”,Google Cloud 误删了 UniSuper 的云账户。

澳洲养老金基金负责人与 Google Cloud 全球首席执行官联合发布声明,为这一“极其令人沮丧和失望”的故障表示道歉。

因为一次 Google Cloud “举世无双” 的配置失误,澳洲养老金基金 Unisuper 的整个云账户被误删了,超过五十万名 UniSuper 基金会员一周都无法访问他们的养老金账户。故障发生后,服务于上周四开始恢复,UniSuper 表示将尽快更新投资账户的余额。

UniSuper首席执行官 Peter Chun 在周三晚上向62万名成员解释,此次中断非由网络攻击引起,且无个人数据在此次事件中泄露。Chun 明确指出问题源于 Google的云服务。

在 Peter Chun 和 Google Cloud 全球CEO Thomas Kurian 的联合声明中,两人为此次故障向成员们致歉,称此事件“极其令人沮丧和失望”。他们指出,由于配置错误,导致 UniSuper 的云账户被删除,这是 Google Cloud 上前所未有的事件。

Google Cloud CEO Thomas Kurian 确认了这次故障的原因是,在设定 UniSuper 私有云服务过程中的一次疏忽,最终导致 UniSuper 的私有云订阅被删除。两人表示,这是一次孤立的、前所未有的事件,Google Cloud 已采取措施确保类似事件不再发生。

虽然 UniSuper 通常在两个不同的地理区域设置了备份,以便于服务故障或丢失时能够迅速恢复,但此次删除云订阅的同时,两地的备份也同时被删除了。

万幸的是,因为 UniSuper 在另一家供应商里还有一个备份,所以最终成功恢复了服务。这些备份在极大程度上挽回了数据损失,并显著提高了 UniSuper 与 Google Cloud 完成恢复的能力。

“UniSuper 私有云实例的全面恢复,离不开双方团队的极大专注努力,以及双方的密切合作” 通过 UniSuper 与 Google Cloud 的共同努力与合作,我们的私有云得到了全面恢复,包括 数百台虚拟机、数据库和应用程序。

UniSuper 目前管理着大约 1250 亿美元 的基金。

下云老冯评论

如果说 阿里云全球服务不可用 大故障称得上是 “史诗级”,那么 Google 云上的这一次故障堪称 “无双级” 了。前者主要涉及服务的可用性,而这次故障直击许多企业的命根 —— 数据完整性。

据我所知这应当是云计算历史上的新纪录 —— 第一次如此大规模的删库。上一次类似的数据完整性受损事件还是 腾讯云与 “前言数控” 的案例。

但一家小型创业公司与掌管千亿美金的大基金完全不可同日而语;影响的范围与规模也完全不可同日而语 —— 整个云账户下的所有东西都没了!

这次事件再次展示了(异地、多云、不同供应商)备份的重要性 —— UniSuper 是幸运的,他们在别的地方还有其他备份。

但如果你相信公有云厂商在其他的区域 / 可用区的数据备份可以帮你“兜底”,那么请记住这次案例 —— 避免 Vendor Lock-in,并且 Always has Plan B。

云上黑暗森林:打爆云账单,只需要S3桶名

公有云上的黑暗森林法则出现了:只要你的 S3 对象存储桶名暴露,任何人都有能力刷爆你的云账单。

试想一下,你在自己喜欢的区域创建了一个空的、私有的 AWS S3 存储桶。第二天早上,你的 AWS 账单会是什么样子?

几周前,我开始为客户开发一个文档索引系统的概念验证原型 (PoC)。我在 eu-west-1 区域创建了一个 S3 存储桶,并上传了一些测试文件。两天后我去查阅 AWS 账单页面,主要是为了确认我的操作是否在免费额度内。结果显然事与愿违 —— 账单超过了 1300美元,账单面板显示,执行了将近 1亿次 S3 PUT 请求,仅仅发生在一天之内!

我的 S3 账单,按每天/每个区域计费

这些请求从哪儿来?

默认情况下,AWS 并不会记录对你的 S3 存储桶的请求操作。但你可以通过 AWS CloudTrail 或 S3 服务器访问日志 启用此类日志记录。开启 CloudTrail 日志后,我立刻发现了成千上万的来自不同账户的写请求。

为何会有第三方账户对我的 S3 存储桶发起未授权请求?

这是针对我的账户的类 DDoS 攻击吗?还是针对 AWS 的?事实证明,一个流行的开源工具的默认配置,会将备份存储至 S3 中。这个工具的默认存储桶名称竟然和我使用的完全一致。这就意味着,每一个部署该工具且未更改默认设置的实例,都试图将其备份数据存储到我的 S3 存储桶中!

备注:遗憾的是,我不能透露这个工具的名称,因为这可能会使相关公司面临风险(详情将在后文解释)。

所以,大量未经授权的第三方用户,试图在我的私有 S3 存储桶中存储数据。但为何我要为此买单?

对未授权的请求,S3也会向你收费!

在我与 AWS 支持的沟通中,这一点得到了证实,他们的回复是:

是的,S3 也会对未授权请求(4xx)收费,这是符合预期的。

因此,如果我现在打开终端输入:

aws s3 cp ./file.txt s3://your-bucket-name/random_key

我会收到 AccessDenied 错误,但你要为这个请求买单。

还有一个问题困扰着我:为什么我的账单中有一半以上的费用来自 us-east-1 区域?我在那里根本没有存储桶!原来,未指定区域的 S3 请求默认发送至 us-east-1,然后根据具体情况进行重定向。而你还需要支付重定向请求的费用。

安全层面的问题

现在我明白了,为什么我的 S3 存储桶会收到数以百万计的请求,以及为什么我最终面临一笔巨额的 S3 账单。当时,我还想到了一个点子。如果所有这些配置错误的系统,都试图将数据备份到我的 S3 存储桶,如果我将其设置为 “公共写入” 会怎样?我将存储桶公开不到 30 秒,就在这短短时间内收集了超过 10GB 的数据。当然,我不能透露这些数据的主人是谁。但这种看似无害的配置失误,竟可能导致严重的数据泄漏,令人震惊!

我从中学到了什么?

第一课:任何知道你S3存储桶名的人,都可以随意打爆你的AWS账单

除了删除存储桶,你几乎无法防止这种情况发生。当直接通过 S3 API 访问时,你无法使用 CloudFront 或 WAF 来保护你的存储桶。标准的 S3 PUT 请求费用仅为每一千请求 0.005 美元,但一台机器每秒就可以轻松发起数千次请求。

第二课:为你的存储桶名称添加随机后缀可以提高安全性。

这种做法可以降低因配置错误或有意攻击而受到的威胁。至少应避免使用简短和常见的名称作为 S3 存储桶的名称。

第三课:执行大量 S3 请求时,确保明确指定 AWS 区域。

这样你可以避免因 API 重定向而产生额外的费用。

尾声

-

我向这个脆弱开源工具的维护者报告了我的发现。他们迅速修正了默认配置,不过已部署的实例无法修复了。

-

我还向 AWS 安全团队报告了此事。我希望他们可能会限制这个不幸的 S3 存储桶名称,但他们不愿意处理第三方产品的配置不当问题。

-

我向在我的存储桶中发现数据的两家公司报告了此问题。他们没有回复我的邮件,可能将其视为垃圾邮件。

-

AWS 最终同意取消了我的 S3 账单,但强调了这是一个例外情况。

感谢你花时间阅读我的文章。希望它能帮你避免意外的 AWS 费用!

下云老冯评论

公有云上的黑暗森林法则出现了:只要你的 S3 对象存储桶名暴露,任何人都有能力刷爆你的 AWS 账单。只需要知道你的存储桶名称,别人不需要知道你的 ID,也不需要通过认证,直接强行 PUT / GET 你的桶,不管成败,都会被收取费用。

这引入了一种类似于 DDoS 的新攻击类型 —— DoCC (Denial of Cost Control),刷爆账单攻击。

在一些群里,AWS 的售后,与工程师给出了他们的解释 —— “AWS设计收费策略有一个原则:就是如果AWS产生了成本(用户本身有一定原因),就一定要向用户收费”,AWS 销售给出的解释则是这个客户不会使用 AWS ,应该参加 AWS SA 考试培训后再上岗。

但从常理来看,这完全不合理 —— 由别人发起的,连 Auth 都没有通过的请求,为什么要向用户收费?而用户除了选择不用这一个选项之外,似乎压根没有办法防止这种情况发生 —— 这是一个设计上的漏洞,也是一个安全上的漏洞。

但是在 AWS 看来,该特性被视为 Feature,而不是安全漏洞或者 Bug,可以用来咔咔爆用户的金币。同样的设计逻辑贯穿在 AWS 的产品设计逻辑中,例如,Route53 查询没有解析的域名也会收费,所以知道域名是 AWS 解析的话,也可以进行 DDoS。

我并不确定本土云厂商是否使用了同样的处理逻辑。但他们基本都是直接或间接借鉴 AWS 的。所以有比较大的概率,也会是一样的情况。

作为信安专业出身,我很清楚业界的一些玩法,比如打DDoS 卖高防 —— 来自某群友的截图

在 《Cloudflare圆桌访谈》中,我也提到过安全问题,比如监守自盗刷流量的问题。

最后我想说一下安全,我认为安全才是 Cloudflare 核心价值主张。为什么这么说,还是举一个例子。有一个独立站长朋友用了某个头部本土云 CDN ,最近两年有莫名其妙的超多流量。一个月海外几个T流量,一个IP 过来吃个10G 流量然后消失掉。后来换了个地方提供服务,这些奇怪的流量就没了。运行成本变为本来的 1/10,这就有点让人细思恐极 —— 是不是这些云厂商坚守自盗,在盗刷流量?或者是是云厂商本身(或其附属)组织在有意攻击,从而推广他们的高防 IP 服务? 这种例子其实我是有所耳闻的。

因此,在使用本土云 CDN 的时候,很多用户会有一些天然的顾虑与不信任。但 Cloudflare 就解决了这个问题 —— 第一,流量不要钱,按请求量计费,所以刷流量没意义;第二,它替你抗 DDoS,即使是 Free Plan 也有这个服务,CF不能砸自己的招牌 —— 这解决了一个用户痛点,就是把账单刷爆的问题 —— 我确实有见过这样的案例,公有云账号里有几万块钱,一下子给盗刷干净了。用 Cloudflare 就彻底没有这个问题,我可以确保账单有高度的确定性 —— 如果不是确定为零的话。

Well,总的来说,账单被刷爆,也算一种公有云上独有的安全风险了 —— 希望云用户保持谨慎小心,一点小失误,也许就会在账单上立即产生难以挽回的损失。

Cloudflare圆桌访谈与问答录

上周我作为圆桌嘉宾受邀参加了 Cloudflare 在深圳举办的 Immerse 大会,在 Cloudflare Immerse 鸡尾酒会和晚宴上,我与 Cloudflare 亚太区CMO,大中华区技术总监,以及一线工程师深入交流探讨了许多关于 Cloudflare 的问题。

本文是圆桌会谈纪要与问答采访的摘录,从用户视角点评 Cloudflare 请参考本号前一篇文章:《吊打公有云的赛博佛祖 Cloudflare》

第一部分:圆桌访谈

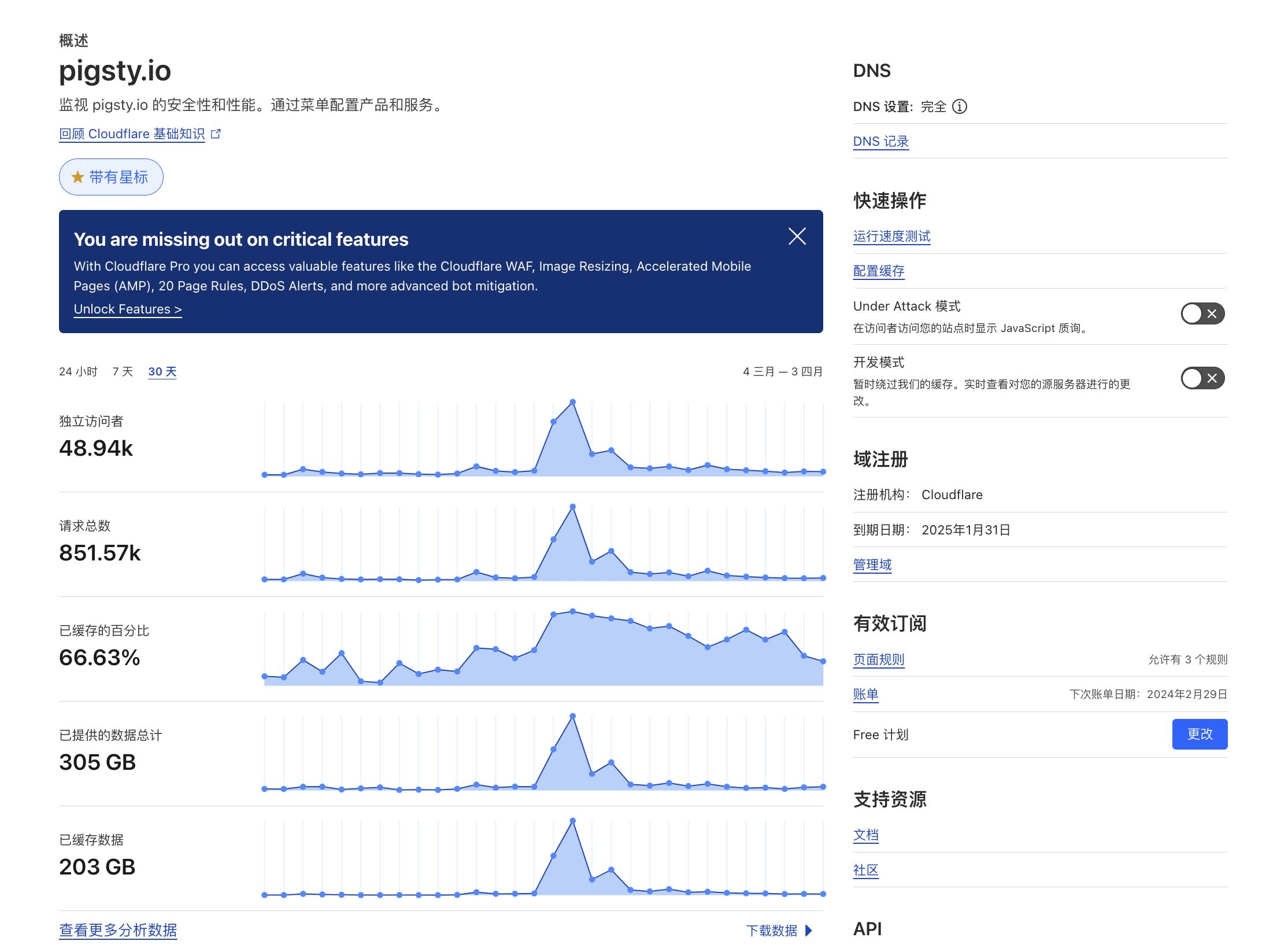

您与 Cloudflare 如何结缘?

我是冯若航,现在做 PostgreSQL 数据库发行版 Pigsty,运营着一个开源社区,同时作为一个数据库 & 云计算领域的 KOL,在国内宣扬下云理念。在 Cloudflare 的场子里讲下云挺有意思,但我并不是来踢馆的。

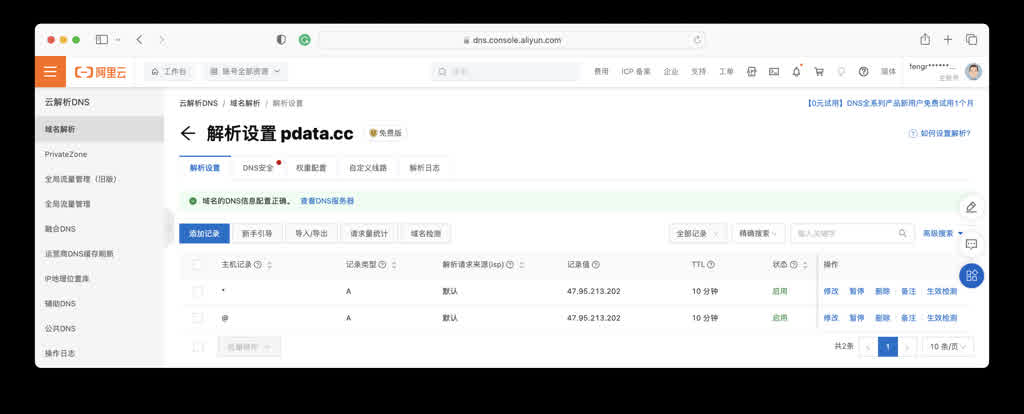

事实上我与 Cloudflare 还有好几层缘分,所以今天很高兴在这里和大家分享一下我的三重视角 :作为一个独立开发者终端用户,作为一个开源社区的成员与运营者,作为一个公有云计算反叛军,我是如何看待 Cloudflare 的。

作为一个开源软件供应商,我们需要一种稳定可靠的软件分发方式。我们最开始使用了本土的阿里云与腾讯云,在国内的体验尚可,但当我们需要走出海外,面向国外用户时,使用体验确实不尽如人意。我们尝试了 AWS,Package Cloud ,但最终选择了 Cloudflare。包括我们有几个网站也托管到了CF。

作为 PostgreSQL 社区的一员,我们知道 Cloudflare 深度使用了 PostgreSQL 作为底层存储的数据库。并且不同于其他云厂商喜欢将其包装为 RDS 白嫖社区,Cloudflare 一直是杰出的开源社区参与者与建设者。甚至像 Pingora 和 Workerd 这样的核心组件都是开源的。我对此给出高度评价,这是开源软件社区与云厂商共存的典范。

作为下云理念的倡导者,我一直认为传统公有云使用了一种非常不健康的商业模式。所以在中国引领着一场针对公有云的下云运动。我认为 Cloudflare 也许是这场运动中的重要盟友 —— 传统 IDC 开源自建,难以解决 “在线” 的问题,而 Cloudflare 的接入能力,边缘计算能力,都弥补了这一块短板。所以我非常看好这种模式。

您用到了哪些 Cloudflare的服务,打动你的是什么?

我用到了 Cloudflare 的静态网站托管服务 Pages,对象存储服务 R2 和边缘计算 Worker。最打动我的有这么几点:易用性,成本,质量,安全,专业的服务态度,以及这种模式的前景与未来。

首先聊一聊易用性吧,我使用的第一项服务是 Pages。我自己有一个网站,静态 HTML 托管在这里。我把这个网站搬上 Cloudflare 用了多长时间?一个小时!我只是创建了一个新的 GitHub Repo,把静态内容提交上去,然后在 Cloudflare 点点按钮,绑定一个新的子域名,链接到 GitHub Repo,整个网站瞬间就可以被全世界访问,你不需要操心什么高可用,高并发,全球部署,HTTPS 证书,抗 DDoS 之类的问题 —— 这种丝滑的用户体验让我非常舒适,并很乐意在这上面花点钱解锁额外功能。

再来聊一聊成本吧。在独立开发者,个人站长这个圈子里,我们给 Cloudflare 起了一个外号 —— “赛博佛祖”。这主要是因为 Cloudflare 提供了非常慷慨的免费计划。Cloudflare 有着相当独特的商业模式 —— 免流量费,靠安全赚钱。

比如说 R2,我认为这就是专门针对 AWS S3 进行啪啪打脸的。我曾经作为大甲方对各种云服务与自建的成本进行过精算 —— 得出会让普通用户感到震惊的结论。云上的对象存储 / 块存储 比本地自建贵了两个数量级,堪称史诗级杀猪盘。AWS S3 标准档价格 0.023 $/GB·月,而 Cloudflare R2 价格 0.015 $/GB·月,看上去只是便宜了 1/3 。但重要的是流量费全免!这就带来质变了!

比如,我自己那个网站也还算有点流量,最近一个月跑了 300GB ,没收钱,我有一个朋友每月跑掉 3TB 流量,没收钱;然后我在推特上看到有个朋友 Free Plan 跑黄网图床,每月 1PB 流量,这确实挺过分了,于是 CF 联系了他 —— 建议购买企业版,也仅仅是 “建议”。

接下来我们来聊一聊质量。我讲下云的一个前提是:各家公有云厂商卖的是没有不可替代性的大路货标准品。比如那种在老罗直播间中,夹在吸尘器与牙膏中间卖的云服务器。但是 Cloudflare 确实带来了一些不一样的东西。

举个例子,Cloudflare Worker 确实很有意思,比起传统云上笨拙的开发部署体验来说,CF worker 真正做到了让开发者爽翻天的 Serverless 效果。开发者不需要操心什么数据库连接串,AccessPoint,AK/SK密钥管理,用什么数据库驱动,怎么管理本地日志,怎么搭建 CI/CD 流程这些繁琐问题,最多在环境变量里面指定一下存储桶名称这类简单信息就够了。写好 Worker 胶水代码实现业务逻辑,命令行一把梭就可以完成全球部署上线。

与之对应的是传统公有云厂商提供的各种所谓 Serverless 服务,比如 RDS Serverless,就像一个恶劣的笑话,单纯是一种计费模式上的区别 —— 既不能 Scale to Zero,也没什么易用性上的改善 —— 你依然要在控制台去点点点创建一套 RDS,而不是像 Neon 这种真 Serverless 一样用连接串连上去就能直接迅速拉起一个新实例。更重要的是,稍微有个几十上百的QPS,相比包年包月的账单就要爆炸上天了 —— 这种平庸的 “Serverless” 确实污染了这个词语的本意。

最后我想说一下安全,我认为安全才是 Cloudflare 核心价值主张。为什么这么说,还是举一个例子。有一个独立站长朋友用了某个头部本土云 CDN ,最近两年有莫名其妙的超多流量。一个月海外几个T流量,一个IP 过来吃个10G 流量然后消失掉。后来换了个地方提供服务,这些奇怪的流量就没了。运行成本变为本来的 1/10,这就有点让人细思恐极 —— 是不是这些云厂商坚守自盗,在盗刷流量?或者是是云厂商本身(或其附属)组织在有意攻击,从而推广他们的高防 IP 服务?这种例子其实我是有所耳闻的。

因此,在使用本土云 CDN 的时候,很多用户会有一些天然的顾虑与不信任。但 Cloudflare 就解决了这个问题 —— 第一,流量不要钱,按请求量计费,所以刷流量没意义;第二,它替你抗 DDoS,即使是 Free Plan 也有这个服务,CF不能砸自己的招牌 —— 这解决了一个用户痛点,就是把账单刷爆的问题 —— 我确实有见过这样的案例,公有云账号里有几万块钱,一下子给盗刷干净了。用 Cloudflare 就彻底没有这个问题,我可以确保账单有高度的确定性 —— 如果不是确定为零的话。

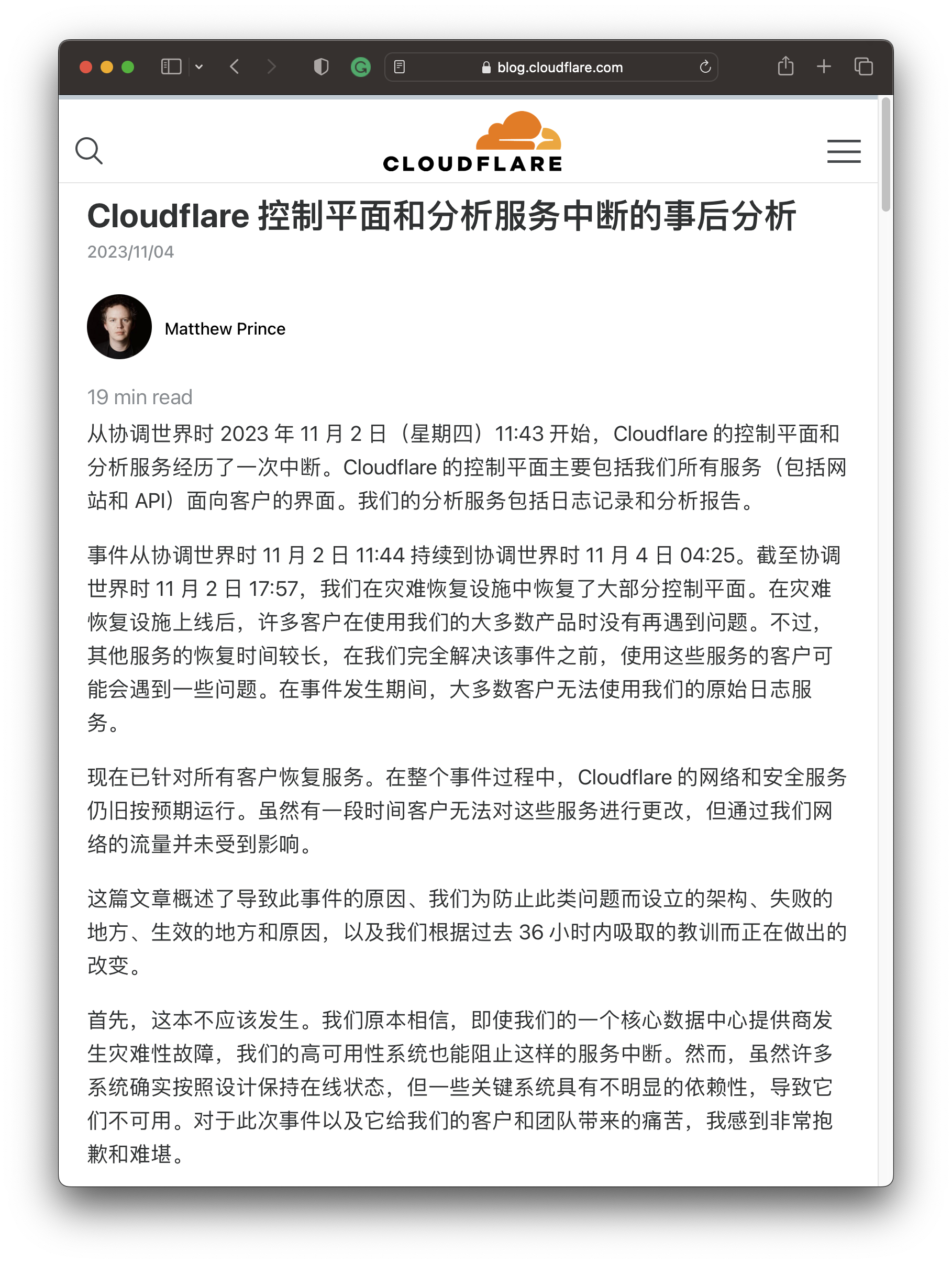

专业的服务态度指的是?

本土云厂商在面对大故障时,体现出相当业余的专业素养与服务态度,这一点我专门写了好几篇文章进行批判。说起来特别赶巧,去年双十一,阿里云出了一个史诗级全球大故障。Cloudflare 也出了个机房断电故障。一周前 4.8 号,腾讯云也出了个翻版全球故障,Cloudflare 也恰好在同一天又出了 Code Orange 机房断电故障。作为一个工程师,我理解故障是难以避免的 —— 但出现故障后,体现出来的专业素养和服务态度是天差地别的。

首先,阿里云和腾讯云的故障都是人为操作失误/糟糕的软件工程/架构设计导致的,而 Cloudflare 的问题是机房断电,某种程度上算不可抗力的天灾。其次,在处理态度上,阿里云到现在都没发布一个像样的故障复盘,我替它做了一个非官方故障复盘;至于腾讯云,我干脆连故障通告都替他们发了 —— 比官网还快10分钟。腾讯云倒是在前天发布了一个故障复盘,但是也比较敷衍,专业素养不足,这种复盘报告拿到 Apple 和 Google 都属于不合格的 Post-Mortem ……

Cloudflare 则恰恰相反,在故障的当天 CEO 亲自出来撰写故障复盘,细节翔实,态度诚恳,你见过本土云厂商这么做吗?没有!

您对 Cloudflare 未来有什么期待?

我主张下云理念,是针对中型以上规模的企业。像我之前任职的探探,以及美国 DHH 37 Signal 这样的。但是 IDC 自建有个问题,接入的问题,在线的问题 —— 你可以自建KVM,K8S,RDS,甚至是对象存储。但你不可能自建 CDN 吧?Cloudflare 就很好地弥补了这个缺憾。

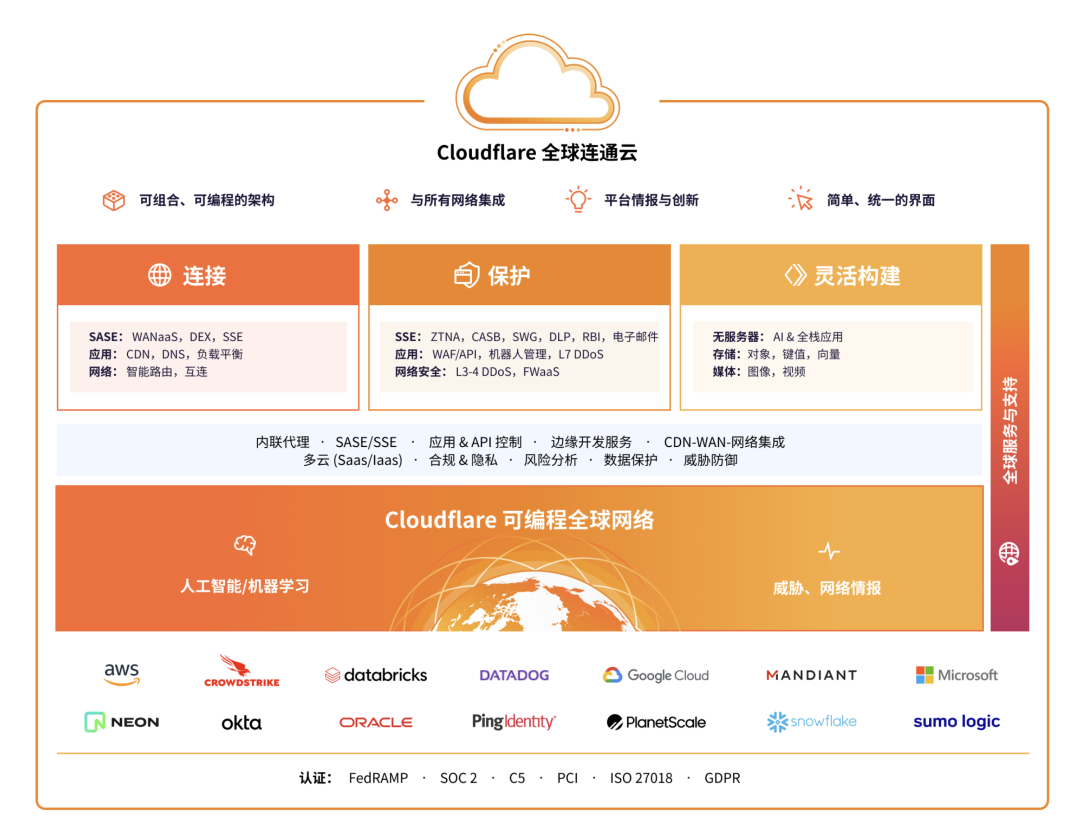

我认为,Cloudflare 是下云运动的坚实盟友。Cloudflare 并没有提供传统公有云上的那些弹性计算、存储、K8S、RDS 服务。但幸运地是,Cloudflare 可以与公有云 / IDC 良好地配合协同 —— 从某种意义上来说,因为 Cloudflare 成功解决了 “在线” 的问题,这使得传统数据库中心 IDC 2.0 也同样可以拥有比肩甚至超越公有云的 “在线” 能力,两者配合,在事实上摧毁了一些公有云的护城河,并挤压了传统公有云厂商的生存空间。

我非常看好 Cloudflare 这种模式,实际上,这种丝滑的体验才配称的上是云,配享太庙,可以心安理得吃高科技行业的高毛利。实际上,我认为 Cloudflare 应该主动出击,去与传统公有云抢夺云计算的定义权 —— 我希望未来人们说起云的时候,指的应该是 Cloudflare 这种慷慨体面的连接云,而不是传统公有杀猪云。

第二部分:互动问答

在 Cloudflare Immerse 鸡尾酒会和晚宴上,我与 Cloudflare 亚太区CMO,大中华区技术总监,以及一线工程师深入交流探讨了许多关于 Cloudflare 的问题,收获颇丰,这里给出了一些适合公开的问题与答案。因为我也不会录音,因此这里的文字属于我的事后回忆与阅读理解,仅供参考,不代表 CF 官方观点。

Cloudflare 如何定位自己,和 AWS 这种传统公有云是什么关系?

其实 Cloudflare 不是传统公有云,而是一种 SaaS。我们现在管自己叫做 “Connectivity Cloud”(翻译为:全球联通云),旨在为所有事物之间建立连接,与所有网络相集成;内置情报防范安全风险,并提供统一、简化的界面以恢复可见性与控制。从传统的视角来看,我们做的像是安全、CDN与边缘计算的一个整合。AWS 的 CloudFront 算是我们的竞品。

Cloudflare 为什么提供了如此慷慨的免费计划,到底靠什么赚钱?

Cloudflare 的免费服务就像 Costco 的5美元烤鸡一样。实际上除了免费套餐,那个 Workers 和 Pages 的付费计划也是每月五美元,跟白送的一样,Cloudflare 也不是从这些用户身上赚钱的。

Cloudflare 的核心商业模式是安全。相比于只服务付费客户,更多的免费用户可以带来更深入的数据洞察 —— 也就能够发现更为广泛的攻击与威胁情报,为付费用户提供更好的安全服务。

我们的 Free 计划有何优势?

在 Cloudflare,我们的使命是帮助建立更好的互联网。我们认为 web 应该是开放和免费的,所有网站和 web 用户,无论多小,都应该是安全、稳固、快速的。由于种种原因,Cloudflare 始终都提供慷慨的免费计划。

我们努力将网络运营成本降至最低,从而能在我们的 Free 计划中提供巨大价值。最重要的是,通过保护更多网站,我们能就针对我们网络的各类攻击获得更完善的数据,从而能为所有网站提供更佳的安全和保护。

作为隐私第一的公司,我们绝不出售您的数据。事实上,Cloudflare 承认个人数据隐私是一项基本人权,并已采取一系列措施来证明我们对隐私的承诺。

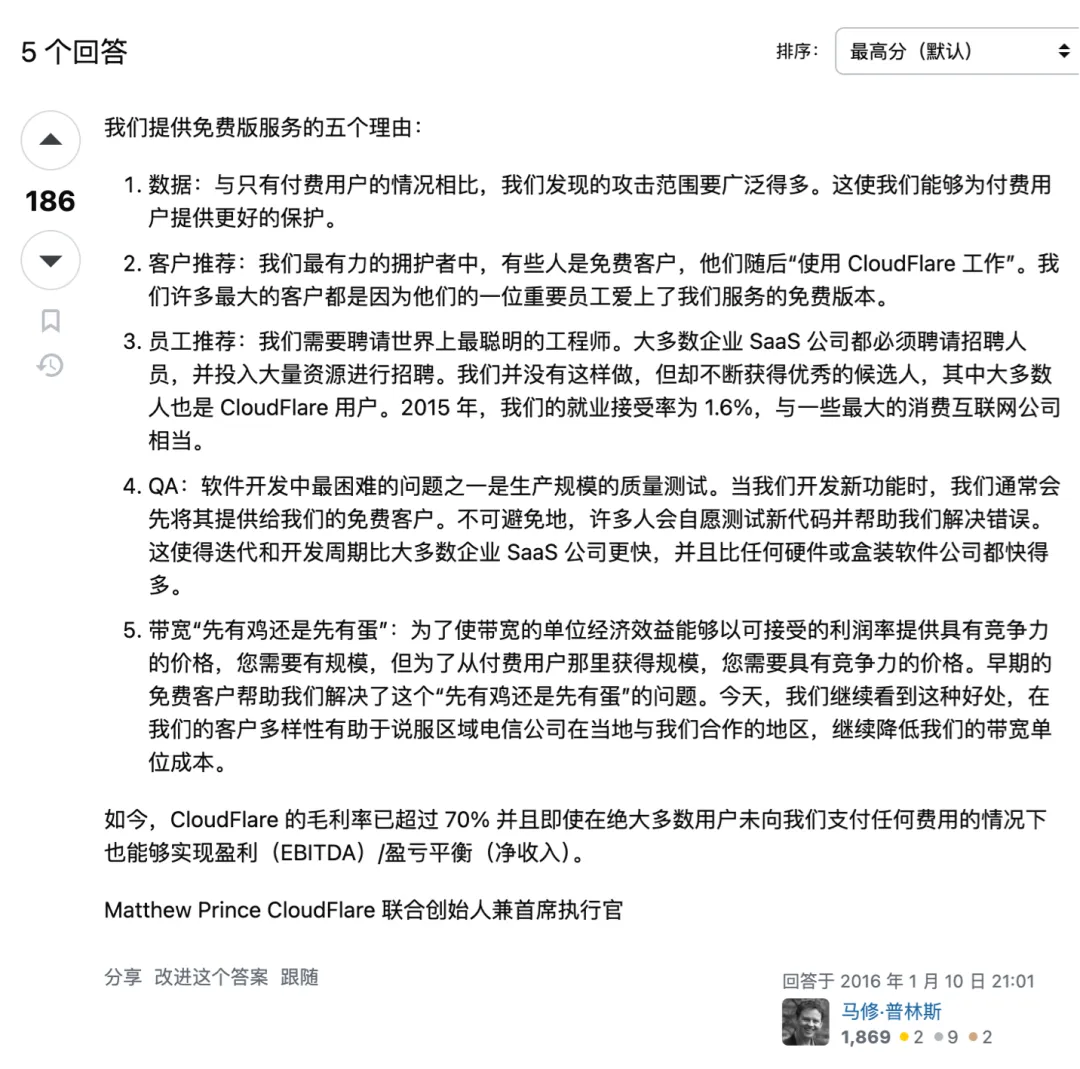

实际上 Cloudflare 的 CEO 在 StackOverflow 亲自对这个问题作出过回答:

Five reasons we offer a free version of the service and always will:

- Data: we see a much broader range of attacks than we would if we only had our paid users. This allows us to offer better protection to our paid users.

- Customer Referrals: some of our most powerful advocates are free customers who then “take CloudFlare to work.” Many of our largest customers came because a critical employee of theirs fell in love with the free version of our service.

- Employee Referrals: we need to hire some of the smartest engineers in the world. Most enterprise SaaS companies have to hire recruiters and spend significant resources on hiring. We don’t but get a constant stream of great candidates, most of whom are also CloudFlare users. In 2015, our employment acceptance rate was 1.6%, on par with some of the largest consumer Internet companies.

- QA: one of the hardest problems in software development is quality testing at production scale. When we develop a new feature we often offer it to our free customers first. Inevitably many volunteer to test the new code and help us work out the bugs. That allows an iteration and development cycle that is faster than most enterprise SaaS companies and a MUCH faster than any hardware or boxed software company.

- Bandwidth Chicken & Egg: in order to get the unit economics around bandwidth to offer competitive pricing at acceptable margins you need to have scale, but in order to get scale from paying users you need competitive pricing. Free customers early on helped us solve this chicken & egg problem. Today we continue to see that benefit in regions where our diversity of customers helps convince regional telecoms to peer with us locally, continuing to drive down our unit costs of bandwidth.

Today CloudFlare has 70%+ gross margins and is profitable (EBITDA)/break even (Net Income) even with the vast majority of our users paying us nothing.

Matthew Prince Co-founder & CEO, CloudFlare

创始人的情怀与愿景其实挺重要的 …… ,Cloudflare 早期的许多服务一直都是免费提供的,第一个付费服务其实是 SSL 证书,现在也不要钱了。总的来说,就是靠企业级客户为安全付费。